- Find Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- How to search for null values in fields when the f...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am working on creating a monitoring dashboard that will alert us when one of our customers databases stop sending event data that we need for reporting. However, I am struggling to filter my results down to those customers that are not sending data.

Here's my search:

| inputlookup HealthcareMasterList.csv

| search ITV=1 AND ITV_INSTALLED>1 AND MarinaVersion IN (15*,16*,17*,18*)

| table propertyId FullHospitalName MarinaVersion

| append

[ search index=hceventmonitoring

[| inputlookup HealthcareMasterList.csv

| search ITV=1 AND ITV_INSTALLED>1 AND MarinaVersion IN (15*,16*,17*,18*)

| table propertyId

| format]

| dedup _raw

| stats dc(monitorEventName) as TotalEventTypes by eventDate propertyId

| eval {eventDate}=TotalEventTypes

| fields - eventDate TotalEventTypes

| stats values(*) as * by propertyId]

| selfjoin keepsingle=t max=0 propertyId

The first part of the search is establishing a list of which customers I should be receiving event data from so they show up on the results even if there is no event data in Splunk. The second part is determining how my distinct event types a customer is sending each day.

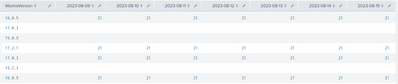

Below is a screenshot of a portion of my results:

What I need to have happen next is to filter down to any rows with NULL in any of the displayed dates. I tried to use | where isnull(2023*) but then found out you can't have wildcards in field names.

If I filter down to the nulls before doing |eval {date}=TotalEventTypes then I don't have any dates to work with as that field is blank for those rows (since they aren't sending event data, I don't have any dates from the event data to display).

I've seen other posts that suggest using foreach but I struggle to see how I could use that here since my field names are changing each day and I need the actual date to display as the field name when I view this in the dashboard. If I filter out the nulls first is there a way to dynamically create a field with the dates of the last 7 days and then I can add the |eval {date}=TotalEventTypes and then have those dates as field names?

Any thoughts or suggestions are highly appreciated! I've been racking my brain for almost two days trying to figure this out. LOL.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

TLDR;

Add this to the end - it sums all the fields in the table and then filters for Total=0

| addtotals *

| where Total=0

| fields - TotalLong answer:

This type of "proving absence" is generally done with a construct the other way round to the way you have it.

You are saying

- give me source of truth - (inputlookup)

- add actual events (search)

- join the sets together (stats)

The way you should approach it is to

- Search data wanted (search)

- Append source of truth (inputlookup)

- Join sets together

The reason for this is that append and subsearches have limitations, so it's always good to take the primary data set first, and this way round will perform faster, so your search could look like

index=hceventmonitoring

[| inputlookup HealthcareMasterList.csv where ITV=1 AND ITV_INSTALLED>1 AND MarinaVersion IN (15*,16*,17*,18*)

| fields propertyId ]

``` The above finds all events for your wanted master list ```

``` Aggregate the results ```

| stats dc(monitorEventName) as TotalEventTypes by eventDate propertyId

``` Now append the source of truth - note these will have a null value for TotalEventTypes ```

| inputlookup append=t HealthcareMasterList.csv where ITV=1 AND ITV_INSTALLED>1 AND MarinaVersion IN (15*,16*,17*,18*)

``` Now join the two sets of data together - this collapses the 3 fields from the first stats with the wanted additional fields from the lookup ```

| fields propertyId FullHospitalName MarinaVersion TotalEventTypes eventDate

| stats values(*) as * by propertyId

``` Now make the new columns ```

| eval {eventDate}=coalesce(TotalEventTypes, 0)

| fields - eventDate TotalEventTypes

| stats values(*) as * by propertyId

``` Create a total field for the total event types for the marina ```

| addtotals *

| where Total=0

| fields - Totalforeach also could be used to sum the totals, e.g.

| eval Total=0

| foreach 2023* [ eval Total=Total+`<<FIELD>>' ]

| where Total=0

| fields - Totalbut addtotals will do the same

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

TLDR;

Add this to the end - it sums all the fields in the table and then filters for Total=0

| addtotals *

| where Total=0

| fields - TotalLong answer:

This type of "proving absence" is generally done with a construct the other way round to the way you have it.

You are saying

- give me source of truth - (inputlookup)

- add actual events (search)

- join the sets together (stats)

The way you should approach it is to

- Search data wanted (search)

- Append source of truth (inputlookup)

- Join sets together

The reason for this is that append and subsearches have limitations, so it's always good to take the primary data set first, and this way round will perform faster, so your search could look like

index=hceventmonitoring

[| inputlookup HealthcareMasterList.csv where ITV=1 AND ITV_INSTALLED>1 AND MarinaVersion IN (15*,16*,17*,18*)

| fields propertyId ]

``` The above finds all events for your wanted master list ```

``` Aggregate the results ```

| stats dc(monitorEventName) as TotalEventTypes by eventDate propertyId

``` Now append the source of truth - note these will have a null value for TotalEventTypes ```

| inputlookup append=t HealthcareMasterList.csv where ITV=1 AND ITV_INSTALLED>1 AND MarinaVersion IN (15*,16*,17*,18*)

``` Now join the two sets of data together - this collapses the 3 fields from the first stats with the wanted additional fields from the lookup ```

| fields propertyId FullHospitalName MarinaVersion TotalEventTypes eventDate

| stats values(*) as * by propertyId

``` Now make the new columns ```

| eval {eventDate}=coalesce(TotalEventTypes, 0)

| fields - eventDate TotalEventTypes

| stats values(*) as * by propertyId

``` Create a total field for the total event types for the marina ```

| addtotals *

| where Total=0

| fields - Totalforeach also could be used to sum the totals, e.g.

| eval Total=0

| foreach 2023* [ eval Total=Total+`<<FIELD>>' ]

| where Total=0

| fields - Totalbut addtotals will do the same

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you! I was able to work this out and have gotten the results I was looking for. I did change up the order of my search per your suggestion and thank you for explaining the why. I've been working with Splunk for about 3 years now but I'm mostly self taught so additional info is always welcome.

I was eager to try your different way of writing this search but I've stayed with using the selfjoin to join the two sources. The line |stats values(*) as * by propertyId wouldn't maintain a direct relationship with the TotalEventTypes and eventDate which is crucial to this dashboard. Also when I ran the line |eval {eventDate}=coalesce(TotalEventTypes,0), it left all the dates as one column when I want to display each date as it's own separate column so we can see very specific dates when the data isn't being returned.

The addtotals was the perfect solution! However I couldn't use 0 as a criteria because a site could be sending data for the first 6 days and then stop on the 7th (which is what we are trying to catch). However the index was built using another event we have that reports back from the site how many individual events we should have for each event type even if that count is 0 so I know I should expect a minimum of 21 of those every day so instead I used Total<147.

Thank you again for your help. Once again Splunk community has been a life saver!

P.S. I noticed you used "fields" in the places where I used "table". Is there a general rule I should consider when deciding which to use? Is there a benefit to using fields over table?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The answer to the question of fields vs table has probably changed over time - the Splunk optimiser will sometimes optimise a table statement to a fields statement,.

However as a 'rule', it's worth familiarising yourself with the command types

https://docs.splunk.com/Documentation/Splunk/9.1.0/SearchReference/Commandsbytype#Streaming_commands

and in a clustered environment, where you have one or more indexers and a search head that searches those indexers, the type of command you want to have as much of as possible BEFORE any other types are the Distributable Streaming commands

You will see that fields is one of these, so when you use the fields statement, the operation of this command runs on the indexer, so will keep the parallelisation of multiple indexers.

If you use the table command, you will see that this is a transforming command. Transforming commands cannot run on the indexers, so as soon as you use a transforming command in your search pipeline, all the data from all the indexers will have to be sent to the search head, where the pipeline will continue.

See what runs where in this table

https://docs.splunk.com/Documentation/Splunk/9.1.0/Search/Typesofcommands#Processing_attributes

So, take advantage of this ability to keep the data at the indexers for as long as possible, as data at the search head will never go back to the indexers.