
How to limit the triggering of a Splunk alert according to a time range to avoid having several similar results?

elmadi_fares

Loves-to-Learn Everything

09-05-2022

08:10 AM

I have a problem with an alert on a Splunk search that runs on a cron schedule, as follows:

Search query:

index=pdx_pfmseur0_fxs_event sourcetype=st_xfmseur0_fxs_event

| eval

trackingid=mvindex('DOC.doc_keylist.doc_key.key_val',mvfind('DOC.doc_keylist.doc_key.key_name', "MCH-TrackingID"))

| rename gxsevent.gpstatusruletracking.eventtype as events_found

| rename file.receiveraddress as receiveraddress

| rename file.aprf as AJRF

| table trackingid events_found source receiveraddress AJRF

| stats values(trackingid) as trackingid, values(events_found) as events_found, values(receiveraddress) as receiveraddress, values(AJRF) as AJRF by source

| stats values(events_found) as events_found, values(receiveraddress) as receiveraddress, values(AJRF) as AJRF by trackingid

| search AJRF=ORDERS2 OR AJRF=ORDERS1 | stats count as total | appendcols [search index= idx_pk8seur2_logs sourcetype="kube:container:8wj-order-service" processType=avro-order-create JPABS | stats dc(nativeId) as rush ] | appendcols [search index= idx_pk8seur2_logs sourcetype="kube:container:9wj-order-avro-consumer" flowName=9wj-order-avro-consumer customer="AB" (message="HBKK" OR message="MANU") | stats count as hbkk] | eval gap = total-hbkk-rush | table gap, total, rush

| eval status=if(gap>0, "OK", "KO")

| eval ressource="FME-FME-R:AB"

| eval service_offring="FME-FME-R"

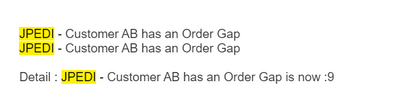

| eval description="JPEDI - Customer AB has an Order Gap \n \nDetail : JPEDI - Customer AB has an Order Gap is now :" + gap + "\n\n\n\n;support_group=AL-XX-MAI-L2;KB=KB0078557"

| table ressource description gap total rush service_offring

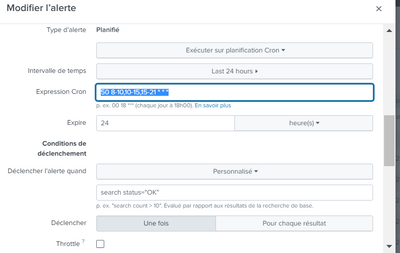

Cron schedule configured on this alert:

I received three alerts containing the same result, at the times set by the cron schedule:

17:50, 18:50 and 21:50, all with the same result of gap=9

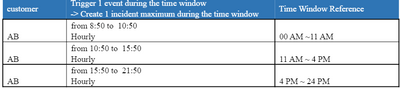

Is there a way to limit the alert so that it triggers only once in each of these time intervals?

from 08:50 to 10:50

from 10:50 to 15:50

from 15:50 to 21:50



ITWhisperer

SplunkTrust

09-05-2022

08:31 AM

Your cron expression determines when the report is executed, not the period it covers. In your scenario, the report runs at 50 minutes past the hour for every hour from 8am to 9pm, i.e. 8:50 to 21:50. You should then look at throttling the alert. You may need to have 3 reports, one for each period, so that a new throttle kicks in for each period.
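As a rough sketch of the three-report idea, the fragment below shows how it might look in savedsearches.conf. The stanza names are hypothetical, the cron hours are one possible way to split 8:50 to 21:50 into the three intervals from the question, and the throttle periods are assumptions chosen so that one trigger suppresses the remaining runs of the same report. The search itself is left as a placeholder comment and would be the same query in all three.

```ini
# Hypothetical stanza names; each report uses the same search as the question.

[Order Gap AB - morning]
# search = <the search from the question, unchanged>
enableSched = 1
# Runs at 08:50 and 09:50
cron_schedule = 50 8-9 * * *
# Throttle: after one trigger, suppress further triggers for 2 hours
alert.suppress = 1
alert.suppress.period = 2h

[Order Gap AB - midday]
# search = <the search from the question, unchanged>
enableSched = 1
# Runs at 10:50, 11:50, 12:50, 13:50 and 14:50
cron_schedule = 50 10-14 * * *
alert.suppress = 1
alert.suppress.period = 5h

[Order Gap AB - evening]
# search = <the search from the question, unchanged>
enableSched = 1
# Runs hourly from 15:50 to 21:50
cron_schedule = 50 15-21 * * *
alert.suppress = 1
alert.suppress.period = 7h
```

Because each report keeps its own throttle state, a trigger in one interval does not silence the next interval. The same settings can also be set from the alert's edit dialog (the Throttle checkbox and its "Suppress triggering for" value) instead of editing the .conf file directly.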


elmadi_fares

Loves-to-Learn Everything

09-06-2022

01:39 PM

Yes, I need to have 3 reports.


elmadi_fares

Loves-to-Learn Everything

09-06-2022

01:47 AM

I believe that, to reduce how often the alert triggers, I have to configure a period during which the results are deleted?
