How to find incremental or constant rate points of last 24 hours?

Hi

I have a challenge and would like to know how to solve it with Splunk, math, statistics, or any other approach.

Here is a sample of the log:

2022-10-21 13:19:23:120 10

2022-10-21 13:19:23:120 20

2022-10-21 13:19:23:120 9999

2022-10-21 13:19:23:120 10

2022-10-21 13:19:23:120 0

2022-10-21 13:19:23:120 40

FYI1: I don't want to summarize or average the values.

FYI2: Each second contains thousands of data points. I need to find abnormal behavior in the last 24 hours.

FYI3: I need an optimized solution because there is a lot of data.

FYI4: I don't want to set a threshold (e.g. 100) and keep only the values above it, because an abnormal situation might occur below that value.

FYI5: There is no fixed number of values that defines an abnormality, e.g. I can't simply check 10 values and flag them as abnormal if they increase.

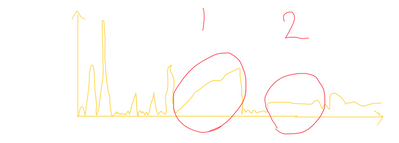

How can I find (1) incremental or (2) constant-rate points in the last 24 hours? (Both are shown on the chart.)

There is no need to show them on a chart because there are so many data points. Just list them.

Any ideas?

Thanks

Mathematically, this is a best-fit problem. SPL itself doesn't provide a library for this, so it is probably easier to write a custom command in a language that you are familiar with. (Splunk has Python bindings, and there are other options, including an R plugin. Both Python and R have zillions of libraries for such tasks.)

Alternatively, take a look at Machine Learning Toolkit. Many of its algorithms apply best-fit. Their goal is to discern deviation from best-fit. Maybe you can adapt them to perform best-fit. (Logically, low deviation means a fit.)

Hope this helps.

@yuanliu thanks,

1. Do you have any suggestions about the part below?

“Alternatively, take a look at Machine Learning Toolkit. Many of its algorithms apply best-fit.”

2. Would you please explain this part in more detail, especially “low deviation means a fit”?

“Their goal is to discern deviation from best-fit. Maybe you can adapt them to perform best-fit. (Logically, low deviation means a fit.)”

3. How about performance? Which one works faster on this data?

Any references or further description would be appreciated.

Thanks,

MLTK (2890) is a free "application" on Splunkbase. It comes with various basic fitting algorithms. You'll have to look at them individually to see if any fits your needs. The basic idea of the fitting algorithms is to calculate a best fit for some past period or periods, then make a "prediction" into a "future" relative to the sample period, typically now; if current data deviates from the prediction, that's an anomaly. "Predictions" are expressed by the parameters of the fitting curve (yes, linear fitting is one of them).
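The fit-then-predict idea can be sketched in plain Python. This is only an illustration and not MLTK code: the names `fit_line` and `flag_deviations`, the use of an event's sequence number as the x value, the sample data, and the `tolerance` parameter are all assumptions made for the example.

```python
def fit_line(xs, ys):
    """Ordinary least-squares line through the points; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def flag_deviations(train, current, tolerance):
    """Fit on `train`, extrapolate over `current`, return the points off the line.

    Both arguments are lists of (x, y) pairs. `tolerance` is a hypothetical
    bound on the distance from the predicted value, not a bound on y itself.
    """
    slope, intercept = fit_line([x for x, _ in train], [y for _, y in train])
    return [(x, y) for x, y in current
            if abs(y - (slope * x + intercept)) > tolerance]


# Hypothetical data: a steady climb of 10 per step, then one spike.
past = [(0, 10), (1, 20), (2, 30), (3, 40)]
now = [(4, 50), (5, 9999), (6, 70)]
anomalies = flag_deviations(past, now, tolerance=5)
print(anomalies)  # [(5, 9999)]
```

Note that the tolerance here applies to the distance from the fitted line rather than to the raw value, so a small value can still be flagged if it breaks the trend.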

Obviously I have not done the research myself. But generally, if you have your eye on a given interval, you can set it as the sampling period and apply, or "train" in ML lingo, linear fitting. MLTK will give you stats such as deviation. "Constant rate" means linear: if the deviation is zero, your data is linear in the sampling period. If the slope is also zero, that's a flat period. MLTK also allows you to manually adjust parameters so you can do other experiments as well.

"Training" is not designed to be dynamic, so I don't know how easy it is to incorporate the training process into your real-world use case. In addition to MLTK, Splunkbase also offers MLTK Algorithms on GitHub (4403).
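To make the slope and deviation reasoning concrete, here is a rough Python sketch that fits a line to each fixed-size window and labels it. It does not use MLTK. The function names, the non-overlapping window layout, the sample series, and the `eps` cutoff that stands in for "zero" are assumptions for illustration; real data would need a cutoff scaled to its noise, and real segments will not line up neatly with window boundaries.

```python
from math import sqrt


def slope_and_deviation(ys):
    """Fit a least-squares line to ys against their positions 0..n-1.

    Returns (slope, rms_deviation), where rms_deviation is the root-mean-square
    distance of the points from the fitted line.
    """
    n = len(ys)
    mean_x = (n - 1) / 2
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    sq_err = sum((y - (slope * x + intercept)) ** 2 for x, y in enumerate(ys))
    return slope, sqrt(sq_err / n)


def classify_windows(values, window, eps=1e-9):
    """Label each non-overlapping window as flat, constant rate, or irregular."""
    if window < 2:
        raise ValueError("a line fit needs at least two points per window")
    labels = []
    for start in range(0, len(values) - window + 1, window):
        slope, deviation = slope_and_deviation(values[start:start + window])
        if deviation > eps:
            label = "irregular"       # points do not sit on a line
        elif abs(slope) <= eps:
            label = "flat"            # linear with zero slope
        else:
            label = "constant rate"   # linear with non-zero slope
        labels.append((start, label))
    return labels


# Hypothetical series: a flat stretch, a steady climb, then scattered values.
series = [5, 5, 5, 5, 10, 20, 30, 40, 10, 9999, 0, 40]
result = classify_windows(series, window=4)
print(result)  # [(0, 'flat'), (4, 'constant rate'), (8, 'irregular')]
```

The output is a plain list of window start positions and labels, which matches the request to list the points rather than chart them.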

This app is based on the Splunk GitHub for Machine Learning Toolkit open source repo initiative to encourage building a community around sharing and reusing machine learning algorithms. Custom algorithms using Machine Learning Toolkit libraries can be added by adhering to the ML-SPL API and using the libraries that come with the Python for Scientific Computing app.

For linear fitting, it's not terribly difficult to write in SPL. But using an MLTK, Python, or R library is just easier to maintain. (In the past, I asked about flat detection and a community legend helped with that. But the SPL is not easily adaptable. I don't have it now, and it isn't very easy to find in my history, although you can always search.)
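For reference, here is why a linear fit is feasible with ordinary aggregation: the least-squares line $y = a x + b$ over $n$ points has a closed form that needs only a count and a handful of sums. The symbols below are introduced for illustration and do not come from any Splunk documentation; $x_i$ could be a timestamp or a sequence number, and $y_i$ the logged value.

```latex
a = \frac{n \sum x_i y_i - \sum x_i \sum y_i}{n \sum x_i^2 - \left(\sum x_i\right)^2},
\qquad
b = \frac{\sum y_i - a \sum x_i}{n}

\text{RSS} = \sum \left(y_i - a x_i - b\right)^2
           = \sum y_i^2 - a \sum x_i y_i - b \sum y_i
```

A residual sum of squares (RSS) near zero corresponds to a constant-rate stretch, and a slope $a$ near zero with RSS near zero corresponds to a flat one.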

@yuanliu Actually, I don't currently want to predict or forecast anything.

I simply need to discover these points in the last 24 hours of data. The data already exists, and the time scope is 24 hours.

The idea, if feasible at all, is not to predict, but to use MLTK's training function as a detection tool. It's like calculating a multiplication (16 x 25) by performing a division (16/4), since 16 x 25 = (16/4) x 100.