- Splunk Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- How to delete events which is decreasing inbetween...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

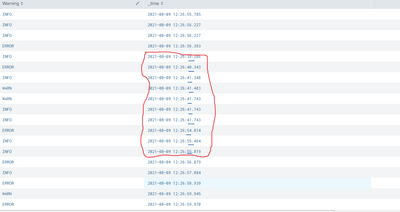

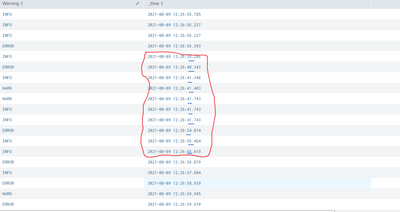

How to delete events which is decreasing inbetween?

How to delete events which is decreasing inbetween. I have extracted the _time column using regex so that splunk default sorting won't happens.

| _time | Warning |

| 2021-08-09 12:26:55.7852 | INFO |

| 2021-08-09 12:26:56.2278 | INFO |

| 2021-08-09 12:26:56.2278 | INFO |

| 2021-08-09 12:26:56.3939 | ERROR |

| 2021-08-09 12:26:39.2861 | INFO |

| 2021-08-09 12:26:40.3430 | ERROR |

| 2021-08-09 12:26:41.3482 | INFO |

| 2021-08-09 12:26:41.4832 | WARN |

| 2021-08-09 12:26:41.7433 | WARN |

| 2021-08-09 12:26:41.7433 | INFO |

| 2021-08-09 12:26:41.7433 | INFO |

| 2021-08-09 12:26:54.8140 | ERROR |

| 2021-08-09 12:26:55.4640 | INFO |

| 2021-08-09 12:26:55.8192 | INFO |

| 2021-08-09 12:26:56.8794 | ERROR |

| 2021-08-09 12:26:57.8846 | INFO |

| 2021-08-09 12:26:58.9398 | ERROR |

| 2021-08-09 12:26:59.9450 | WARN |

| 2021-08-09 12:26:59.9700 | ERROR |

| 2021-08-09 12:26:59.9700 | INFO |

| 2021-08-09 12:27:00.8201 | INFO |

| 2021-08-09 12:27:00.8401 | INFO |

| 2021-08-09 12:27:01.0352 | ERROR |

| 2021-08-09 12:27:00.8901 | INFO |

| 2021-08-09 12:27:00.8701 | INFO |

| 2021-08-09 12:27:01.0452 | ERROR |

It should ignore the events which i marked in "arial black", because "seconds" value starting decreasing.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You need to share more information about your data and process. For starters,

- "extracted the _time column using regex." How is _time before this extraction?

- Regex produces strings in _time column. Did you convert it to numeric representation with strptime as @gcusello suggested? "Delta value is coming as 'negative' or 'zero'" would imply that _time is already numeric. But could you confirm?

- Did you sort (or otherwise alter order of events) before delta? "Delta value is coming as 'negative' or 'zero' for all the rows" strongly suggests that the order has changed. Base on the order in your original post, you would have gotten the following

As you can see, only 3 rows are negative. For all deltas to be negative, _time would have to be all in decremental order.Warning _time delta INFO 2021-08-09 12:26:55.785 INFO 2021-08-09 12:26:56.227 0.442600 INFO 2021-08-09 12:26:56.227 0.000000 ERROR 2021-08-09 12:26:56.393 0.166100 INFO 2021-08-09 12:26:39.286 -17.107800 ERROR 2021-08-09 12:26:40.343 1.056900 INFO 2021-08-09 12:26:41.348 1.005200 WARN 2021-08-09 12:26:41.483 0.135000 WARN 2021-08-09 12:26:41.743 0.260100 INFO 2021-08-09 12:26:41.743 0.000000 INFO 2021-08-09 12:26:41.743 0.000000 ERROR 2021-08-09 12:26:54.814 13.070700 INFO 2021-08-09 12:26:55.464 0.650000 INFO 2021-08-09 12:26:55.819 0.355200 ERROR 2021-08-09 12:26:56.879 1.060200 INFO 2021-08-09 12:26:57.884 1.005200 ERROR 2021-08-09 12:26:58.939 1.055200 WARN 2021-08-09 12:26:59.945 1.005200 ERROR 2021-08-09 12:26:59.970 0.025000 INFO 2021-08-09 12:26:59.970 0.000000 INFO 2021-08-09 12:27:00.820 0.850100 INFO 2021-08-09 12:27:00.840 0.020000 ERROR 2021-08-09 12:27:01.035 0.195100 INFO 2021-08-09 12:27:00.890 -0.145100 INFO 2021-08-09 12:27:00.870 -0.020000 ERROR 2021-08-09 12:27:01.045 0.175100

I realize that using delta alone is not a complete solution because delta is sometimes positive even when the time is older than a previous forwarded row, such as in this segment.

| _time | Warning | ||

| ... | ... | ... | |

| 1 | 2021-08-09 12:26:56.3939 | ERROR | 0.166100 |

| 2 | 2021-08-09 12:26:39.2861 | INFO | -17.107800 |

| 3 | 2021-08-09 12:26:40.3430 | ERROR | 1.056900 |

Before even attempting to calculate, could you answer a more fundamental question: What is so important in the original order that those "decremented" events must be removed (as opposed to simply sort according to your extracted _time)?

Here is an emulation used to calculate deltas in the order you presented:

| makeresults

| eval _raw = "_time Warning

2021-08-09 12:26:55.7852 INFO

2021-08-09 12:26:56.2278 INFO

2021-08-09 12:26:56.2278 INFO

2021-08-09 12:26:56.3939 ERROR

2021-08-09 12:26:39.2861 INFO

2021-08-09 12:26:40.3430 ERROR

2021-08-09 12:26:41.3482 INFO

2021-08-09 12:26:41.4832 WARN

2021-08-09 12:26:41.7433 WARN

2021-08-09 12:26:41.7433 INFO

2021-08-09 12:26:41.7433 INFO

2021-08-09 12:26:54.8140 ERROR

2021-08-09 12:26:55.4640 INFO

2021-08-09 12:26:55.8192 INFO

2021-08-09 12:26:56.8794 ERROR

2021-08-09 12:26:57.8846 INFO

2021-08-09 12:26:58.9398 ERROR

2021-08-09 12:26:59.9450 WARN

2021-08-09 12:26:59.9700 ERROR

2021-08-09 12:26:59.9700 INFO

2021-08-09 12:27:00.8201 INFO

2021-08-09 12:27:00.8401 INFO

2021-08-09 12:27:01.0352 ERROR

2021-08-09 12:27:00.8901 INFO

2021-08-09 12:27:00.8701 INFO

2021-08-09 12:27:01.0452 ERROR"

| multikv forceheader=1

| fields - _* linecount

| eval time = strptime(time, "%F %H:%M:%S.%4N")

| rename time as _time

| delta _time as delta

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @yuanliu ,

Thanks for your reply. I tried your query,

But the marked rows should be deleted because seconds value decreasing in between.

Time before extraction will show the time when data is uploaded

Why I'm ignoring those rows mean, when i'm calculating some calculation, there is a huge difference in hrs

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Could you confirm or deny that when "Delta value is coming as 'negative' or 'zero'", you performed extra sort on _time? Or is the natural order already in decreasing _time order so no exclusion should be necessary in the first place?

- The section you marked are not all in decreasing order. Only the first one in the group (2021-08-09 12:26:39.286) is 17 seconds earlier than the previous one (2021-08-09 12:26:56.393). Not only is each of the following timestamps later than 2021-08-09 12:26:39.286 (first one in the group), but also each is later than the one preceding it. For example, 2021-08-09 12:26:40.343 is 1 second later than 2021-08-09 12:26:39.286; 2021-08-09 12:26:41.348 is another 1 second later than 2021-08-09 12:26:40.343; and so on.

What I really mean is: Why shouldn't these events be sorted to order (descending or ascending)? Is there any significance/meaning of showing the disorder? You can simply do

| sort _timeand the disorder will disappear.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

What do you mean by "delete"? You don't want to show them in the results? And how would you want to find such events as search results are by default sorted in reverse chronological order?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @PickleRick ,

I don't want to show those rows in search result.

It won't be in sorted order since i extracted time by regex.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ok, let me explain something to you. The results will be in chronological order according to the default Splunk's _time field. Each event in Splunk has a _time field. So if you're "extracting the _time field by regex", you're overwriting its value after the event has already been found and retrieved from the index.

If the fields you're extracting manually this way is the main timestamp and should be the event's _time field, it means your source(s) is(are) not properly onboarded and no proper timestamp recognition/extraction is being performed on your events.

If, however the timestamp you're extracting is not the main timestamp of the event, you shouldn't extract it as _time but as some other-named field. It's not that it's technically wrong, it's just a matter of convention and good practices.

Anyway, if you get that field with regex, you just have a string value. Splunk doesn't know that it's a timestamp and cannot do any arithmetics or comparisons on that. You need to parse it with strptime function to get a numerical representation of the point in time (so called "unix timestamp"). Then you can try manipulating your data (most probably using streamstats).

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @kirthika26,

you could calculate delta (https://docs.splunk.com/Documentation/Splunk/9.0.5/SearchReference/Delta#:~:text=The%20delta%20comma....) with the previous event and filter the negative ones:

<your_search>

| delta _time AS delta

| where delta>0

| table _time warningCiao.

Giuseppe

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Delta value is coming as "negative" or "zero" for all the rows. So can't able to delete the row.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @kirthika26,

probably the timestamp you're using isn't _time, so, please try:

<your_search>

| eval timestamp=strptime(timestamp,"%Y-%m-%d %H:%M:%S.%4N")

| delta timestamp AS delta

| where delta>0

| table timestamp warningCiao.

Giuseppe

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @gcusello ,

I have tried the same way, but can't able to get the desired results.

| rex field=_raw "(?<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}.\d{4})"

| eval timestamp=strptime(timestamp,"%Y-%m-%d %H:%M:%S.%4N")

| delta timestamp AS delta

| table timestamp delta

Attached sample data in html.

timestamp delta

1635312047

1635312047 -0.0156

1635312046 -0.3501

1628492228 -6819818.165

1628492227 -1.0652

1628492226 -1.0652

1628492225 -1.0052

1628492224 -1.0502

1628492223 -1.0052

1628492222 -1.0502

1628492221 -1.0052

1628492221 -0.1951

1628492221 -0.02

1628492220 -0.8501

1628492220 0

1628492220 -0.025

1628492219 -1.0052

1628492218 -1.0552

1628492217 -1.0052

1628492216 -1.0602

1628492215 -0.3552

1628492215 -0.65

1628492214 -1.0503

1628492213 -1.0052

1628492212 -1.0552

1628492211 -1.0043

1628492210 -0.8397

1628492210 -0.005

1628492210 -0.1842

1628492210 -0.005

1628492210 -0.02

1628492210 -0.025

1628492210 0

1628492210 0

1628492209 -0.9772

1628492208 -1.0584

1628492207 -1.0052

1628492206 -0.2851

1628492206 -0.01

1628492206 -0.01

1628492206 0

1628492206 -0.015

1628492206 -0.7351

1628492205 -0.3401

1628492205 0

1628492205 -0.6651

1628492203 -1.0502

1628492203 -0.2852

1628492203 0

1628492203 -0.01

1628492203 0

1628492203 0

1628492203 -0.01

1628492202 -0.7001

1628492202 -0.7201

1628492202 0

1628492202 0

1628492201 -0.2601

1628492201 -0.135

1628492200 -1.0052

1628492199 -1.0569

1628492216 17.1078

1628492216 -0.1661

1628492216 0

1628492216 -0.4426

1628492216 -0.05

1628492216 0

1628492215 -0.431

1628492215 -0.0854

1628492215 -0.5434

1628492215 -0.07

1628492215 -0.0611

1628492214 -0.1441

1628492214 -0.033

1628492214 -0.023

1628492214 -0.042

1628492214 -0.0511

1628492214 -0.01

1628492214 0

1628492214 -0.1271

1628492214 -0.037

1628492214 -0.034

1628492214 -0.017

1628492214 -0.0431

1628492214 -0.016

1628492214 -0.025

1628492214 -0.065

1628492214 -0.0351

1628492214 -0.039

1628492214 -0.054

1628492214 -0.1441

1628492214 -0.03

1628492214 -0.02

1628492214 -0.035

1628492213 -0.1901

1628492213 0

1628492213 0

1628492213 0

1628492213 -0.04

1628492213 -0.085

1628492213 0

1628492213 -0.01

1628492213 0

1628492213 -0.115

1628492213 -0.1651

1628492213 -0.015

1628492213 0

1628492213 0

1628492212 -0.4701

1628492212 0

1628492212 0

1628492212 -0.225

1628492212 -0.03

1628492212 -0.02

1628492212 -0.5801

1628492211 -0.6301

1628492211 0

1628492211 -0.01

1628492211 0

1628492211 0

1628492211 -0.1051

1628492211 -0.01

1628492211 -0.145

1628492211 -0.075

1628492211 -0.06

1628492210 -0.1

1628492210 -0.442

1628492210 -0.0156

1628492210 0

1628492210 -0.1094

1628492210 -0.1719

1628492210 -0.1562

1628492209 -0.0469

1628492209 -0.1406

1628492209 0

1628492209 -0.0312

1628492209 0

1628492209 -0.4688

1628492209 -0.0782

1628492209 -0.0312

1628492209 -0.0313

1628492209 -0.0156

1628492209 -0.0156

1628492209 0

1628492208 -0.3594

1626875194 -1617014.747

1626875194 -0.0065- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @kirthika26 ,

please try this:

<your_search>

| rex field=_raw "(?<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}.\d{4})"

| eval timestamp=strptime(timestamp,"%Y-%m-%d %H:%M:%S.%4N")

| delta timestamp AS delta

| eval timestamp=strftime(timestamp,"%Y-%m-%d %H:%M:%S.%4N")

| sort -_time

| table _time timestamp deltaCiao.

Giuseppe

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @gcusello ,

Thanks for your reply.

But those rows marked in red should be removed since seconds value starts decreasing. I have marked in blue color