- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In the query below, for each host, I am searching for its performance data for each value for past 5 minutes.

The expected output is the following:

I have solved this problem using 4 joins… But that made the source code large and ugly…

Is there any way I can optimize the size of the query below?

Can a define a custom macro inside the query and call it several times with different parameters instead of copy-pasting the code?

index=perfmon host=lena counter="% Processor Time" earliest=-1m

| fields host,counter,Value

| eval ValueR_1m_Ago = round(Value, 2)

| eval HostUpperCase = upper(host)

| convert ctime(_time) as Time_1m_Ago

| fields HostUpperCase, counter, ValueR_1m_Ago, Time_1m_Ago

| join HostUpperCase

[search index=perfmon host=lena counter="% Processor Time" earliest=-2m latest=-1m

| fields host,counter,Value

| eval ValueR_2m_Ago = round(Value, 2)

| eval HostUpperCase = upper(host)

| convert ctime(_time) as Time_2m_Ago

| fields HostUpperCase, ValueR_2m_Ago, Time_2m_Ago ]

| join HostUpperCase

[search index=perfmon host=lena counter="% Processor Time" earliest=-3m latest=-2m

| fields host,counter,Value

| eval ValueR_3m_Ago = round(Value, 2)

| eval HostUpperCase = upper(host)

| convert ctime(_time) as Time_3m_Ago

| fields HostUpperCase, ValueR_3m_Ago, Time_3m_Ago ]

| join HostUpperCase

[search index=perfmon host=lena counter="% Processor Time" earliest=-4m latest=-3m

| fields host,counter,Value

| eval ValueR_4m_Ago = round(Value, 2)

| eval HostUpperCase = upper(host)

| convert ctime(_time) as Time_4m_Ago

| fields HostUpperCase, ValueR_4m_Ago, Time_4m_Ago ]

| join HostUpperCase

[search index=perfmon host=lena counter="% Processor Time" earliest=-5m latest=-4m

| fields host,counter,Value

| eval ValueR_5m_Ago = round(Value, 2)

| eval HostUpperCase = upper(host)

| convert ctime(_time) as Time_5m_Ago

| fields HostUpperCase, ValueR_5m_Ago, Time_5m_Ago ]

| DEDUP HostUpperCase

| sort -ValueR_1m_Ago

| table HostUpperCase

,counter

,ValueR_1m_Ago

,Time_1m_Ago

,ValueR_2m_Ago

,ValueR_3m_Ago

,ValueR_4m_Ago

,ValueR_5m_Ago

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi dzhariy,

looking at your search I was wondering how this could be done by using a stats and some eval tricks and guess what, I found a way to do this 🙂

Let me show you a run everywhere search (assuming you got the permission to search the index=_internal😞

index=_internal earliest=-5min@min sourcetype=splunkd | bucket _time span=1min | stats last(_time) AS last_time count AS per_min_count by _time, host, sourcetype

| eval 5min_ago = if(last_time > exact(relative_time(now(),"-6min@min")) AND last_time <= exact(relative_time(now(),"-5min@min")) , per_min_count ,"0")

| eval 4min_ago = if(last_time > exact(relative_time(now(),"-5min@min")) AND last_time <= exact(relative_time(now(),"-4min@min")) , per_min_count ,"0")

| eval 3min_ago = if(last_time > exact(relative_time(now(),"-4min@min")) AND last_time <= exact(relative_time(now(),"-3min@min")) , per_min_count ,"0")

| eval 2min_ago = if(last_time > exact(relative_time(now(),"-3min@min")) AND last_time <= exact(relative_time(now(),"-2min@min")) , per_min_count ,"0")

| eval 1min_ago = if(last_time > exact(relative_time(now(),"-2min@min")) AND last_time <= exact(relative_time(now(),"-1min@min")) , per_min_count ,"0")

| eval current_count = if(last_time > exact(relative_time(now(),"-1min@min")) AND last_time <= exact(relative_time(now(),"-0min@min")) , per_min_count ,"0")

| stats max(last_time) AS _time, values(host) AS host, values(sourcetype) AS sourcetype, max(current_count) AS current_count, max(1min_ago) AS 1min_ago, max(2min_ago) AS 2min_ago, max(3min_ago) AS 3min_ago, max(4min_ago) AS 4min_ago, max(5min_ago) AS 5min_ago

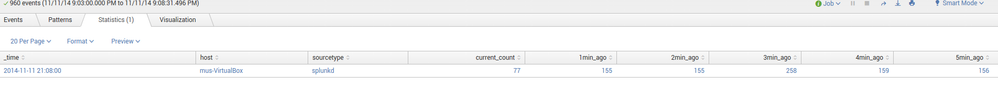

This will produce a table like this, which matches your result:

Now let me try to adapt this to your search and something like this should work:

index=perfmon host=lena counter="% Processor Time" earliest=-5min@min

| bucket _time span=1min

| stats last(_time) AS last_time max(exact(Value)) AS Value by _time, host, counter

| eval 5min_ago = if(last_time > exact(relative_time(now(),"-6min@min")) AND last_time <= exact(relative_time(now(),"-5min@min")) , Value ,"0")

| eval 4min_ago = if(last_time > exact(relative_time(now(),"-5min@min")) AND last_time <= exact(relative_time(now(),"-4min@min")) , Value ,"0")

| eval 3min_ago = if(last_time > exact(relative_time(now(),"-4min@min")) AND last_time <= exact(relative_time(now(),"-3min@min")) , Value ,"0")

| eval 2min_ago = if(last_time > exact(relative_time(now(),"-3min@min")) AND last_time <= exact(relative_time(now(),"-2min@min")) , Value ,"0")

| eval 1min_ago = if(last_time > exact(relative_time(now(),"-2min@min")) AND last_time <= exact(relative_time(now(),"-1min@min")) , Value ,"0")

| eval current_count = if(last_time > exact(relative_time(now(),"-1min@min")) AND last_time <= exact(relative_time(now(),"-0min@min")) , Value ,"0")

| stats max(last_time) AS _time, values(host) AS host, values(counter) AS counter, max(current_count) AS current_count, max(1min_ago) AS 1min_ago, max(2min_ago) AS 2min_ago, max(3min_ago) AS 3min_ago, max(4min_ago) AS 4min_ago, max(5min_ago) AS 5min_ago

The above search is untested due to missing windows events, so if there is any mistake in the search - adapt it to your needs 🙂

hope this helps ...

cheers, MuS

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi dzhariy,

looking at your search I was wondering how this could be done by using a stats and some eval tricks and guess what, I found a way to do this 🙂

Let me show you a run everywhere search (assuming you got the permission to search the index=_internal😞

index=_internal earliest=-5min@min sourcetype=splunkd | bucket _time span=1min | stats last(_time) AS last_time count AS per_min_count by _time, host, sourcetype

| eval 5min_ago = if(last_time > exact(relative_time(now(),"-6min@min")) AND last_time <= exact(relative_time(now(),"-5min@min")) , per_min_count ,"0")

| eval 4min_ago = if(last_time > exact(relative_time(now(),"-5min@min")) AND last_time <= exact(relative_time(now(),"-4min@min")) , per_min_count ,"0")

| eval 3min_ago = if(last_time > exact(relative_time(now(),"-4min@min")) AND last_time <= exact(relative_time(now(),"-3min@min")) , per_min_count ,"0")

| eval 2min_ago = if(last_time > exact(relative_time(now(),"-3min@min")) AND last_time <= exact(relative_time(now(),"-2min@min")) , per_min_count ,"0")

| eval 1min_ago = if(last_time > exact(relative_time(now(),"-2min@min")) AND last_time <= exact(relative_time(now(),"-1min@min")) , per_min_count ,"0")

| eval current_count = if(last_time > exact(relative_time(now(),"-1min@min")) AND last_time <= exact(relative_time(now(),"-0min@min")) , per_min_count ,"0")

| stats max(last_time) AS _time, values(host) AS host, values(sourcetype) AS sourcetype, max(current_count) AS current_count, max(1min_ago) AS 1min_ago, max(2min_ago) AS 2min_ago, max(3min_ago) AS 3min_ago, max(4min_ago) AS 4min_ago, max(5min_ago) AS 5min_ago

This will produce a table like this, which matches your result:

Now let me try to adapt this to your search and something like this should work:

index=perfmon host=lena counter="% Processor Time" earliest=-5min@min

| bucket _time span=1min

| stats last(_time) AS last_time max(exact(Value)) AS Value by _time, host, counter

| eval 5min_ago = if(last_time > exact(relative_time(now(),"-6min@min")) AND last_time <= exact(relative_time(now(),"-5min@min")) , Value ,"0")

| eval 4min_ago = if(last_time > exact(relative_time(now(),"-5min@min")) AND last_time <= exact(relative_time(now(),"-4min@min")) , Value ,"0")

| eval 3min_ago = if(last_time > exact(relative_time(now(),"-4min@min")) AND last_time <= exact(relative_time(now(),"-3min@min")) , Value ,"0")

| eval 2min_ago = if(last_time > exact(relative_time(now(),"-3min@min")) AND last_time <= exact(relative_time(now(),"-2min@min")) , Value ,"0")

| eval 1min_ago = if(last_time > exact(relative_time(now(),"-2min@min")) AND last_time <= exact(relative_time(now(),"-1min@min")) , Value ,"0")

| eval current_count = if(last_time > exact(relative_time(now(),"-1min@min")) AND last_time <= exact(relative_time(now(),"-0min@min")) , Value ,"0")

| stats max(last_time) AS _time, values(host) AS host, values(counter) AS counter, max(current_count) AS current_count, max(1min_ago) AS 1min_ago, max(2min_ago) AS 2min_ago, max(3min_ago) AS 3min_ago, max(4min_ago) AS 4min_ago, max(5min_ago) AS 5min_ago

The above search is untested due to missing windows events, so if there is any mistake in the search - adapt it to your needs 🙂

hope this helps ...

cheers, MuS

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi MuS,

Thank you. I have executed your original query for splunkd, and looks like it is working.

As you warned, the query for perfomon is not working “out of the box” 😉 However, I believe, the general idea of your query is the way to go for me: I just need more time to figure out why it returns zeros.

I think this is the answer for my question. Thank you, MuS!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Update ping: today, I was able to test it on real Windows events and here is the correct first stats

| stats last(_time) AS last_time last(eval(round(max(Value),2 ))) AS Value by _time, host, counter

replace it in the provided search and it will work 🙂 This is needed because the field Value is a multivalue field.

cheers, MuS

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

thank you MuS!

Now query works on my sandbox environment and I have tried it on production one.

The query speed was increased (according to my naked eye) in 4-5 times.

THANK YOU!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi dzhariy,

Take a look at the timewrap app, this will provide a new search command that will simplify such searches.

hope this helps...

cheers, MuS

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you MuS,

I’ve looked through the timewrap app and is definitely worth to look at. Unfortunately, in my organization,

I am a regular splunk user with restricted permissions and connot install apps. I will try to ask my administrators to install the app… Anyway, I still can experiment on my localhost splunk.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi dzhariy, have a look at the soon to be posted new answer here....I did some stats craziness - again - which could replace your monster join search

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That, very much so.

For other cases that don't get solved by timewrap you can define macros through Settings -> Advanced search -> Macros and even give them parameters, in this example your varying time ranges.