- How do you present an All Green Dashboard when no ...

I have a requirement to present a management dashboard that shows the number of alerts triggered per client, but management would like to see all green when no alerts have been triggered.

The search is simple at the moment:

index="alerts"

| eval active_alert=if(_time + expires > now(), "t", "f")

| where active_alert="t"

| stats count by ClientName

The dashboard shows a grid with the count of alerts per client, generated dynamically using the Trellis option.

Ideally, I'd like to show the full list of clients, with those that have no alerts shown in green (the list of clients can be retrieved from a lookup), but I'm not sure how to do this, or whether it's possible.

I assume that if I can do this, it would let me show all green when there are no alerts.

I have tried the appendpipe command, but it seems to break the trellis display.

The second part of this is whether I can pass a field that exists in the events but is not part of the search results (e.g. sourceIndex) as a token for use with drilldown.

I'd appreciate any assistance with this.

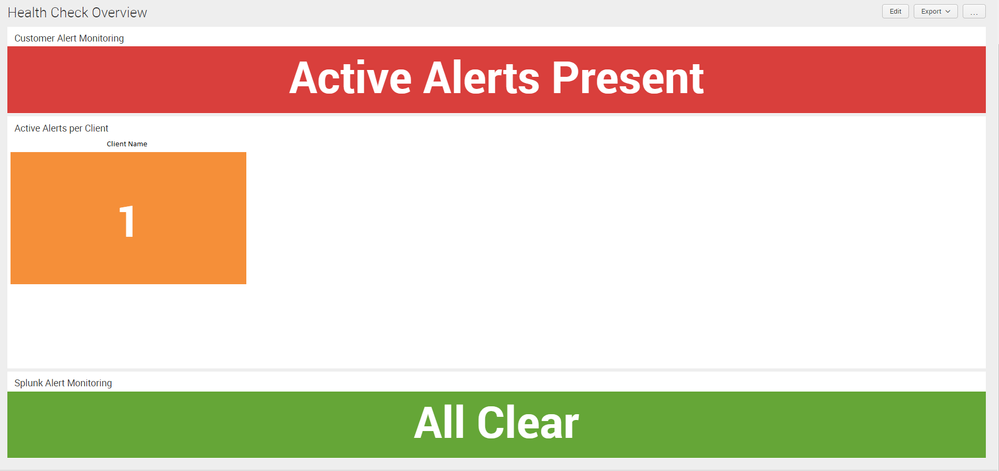

After stuffing around with this for a while and reading many other people's posts, I managed to figure out something close enough to what I wanted.

As shown in the top section of the screenshot, when alerts are present I get red and a count of active alerts per client. When there are no alerts, I get green, as shown in the bottom section.

For anyone else interested in this, I have added the code below, slightly altered so that it runs anywhere. We generate alerts into a summary table and monitor that for our live dashboard:

<form>

<label>Go Anywhere Health Check Overview</label>

<fieldset submitButton="false">

<input type="dropdown" token="sourcetype" searchWhenChanged="true">

<label>sourcetype</label>

<choice value="splunkd">Splunkd</choice>

<choice value="Spider">random</choice>

<default>splunkd</default>

<initialValue>splunkd</initialValue>

</input>

</fieldset>

<row>

<panel>

<title>Customer Alert Monitoring</title>

<single>

<search>

<query>index=_internal sourcetype=$sourcetype$

| eval active_event=if(_time + 300 > now(), "t", "f")

| where active_event="t"

| stats count

| eval result=if(count>0,"Active Events Present","All Clear")

| table result

| eval range=if(result=="Active Events Present", "severe", "low")</query>

<earliest>-60m@m</earliest>

<latest>now</latest>

<sampleRatio>1</sampleRatio>

<refresh>10m</refresh>

<refreshType>delay</refreshType>

</search>

<option name="colorBy">value</option>

<option name="colorMode">block</option>

</single>

</panel>

</row>

<row>

<panel depends="$ActiveEvents$">

<title>Active Events per Component</title>

<single>

<search id="EventsCheck">

<progress>

<condition match="'job.resultCount' > 0">

<set token="ActiveEvents">true</set>

</condition>

<condition>

<unset token="ActiveEvents"></unset>

</condition>

</progress>

<query>index=_internal sourcetype=$sourcetype$

| eval active_event=if(_time + 300 > now(), "t", "f")

| where active_event="t"

| stats count by log_level</query>

<earliest>-60m@m</earliest>

<latest>now</latest>

<sampleRatio>1</sampleRatio>

<refresh>10m</refresh>

<refreshType>delay</refreshType>

</search>

<option name="colorBy">value</option>

<option name="colorMode">block</option>

<option name="rangeColors">["0xf7bc38","0xf58f39","0xd93f3c"]</option>

<option name="rangeValues">[1,70]</option>

<option name="trellis.enabled">1</option>

<option name="trellis.scales.shared">1</option>

<option name="trellis.size">large</option>

<option name="useColors">1</option>

</single>

</panel>

</row>

</form>
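The `range` field in the first panel's query can also be derived with the `rangemap` command instead of a second `eval`. The following is only a sketch of that variation, with the `$sourcetype$` token replaced by the literal `splunkd` so it can be pasted into the search bar. It is meant to produce the same two fields (`result` and `range`) as the query in the dashboard above.

```spl
index=_internal sourcetype=splunkd
| eval active_event=if(_time + 300 > now(), "t", "f")
| where active_event="t"
| stats count
| rangemap field=count low=0-0 default=severe
| eval result=if(count>0, "Active Events Present", "All Clear")
| table result range
```

Here a count of exactly 0 maps to `low`, and any other count falls through to the `default` of `severe`.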

Hi kozanic_FF,

take a look at this run-everywhere search, which shows a text message when no search results are found:

index=_internal place anything here to produce an empty search

| stats count by sourcetype

| append

[| stats count

| eval sourcetype=if(isnull(sourcetype), "Nothing to see here, move along!", sourcetype)]

| streamstats count AS line_num

| eval head_num=if(line_num > 1, line_num - 1, 1)

| where NOT ( count=0 AND head_num < line_num )

| table sourcetype count

You need to change the search filter string on each run; otherwise, on the second run, Splunk will find the filter string from the first run in its own logs 😉

Adapt the search to your needs, hope this helps ...

cheers, MuS
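To see the empty case of the search above without having to invent a new filter string for each run, the base search can be swapped for one that is guaranteed to return nothing. This is a sketch, not part of the original answer: `| makeresults | where 1=0` is an assumption used here purely to force zero rows, and the placeholder text is shortened to `none`.

```spl
| makeresults
| where 1=0
| stats count by sourcetype
| append
    [| stats count
    | eval sourcetype=if(isnull(sourcetype), "none", sourcetype)]
| streamstats count AS line_num
| eval head_num=if(line_num > 1, line_num - 1, 1)
| where NOT ( count=0 AND head_num < line_num )
| table sourcetype count
```

With no real rows, the appended row is line 1, so `head_num` equals `line_num` and the `where` clause keeps it. The search should return a single row with `sourcetype=none` and `count=0`. When real rows exist, the appended row sits on a later line, `head_num` is smaller than `line_num`, and the zero-count row is dropped.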

Thanks MuS,

This works great with a table of results, but it didn't do what I wanted for what I'm trying to achieve.

When I try to get a count per sourcetype in a single value visualisation, using Trellis to split by sourcetype, it just breaks.

Hi kozanic_FF,

it just needs a tiny tweak: add an additional stats at the end, and it works even with single value and trellis 😉

Try this one:

index=_internal

| stats count by sourcetype

| append

[| stats count

| eval sourcetype=if(isnull(sourcetype), "none", sourcetype)]

| streamstats count AS line_num

| eval head_num=if(line_num > 1, line_num - 1, 1)

| where NOT ( count=0 AND head_num < line_num )

| table sourcetype count

| stats sum(count) AS count by sourcetype

Again, it is a run-everywhere SPL, and you need to add random strings to the base search to produce an empty result and see the "none" case.

cheers, MuS
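For the original requirement (every client shown, with clients that have no alerts at zero), the same append-then-stats idea can be applied with the client lookup mentioned in the question. The following is a minimal sketch under stated assumptions: `clients.csv` is a hypothetical lookup file name, and it is assumed to contain a `ClientName` column matching the field in the `alerts` index.

```spl
index="alerts"
| eval active_alert=if(_time + expires > now(), "t", "f")
| where active_alert="t"
| stats count by ClientName
| append
    [| inputlookup clients.csv
    | fields ClientName
    | eval count=0]
| stats sum(count) AS count by ClientName
```

Every client in the lookup contributes a row with `count=0`, and the final `stats` adds any real alert counts on top, so clients without active alerts still get a trellis tile showing 0. To render those tiles green, the single value's `rangeColors` and `rangeValues` options would need a first band that covers 0.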