BREAK_ONLY_BEFORE_DATE=TRUE seems to not be workin...


A custom web application produces logs in the Tomcat format, like this:

2020-01-31 18:19:02,091 DEBUG [com.vendor.make.services.ServiceName] (pool-7-thread-44) - <Short Form: time elapsed 120, pause interval 360, workflows to start 0>

This is followed by a potentially very long message: anywhere from 1 to 400 lines, under 50K characters, often JSON.

The events always begin on a new line with a timestamp, always in the same format (shown above).

... yet Splunk breaks up long events (I've seen events of 3K characters and more broken up), and so far it looks like all of them are logged JSON:

1/31/20

6:21:02.419 PM

"preroll_start-eVar32" : "live"

},

"feed:relateds" : [ ]

}, {

"id" : asset_id,

Show all 9 lines

host = hostname source = /custom_app/tomcat/logs/custom_app.log sourcetype = tomcat:custom_app

It also seems to do so consistently, and always in the same place regardless of the length of the event, right between these two lines:

"preroll_start-eVar29" : "feed_app|sec_us|||asset_id|video|200131_feedl_headlines_3pm_video",

"preroll_start-eVar32" : "live"

Any idea what trips it, or what can be done to force Splunk to keep these events together?

Thanks!

Incidentals:

Setting TRUNCATE = 0 in props.conf on the CM (followed by splunk apply cluster-bundle) seems to make no difference.

/opt/splunk/etc/master-apps/_cluster/local/props.conf on the CM:

[tomcat:custom_app]
TRUNCATE = 0
EXTRACT-.... = ....
SEDCMD-scrub_passwords = s/STRING1_PASS=([^\s]+)/STRING1_PASS=#####/g


Short answer:

MAX_EVENTS=10000

... in the appropriate sourcetype stanza in props.conf.

Long answer:

The "Line breaking issues" section of the "Resolve data quality issues" Splunk KB article pointed me in the right direction:

MAX_EVENTS defines the maximum number of lines in an event.

In other words, if an event of a given sourcetype may run longer than 256 lines (the default), set MAX_EVENTS to the largest line count you expect. In my case the super-long JSONs could run to thousands of lines, so I set it to 10000.
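To illustrate why the break always lands between the same two lines, here is a deliberately simplified Python model of line merging. It is a toy built on assumptions, not Splunk's actual aggregator: a new event starts on any line matching the timestamp format from this thread, and is also forced whenever the current event already holds `max_events` lines. If the JSON payload has a fairly fixed structure, the line right after the cap is plausibly the same key every time, which would fit the "always in the same place" symptom. The function name `merge_lines` and the sample event are made up for illustration.

```python
import re

# Assumed event header, modeled on the sample line in the question:
# "2020-01-31 18:19:02,091 DEBUG [...] (pool-7-thread-44) - <...>"
HEADER = re.compile(r"^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}\s")


def merge_lines(lines, max_events=256):
    """Toy model of SHOULD_LINEMERGE=true capped by MAX_EVENTS.

    A new event starts on every header line, and is also forced once the
    current event already holds max_events lines (the part of an oversized
    event past the cap spills into a new, header-less event).
    """
    events, current = [], []
    for line in lines:
        at_cap = len(current) >= max_events
        if current and (HEADER.match(line) or at_cap):
            events.append(current)
            current = []
        current.append(line)
    if current:
        events.append(current)
    return events


# Made-up 300-line event: one header plus 299 JSON-ish body lines.
header = "2020-01-31 18:19:02,091 DEBUG [svc] (pool-7-thread-44) - <JSON Feed:"
body = [f'  "key{i}" : "value",' for i in range(299)]
print([len(e) for e in merge_lines([header] + body)])  # [256, 44]
print([len(e) for e in merge_lines([header] + body, max_events=10000)])  # [300]
```

Raising the cap is the only thing that keeps the made-up event whole in this model; the timestamp rule alone never fires inside the JSON body.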

Clues:

"Show all 257 lines" in an event, like this:

2020-02-08 23:25:02,439 TRACE [com.vendor.services.ingest.ExternalIngestService] (pool-7-thread-28) - <JSON Feed:

{

"lastBuildDate" : "2020-02-09 05:13:34.034+0000",

"****:analytics" : [ {

"****:analytics-pageName" : "alertsindex|auto-play",

Show all 257 lines

sourcetype = tomcat:custom_app

This means the event is around 256 lines long, and given the default limit of 256 lines per event, that should raise suspicion.
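The same clue can be checked outside the UI. This hypothetical helper, `suspect_truncated`, flags events whose line count is at or above the default MAX_EVENTS of 256, i.e. events that were probably ended by the cap rather than by a real timestamp. Inside Splunk, the default `linecount` field should serve the same purpose in a search; the sketch below is only an offline illustration.

```python
DEFAULT_MAX_EVENTS = 256  # documented default for MAX_EVENTS in props.conf


def suspect_truncated(events, max_events=DEFAULT_MAX_EVENTS):
    """Return the indexes of events whose line count reaches max_events.

    Such events were most likely ended by the MAX_EVENTS cap rather than by
    a genuine event boundary, so the event after each one is probably a
    fragment.
    """
    return [
        i for i, raw in enumerate(events)
        if len(raw.splitlines()) >= max_events
    ]


events = [
    "2020-01-31 18:19:02,091 DEBUG single-line event",
    "\n".join(["x"] * 256),  # exactly at the cap
    "\n".join(["x"] * 257),  # e.g. "Show all 257 lines"
]
print(suspect_truncated(events))  # [1, 2]
```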

Another clue, mentioned in "Resolve data quality issues", is a "MAX_EVENTS (256) was exceeded without a single event break" warning in splunkd.log, e.g.:

12-07-2016 09:32:32.876 -0500 WARN AggregatorMiningProcessor - Changing breaking behavior for event stream because MAX_EVENTS (256) was exceeded without a single event break. Will set BREAK_ONLY_BEFORE_DATE to False, and unset any MUST_NOT_BREAK_BEFORE or MUST_NOT_BREAK_AFTER rules. Typically this will amount to treating this data as single-line only.
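If you have shell access to the instances that parse this data (indexers or heavy forwarders), a small Python sketch like this one can pull those warnings out of splunkd.log. The helper names are hypothetical, and the default path assumes `$SPLUNK_HOME` is `/opt/splunk`, as in the question. Since splunkd.log is also indexed into `_internal`, searching there is an alternative.

```python
# Substrings taken from the warning quoted above.
COMPONENT = "AggregatorMiningProcessor"
MARKER = "was exceeded without a single event break"


def find_max_events_warnings(lines):
    """Yield log lines where the aggregator reports MAX_EVENTS was exceeded."""
    for line in lines:
        if COMPONENT in line and MARKER in line:
            yield line.rstrip("\r\n")


def scan_splunkd_log(path="/opt/splunk/var/log/splunk/splunkd.log"):
    """Return all matching warnings from a splunkd.log file.

    The default path assumes $SPLUNK_HOME=/opt/splunk; adjust as needed.
    """
    with open(path, encoding="utf-8", errors="replace") as fh:
        return list(find_max_events_warnings(fh))
```

Calling `scan_splunkd_log()` on each parsing instance returns the matching lines; an empty list suggests the cap was not hit there.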

P.S. To me, MAX_EVENTS is confusing: MAX_LINES would have been much easier to digest.


Hi mitag,

Try below Syntax in your props.conf under sourcetype.

[Sourcetype]

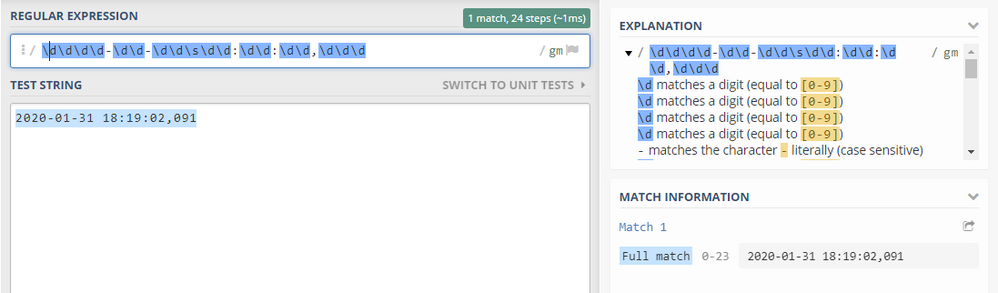

BREAK_ONLY_BEFORE = ^\d\d\d\d-\d\d-\d\d\s\d\d:\d\d:\d\d,\d\d\d

NO_BINARY_CHECK = 1

SHOULD_LINEMERGE = true

TIME_FORMAT = %Y-%m-%d %H:%M:%S,%3Q

The regex in above syntax is matching with your event format.

PFA screenshot for your ref.
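A quick way to sanity-check that regex outside Splunk is to run it in Python against lines from this thread. This assumes Python's `re` behaves like Splunk's PCRE for a pattern this simple, and it matches line by line, since BREAK_ONLY_BEFORE is evaluated against each new line.

```python
import re

# Pattern copied verbatim from the BREAK_ONLY_BEFORE line above.
BREAK_ONLY_BEFORE = re.compile(r"^\d\d\d\d-\d\d-\d\d\s\d\d:\d\d:\d\d,\d\d\d")

# Lines taken from the question and the accepted answer.
samples = [
    "2020-01-31 18:19:02,091 DEBUG [com.vendor.make.services.ServiceName] "
    "(pool-7-thread-44) - <Short Form: time elapsed 120, pause interval 360, "
    "workflows to start 0>",
    '"preroll_start-eVar32" : "live"',
    '"lastBuildDate" : "2020-02-09 05:13:34.034+0000",',
]

for line in samples:
    # BREAK_ONLY_BEFORE is evaluated per line, so match from the line start.
    print(bool(BREAK_ONLY_BEFORE.match(line)))
# True, False, False
```

Only the real header matches; the JSON lines, including the one that embeds a date, do not.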


It would probably work - but:

- it won't scale or port: if the app is moved to non-US date/time notation, this will break, and I don't want to write regexes for every possible scenario (not my job).

- it doesn't answer part of my question: why does Splunk do what it does? What trips it into breaking the event between those specific lines, regardless of the number of lines or characters in the event?

Without an answer to that last one (expected behavior? a bug? just my Splunk version, or all of them? Is this documented anywhere?), we're left with unpredictable behavior that can potentially break things.

Perhaps the bottom line is this:

What is the sure-fire way to force Splunk to break a long event only on a Splunk-compliant timestamp? (If it's just TRUNCATE = 0 plus the default SHOULD_LINEMERGE = true, then it's not working in this case, and I need help figuring out why.)

(Related: how can I search for improperly broken events across all datasets, i.e. events Splunk split into several, intentionally or not? Is there a flag, field, or tag assigned to such events?)


Using LINE_BREAKER= with SHOULD_LINEMERGE=false will always be WAAAAAAAY faster than using SHOULD_LINEMERGE=true. Obviously, the better the regex in your LINE_BREAKER, the more efficient event processing will be, so always spend extra time optimizing your LINE_BREAKER.

@woodcock says.
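The Python sketch below approximates what LINE_BREAKER does with SHOULD_LINEMERGE=false: the text matched by the first capture group is treated as the boundary and discarded, whatever precedes it ends one event, and whatever follows starts the next. The pattern is a hypothetical one built from this thread's timestamp format (in props.conf it would read `LINE_BREAKER = ([\r\n]+)\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}\s`). It only breaks on newlines that are followed by a header, so newlines inside the JSON body stay within the event. `break_events` is a made-up helper, not Splunk code.

```python
import re

# Hypothetical LINE_BREAKER: a run of newlines counts as a boundary only when
# it is followed by a "YYYY-MM-DD HH:MM:SS,mmm " header.
LINE_BREAKER = re.compile(r"([\r\n]+)\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}\s")


def break_events(raw, breaker=LINE_BREAKER):
    """Approximate SHOULD_LINEMERGE=false: cut at the first capture group.

    Only the captured newlines are dropped; the timestamp that follows them
    stays at the start of the next event.
    """
    events, start = [], 0
    for m in breaker.finditer(raw):
        events.append(raw[start:m.start(1)])
        start = m.end(1)
    events.append(raw[start:])
    return [e for e in events if e]


raw = (
    "2020-01-31 18:19:02,091 DEBUG [com.vendor.make.services.ServiceName] "
    "(pool-7-thread-44) - <Short Form: time elapsed 120, pause interval 360, "
    "workflows to start 0>\n"
    "2020-02-08 23:25:02,439 TRACE [com.vendor.services.ingest.ExternalIngestService] "
    "(pool-7-thread-28) - <JSON Feed:\n"
    "{\n"
    '"lastBuildDate" : "2020-02-09 05:13:34.034+0000",\n'
)
events = break_events(raw)
print(len(events))  # 2: the JSON lines stay with their TRACE header
```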


Cool, I didn't realize LINE_BREAKER= takes precedence over SHOULD_LINEMERGE=false. (But doesn't that mean that when LINE_BREAKER is defined, Splunk won't even look at the SHOULD_LINEMERGE setting? I.e. it would only break events on LINE_BREAKER regex matches, regardless of whether there are newlines? ...unless I'm missing something.)

That said, my goal is to have Splunk break only on what it considers valid timestamps, not to write every possible timestamp regex myself, for portability and maintenance reasons.

So I still need to figure this out.


As you said that every event begins on a new line, you could simply set SHOULD_LINEMERGE = false and use the default LINE_BREAKER, which breaks an event after every line. If you also have newlines that do not start a new event, you need to define a LINE_BREAKER for that.


An event beginning with a newline != there being no newlines within an event.

There are newlines in the event, lots of them; most of the JSONs have them. So following your suggestion would make it much worse...
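To make that objection concrete: with SHOULD_LINEMERGE = false and the default LINE_BREAKER of `([\r\n]+)`, every run of newlines becomes an event boundary. The Python sketch below (the `break_events` helper is hypothetical and only approximates Splunk) turns the first three lines of the JSON sample from the accepted answer into three separate events.

```python
import re

# Splunk's documented default LINE_BREAKER: any run of CR/LF characters.
DEFAULT_LINE_BREAKER = re.compile(r"([\r\n]+)")


def break_events(raw, breaker=DEFAULT_LINE_BREAKER):
    """Approximate SHOULD_LINEMERGE=false: split wherever the first capture
    group matches and drop the matched delimiter text."""
    events, start = [], 0
    for m in breaker.finditer(raw):
        events.append(raw[start:m.start(1)])
        start = m.end(1)
    events.append(raw[start:])
    return [e for e in events if e]


raw = (
    "2020-02-08 23:25:02,439 TRACE [com.vendor.services.ingest.ExternalIngestService] "
    "(pool-7-thread-28) - <JSON Feed:\n"
    "{\n"
    '"lastBuildDate" : "2020-02-09 05:13:34.034+0000",\n'
)
print(len(break_events(raw)))  # 3: the header, "{" and the lastBuildDate line
```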