- Find Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- Adding or removing the append command changes a pr...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi all,

I'm calculating the average electrical energy consumption per produced piece from today of one of our production machines.

Then I want to know the percentage in which this value differs from the average of the last 30 days.

The average of the last 30 days is stored in a summary index as one value per day.

For just this moment, the verified value of the total electrical energy consumption (field "elEnergie1") is 18 kWh. But every new search returns a value which alters between 55 and 65 kWh, sometimes around 22 too.

This is the code:

index=machinedata_w05 source=W05WTSema4IV320732 name=S3.Energiedaten.Wirkenergie_Tag OR name=S7.Prozessdaten.wst_gesamt earliest=@d

| eval {name}=value

| eval day = strftime(_time, "%d.%m.%Y")

| rename S3.Energiedaten.Wirkenergie_Tag as elEnergie1

S7.Prozessdaten.wst_gesamt as Stk

| table _time elEnergie1 day Stk

| filldown elEnergie1

| autoregress elEnergie1

| table _time elEnergie1 elEnergie1_p1 day Stk

| where elEnergie1!="" OR elEnergie1_p1!="" OR Stk!=""

| eval diff=elEnergie1_p1-elEnergie1

| table _time elEnergie1 elEnergie1_p1 diff day Stk

| where diff>0 OR Stk!=""

| stats first(_time) as _time sum(diff) as elEnergie1 range(Stk) as Stk by day

| where Stk!=0

| eval elEnergie1Stk = round(elEnergie1/Stk, 5),

elEnergie1=round(elEnergie1, 2)

| table elEnergie1Stk elEnergie1 Stk

| fields - _time

| append

[

| search index=machinedata_w05_sum app=Medienverbrauch medium="el.Energie" machine=Sema4 earliest=-30d

| stats avg(Verbrauch_elEnergie_pro_Stk) as avgliter

| fields avgliter

| eval avgliter=round(avgliter, 5)

]

| filldown

| eval abw = round((if(isnull(elEnergie1Stk), -1, elEnergie1Stk)-avgliter)/avgliter*100, 1)

| table abw elEnergie1Stk avgliter elEnergie1 Stk

| where abw!=""

| eval abw=if(abw<100, "---", abw)

After some trial end error I found out that if I just delete the append command, the energy consumption is calculated correctly (the depending calculations are then of course wrong, but that's not the point).

So my question is:

Why does removing/adding the append command changes the value of a previous calculated field?

I have absolutely no idea what is happening here!?!?

(If you want to know more about what the whole search does just ask me)

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

index=machinedata_w05_sum app=Medienverbrauch medium="el.Energie" machine=Sema4 earliest=-30d

| stats avg(Verbrauch_elEnergie_pro_Stk) as avgliter

| fields avgliter

| eval avgliter=round(avgliter, 5)

| append [ search index=machinedata_w05 source=W05WTSema4IV320732 name=S3.Energiedaten.Wirkenergie_Tag OR name=S7.Prozessdaten.wst_gesamt earliest=@d

| eval {name}=value

| eval day = strftime(_time, "%d.%m.%Y")

| rename S3.Energiedaten.Wirkenergie_Tag as elEnergie1

S7.Prozessdaten.wst_gesamt as Stk

| table _time elEnergie1 day Stk

| filldown elEnergie1

| autoregress elEnergie1

| table _time elEnergie1 elEnergie1_p1 day Stk

| where elEnergie1!="" OR elEnergie1_p1!="" OR Stk!=""

| eval diff=elEnergie1_p1-elEnergie1

| table _time elEnergie1 elEnergie1_p1 diff day Stk

| where diff>0 OR Stk!=""

| stats first(_time) as _time sum(diff) as elEnergie1 range(Stk) as Stk by day

| where Stk!=0

| eval elEnergie1Stk = round(elEnergie1/Stk, 5),

elEnergie1=round(elEnergie1, 2)

| table elEnergie1Stk elEnergie1 Stk

| fields - _time

| reverse]

| reverse

| filldown

| eval abw = round((if(isnull(elEnergie1Stk), -1, elEnergie1Stk)-avgliter)/avgliter*100, 1)

| table abw elEnergie1Stk avgliter elEnergie1 Stk

| where abw!=""

| eval abw=if(abw<100, "---", abw)

I think this subsearch is too much, so I modify this to turn it over.

How about this?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

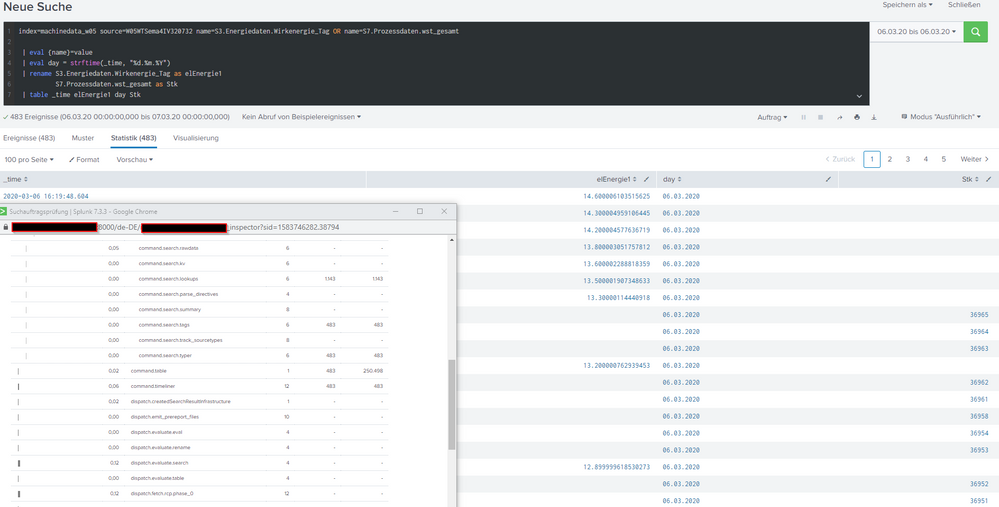

To add to to4kawa's answer an other solution I stumbled upon myself:

In the job inspector I saw that the table command had appr. 1000 inputs and 700000 (?!?!) outputs.

If I run just the first few lines of the search:

index=machinedata_w05 source=W05WTSema4IV320732 name=S3.Energiedaten.Wirkenergie_Tag OR name=S7.Prozessdaten.wst_gesamt

| eval {name}=value

| eval day = strftime(_time, "%d.%m.%Y")

| rename S3.Energiedaten.Wirkenergie_Tag as elEnergie1

S7.Prozessdaten.wst_gesamt as Stk

| table _time elEnergie1 day Stk

the table command takes 483 inputs and gives out 250498 outputs.

The only other entries with a high event count are

0,11 dispatch.stream.remote 8 - 134.974

0,05 dispatch.stream.remote.splunk03w05.mycompany.net 4 - 65.486

0,05 dispatch.stream.remote.splunk02w05.mycompany.net 4 - 69.488

If anybody knows whats going on I would be happy if you let me know it.

When I remove all table commands except for the last one in the original search and substitute them with the fields command everything works as expected!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

index=machinedata_w05_sum app=Medienverbrauch medium="el.Energie" machine=Sema4 earliest=-30d

| stats avg(Verbrauch_elEnergie_pro_Stk) as avgliter

| fields avgliter

| eval avgliter=round(avgliter, 5)

| append [ search index=machinedata_w05 source=W05WTSema4IV320732 name=S3.Energiedaten.Wirkenergie_Tag OR name=S7.Prozessdaten.wst_gesamt earliest=@d

| eval {name}=value

| eval day = strftime(_time, "%d.%m.%Y")

| rename S3.Energiedaten.Wirkenergie_Tag as elEnergie1

S7.Prozessdaten.wst_gesamt as Stk

| table _time elEnergie1 day Stk

| filldown elEnergie1

| autoregress elEnergie1

| table _time elEnergie1 elEnergie1_p1 day Stk

| where elEnergie1!="" OR elEnergie1_p1!="" OR Stk!=""

| eval diff=elEnergie1_p1-elEnergie1

| table _time elEnergie1 elEnergie1_p1 diff day Stk

| where diff>0 OR Stk!=""

| stats first(_time) as _time sum(diff) as elEnergie1 range(Stk) as Stk by day

| where Stk!=0

| eval elEnergie1Stk = round(elEnergie1/Stk, 5),

elEnergie1=round(elEnergie1, 2)

| table elEnergie1Stk elEnergie1 Stk

| fields - _time

| reverse]

| reverse

| filldown

| eval abw = round((if(isnull(elEnergie1Stk), -1, elEnergie1Stk)-avgliter)/avgliter*100, 1)

| table abw elEnergie1Stk avgliter elEnergie1 Stk

| where abw!=""

| eval abw=if(abw<100, "---", abw)

I think this subsearch is too much, so I modify this to turn it over.

How about this?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This works! Thanks a lot!

I checked the separate searches again.

The search in index machinedata_w05 which searches through appr. 40 Million events per day only runs for 0.2 seconds whereas the search in index machinedata_w05_sum which searches through 6000 events per day runs for 2 seconds (both overall events without filters).

Can this be the cause?

I really try to understand it but it's not clear to me yet.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If it isn't working after the append then it must be corruption of the filldown.

This is one reason that I NEVER use filldown without arguments.

In other words, the events returned from the append EITHER:

1: have gobs and gobs of fields and rows so the unconstrained filldown causes RAM to be exhaused

OR

2: have fields with values that causes abw to get miscalculated.

It is probably the latter. You are probably NOT meaning for the appended events to obtain values for elEnergie1Stk and avgliter, etc. Fix your filldown and/or your math to compensate correctly for the appended events.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I've got only one event returned from append in a new row.

Thefilldownensures that the returned value is in the same row as the results of the base search.

If I useappendcols, thefilldownisn't necessary anymore --> results are still wrong.abwis calculated correctly (the value is wrong becauseelEnergie1is wrong but the math is correct).avgliteris the correct value (0.05453 in this case). OnlyelEnergie1is way to high. EvenStkis right, onlyelEnergie1is wrong.

You are probably NOT meaning for the

appended events to obtain values for

elEnergie1Stk and avgliter, etc.

What does this mean exactly (sorry, not a native speaker)?

I only append one event which is avgliter.

I still can't get my head around it why this is behaving like it is.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It probably doesn't, not directly. If you inspect your job you will probably find that adding the append is causing your search to either take too much time or use too much disk space leaving your job in an incomplete, had-to-abort, finalized state, instead of the correct, normal, complete Done state. That is why we stress so hard to avoid join and append. It can almost always be done without them (I have only seen 1 case where we could not).

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi woodcock,

thanks for your reply!

I don't think this is the issue because the search runs only 0.5 seconds. The search has only 483 events where each event looks like this:

{"name":"S3.Energiedaten.Wirkenergie_Tag","value":15.000007629394531}

The subsearch only has 9 events where each event looks like this:

03/05/2020 23:42:01 +0100, info_min_time=1546297200.000, info_max_time=1583449200.000, info_search_time=1583488430.469, app=Medienverbrauch, area="Wärmetauscherfertigung", medium="el.Energie", machine=Sema4, IV_number=320732, unit_Verbrauch_gesamt=kWh, Verbrauch_elEnergie_pro_Stk="0.06650", Verbrauch_elEnergie_gesamt="52.80", unit_Verbrauch_Stk="kWh/Stk"

I already have a similar search in another dashboard which runs without problems. The only difference is in a few evals and the source.

But where do I find exactly the job status "finalized" to check if your suggestions is on point?

The same issue is also with appendcols.

How can I do it without append? Can you please give me a hint?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

limits.conf

if your append search consume time, the error will cause.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Where is this written exactly? I only found that append will exceed maxout specified for subsearch in the limits.conf.

And the subsearch-options are configured as default like written in the docs. Like I said this are only 32 events before the stats command and runs in 2.1 seconds. This is way below the limits.