playbook running multiple times on single container

Using the SOAR export app in Splunk, we are pulling certain alerts into SOAR. Depending on the IP, the artifacts are grouped into a single container. Now I need to create one ticket for each container using a playbook. But if the container has multiple artifacts, it creates one ticket for each artifact. Any idea how to solve this?

I'm a bit confused about how you're getting data to your SOAR instance - you say you're pulling, but you're also using the Splunk App for SOAR Export? When I hear "pull", I assume you have the SOAR App for Splunk configured to pull events in from SOAR (yes, I know the app names are all similar, and this definitely caused some confusion for my team early on).

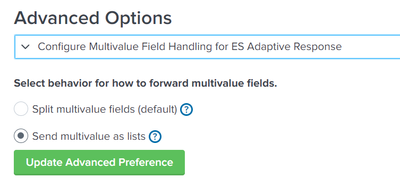

Assuming you just have the event forwarding configured, you can maybe try changing your advanced options to this:

This would send everything as a single artifact, rather than the multiple you have.

We are not using the ES app. This option is for the ES app, right? We are forwarding the alerts from Splunk to SOAR using the SOAR export app.

@ansusabu_25 the app is not ES-specific and can be installed on any SH. You should have the option already shown to you, and it will stop multiple artifacts from being created. At the moment, multiple artifacts are created because there are one or more MV fields in the results data. If you apply the setting already shown, you will get 1 event with 1 artifact in it, but the values of some fields will be lists, which you will need to handle in playbooks, i.e. configure any blocks to process both single items and lists. Previously I have used a playbook on ingest to split the MV fields into artifacts without all the additional duplication.

Hope this helps! Happy SOARing!!
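As a rough illustration of the two ideas above (handling a field that may be a single value or a list, and splitting an MV field into one record per value), here is a minimal plain-Python sketch. It does not use the SOAR playbook API; the helper names `as_list` and `split_multivalue` and the CEF field names are hypothetical and only meant to show the shape of the logic you would put into a custom code block.

```python
from typing import Any, Dict, List


def as_list(value: Any) -> List[Any]:
    """Return `value` as a list so later code only has to handle one shape."""
    if value is None:
        return []
    if isinstance(value, (list, tuple)):
        return list(value)
    return [value]


def split_multivalue(cef: Dict[str, Any], field: str) -> List[Dict[str, Any]]:
    """Build one CEF dict per value of `field`; other fields are copied as-is."""
    values = as_list(cef.get(field))
    if not values:
        return [dict(cef)]
    return [{**cef, field: v} for v in values]


# Hypothetical artifact data; the field names are illustrative only.
cef = {"sourceAddress": "10.0.0.5", "destinationPort": [22, 443]}
per_value = split_multivalue(cef, "destinationPort")
```

Each entry in `per_value` could then be turned into its own artifact, while `as_list` alone is enough if you prefer to keep the single artifact and loop over the values inside the playbook.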

A quick win will be tagging the container.

You can edit your playbook to check whether the container's tag is XYZ, which it will not be on the first run (for the first artifact). Once you call your action to create an incident, change the tag of the container to XYZ. Then, even if the next artifact triggers the playbook, the tag will already be XYZ and the create incident action will not be called, because the condition is no longer satisfied.
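The tag check described above could look roughly like the sketch below. It is plain Python with the SOAR calls replaced by injected callables, so `add_tag`, `create_ticket` and the tag name `ticket_created` (standing in for "XYZ") are all hypothetical; in a real playbook they would map to whatever tag and ticketing actions you use.

```python
from typing import Callable, Iterable

MARKER_TAG = "ticket_created"  # hypothetical tag name, stands in for "XYZ"


def create_ticket_once(
    current_tags: Iterable[str],
    add_tag: Callable[[str], None],
    create_ticket: Callable[[], None],
) -> bool:
    """Create a ticket only if the container does not carry the marker tag."""
    if MARKER_TAG in set(current_tags):
        return False  # an earlier run already handled this container
    add_tag(MARKER_TAG)  # tag before the ticket call (see note below)
    create_ticket()
    return True


# Simulate three artifacts triggering three runs one after another.
tags = []
tickets = []
for _ in range(3):
    create_ticket_once(tags, tags.append, lambda: tickets.append("ticket"))
```

In this sketch the tag is set before the ticket is created, which is a small deviation from the order described above. It narrows the window in which a parallel run can still see an untagged container, but it does not remove that window, and if ticket creation fails the tag is already in place.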

When creating artifacts manually (via REST, for example), you can force the parameter "run_automation" to false, which prevents the new artifact from triggering playbook execution. In your case, though, the data is coming from the export app, so maybe there is a setting in there to change this behavior (honestly I don't recall one, but maybe you can find something interesting).
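For the manual REST case, a payload with "run_automation" switched off might be built as in the sketch below. Only `run_automation` comes from the post above; the other field names, the container ID and the endpoint mentioned in the comment are assumptions to verify against the SOAR REST documentation for your version. The sketch only builds the JSON body and does not send anything.

```python
import json
from typing import Any, Dict


def build_artifact_payload(
    container_id: int, name: str, cef: Dict[str, Any]
) -> Dict[str, Any]:
    """Build an artifact body that should not trigger playbooks on creation."""
    return {
        "container_id": container_id,  # assumed field name
        "name": name,                  # assumed field name
        "cef": cef,                    # assumed field name
        "run_automation": False,       # the parameter named in the post
    }


# Hypothetical values for illustration only.
payload = build_artifact_payload(42, "Example artifact", {"sourceAddress": "10.0.0.5"})
body = json.dumps(payload)
# `body` would then be POSTed to the artifact endpoint of your SOAR instance
# (assumed here to be /rest/artifact) with your usual authentication.
```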

I have tried the option of setting the tag. The problem is that by the time the run for the 1st artifact sets the tag, the run for the 2nd one has already passed the decision block, so the playbook still creates a duplicate.
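The timing problem described here is a check-then-act race. The following plain-Python simulation is only a model of it, not of how SOAR actually schedules playbook runs (which I have not seen documented); the `Container` class and the tag name are hypothetical. It contrasts runs that happen strictly one after another with runs whose decision blocks are all evaluated before any of them has tagged the container.

```python
from typing import List

MARKER_TAG = "ticket_created"  # hypothetical tag name


class Container:
    """Tiny stand-in for a SOAR container: tags plus a ticket counter."""

    def __init__(self) -> None:
        self.tags: List[str] = []
        self.tickets = 0


def decision_passes(container: Container) -> bool:
    """Step 1 of a run: the decision block reads the current tags."""
    return MARKER_TAG not in container.tags


def act(container: Container) -> None:
    """Step 2 of a run: create the ticket, then tag the container."""
    container.tickets += 1
    container.tags.append(MARKER_TAG)


def run_sequential(n_runs: int) -> int:
    """Each run finishes before the next one starts."""
    c = Container()
    for _ in range(n_runs):
        if decision_passes(c):
            act(c)
    return c.tickets


def run_interleaved(n_runs: int) -> int:
    """Every run evaluates its decision before any run has tagged."""
    c = Container()
    passed = [decision_passes(c) for _ in range(n_runs)]
    for ok in passed:
        if ok:
            act(c)
    return c.tickets
```

With two runs, `run_sequential(2)` yields one ticket while `run_interleaved(2)` yields two, which matches the behavior reported in this thread: the tag only helps runs that start after it has been written.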

I have this same problem. If a container has multiple artifacts, for example 10, then with the tagging approach the duplicate actions are usually limited to 1-3 instead of 10.

I haven't been able to find low-level details about how the Python scripts are executed at an interpreter/ingestion level, and I don't think they exist publicly, which is unfortunate because the power of the platform lies in being able to use Python to efficiently process data. The VPE makes this clunky.

I spent 3-4 years on Palo Alto's XSOAR as the primary engineer, and for all its quirks, Palo Alto has produced way better documentation on their SOAR than Splunk (Palo Alto overhauled their documentation when they acquired Demisto). I'm about a year into using Splunk SOAR, and for all the quirks I had to handle using Palo Alto's XSOAR, I wish I could go back to it. Maybe my opinion/preference will change, but unless Splunk produces better documentation and opens up some lower-level documentation to the public/community, I'm doubtful it will.

Palo Alto's XSOAR has a feature called Pre-Processing rules, which allows you to filter/dedup and transform data coming into the SOAR before playbook execution. I wish Splunk SOAR had something similar. That way, ingestion/deduplication logic (if you can even call tagging "that") wouldn't be intermingled in the same area as the "OAR" logic of the playbook, and race conditions could hopefully be avoided.

The problem with "Multi-Value" lists is that they screw up pre-existing logic. Maybe I'm missing something, but that option should be configurable in the Saved Search/Alert + Action Splunk App for SOAR Export, so that it could be set on a per-alert basis.

6 years ago I chose Demisto over Phantom while working for a Fortune 300 company; if I could have my way right now, I'd probably go with my first choice.

P.S. To be fair to Splunk SOAR, maybe there's some feature I'm overlooking.