- Find Answers

- :

- Premium Solutions

- :

- Splunk SOAR

- :

- What is the proper way to purge SOAR containers/ev...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello,

What is the proper way to purge Splunk SOAR/phantom containers from the database. It seems that deleting a container only hides it from the UI.

Is there a way to purge containers with certain filters for example purge containers where label="secific_label" and created before 6 months ... ?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@ouss took me a while to find the docs but here: https://my.phantom.us/kb/80/

optional arguments:

-h, --help show this help message and exit

-b, --list-labels Lists available container labels and exits

-d, --dry-run Do not actually delete, just show output

--non-interactive Do not block on user input. This flag is suitable for running as part of an unsupervised script.

-c CHUNK_SIZE, --chunk-size CHUNK_SIZE

Max number of containers to delete in a single transaction. Max 10000

-r MAX_RETRY_COUNT, --max-retry-count MAX_RETRY_COUNT

Max number of retries in the case of error.

Filters:

Use these to select the containers you want to delete. Supply at least one.

-i IDS, --ids IDS Comma separated list of IDs of the container to delete

-l LABEL, --label LABEL

Only delete containers with this label

-m MATCHING, --matching MATCHING

Title matches this string (case insensitive)

--before BEFORE Only delete containers last updated before this date/time.

--after AFTER Only delete containers last updated after this date/time.

--status STATUS Only delete containers having these status values (comma separated list).

Not sure why it's not on Splunk docs yet but I have raised a feedback request to get it ported across.

Please mark as solution if this helps.

Tom

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Googled to try and find a solution to this problem and came across this answer. Also found this Splunk docs article: https://docs.splunk.com/Documentation/SOARonprem/5.5.0/Admin/DataRetention

Would the above accomplish not wanting containers and their data past X years? Would be really great if this was the case.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey all,

Im not a postgre or linux master but I found something.

Based on this article:

https://docs.splunk.com/Documentation/Phantom/4.10.7/Install/UpgradeOffline

I notice how to run vacuumdb in phantom Centos 7 environment.

In the article there is a line, I know that this arcitle is for old version 4 but give me some ideas how to run vacuumdb.

To improve database performance, after completing the upgrade, run: su - postgres -c '/usr/pgsql-11/bin/vacuumdb -h /tmp --all --analyze-in-stages'

After that there is the similar command but from phenv.

/<PHANTOM_HOME>/bin/phenv /<PHANTOM_HOME>/usr/postgresql/bin/vacuumdb -h /tmp --all --analyze-in-stages

Based on this i understand that --analyze-in-stages - only show statistics.

After that I open folder:

cd /opt/phantom/usr/postgresql/bin/

Next:

phenv vacuumdb -h /tmp --all --analyze-in-stages

But this command shows me only stats.

But from this article I notice that vacuumdb command has parameter -full

https://stackoverflow.com/questions/51204561/postgres-vacuum-doesnt-free-up-space

Lets check vacuumdb documentation:

https://www.postgresql.org/docs/current/app-vacuumdb.html

--analyze-in-stages

Only calculate statistics for use by the optimizer (no vacuum), like --analyze-only. Run three stages of analyze; the first stage uses the lowest possible statistics target (see default_statistics_target) to produce usable statistics faster, and subsequent stages build the full statistics.

This option is only useful to analyze a database that currently has no statistics or has wholly incorrect ones, such as if it is newly populated from a restored dump or by pg_upgrade. Be aware that running with this option in a database with existing statistics may cause the query optimizer choices to become transiently worse due to the low statistics targets of the early stages.

--all

Vacuum all databases.

--full

Perform “full” vacuuming.

So for general what was done to remove containers and reduce db disc space in Splunk SOAR 5.2:

1. cd /opt/phantom/bin/

2. phenv python /opt/phantom/bin/delete_containers.pyc --label test --before "2020-01-01T12:00:00Z"

3. cd /opt/phantom/usr/postgresql/bin/

4. phenv vacuumdb -h /tmp --all --full

This reduce a space in my case.

! Warning!,

Im not sure if this method is proper, but it works in my case. You can test it on your test environment and confirm if it works for you also. Maybe someone from Splunk Suport will be able to confirm this solution.

General summary:

phenv vacuumdb -h /tmp --all

This command - "The standard form of VACUUM removes dead row versions in tables and indexes and marks the space available for future reuse. However, it will not return the space to the operating system,"

But this command:

phenv vacuumdb -h /tmp --all --full

Although VACUUM FULL can be used to shrink a table back to its minimum size and return the disk space to the operating system, there is not much point in this if the table will just grow again in the future. Thus, moderately-frequent standard VACUUM runs are a better approach than infrequent VACUUM FULL runs for maintaining heavily-updated tables.

I hope it helps.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi All,

Would like to re-open this discussion as what OP is seeing with disk space is a constant pain point for me too. $PHANTOM_HOME starts creeping up periodically eventually getting up to >95%. Regardless of what I am removing with the aforementioned scripts (I have semi automated and run manually from time to time). It does not help...only solution I have found is to continually grow the FS. I have come to the conclusion that a lot of this space is eaten up temporary files created by DB queries.

./data/db/base/pgsql_tmp/pgsql_tmp2178.xxxxx

Currently have over 8200 of these files in this directory. lol

All an assumption though.

Maybe I need to understand Postgres more. Just curious if anyone has additional input on this too?

Thanks,

Doug

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@ouss great question, honestly I am not sure as not too aware of postgres workings tbh.

I would also say I raised the request and the docs have been moved to here now: https://docs.splunk.com/Documentation/SOARonprem/5.3.1/Admin/DeleteContainers

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@ouss took me a while to find the docs but here: https://my.phantom.us/kb/80/

optional arguments:

-h, --help show this help message and exit

-b, --list-labels Lists available container labels and exits

-d, --dry-run Do not actually delete, just show output

--non-interactive Do not block on user input. This flag is suitable for running as part of an unsupervised script.

-c CHUNK_SIZE, --chunk-size CHUNK_SIZE

Max number of containers to delete in a single transaction. Max 10000

-r MAX_RETRY_COUNT, --max-retry-count MAX_RETRY_COUNT

Max number of retries in the case of error.

Filters:

Use these to select the containers you want to delete. Supply at least one.

-i IDS, --ids IDS Comma separated list of IDs of the container to delete

-l LABEL, --label LABEL

Only delete containers with this label

-m MATCHING, --matching MATCHING

Title matches this string (case insensitive)

--before BEFORE Only delete containers last updated before this date/time.

--after AFTER Only delete containers last updated after this date/time.

--status STATUS Only delete containers having these status values (comma separated list).

Not sure why it's not on Splunk docs yet but I have raised a feedback request to get it ported across.

Please mark as solution if this helps.

Tom

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @phanTom,

you are right the script works well and the containers data is purged from the database. However, I am noticing that the folder "/phantom/data/db/pg_wal" (45G) is huge compared to "/phantom/data/db/base" (4.5 G). From searchihng in Postgres forums it seems that pg_wal is supposed to be cleaned automatically by postgres ... any ideas on why this is not happening or from where I coud start troubleshooting 🙂 thank you in advance.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you for your reply @phanTom , I have executed this script and deleted approximately 10k containers with the artifacts, playbook runs and action runs associated to them ... However I do not seem to notice any changes on disk Space usage, (by the way my goal of purging containers is to free up some disk space). Is the script deleting the data from the database or should I follow further steps to delete this data from the database?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

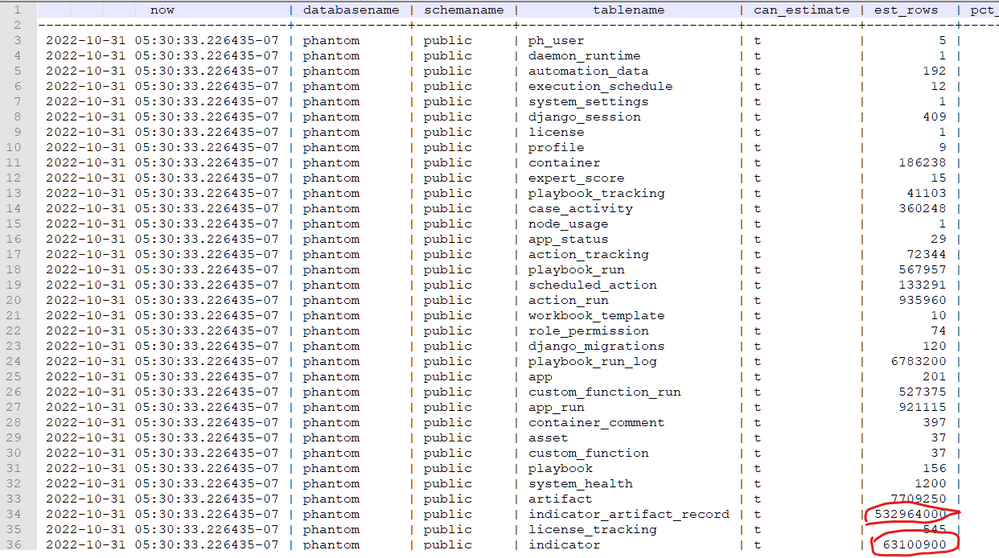

I had a case open with support on this same issue as continually adding space to the file system was not viable. Support indicated that cleaning out containers and all related 'stuff' was fine and all...but there is a need to reclaim space from the Postgres DB after running the cleanup commands for containers and related items (artifacts, etc). I also learned that I had A TON of Indicators and indicator_artifact_records eating up space in the DB as well that needed to be removed.

Once competed, we utilized the the Postgres VACUUM function.

https://www.postgresql.org/docs/current/sql-vacuum.html

There is a way to see top offenders of bloat in the database too, which will help show what's eating up space. Required a ~140 line query to add to the command (not sure if that should be shared here ❓). This is an example of what i ran to get that data:

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey @dbkuhn ,

There is any change of sharing this query?

Also, how did you cleaned up this indicator you mentioned?

I tried to do the cleanup via delete_containers.py and when I started vacuum process if filled up the disk as it needs kinda of the same amount of data to dispose in disk to process, so it was worse than I thought and now my dev environment is down with 20kb free space in the mount.

I'll have to struggle here to try to expand the mount first in order to be able to re-start the db and start the vacuum again.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Victor,

For the indicators I used the following (was recommended I did it in increments as it can be a lot, as I mentioned)...

For example:

WITH constants AS (

-- define some constants for sizes of things

-- for reference down the query and easy maintenance

SELECT current_setting('block_size')::numeric AS bs, 23 AS hdr, 8 AS ma

),

no_stats AS (

-- screen out table who have attributes

-- which dont have stats, such as JSON

SELECT table_schema, table_name,

n_live_tup::numeric as est_rows,

pg_table_size(relid)::numeric as table_size

FROM information_schema.columns

JOIN pg_stat_user_tables as psut

ON table_schema = psut.schemaname

AND table_name = psut.relname

LEFT OUTER JOIN pg_stats

ON table_schema = pg_stats.schemaname

AND table_name = pg_stats.tablename

AND column_name = attname

WHERE attname IS NULL

AND table_schema NOT IN ('pg_catalog', 'information_schema')

GROUP BY table_schema, table_name, relid, n_live_tup

),

null_headers AS (

-- calculate null header sizes

-- omitting tables which dont have complete stats

-- and attributes which aren't visible

SELECT

hdr+1+(sum(case when null_frac <> 0 THEN 1 else 0 END)/8) as nullhdr,

SUM((1-null_frac)*avg_width) as datawidth,

MAX(null_frac) as maxfracsum,

schemaname,

tablename,

hdr, ma, bs

FROM pg_stats CROSS JOIN constants

LEFT OUTER JOIN no_stats

ON schemaname = no_stats.table_schema

AND tablename = no_stats.table_name

WHERE schemaname NOT IN ('pg_catalog', 'information_schema')

AND no_stats.table_name IS NULL

AND EXISTS ( SELECT 1

FROM information_schema.columns

WHERE schemaname = columns.table_schema

AND tablename = columns.table_name )

GROUP BY schemaname, tablename, hdr, ma, bs

),

data_headers AS (

-- estimate header and row size

SELECT

ma, bs, hdr, schemaname, tablename,

(datawidth+(hdr+ma-(case when hdr%ma=0 THEN ma ELSE hdr%ma END)))::numeric AS datahdr,

(maxfracsum*(nullhdr+ma-(case when nullhdr%ma=0 THEN ma ELSE nullhdr%ma END))) AS nullhdr2

FROM null_headers

),

table_estimates AS (

-- make estimates of how large the table should be

-- based on row and page size

SELECT schemaname, tablename, bs,

reltuples::numeric as est_rows, relpages * bs as table_bytes,

CEIL((reltuples*

(datahdr + nullhdr2 + 4 + ma -

(CASE WHEN datahdr%ma=0

THEN ma ELSE datahdr%ma END)

)/(bs-20))) * bs AS expected_bytes,

reltoastrelid

FROM data_headers

JOIN pg_class ON tablename = relname

JOIN pg_namespace ON relnamespace = pg_namespace.oid

AND schemaname = nspname

WHERE pg_class.relkind = 'r'

),

estimates_with_toast AS (

-- add in estimated TOAST table sizes

-- estimate based on 4 toast tuples per page because we dont have

-- anything better. also append the no_data tables

SELECT now(), schemaname, tablename,

TRUE as can_estimate,

est_rows,

table_bytes + ( coalesce(toast.relpages, 0) * bs ) as table_bytes,

expected_bytes + ( ceil( coalesce(toast.reltuples, 0) / 4 ) * bs ) as expected_bytes

FROM table_estimates LEFT OUTER JOIN pg_class as toast

ON table_estimates.reltoastrelid = toast.oid

AND toast.relkind = 't'

),

table_estimates_plus AS (

-- add some extra metadata to the table data

-- and calculations to be reused

-- including whether we cant estimate it

-- or whether we think it might be compressed

SELECT current_database() as databasename,

schemaname, tablename, can_estimate,

est_rows,

CASE WHEN table_bytes > 0

THEN table_bytes::NUMERIC

ELSE NULL::NUMERIC END

AS table_bytes,

CASE WHEN expected_bytes > 0

THEN expected_bytes::NUMERIC

ELSE NULL::NUMERIC END

AS expected_bytes,

CASE WHEN expected_bytes > 0 AND table_bytes > 0

AND expected_bytes <= table_bytes

THEN (table_bytes - expected_bytes)::NUMERIC

ELSE 0::NUMERIC END AS bloat_bytes

FROM estimates_with_toast

UNION ALL

SELECT current_database() as databasename,

table_schema, table_name, FALSE,

est_rows, table_size,

NULL::NUMERIC, NULL::NUMERIC

FROM no_stats

),

bloat_data AS (

-- do final math calculations and formatting

select current_database() as databasename,

schemaname, tablename, can_estimate,

table_bytes, round(table_bytes/(1024^2)::NUMERIC,3) as table_mb,

expected_bytes, round(expected_bytes/(1024^2)::NUMERIC,3) as expected_mb,

round(bloat_bytes*100/table_bytes) as pct_bloat,

round(bloat_bytes/(1024::NUMERIC^2),2) as mb_bloat,

table_bytes, expected_bytes, est_rows

FROM table_estimates_plus

)

-- filter output for bloated tables

SELECT now(), databasename, schemaname, tablename,

can_estimate,

est_rows,

pct_bloat, mb_bloat,

table_mb

FROM bloat_data

-- this where clause defines which tables actually appear

-- in the bloat chart

-- example below filters for tables which are either 50%

-- bloated and more than 20mb in size, or more than 25%

-- bloated and more than 1GB in size

WHERE ( pct_bloat >= 0 AND mb_bloat >= 0 )

OR ( pct_bloat >= 0 AND mb_bloat >= 0 )

ORDER BY pct_bloat DESC;

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey @ouss ,

Sorry to revive this but did you find anything? I'm going thru the same situation here, no luck on finding answers yet.