- Ingest daemon troubleshooting: Where to look for t...

Hi folks,

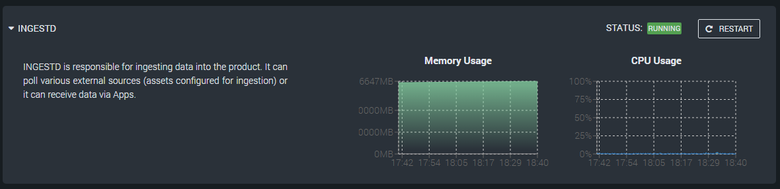

The ingest daemon on our on-premises SOAR 5.3.1 instance is behaving oddly in terms of memory management, and I was wondering if someone could give me pointers on where to look for what is going wrong.

In essence, ingestd keeps using more and more virtual memory until it maxes out at 256 GB, and then it stops ingesting data. Restarting the service does solve the issue.
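To put numbers on that growth between restarts, a small script could log the daemon's memory at intervals. The following is only a rough sketch: it assumes a Linux host with `/proc`, and it assumes the process can be found by the substring `ingestd` in its command line, which may not match how the daemon actually appears on a given install. The function names and the 60-second interval are illustrative.

```python
import os
import re
import time


def parse_status(text):
    """Extract VmSize and VmRSS (in kB) from the text of /proc/<pid>/status."""
    fields = {}
    for line in text.splitlines():
        match = re.match(r"^(VmSize|VmRSS):\s+(\d+)\s+kB", line)
        if match:
            fields[match.group(1)] = int(match.group(2))
    return fields


def find_pids(needle):
    """Return PIDs whose command line contains `needle` (assumed identifier)."""
    pids = []
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue
        try:
            with open(f"/proc/{entry}/cmdline", "rb") as handle:
                cmdline = handle.read().replace(b"\0", b" ").decode(errors="replace")
        except OSError:
            continue  # process exited or is not readable
        if needle in cmdline:
            pids.append(int(entry))
    return pids


def log_memory(needle="ingestd", interval_seconds=60):
    """Print one timestamped line per matching process, forever."""
    while True:
        for pid in find_pids(needle):
            try:
                with open(f"/proc/{pid}/status") as handle:
                    usage = parse_status(handle.read())
            except OSError:
                continue
            stamp = time.strftime("%Y-%m-%d %H:%M:%S")
            print(f"{stamp} pid={pid} VmSize={usage.get('VmSize')}kB "
                  f"VmRSS={usage.get('VmRSS')}kB")
        time.sleep(interval_seconds)


if __name__ == "__main__":
    log_memory()
```

Comparing the logged timestamps against ingestion and playbook activity might show whether the growth is steady or tied to particular events.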

I am thinking the root cause might be hiding in 3 places:

- poorly written playbooks - something might be wrong with the playbooks that we have. Some of them run as often as every 5 minutes, so I suppose they could cause resource starvation. I am not sure how to dig deeper for potential memory leaks here, though.

- container ingestion, or the lack of clean-up of closed containers - is it possible that just closing containers, without deleting them after X amount of time, can cause this?

- some weird bug that we've hit - I am not sure how likely this is, but I saw that a memory usage bug (PSAAS-9663) was fixed in version 5.3.4, so it is on my list if nothing else turns up.

One relevant point is that this started occurring after we migrated from 4.9.X to our current version, so I cannot tell whether it is linked to the move to Python 3 playbooks or to the particular product version.

Any pointers to where/how to start looking for the root cause are appreciated.

Cheers.

So this turned out to be the PSAAS-8617 issue in 5.3.1. The only solution is to update to version 5.3.2 or later.
