unable to import entity, mstats or mcatalog comman...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

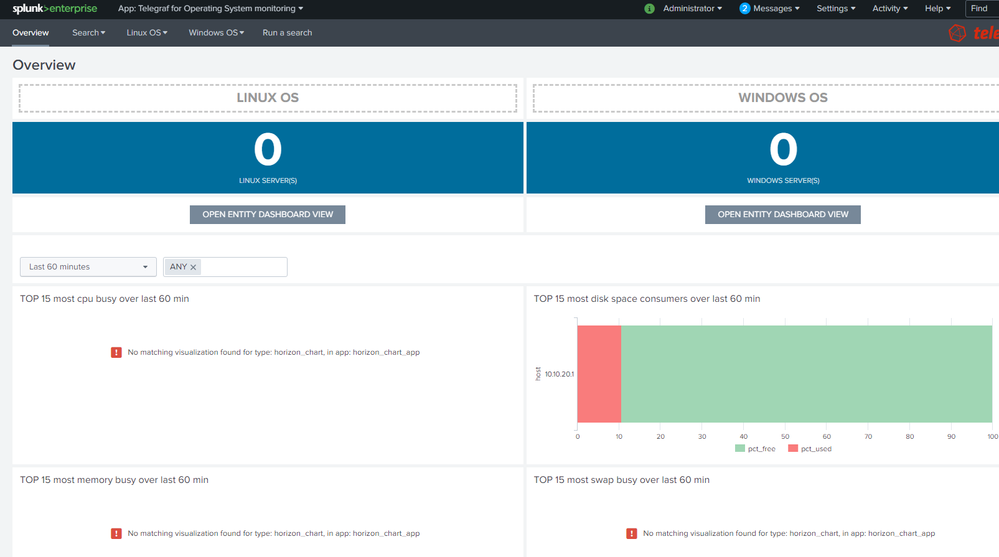

At the GUI (search head) level, I installed DA-ITSI-TELEGRAF-OS and telegraf-os.

At the indexer level, I installed TA-influxdata-telegraf.

At the HF level, I installed TA-influxdata-telegraf.

The Telegraf agent forwards OPNsense 19.1 event data.

I see index = telegraf is searchable, and fields are extracted, such as metric_name, etc.

When I try to import via ITSI > Configure > Create Entity > Import from Search > Modules, and choose ITSI module for Influxdata Telegraf (OS) > OS-Hosts entity import linux, it says: "No or insufficient data found."

I tried these searches manually:

```
| mstats latest(_value) as value where `telegraf_index` metric_name="system.n_cpus" OR metric_name="mem.total" OR metric_name="swap.total" by metric_name, host
```

```
| mstats latest(_value) as value where `telegraf_index` metric_name="system.n_cpus" OR metric_name="mem.total" OR metric_name="swap.total"
```

```
| mcatalog values(_dims) as dimensions values(metric_name) as metric_name where index=telegraf metric_name=*
```

Each of them returns 0 results.

The Telegraf OS monitoring app also shows no visualizations.

Am I missing something? Thank you,


Hi @deodion!

"I see index = telegraf is searchable, and fields are extracted, such as metric_name, etc."

--> This is where the issue comes from: the index should be a metric index, not an event index. Metric indexes cannot be searched without the mstats or mcatalog commands.

Please review your index configuration according to:

https://da-itsi-telegraf-kafka.readthedocs.io/en/latest/kafka_monitoring.html#splunk-configuration

Guilhem
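For reference, the indexes.conf setting that makes an index a metric index is `datatype = metric`; when it is absent, Splunk treats the index as an event index. The Python sketch below is a hypothetical helper (`index_datatype`), not part of any of the apps: it reads an indexes.conf-style snippet with the standard `configparser` module and reports the datatype of one stanza. The sample stanza, its paths, and the index name `telegraf` are illustrative assumptions.

```python
import configparser

# Illustrative indexes.conf stanza; the index name and paths are assumptions.
SAMPLE_INDEXES_CONF = """
[telegraf]
homePath = $SPLUNK_DB/telegraf/db
coldPath = $SPLUNK_DB/telegraf/colddb
thawedPath = $SPLUNK_DB/telegraf/thaweddb
datatype = metric
"""


def index_datatype(conf_text: str, index_name: str) -> str:
    """Return the datatype of one index stanza, defaulting to "event"."""
    # interpolation=None keeps values such as $SPLUNK_DB exactly as written.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    if not parser.has_section(index_name):
        raise KeyError(f"no [{index_name}] stanza found")
    # Splunk's default datatype is "event" when the key is not set.
    return parser.get(index_name, "datatype", fallback="event").strip().lower()


if __name__ == "__main__":
    print(index_datatype(SAMPLE_INDEXES_CONF, "telegraf"))  # metric
```

Splunk .conf files are close to, but not exactly, INI; a real file with repeated keys may need `ConfigParser(strict=False)`.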


Hi,

Can you please paste an example of the telegraf.conf you are using?

FYI, the apps expect the default metric names.

Can you please run the following command and share the results:

```
| mcatalog values(metric_name) where index=telegraf earliest=-4h latest=now
```

Guilhem


```toml
[global_tags]

[agent]
  interval = "10s"
  round_interval = false
  metric_batch_size = 1000
  metric_buffer_limit = 10000
  collection_jitter = "0s"
  flush_jitter = "0s"
  precision = ""
  debug = false
  quiet = true
  hostname = "10.10.20.1"
  omit_hostname = false

[[outputs.graphite]]
  servers = ["10.10.20.103:2003"]
  timeout = 2
  insecure_skip_verify = false
  graphite_tag_support = true

[[inputs.cpu]]
  percpu = true
  totalcpu = true
  collect_cpu_time = true

[[inputs.disk]]

[[inputs.diskio]]

[[inputs.mem]]

[[inputs.processes]]

[[inputs.swap]]

[[inputs.system]]

[[inputs.net]]

[[inputs.pf]]
```


```
dimensions
cpu
device
fstype
interface
mode
name
path
```


```
metric_name
cpu.time_guest
cpu.time_guest_nice
cpu.time_idle
cpu.time_iowait
cpu.time_irq
cpu.time_nice
cpu.time_softirq
cpu.time_steal
cpu.time_system
cpu.time_user
cpu.usage_guest
cpu.usage_guest_nice
cpu.usage_idle
cpu.usage_iowait
cpu.usage_irq
cpu.usage_nice
cpu.usage_softirq
cpu.usage_steal
cpu.usage_system
cpu.usage_user
disk.free
disk.inodes_free
disk.inodes_total
disk.inodes_used
disk.total
disk.used
disk.used_percent
diskio.io_time
diskio.iops_in_progress
diskio.read_bytes
diskio.read_time
diskio.reads
diskio.weighted_io_time
diskio.write_bytes
diskio.write_time
diskio.writes
mem.active
mem.available
mem.available_percent
mem.buffered
mem.cached
mem.commit_limit
mem.committed_as
mem.dirty
mem.free
mem.high_free
mem.high_total
mem.huge_page_size
mem.huge_pages_free
mem.huge_pages_total
mem.inactive
mem.low_free
mem.low_total
mem.mapped
mem.page_tables
mem.shared
mem.slab
mem.swap_cached
mem.swap_free
mem.swap_total
mem.total
mem.used
mem.used_percent
mem.vmalloc_chunk
mem.vmalloc_total
mem.vmalloc_used
mem.wired
mem.write_back
mem.write_back_tmp
net.bytes_recv
net.bytes_sent
net.drop_in
net.drop_out
net.err_in
net.err_out
net.packets_recv
net.packets_sent
processes.blocked
processes.idle
processes.running
processes.sleeping
processes.stopped
processes.total
processes.unknown
processes.wait
processes.zombies
swap.free
swap.in
swap.out
swap.total
swap.used
swap.used_percent
```
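One quick way to compare a catalog like this with what the entity import search needs is to check it against the metric names that search filters on: system.n_cpus, mem.total and swap.total, plus the kernel.* pattern used in its join. The Python sketch below is a hypothetical helper, `missing_metrics`, that takes the metric_name values from the mcatalog output and returns the expected patterns that nothing matches. The pattern list is an assumption copied from the searches in this thread, not read from the apps themselves.

```python
from __future__ import annotations

from fnmatch import fnmatchcase
from typing import Sequence

# Patterns taken from the searches in this thread (assumption: not read from the apps).
# "kernel.*" is matched as a wildcard, like metric_name="kernel.*" in SPL.
EXPECTED_PATTERNS = ("system.n_cpus", "mem.total", "swap.total", "kernel.*")


def missing_metrics(catalog: Sequence[str], patterns: Sequence[str] = EXPECTED_PATTERNS) -> list[str]:
    """Return each expected pattern that matches no metric_name in `catalog`."""
    return [p for p in patterns if not any(fnmatchcase(name, p) for name in catalog)]


if __name__ == "__main__":
    sample = ["cpu.usage_idle", "mem.total", "swap.total"]
    print(missing_metrics(sample))  # ['system.n_cpus', 'kernel.*']
```

Run against the list above, it would flag system.n_cpus and kernel.*, since neither a system.* nor a kernel.* name appears in that output.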


Right, this does not look bad.

Is the Splunk host metadata set correctly?

Let's take one of the simple searches that underlie the entity discovery:

```
| mstats latest(_value) as value where index=telegraf metric_name="system.n_cpus" OR metric_name="mem.total" OR metric_name="swap.total" by metric_name, host
```

Does this report anything?

If not, does it return results if you remove host from the by clause?

For example:

```
| mstats latest(_value) as value where index=telegraf metric_name="system.n_cpus" OR metric_name="mem.total" OR metric_name="swap.total" by metric_name
```


Yes, the first query (by metric_name, host) returns results, and they look correct.


OK @deodion,

First, the core application shows no results because it needs a few visualization add-ons that the applications use, as listed in the documentation:

https://telegraf-os.readthedocs.io/en/latest/deployment.html

https://splunkbase.splunk.com/app/3117

https://splunkbase.splunk.com/app/3166

Once you have deployed these two, you will no longer get these visualization errors on the home page.

Can you run the entity discovery search step by step:

```
| mstats latest(_value) as value where `telegraf_index` metric_name="system.n_cpus" OR metric_name="mem.total" OR metric_name="swap.total" by metric_name, host
| eval nb_cpus=case(metric_name="system.n_cpus", value), swap_total=case(metric_name="swap.total", value/1024/1024), mem_total=case(metric_name="mem.total", value/1024/1024)
| stats first(nb_cpus) as nb_cpus, first(mem_total) as mem_total, first(swap_total) as swap_total by host
| foreach *_total [ eval <<FIELD>> = round('<<FIELD>>', 0) ]
| eval itsi_role="telegraf_host", family="Linux"
| join type=inner host [ | mcatalog values(metric_name) as linux_kernel where index="telegraf" metric_name="kernel.*" by host ]
| fields - linux_kernel
| fields host, itsi_role, family, nb_cpus, mem_total, swap_total
```

Run this search in the ITSI namespace. If it does not return the results you expect, go line by line and re-execute it up to the point where you no longer get data.

I don't know why this would be failing, as your setup looks OK. Make sure the telegraf_index macro is set correctly as well (alternatively, use index=telegraf for testing purposes).

Let me know.
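To make the data flow of the entity search above easier to follow, here is a rough Python model of its eval, stats and foreach steps. It takes (metric_name, host, value) rows, as the mstats line would return them, keeps one value per field per host, converts mem.total and swap.total from bytes to MB (value/1024/1024), rounds them, and adds the itsi_role and family fields. The function name `build_entities` and the input shape are assumptions for illustration; the model does not cover the join, and Python's `round()` may treat exact .5 ties differently from SPL `round()`.

```python
from __future__ import annotations

from collections import defaultdict

BYTES_PER_MB = 1024 * 1024  # mirrors value/1024/1024 in the SPL eval

# metric_name -> (output field, divisor), taken from the eval line of the search.
FIELD_MAP = {
    "system.n_cpus": ("nb_cpus", 1),
    "mem.total": ("mem_total", BYTES_PER_MB),
    "swap.total": ("swap_total", BYTES_PER_MB),
}


def build_entities(rows: list[tuple[str, str, float]]) -> dict[str, dict]:
    """Rough model of the eval / stats / foreach / eval steps, keyed by host."""
    per_host: dict[str, dict] = defaultdict(dict)
    for metric_name, host, value in rows:
        if metric_name not in FIELD_MAP:
            continue  # case() yields null for other metrics; stats ignores nulls
        field, divisor = FIELD_MAP[metric_name]
        # stats first(...) by host: keep the first value seen for each field
        per_host[host].setdefault(field, value / divisor)

    entities: dict[str, dict] = {}
    for host, fields in per_host.items():
        # foreach *_total [ eval <<FIELD>> = round('<<FIELD>>', 0) ]
        for name in ("mem_total", "swap_total"):
            if name in fields:
                fields[name] = round(fields[name])
        entities[host] = {"itsi_role": "telegraf_host", "family": "Linux", **fields}
    return entities
```

With 8589934592 bytes of mem.total, for instance, the model yields mem_total = 8192 for that host.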


Can you reach me on Splunk social Slack?


I get results if I remove the | join type=... line.

I just created a Slack account; not sure if I can use it.


For the ITSI app at the indexer level, I only copied:

- SA-IndexCreation
- SA-ITSI-Licensechecker
- SA-UserAccess

At the Splunk GUI level, I deleted:

- SA-IndexCreation
- SA-ITSI-Licensechecker

My license master is on the indexer.

Maybe this has something to do with it?


OK, so no, this has nothing to do with your license master.

The join is there to filter Linux hosts, based on the following metric:

```
| mcatalog values(metric_name) as linux_kernel where `telegraf_index` metric_name="kernel.*" by host
```

So you get no results because you currently have no kernel.* metrics coming from Telegraf.

In telegraf.conf, these would come from:

```toml
# Get kernel statistics from /proc/stat
[[inputs.kernel]]
  # no configuration
```

I was planning to update the apps to change the way I filter. Either you onboard the data, or you wait for an update from me, which I should be able to provide tonight.

Guilhem
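The effect of `join type=inner host` can be sketched the same way: only hosts that appear in the kernel.* subsearch survive, so if no host reports any kernel.* metric the whole result is empty, which matches getting results once the join was removed. The Python helpers below (`linux_hosts`, `inner_join`) are hypothetical and model only this filtering, not the field merge that the real join performs. Note also that, per the comment in the snippet above, inputs.kernel reads /proc/stat, a Linux interface; OPNsense is FreeBSD-based, so an OPNsense host may never report kernel.* metrics at all.

```python
from __future__ import annotations

from fnmatch import fnmatchcase


def linux_hosts(catalog: list[tuple[str, str]]) -> set[str]:
    """Hosts with at least one kernel.* metric, like the mcatalog subsearch.

    `catalog` holds (host, metric_name) pairs.
    """
    return {host for host, name in catalog if fnmatchcase(name, "kernel.*")}


def inner_join(entities: dict[str, dict], catalog: list[tuple[str, str]]) -> dict[str, dict]:
    """Keep only entities whose host is returned by the subsearch (join type=inner host)."""
    keep = linux_hosts(catalog)
    return {host: fields for host, fields in entities.items() if host in keep}
```

A host that sends only cpu, mem, swap and similar metrics is therefore dropped, however complete its other fields are.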


Morning,

do you mean updating your Splunk app or the Telegraf agent? I tried adding the kernel input manually and restarting, but still no luck.


Hi,

Yes, I meant adding this kernel metric to telegraf.conf.

Never mind, I have published new versions of the apps:

- Operating System monitoring with Telegraf, Version 1.0.5 (https://splunkbase.splunk.com/app/4271/)

- ITSI module for Influxdata Telegraf OS, Version 1.0.6 (https://splunkbase.splunk.com/app/4194/)

This should fix your issues.

For the core app (Operating System monitoring with Telegraf), please do not forget to install the viz dependencies on the search head.

Let me know the outcome.

Kind regards,

Guilhem


Do I still need to manually add

```toml
[[inputs.kernel]]
  # no configuration
```

to telegraf.conf on the agent side?


I finally got it working on the Splunk side.

On the agent side, for the required settings to survive a reboot, I also had to edit /usr/local/opnsense/service/templates/OPNsense/Telegraf/telegraf.conf and add:

```toml
graphite_tag_support = true
```

because the current version of the Telegraf agent for OPNsense does not support setting this via the menu settings.
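For context on why graphite_tag_support matters here: with it enabled, Telegraf's Graphite output writes each point in the tagged plaintext form `metric.path;tag1=value1;tag2=value2 value timestamp` (for example `cpu.usage_idle;cpu=cpu-total;host=10.10.20.1 98.09 1455320660`), so tags such as host, cpu or device stay separate instead of being folded into the dotted metric path by the default template. The Python sketch below splits such a line into a metric name, dimensions, value and timestamp. It only illustrates the line format; it is not how TA-influxdata-telegraf parses the data, and `parse_tagged_line` and the example line are hypothetical.

```python
from __future__ import annotations

from typing import NamedTuple


class GraphitePoint(NamedTuple):
    metric_name: str
    dimensions: dict[str, str]
    value: float
    timestamp: int


def parse_tagged_line(line: str) -> GraphitePoint:
    """Split 'path;k1=v1;k2=v2 value timestamp' into its parts."""
    path_and_tags, value, timestamp = line.split()
    metric_name, *tag_parts = path_and_tags.split(";")
    # Each tag is key=value; split on the first "=" only.
    dimensions = dict(part.split("=", 1) for part in tag_parts)
    return GraphitePoint(metric_name, dimensions, float(value), int(timestamp))


if __name__ == "__main__":
    point = parse_tagged_line("cpu.usage_idle;cpu=cpu-total;host=10.10.20.1 98.09 1455320660")
    print(point.metric_name, point.dimensions)
```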


For anyone else: see my long comment in the post above for how to get OPNsense + Telegraf agent + Splunk HF working.


One more thing, for Telegraf for Operating System monitoring: the following panels return no results:

- Processes CPU usage (%, top 20)
- Processes - Resident Memory (MB, top 20)
- Network Open Sockets
- Network Sockets Created/Second

Is that expected?