Why are users hitting disk quota?

I have an on-premises Splunk deployment running Splunk Enterprise 8.1.2. I have a recurring issue where users report that their searches are being queued because of the disk quota. The error they see is:

> This search could not be dispatched because the role-based disk usage quota of search artifacts for user "jane.doe" has been reached (usage=7757MB, quota=1000MB). Use the Job Manager to delete some of your search artifacts, or ask your Splunk administrator to increase the disk quota of search artifacts for your role in authorize.conf.

So naturally I go to the Job Manager to see what's going on, but every time I find that the jobs listed there come nowhere near the quota.

This is the second time this issue has come up. Last time I did a lot of digging but never found any record of what was actually using up the quota. That was a while ago, and unfortunately I didn't keep notes. In the end I just increased the quota for the user's role, and things started working again.

Now that it's happening again, I figured I'd try posting here to see if anyone has any advice on how to find what's using up the quota.
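The quota error quoted above already contains the numbers Splunk used for its decision (user, usage, quota), so they can be extracted and compared with what the Job Manager shows. Below is a minimal Python sketch. The regular expression is an assumption built from that single sample message, not a documented log format, and `parse_quota_message` and `QuotaHit` are hypothetical names.

```python
import re
from typing import NamedTuple, Optional

# Pattern built from the one quota message quoted in the question.
# Assumption: the wording is the same on other versions and for other users.
QUOTA_RE = re.compile(
    r'disk usage quota of search artifacts for user "(?P<user>[^"]+)" '
    r"has been reached \(usage=(?P<usage>\d+)MB, quota=(?P<quota>\d+)MB\)"
)


class QuotaHit(NamedTuple):
    """Numbers reported by the quota error (hypothetical helper type)."""

    user: str
    usage_mb: int
    quota_mb: int

    @property
    def overage_mb(self) -> int:
        # How far over the role quota Splunk thinks the user is.
        return self.usage_mb - self.quota_mb


def parse_quota_message(text: str) -> Optional[QuotaHit]:
    """Return the parsed numbers, or None if the text is not a quota error."""
    m = QUOTA_RE.search(text)
    if m is None:
        return None
    return QuotaHit(m["user"], int(m["usage"]), int(m["quota"]))
```

For the message above this yields user `jane.doe`, 7757MB used against a 1000MB quota, which is the figure to reconcile against the Job Manager totals.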

---

I don't know why the DispatchReaper stops doing its job, but restarting the Splunk daemon (splunkd) puts it back in order. I've created an alert that looks for the warnings about expired and unreaped artifacts and includes instructions for restarting Splunk:

    index=_internal "WARN DispatchReaper - Found" "expired and unreaped" AND NOT "StreamedSearch"

I guess that's good enough, but I'm still curious why the DispatchReaper just stops reaping.

---

One of the search heads has a large number of replicated search artifacts in its dispatch directory. I have verified that deleting the replicated search artifact directories whose names contain the hash corresponding to a blocked user allows that user to resume searching.
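Before deleting anything, it can help to measure how much space the matching directories actually take and compare that with the usage in the quota error. Below is a minimal, read-only Python sketch. It assumes the default dispatch location `$SPLUNK_HOME/var/run/splunk/dispatch` and treats the user's hash as a plain substring that you supply; how Splunk derives that hash is not covered here. `dispatch_dirs_matching` is a hypothetical helper name.

```python
import os
from pathlib import Path
from typing import Dict


def dispatch_dirs_matching(dispatch_root: str, marker: str) -> Dict[str, int]:
    """Return {directory name: total bytes} for each top-level directory under
    dispatch_root whose name contains `marker`. Nothing is deleted."""
    sizes: Dict[str, int] = {}
    for entry in Path(dispatch_root).iterdir():
        # Only artifact directories are of interest; skip loose files.
        if not entry.is_dir() or marker not in entry.name:
            continue
        total = 0
        for dirpath, _dirnames, filenames in os.walk(entry):
            for name in filenames:
                try:
                    total += os.path.getsize(os.path.join(dirpath, name))
                except OSError:
                    # A file can disappear mid-walk if a search or the reaper removes it.
                    pass
        sizes[entry.name] = total
    return sizes
```

For example, `dispatch_dirs_matching("/opt/splunk/var/run/splunk/dispatch", "<hash>")` (the install path is only an example) returns per-directory byte counts; summing the values and dividing by 1024*1024 gives a figure in MB comparable to the `usage=` value in the error.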

I have read that these artifacts are supposed to be cleaned up by the dispatch reaper based on their TTL and last modified time. I found some log entries from the dispatch reaper:

    splunkd.log.4:07-08-2022 09:55:54.628 +0000 WARN DispatchReaper - Found 17173 expired and unreaped artifacts (SHC managed). Displaying first 20.

This warning repeats about every 10 minutes, and the count of artifacts found doesn't change. I searched for this warning on another search head in this cluster, and it does not appear there.
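The symptom described here, the same nonzero count repeating in every warning, can be checked mechanically from splunkd.log. Below is a minimal Python sketch. The pattern is loosened from the single line quoted above (it tolerates extra spaces after `WARN` and any text between `DispatchReaper` and `- Found`) because the exact line layout is an assumption. `reaper_counts` and `looks_stuck` are hypothetical names, and "stuck" here is only a heuristic.

```python
import re
from typing import Iterable, List, Tuple

# Loosely modelled on the quoted warning; exact spacing is an assumption.
REAPER_RE = re.compile(
    r"(?P<ts>\d{2}-\d{2}-\d{4} \d{2}:\d{2}:\d{2}\.\d{3} [+-]\d{4})\s+"
    r"WARN\s+DispatchReaper\b.*?- Found (?P<count>\d+) expired and unreaped artifacts"
)


def reaper_counts(lines: Iterable[str]) -> List[Tuple[str, int]]:
    """Extract (timestamp, artifact count) from DispatchReaper warnings,
    ignoring every other line (e.g. grep's 'splunkd.log.4:' prefix is skipped)."""
    out: List[Tuple[str, int]] = []
    for line in lines:
        m = REAPER_RE.search(line)
        if m:
            out.append((m["ts"], int(m["count"])))
    return out


def looks_stuck(counts: List[Tuple[str, int]], min_repeats: int = 3) -> bool:
    """Heuristic: True if the last `min_repeats` warnings all report the
    same nonzero count, i.e. the backlog is found but never shrinks."""
    if len(counts) < min_repeats:
        return False
    tail = [c for _, c in counts[-min_repeats:]]
    return tail[0] > 0 and all(c == tail[0] for c in tail)
```

A shrinking count suggests the reaper is working through a backlog; a constant one matches the behaviour reported here, where only a restart cleared it.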

I found this long query, which claims to show the number of unreaped artifacts:

    | rest /services/shcluster/member/members splunk_server=local
    | table label status title artifact_count status_counter.Complete advertise_restart_required last_heartbeat
    | eval is_captain = "No"
    | eval is_current = "No"
    | eval artifacts_not_reaped = artifact_count - 'status_counter.Complete'
    | eval heartbeat_diff = now() - last_heartbeat
    | join type=outer label
        [
        | rest /services/shcluster/status splunk_server=local
        | fields captain.label
        | rename captain.label AS label
        | eval is_captain = "Yes"
        | eval is_current = "No"
        ]
    | join type=outer label
        [
        | rest /services/server/info splunk_server=local
        | fields host
        | rename host AS label
        | eval is_current = "Yes"
        ]
    | sort label
    | convert timeformat="%F %T" ctime(last_heartbeat) AS last_heartbeat
    | table label status is_captain is_current title last_heartbeat heartbeat_diff artifact_count status_counter.Complete artifacts_not_reaped advertise_restart_required
    | rename label AS "SHC Member" status AS Status is_captain AS "Captain" title AS GUID is_current AS "Current Node" last_heartbeat AS "Last Heartbeat" heartbeat_diff AS "Heartbeat Offset" artifact_count AS "Artifact Count" status_counter.Complete AS "Reaped Artifacts" artifacts_not_reaped AS "Unreaped Artifact Count" advertise_restart_required AS "Restart Flag"

This query reports no unreaped artifacts, but it infers that number (artifact_count minus status_counter.Complete) rather than reading it directly. So the question now is: why is the dispatch reaper finding 17k expired, unreaped artifacts and not doing anything about them?
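The "Unreaped Artifact Count" column in the query above is not a counter Splunk reports; it is a subtraction of two fields from the SHC member endpoint. The Python sketch below mirrors that `eval` so the inference is explicit. It assumes the field values arrive as strings, as REST output often does, and `unreaped_estimate` is a hypothetical name.

```python
from typing import Mapping, Optional


def unreaped_estimate(member: Mapping[str, str]) -> Optional[int]:
    """Mirror of: eval artifacts_not_reaped = artifact_count - 'status_counter.Complete'.

    Returns None when either field is missing, much as the SPL eval yields
    null instead of a number.
    """
    total = member.get("artifact_count")
    complete = member.get("status_counter.Complete")
    if total is None or complete is None:
        return None
    return int(total) - int(complete)
```

A result of 0 only says that the two counters agree. If the directories the reaper warns about are not among the artifacts this endpoint counts (an unverified possibility), the query can show zero while splunkd.log keeps reporting 17173.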

---

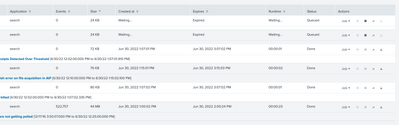

Additional info: I checked the Monitoring Console and found that this user has several alerts running under her account, and these searches can use a lot of disk. Some individual searches exceed the entire quota on their own. However, they don't seem to show up correctly in the Job Manager: there they show around 45MB of disk, while the Monitoring Console shows some at 2000MB.

Size discrepancy aside, shouldn't deleting everything from the Job Manager allow the user to be able to continue searching?

---

I found this search, which is supposed to show how much disk each user is using:

    | rest /services/search/jobs splunk_server=local
    | eval diskUsageMB=diskUsage/1024/1024
    | stats sum(diskUsageMB) as DiskUsage_MB by eai:acl.owner
    | rename eai:acl.owner as User

It tells me that these users are using about 100MB of disk. Meanwhile, they are getting the disk quota error saying they're using 1033MB. I can confirm that they are indeed being queued with this other search:

    index=_audit search_id="*" sourcetype=audittrail action=search info=granted
    | table _time host user action info search_id search ttl
    | join search_id
        [ search index=_internal sourcetype=splunkd quota component=DispatchManager log_level=WARN reason="The maximum disk usage quota*"
        | dedup search_id
        | table _time host sourcetype log_level component username search_id reason
        | eval search_id = "'" + search_id + "'"
        ]
    | table _time host user action info search_id search ttl reason

I'm wondering whether there are orphaned artifacts somewhere that are counted against the user's disk quota but aren't reported by the Job Manager or by that REST query.