Splunk shows only 9 months (270 days) data- How do I increase the retention period?

Hi Everyone,

I have a strange issue and am unable to find a fix.

All the indexes have a longer retention period but the oldest data is limited to 270 days. I checked the index cluster but did not find anything which could be causing this issue. Here is the configuration for all indexes:

[example1]

coldPath = volume:primary/example1/colddb

homePath = volume:primary/example1/db

maxTotalDataSizeMB = 512000

thawedPath = $SPLUNK_DB/example1/thaweddb

frozenTimePeriodInSecs=39420043

I checked the index sizes and the indexers' disk space, and there is still space left for more data.

Please let me know if anyone has had a similar experience or has a suggestion for increasing the retention period.

Thanks,

Hello Everyone,

Regrettably, the oldest available data across all indexes has been reduced to approximately 7 months.

I have already conducted the following checks:

Current index size: Less than 200GB (configured for 500GB)

Indexers Disk Size (Cluster): All indexes currently have 30-35% free space.

frozenTimePeriodInSecs=39420043 (approximately 15 months)
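
As a quick check of the "approximately 15 months" figure, here is a minimal Python sketch of the conversion. Only the frozenTimePeriodInSecs value comes from the configuration; the function name and the 30.44-day average month are assumptions made for illustration.

```python
SECONDS_PER_DAY = 86_400
AVG_DAYS_PER_MONTH = 30.44  # assumption: average Gregorian month length


def retention_in_days(frozen_time_period_in_secs: int) -> float:
    """Convert a frozenTimePeriodInSecs value to days."""
    return frozen_time_period_in_secs / SECONDS_PER_DAY


# Value taken from the index stanza in this thread.
configured_days = retention_in_days(39_420_043)
configured_months = configured_days / AVG_DAYS_PER_MONTH

print(f"{configured_days:.2f} days, about {configured_months:.1f} months")
```

The configured retention works out to roughly 456 days, so data disappearing at around 270 days is unlikely to be explained by this setting alone.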

Any assistance with troubleshooting would be greatly appreciated.

Thank you.

Hi

You could search the internal index for the reason buckets are being deleted. You can start with

index=_internal *cold* *<your index or bucket Id>*

You will get a list of buckets. Select one that has been frozen, then use that bucket ID to see how and why it was frozen.

r. Ismo

Thank you,

I randomly ran it for a few buckets and observed the following message:

Moving bucket='rb_1681312487_1677890027_1731_FBA51F26-2043-4798-B18D-2D637A7347B9', initiating warm_to_cold: from='/Data/splunkdb/o365/db' to='/Data/splunkdb/o365/colddb', caller='chillIfNeeded', reason='maximum number of warm buckets exceeded'.

I'm not sure if this is the reason that could be affecting the data retention period. Initially, I had "maxHotBuckets = 10" defined, but it's no longer defined, and I've left it as the default value.

[test]

coldPath = volume:primary/test/colddb

homePath = volume:primary/test/db

thawedPath = $SPLUNK_DB/test/thaweddb

maxTotalDataSizeMB = 512000

frozenTimePeriodInSecs = 39420043

To add to the above details, the "thaweddb" folder is blank and doesn't contain any buckets.

For now, I have increased the "frozenTimePeriodInSecs" by a few more months, but I'm not sure if it will work. Any other advice would be very helpful.

I still can't find anything which will lead to a solution. Any suggestion will be of great help!

The data can be frozen (in your case - deleted if not configured otherwise) in one of three cases:

1) The buckets in the index get too old (most recent event in a bucket is older than the retention period for the index) or

2) The index exceeds the size limit

3) The volume hits the size limit.

So you have to verify if any of those three conditions are met.

Additionally, check your effective configuration with btool. Maybe you're looking in the wrong file for the settings.
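
The three freeze conditions listed above can be sketched as a small Python check. This is a simplified model for reasoning about the cases, not Splunk's actual implementation. The class, the field names, and the volume numbers in the example are hypothetical; only the 512000 MB index limit, the retention value, and the roughly 200GB index size come from this thread.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class IndexState:
    """Hypothetical snapshot of one index; field names are illustrative."""
    newest_event_age_secs: int       # age of the newest event in the oldest bucket
    frozen_time_period_in_secs: int  # retention configured for the index
    index_size_mb: float             # current size of the index on disk
    max_total_data_size_mb: float    # size limit configured for the index
    volume_size_mb: float            # space used on the volume by all indexes
    max_volume_data_size_mb: float   # size limit configured for the volume


def freeze_reasons(state: IndexState) -> list[str]:
    """Return every condition that would make the oldest bucket a freeze candidate."""
    reasons = []
    if state.newest_event_age_secs > state.frozen_time_period_in_secs:
        reasons.append("bucket older than retention period")
    if state.index_size_mb > state.max_total_data_size_mb:
        reasons.append("index size limit exceeded")
    if state.volume_size_mb > state.max_volume_data_size_mb:
        reasons.append("volume size limit exceeded")
    return reasons


# Example: a 270-day-old bucket in a 200GB index with a 500GB limit,
# on a volume that is (hypothetically) just over its own limit.
example = IndexState(
    newest_event_age_secs=270 * 86_400,
    frozen_time_period_in_secs=39_420_043,
    index_size_mb=200_000,
    max_total_data_size_mb=512_000,
    volume_size_mb=2_000_500,          # hypothetical
    max_volume_data_size_mb=2_000_000, # hypothetical
)
print(freeze_reasons(example))
```

In this made-up scenario neither the age nor the index size triggers a freeze, which is why the volume limit is worth checking when homePath and coldPath point at a volume, as they do in the stanzas posted here.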

Hello @spodda01da,

Did you check that your index size does not exceed your maxTotalDataSizeMB value (here 512000 MB)?

Based on the doc:

https://docs.splunk.com/Documentation/Splunk/9.0.2/admin/Indexesconf

* CAUTION: The 'maxTotalDataSizeMB' size limit can be reached before the time limit defined in 'frozenTimePeriodInSecs'

maxTotalDataSizeMB = <nonnegative integer> * The maximum size of an index, in megabytes

To check the size of your index, you can use:

du -sch /opt/splunk/var/lib/splunk/<index_name>

I did. The configured size is 500GB, and most of the indexes are around 300-400GB.

Hi @spodda01da

Run the following search to check whether buckets are being deleted before the configured retention period:

index=_internal sourcetype=splunkd bucketmover "*will attempt to freeze*"

| eval "Index Last Event"=strftime(now,"%d-%m-%y %H:%M:%S")

| eval "Index First Event"=strftime(latest,"%d-%m-%y %H:%M:%S")

| eval "Actual Data Stored"=round((now-latest)/86400,0)

| eval "Index Retention Days"=frozenTimePeriodInSecs/86400

| table candidate "Index Retention Days" "Actual Data Stored" "Index First Event" "Index Last Event"

If "Actual Data Stored" is less than "Index Retention Days", the index is ingesting more data than it can hold for the full retention period.

As mentioned by @GaetanVP, maxTotalDataSizeMB takes precedence over frozenTimePeriodInSecs.

In that case, you may need to increase the disk space.
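
For anyone who wants to check the arithmetic outside Splunk, here is a minimal Python sketch that mirrors the eval steps of the search for a single event. It assumes the `now`, `latest`, and `frozenTimePeriodInSecs` fields are epoch seconds and a duration in seconds, as the search implies. The function name and the sample values are hypothetical.

```python
from datetime import datetime, timezone

SECONDS_PER_DAY = 86_400


def summarize_freeze_event(now: int, latest: int, frozen_time_period_in_secs: int) -> dict:
    """Mirror the eval steps of the search for one event's fields."""
    actual_days = round((now - latest) / SECONDS_PER_DAY)
    retention_days = frozen_time_period_in_secs / SECONDS_PER_DAY
    newest_event = datetime.fromtimestamp(latest, tz=timezone.utc)
    return {
        "newest_event_in_bucket": newest_event.strftime("%d-%m-%y %H:%M:%S"),
        "actual_days_stored": actual_days,
        "retention_days": retention_days,
        # True when the bucket is younger than the configured retention.
        "frozen_early": actual_days < retention_days,
    }


# Hypothetical event: the newest event in the bucket is 270 days old.
sample_now = 1_700_000_000
summary = summarize_freeze_event(
    now=sample_now,
    latest=sample_now - 270 * SECONDS_PER_DAY,
    frozen_time_period_in_secs=39_420_043,
)
print(summary)
```

A `frozen_early` value of True for a bucket means something other than the time limit is pushing it out.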

----

Regards,

Sanjay Reddy

----

If this reply helps you, Karma would be appreciated.

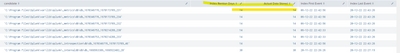

Thanks @SanjayReddy, I ran the search and see the following details:

This issue is not specific to one index; almost all of them have their oldest data at 270 days.

Hi,

Is the issue resolved for you? I have the same issue with the notable index. We have configured the notable index with an 18-month retention period and maxTotalDataSizeMB set to 20GB, and it has used only 10% of that 20GB. With this configuration it should hold data for the last 18 months, but I can see only the last 90 days. When we checked last week, the oldest data was from July 2; when I checked this week, it was from July 12. So it is storing only 90 days.

Do you have any solution for this? We are only using hot, warm, and cold, with no frozen archive. We configured the data to stay live for 18 months and then be deleted, but the notable index keeps only 90 days.
