- HTTP client error=Read Timeout while accessing ser...

Hello,

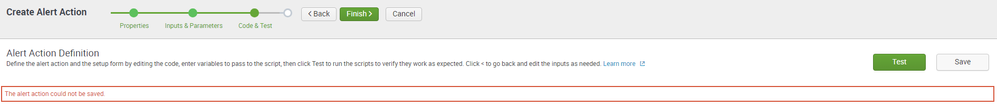

I am adding an Alert Action with Splunk Add-on Builder, but when I click “Save” the request times out.

01-16-2024 17:01:31.340 +0100 ERROR HttpClientRequest [24831 TcpChannelThread] - HTTP client error=Read Timeout while accessing server=http://127.0.0.1:8065 for request=http://127.0.0.1:8065/en-US/custom/splunk_app_addon-builder/app_edit_modularalert/add_modular_alert.

In the meantime, if I open a new browser tab, any page I request times out as well.

01-16-2024 17:02:18.114 +0100 ERROR HttpClientRequest [7954 TcpChannelThread] - HTTP client error=Read Timeout while accessing server=http://127.0.0.1:8065 for request=http://127.0.0.1:8065/en-US.
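To see which requests are timing out and how often, log lines like the two above can be parsed and grouped by request URL. The following is a minimal sketch, assuming the lines come from splunkd.log and follow exactly the format quoted here; the function names `parse_timeouts` and `count_by_request` are hypothetical helpers, not part of any Splunk tooling.

```python
import re
from collections import Counter

# Matches the "Read Timeout" lines quoted in this post. The format is
# inferred from those two samples only; other Splunk versions may differ.
TIMEOUT_RE = re.compile(
    r"^(?P<ts>\d{2}-\d{2}-\d{4} \d{2}:\d{2}:\d{2}\.\d{3} [+-]\d{4}) "
    r"ERROR HttpClientRequest \[(?P<tid>\d+) TcpChannelThread\] - "
    r"HTTP client error=Read Timeout while accessing "
    r"server=(?P<server>\S+) for request=(?P<request>\S+?)\.?$"
)


def parse_timeouts(lines):
    """Yield a dict (ts, tid, server, request) for each Read Timeout line."""
    for line in lines:
        match = TIMEOUT_RE.match(line.strip())
        if match:
            yield match.groupdict()


def count_by_request(lines):
    """Count Read Timeout errors per request URL."""
    return Counter(event["request"] for event in parse_timeouts(lines))


# The two log lines from this post, used as sample input.
sample = [
    "01-16-2024 17:01:31.340 +0100 ERROR HttpClientRequest [24831 TcpChannelThread] - "
    "HTTP client error=Read Timeout while accessing server=http://127.0.0.1:8065 "
    "for request=http://127.0.0.1:8065/en-US/custom/splunk_app_addon-builder/"
    "app_edit_modularalert/add_modular_alert.",
    "01-16-2024 17:02:18.114 +0100 ERROR HttpClientRequest [7954 TcpChannelThread] - "
    "HTTP client error=Read Timeout while accessing server=http://127.0.0.1:8065 "
    "for request=http://127.0.0.1:8065/en-US.",
]

if __name__ == "__main__":
    for request, count in count_by_request(sample).most_common():
        print(count, request)
```

On a real system the same functions could be fed an open log file instead of the `sample` list, which makes it easier to tell whether only the Add-on Builder endpoint times out or every request to port 8065 does.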

Looking in the /opt/splunk/etc/apps folder, my app appears to be stuck in the TA-splunk-myapp_temp_output folder while it is being saved.

splunk@SearchHead:~/etc/apps > ls -latr

drwxrwxrwx 10 splunk splunk 4096 Jan 15 16:02 TA-splunk-myapp

…

drwxrwxrwx 3 splunk splunk 4096 Jan 16 16:53 TA-splunk-myapp_temp_output

I also tried to:

- delete the TA-splunk-myapp_temp_output folder, restart Splunk, and try saving again.

- increase the server resources from 16 CPU/32 GB to 32 CPU/64 GB

but I have the same issue.

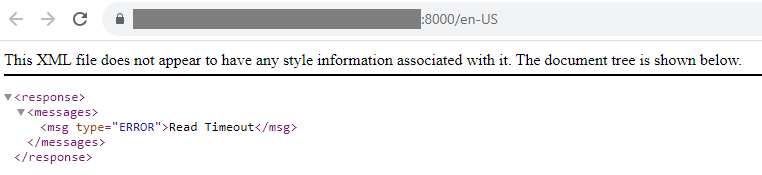

It seems that the timeout comes from the “appserver” that runs on port 8065.

https://docs.splunk.com/Documentation/Splunk/latest/Admin/Webconf

appServerPorts = <positive integer>[, <positive integer>, <positive integer> ...]

* Port number(s) for the python-based application server to listen on. This port is bound only on the loopback interface -- it is not exposed to the network at large.

* Generally, you should only set one port number here. For most deployments a single application server won't be a performance bottleneck. However you can provide a comma-separated list of port numbers here and splunkd will start a load-balanced application server on each one.

* At one time, setting this to zero indicated that the web service should be run in a legacy mode as a separate service, but as of Splunk 8.0 this is no longer supported.

* Default: 8065

I am thinking about:

- Setting the log level to DEBUG

- Adding more ports so that splunkd starts a load-balanced application server on each one
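For illustration, the second option could look roughly like the fragment below in a local web.conf. This is only a sketch: the second port, 8066, is an arbitrary example that is assumed to be free, and nothing in this thread confirms that extra application servers resolve the timeout.

```
[settings]
# Comma-separated list; splunkd starts one application server per port.
# 8065 is the default, 8066 is an assumed free port chosen for illustration.
appServerPorts = 8065, 8066
```

A change to web.conf generally needs a Splunk restart before it takes effect.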

Any suggestions would be much appreciated.

Thanks a lot,

Edoardo


For the time being, I have solved the issue by saving the code one piece at a time.

Saving all 200 lines of code in one go was what triggered the problem.

Restarting Splunk in DEBUG mode can help point toward the root cause, but the volume of messages is huge.
