- Can we monitor the swap space usage on the forward...

The vmstat script from Splunk_TA_nix should report the swap usage of your host if the input is enabled, or at least that's what the headers say.

PRINTF='END {printf "%10d %10d %10d %10.1f %10.1f %10s %10.1f %10s %10s %10s %10s %10s %10s %10.2f %10.2f %10.2f %10.2f %10.2f\n", memTotalMB, memFreeMB, memUsedMB, memFreePct, memUsedPct, pgPageOut, swapUsedPct, pgSwapOut, cSwitches, interrupts, forks, processes, threads, loadAvg1mi, waitThreads, interrupts_PS, pgPageIn_PS, pgPageOut_PS}'

DERIVE='END {memUsedMB=memTotalMB-memFreeMB; memUsedPct=(100.0*memUsedMB)/memTotalMB; memFreePct=100.0-memUsedPct; swapUsedPct=swapUsed ? (100.0*swapUsed)/(swapUsed+swapFree) : 0; waitThreads=loadAvg1mi > cpuCount ? loadAvg1mi-cpuCount : 0}'
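To make the swapUsedPct part of DERIVE easier to follow, here is a minimal sketch of the same expression on its own. The function name `swap_used_pct` and its two positional arguments are hypothetical and not part of Splunk_TA_nix; the sketch only assumes that used and free swap are given in the same unit.

```shell
# Hypothetical helper (not from Splunk_TA_nix): mirrors the swapUsedPct
# expression in DERIVE. $1 = swap used, $2 = swap free, same unit for both.
swap_used_pct() {
    awk -v swapUsed="$1" -v swapFree="$2" 'BEGIN {
        used = swapUsed + 0   # force numeric interpretation
        free = swapFree + 0
        # Same guard as DERIVE: report 0 when no swap is in use,
        # which also avoids dividing by zero on hosts without swap.
        pct = used ? (100.0 * used) / (used + free) : 0
        printf "%.1f\n", pct
    }'
}

swap_used_pct 512 1536   # prints 25.0
```

The guard on `used` is why a host with no swap configured reports 0 rather than failing.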

If that doesn't help, there are a few other commands you could use to extract the swap information; top, atop, htop, and free are some of them.
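As a rough sketch of the `free` route, the Swap row of its output can be picked out with awk. The function name `swap_from_free` is hypothetical, and the sketch assumes the usual Linux procps layout where the Swap row lists total, used, and free in that order.

```shell
# Hypothetical helper: reads `free -m`-style text on stdin and prints
# the swap total, used, and free values (in MB) on one line.
swap_from_free() {
    awk '$1 == "Swap:" { print $2, $3, $4 }'
}

# Usage on a Linux host (assumes the procps `free` command is installed):
#   free -m | swap_from_free
```

A scripted input in Splunk could wrap something like this, but the input configuration is outside what this sketch covers.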

What goes around comes around. If it helps, hit it with Karma 🙂

Hi @ddrillic

You can also have the forwarders send their introspection logs to the indexers.

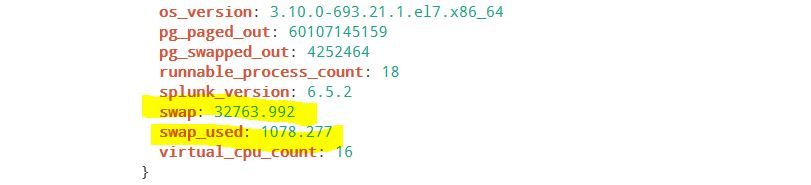

You will find information about swap space usage with this search:

index=_introspection sourcetype=splunk_resource_usage component="hostwide" | rename data.* as * | fields swap swap_used

Thanks

I see the swap information

But it's for the Splunk servers, not the forwarders, right?

Thank you @renjith.nair.