
How to synchronize Notable Event data with 3rd party incident management tool (TheHive)?

Hello,

We'd like to synchronize Correlation Searches with our incident management tool, TheHive. We could use TA-Thehive to create an Adaptive Response Action in the Correlation Search configuration. However, the difficulty is that in addition to the Correlation Search data, we'd like to synchronize the Notable Event data as well, such as its event_id, next steps, etc.

The only approach we have found is to create an additional alert based on the notable index for every Correlation Search, then use the TA to add a response action to that alert that sends all Notable Event data to our incident management tool.

This solution has two main issues:

- For every Correlation Search we need to create an additional alert (time-consuming).

- The alert's query is based on the notable index, while our Correlation Searches use tstats (performance impact).

I'd like to know if anyone has faced the same issue before and found a better solution.

Thanks for the help.

Alex.


Hey,

Did you ever find a solution to this? I'm having the same issue right now, and I would really appreciate knowing how you solved it in the end.

I'm also on the Splunk user group Slack (my name is Julian, and my avatar is a dwarf with a Splunk logo on a book) if you want to chat real quick.

Thanks,

~ Julian


Hi Julian,

For now, we use a workaround solution which is another app: https://github.com/swiip81/create_thehive_alert

It doesn't solve the main issue — synchronisation with Notable Events. However, this app is much more flexible.

The main solution is to create a new app based on the "notable" index. Our Tool Team is working on it. The idea is simple:

- For each correlation search, the app creates an Adaptive Response Action (create an alert in TheHive).

- The action checks the "notable" index and grabs all events whose 'search_name' field equals the name of its corresponding correlation search.

/!\ Attention: Adaptive Response Actions in Enterprise Security are triggered in alphabetical order. This means the name of our Adaptive Response Action must sort after "Notable Event" ("TheHive alert" is OK | "Alert TheHive" is NOT OK).
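A minimal Python sketch for checking a candidate action name against this ordering rule. It assumes, as the note says, that actions fire in alphabetical order of their labels, and it additionally assumes the comparison is case-insensitive; neither detail is confirmed Enterprise Security behavior, and `runs_after_notable` is a hypothetical helper.

```python
def runs_after_notable(action_label: str, notable_label: str = "Notable Event") -> bool:
    """Return True if action_label sorts after the "Notable Event" action.

    Assumption: actions fire in case-insensitive alphabetical order of their
    labels, as described above. This is not verified ES behavior.
    """
    return action_label.casefold() > notable_label.casefold()


if __name__ == "__main__":
    for label in ["TheHive alert", "Alert TheHive", "zzz_test"]:
        print(f"{label!r}: {'OK' if runs_after_notable(label) else 'NOT OK'}")
```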

Here is how it will work (or at least should work):

1. Our Correlation Search, let's call it "Bruteforce_detected", returns some results.

2. The "Notable Event" Adaptive Response Action creates one or more events in the "notable" index. The value of the 'search_name' field for these events is "Bruteforce_detected".

3. Our "TheHive alert" Adaptive Response Action checks the "notable" index and grabs all events whose 'search_name' field equals "Bruteforce_detected" (the events that have just been created).

4. "Earliest Time" and "Latest Time" are the same for both actions. This way we can be sure to grab only the events related to the current Correlation Search execution.

5. For each event, the action creates a corresponding alert in TheHive.
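Steps 3 to 5 can be sketched in Python as two pure functions: one builds the search for the notable events of a given correlation search, reusing the correlation search's earliest/latest window (step 4), and one maps each notable event to an alert payload for TheHive (step 5). This is a sketch under assumptions: the function names are hypothetical, actually running the search (for example with the Splunk SDK) and posting the payload to TheHive are left out, and the payload fields (`type`, `source`, `sourceRef`, `title`, `description`) are assumed from TheHive's alert model and should be checked against the TheHive version in use.

```python
import json
from typing import Dict, Tuple


def build_notable_search(search_name: str, earliest: str, latest: str) -> Tuple[str, Dict[str, str]]:
    """Return (query, params) for the notable events of one correlation search.

    Hypothetical helper: `earliest`/`latest` should be the same window the
    correlation search used, so only events from this execution match.
    """
    # Escape backslashes and double quotes so the name is safe inside "...".
    escaped = search_name.replace("\\", "\\\\").replace('"', '\\"')
    query = f'search index=notable search_name="{escaped}"'
    params = {"earliest_time": earliest, "latest_time": latest}
    return query, params


def notable_to_thehive_alert(event: Dict[str, str]) -> Dict[str, str]:
    """Map one notable event to an assumed TheHive alert payload."""
    return {
        "type": "splunk_notable",        # hypothetical alert type
        "source": "splunk_es",           # hypothetical source label
        "sourceRef": event["event_id"],  # notable event_id as the alert's unique reference
        "title": event["search_name"],
        # Keep every notable field so nothing is lost on the TheHive side.
        "description": json.dumps(event, sort_keys=True),
    }


if __name__ == "__main__":
    query, params = build_notable_search("Bruteforce_detected", "-15m", "now")
    print(query)
    print(params)
    sample = {"event_id": "abc123", "search_name": "Bruteforce_detected", "src": "10.0.0.5"}
    print(notable_to_thehive_alert(sample))
```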

Hope this helps.

P.S. You may actually want to use the `notable` macro instead of the "notable" index. It gives you some additional information.

Alex.


Wow, I was not expecting such a nice answer. Thanks Alex, that helped a lot already!

That's a nice workaround, but I don't quite get the alphabetical order you mentioned. I created a new app with an adaptive response action called "zzz_test", which definitely sorts after "Notable Event", and added just this code:

```python
import splunklib.client
import splunklib.results as results

# `helper` is the alert action helper object supplied by the add-on's alert framework.
# Log every event the correlation search passed to this action.
events = helper.get_events()
for event in events:
    helper.log_error(event)

# Connect back to splunkd with the session key of the running alert action.
splunklibClient = splunklib.client.connect(token=helper.session_key, owner='nobody', app="ta-nts-notable-forwarder")
jobs = splunklibClient.jobs

oneshot_params = {
    "earliest_time": "-1h",
    "latest_time": "now"
}

# Look up the notable event(s) created by this correlation search run.
search_query = "SEARCH `notable` | search orig_sid=\"" + helper.sid + "\""
search_results = jobs.oneshot(search_query, **oneshot_params)
reader = results.ResultsReader(search_results)

helper.log_debug(helper.sid)
for item in reader:
    helper.log_debug("ehm wtf: {}".format(item))
```

Next, I edited a correlation search and added both the "Notable Event" and "zzz_test" adaptive response actions. I still don't see the notable fields, such as event_id, in the log output, and the search does not return any results either.

I'm currently thinking of a slightly different solution. I want to create a saved search that runs every minute (* * * * *) and triggers my alert action (the real one is called "forward_notable_to_nts" rather than "zzz_test" from the example above):

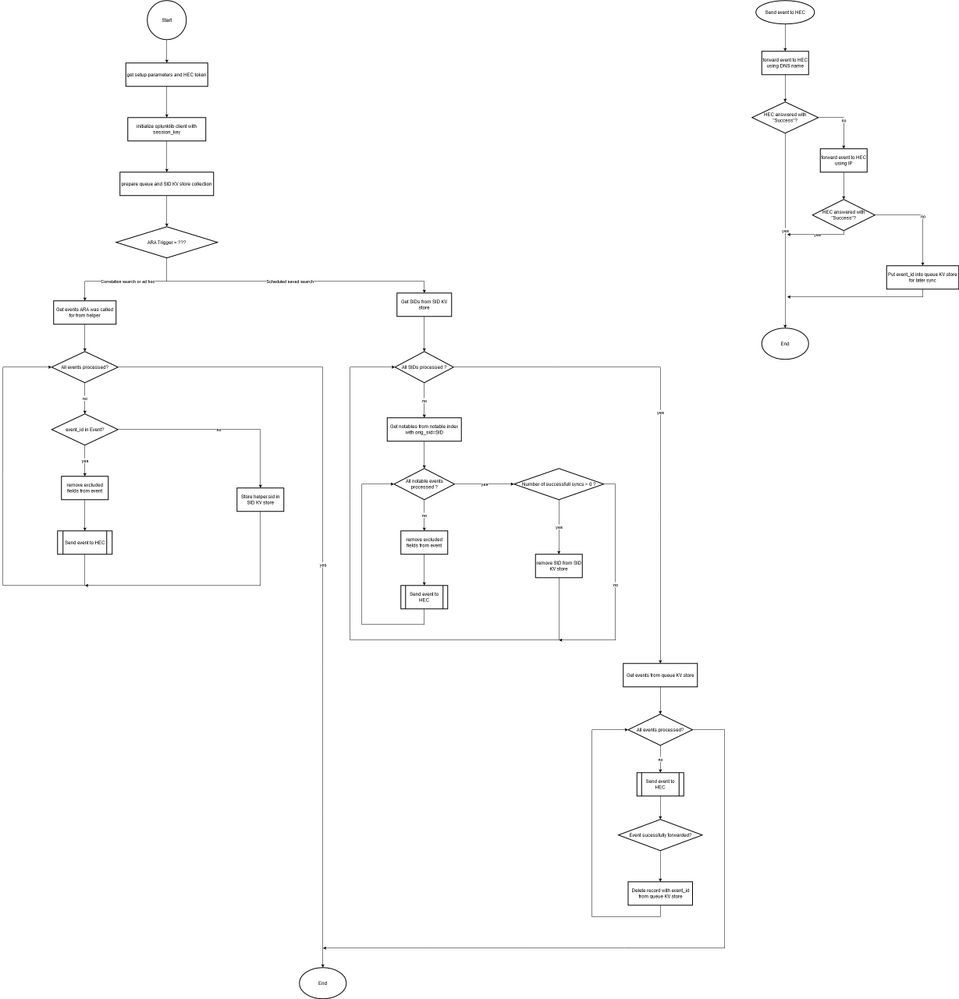

```
| makeresults | sendalert forward_notable_to_nts param.notable_sync_scheduled_run="true"
```

The adaptive response action is also still run from the correlation search. If the event_id is missing, it stores the SID (search ID) in a KV Store for later processing, once the event has been indexed and has the notable fields.
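The deferral step described above could look roughly like this Python sketch. A plain list stands in for the KV Store collection and `forward` stands in for whatever sends the event on; both, and the `handle_correlation_result` name, are hypothetical placeholders rather than Splunk or add-on APIs.

```python
from typing import Callable, Dict, List


def handle_correlation_result(
    event: Dict[str, str],
    sid: str,
    pending_sids: List[str],
    forward: Callable[[Dict[str, str]], None],
) -> str:
    """Forward the event if it already has notable fields, otherwise defer it.

    `pending_sids` is a stand-in for the KV Store collection (hypothetical);
    the real action would write a KV Store record instead of appending.
    """
    if event.get("event_id"):
        forward(event)
        return "forwarded"
    # Notable event not indexed yet: remember the SID for the scheduled run.
    if sid not in pending_sids:
        pending_sids.append(sid)
    return "deferred"


if __name__ == "__main__":
    pending: List[str] = []
    sent: List[Dict[str, str]] = []
    print(handle_correlation_result({"src": "10.0.0.5"}, "sid_1", pending, sent.append))
    print(handle_correlation_result({"event_id": "abc123"}, "sid_2", pending, sent.append))
    print(pending, sent)
```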

The adaptive response action script also checks whether it was run with the parameter "notable_sync_scheduled_run". This is how I know the script was executed by the saved search rather than by the correlation search. In that case, it checks whether there are entries in the KV Store and runs another search against the notable index, like:

```
`notable` orig_sid=<searchid>
```

This time I should get the full notable event.
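The scheduled branch could be sketched in Python as follows, under the same kind of assumptions: a list stands in for the KV Store, `run_search` stands in for a search call such as a Splunk SDK oneshot search, `params` stands in for the action's parameters, and `process_pending` and `build_orig_sid_query` are hypothetical names. The query follows the `notable` | search orig_sid="..." pattern from the zzz_test code above, with the SID escaped before quoting.

```python
from typing import Callable, Dict, List


def build_orig_sid_query(sid: str) -> str:
    """Build the notable lookup for one correlation search SID (hypothetical helper)."""
    escaped = sid.replace("\\", "\\\\").replace('"', '\\"')
    return f'search `notable` | search orig_sid="{escaped}"'


def process_pending(
    params: Dict[str, str],
    pending_sids: List[str],
    run_search: Callable[[str], List[Dict[str, str]]],
    forward: Callable[[Dict[str, str]], None],
) -> List[str]:
    """Handle the scheduled invocation; return the SIDs that are still pending."""
    # Only the saved search passes param.notable_sync_scheduled_run="true".
    if params.get("notable_sync_scheduled_run") != "true":
        return pending_sids
    still_pending = []
    for sid in pending_sids:
        notables = run_search(build_orig_sid_query(sid))
        if not notables:
            still_pending.append(sid)  # not indexed yet: retry on the next run
            continue
        for notable in notables:
            forward(notable)
    return still_pending


if __name__ == "__main__":
    fake_index = {build_orig_sid_query("sid_1"): [{"event_id": "e1", "orig_sid": "sid_1"}]}
    sent: List[Dict[str, str]] = []
    remaining = process_pending(
        {"notable_sync_scheduled_run": "true"},
        ["sid_1", "sid_2"],
        lambda q: fake_index.get(q, []),
        sent.append,
    )
    print(remaining, sent)
```

Returning the unresolved SIDs rather than clearing the store means a notable that is still not indexed is simply retried on the next one-minute run; in practice one might also want to drop entries older than some cutoff so the collection does not grow indefinitely.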

With this approach I would not need an additional app, but, you know, it's still a workaround for a pretty weird issue that, in my opinion, should work out of the box.

What do you think of this solution?

Do you know if there is a better solution?

Thanks again!

~ Julian
