Too many open files, but have a ulimit of 65536- is this too small?

robertlynch2020

Influencer

03-08-2022

04:41 AM

Hi,

I am getting "Too many open files" errors, even though my ulimit for open files is 65536.

I believe I have set up Splunk correctly, but my search head has crashed twice in the last 2 days.

Is 65536 too small? Should I try making it bigger?

bash$ cat /proc/32536/limits

| Limit | Soft Limit | Hard Limit | Units |
| --- | --- | --- | --- |
| Max cpu time | unlimited | unlimited | seconds |
| Max file size | unlimited | unlimited | bytes |
| Max data size | unlimited | unlimited | bytes |
| Max stack size | 8388608 | unlimited | bytes |
| Max core file size | unlimited | unlimited | bytes |
| Max resident set | unlimited | unlimited | bytes |
| Max processes | 790527 | 790527 | processes |
| Max open files | 65536 | 65536 | files |
| Max locked memory | 65536 | 65536 | bytes |
| Max address space | unlimited | unlimited | bytes |
| Max file locks | unlimited | unlimited | locks |
| Max pending signals | 1546577 | 1546577 | signals |
| Max msgqueue size | 819200 | 819200 | bytes |
| Max nice priority | 0 | 0 | |
| Max realtime priority | 0 | 0 | |
| Max realtime timeout | unlimited | unlimited | us |
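Since these limits are reported per process, it may be worth pulling just the open-files row for each Splunk process rather than reading the whole listing. A minimal sketch, assuming Linux with /proc mounted; the function name `nofile_limits` is made up for illustration, and `$$` (the current shell) stands in for the real splunkd PID:

```shell
# Print "soft hard" open-file limits for a PID from /proc (Linux only).
# In /proc/<pid>/limits the row reads: Max open files <soft> <hard> files,
# so the soft limit is field 4 and the hard limit is field 5.
nofile_limits() {
    awk '/^Max open files/ { print $4, $5 }' "/proc/$1/limits"
}

# Hypothetical usage: $$ is the current shell; substitute the splunkd PID.
nofile_limits "$$"
```

Run against the PID from the listing above (32536), this should print the same 65536 65536 pair.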

hp737srv autoengine /hp737srv2/apps/splunk/

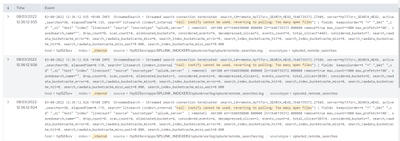

I am also getting the following messages from my 3 indexers (I have an indexer cluster).

When I run the following command one hour after startup, I can see Splunk already holding 4554 open files:

bash$ lsof -u autoengine | grep splunk | awk 'BEGIN { total = 0; } $4 ~ /^[0-9]/ { total += 1 } END { print total }'

4554
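One thing worth checking: the open-files limit applies to each process separately, while the lsof pipeline above adds up descriptors across every matching process owned by autoengine. A rough sketch of a per-process breakdown, assuming Linux with /proc and `pgrep` available (the function names `fd_count` and `fds_by_process` are made up for illustration):

```shell
# Count open descriptors for one PID by listing /proc/<pid>/fd (Linux only).
# Prints 0 if the PID does not exist or its fd directory is not readable.
fd_count() {
    ls "/proc/$1/fd" 2>/dev/null | wc -l
}

# Print "count pid command" for every process owned by a user, busiest first.
fds_by_process() {
    for pid in $(pgrep -u "$1"); do
        printf '%s %s %s\n' "$(fd_count "$pid")" "$pid" "$(cat "/proc/$pid/comm" 2>/dev/null)"
    done | sort -rn
}

# Hypothetical usage: the current user here; the post would pass autoengine.
fds_by_process "$(id -un)" | head -n 5
```

If no single process is anywhere near 65536, the total from lsof is probably not the number that matters for the crash.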

For now, I have opened a support case with Splunk, but I might have to schedule nightly restarts if it keeps happening.

In the last few months, I have set up a heavy forwarder to send HEC data to the indexers. The volume of this data has been increasing, so I am not sure whether that is the cause.

Thanks in advance
