- How to resolve lots of Splunk internal connections...

Hi,

We have a Splunk app that exposes a REST endpoint so that other applications can request metrics.

The main piece of Python code inside the method is:

    service = self.getService()
    searchjob = service.jobs.create(searchquery)

    # Poll until the search job has finished.
    while not searchjob.is_done():
        time.sleep(5)

    # count=0 requests all results instead of a single page.
    reader = results.ResultsReader(searchjob.results(count=0))
    response_data = {}
    response_data["results"] = []
    for result in reader:
        if isinstance(result, dict):
            response_data["results"].append(result)
        elif isinstance(result, results.Message):
            # Log the message instance itself, not the Message class.
            mylogger.info("action=runSearch, search = %s, msg = %s" % (searchquery, result))

    search_dict["searchjob"] = searchjob
    search_dict["searchresults"] = json.dumps(response_data)
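
The polling loop above waits for as long as the job takes. As a minimal sketch, and not something the thread itself describes, the same loop can be bounded by a deadline so that a stuck job cannot hold its caller forever. `wait_for_job` and `FakeJob` are hypothetical names used only for illustration; `FakeJob` stands in for a real search job so the example runs without a Splunk instance.

```python
import time


def wait_for_job(job, poll_interval=5.0, timeout=300.0):
    """Poll job.is_done() until it returns True or `timeout` seconds elapse.

    Returns True if the job finished, False if the deadline passed first.
    """
    deadline = time.monotonic() + timeout
    while not job.is_done():
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_interval)
    return True


class FakeJob:
    """Hypothetical stand-in for a search job: done after `polls_needed` checks."""

    def __init__(self, polls_needed):
        self.polls_left = polls_needed

    def is_done(self):
        self.polls_left -= 1
        return self.polls_left <= 0


# A job that completes on the third poll, and one that never completes.
finished = wait_for_job(FakeJob(3), poll_interval=0.01, timeout=1.0)
timed_out = wait_for_job(FakeJob(10**9), poll_interval=0.01, timeout=0.05)
print(finished, timed_out)
```

What to do when the deadline passes (log, cancel the job, return an error to the caller) is left open here, since the thread does not say how the app should behave in that case.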

The dependent application invokes the REST API at scheduled intervals. There are close to 150 calls spread across various time intervals.

Note: At any point in time there will be at most 6 search requests.

Normal scenarios:

The remote application and my Splunk app are both up and running: everything is fine.

If for some reason I have to restart the remote application, then after the restart both are up and running and everything is fine.

If for some reason I have to restart my Splunk process, then after the restart both applications are up and running and everything is fine.

Problematic scenario:

The problem starts when the system where the remote application runs is rebooted. After the reboot, the remote application starts making calls to the Splunk app, and in about 60 minutes the number of CLOSE_WAIT connections reaches 700+ and eventually the Splunk system starts throwing socket errors. Splunk Web also becomes inaccessible.

Additional Info:

- The remote application is a Python application written using the Tornado framework. It runs inside a Docker container that is managed by Kubernetes.

- ulimit -n on the Splunk system shows 1024. (I know that this is lower than the Splunk recommendation, but I would like to understand why the issue occurs only after a remote system reboot.)

- During normal times, the searches take on average 7s to complete. When the remote machine is rebooted, the searches take on average 14s to complete. (It may not make sense to relate a remote system reboot to search performance on the Splunk system, but that's the trend.)

The CLOSE_WAIT connections are all internal TCP connections:

tcp 1 0 127.0.0.1:8089 127.0.0.1:37421 CLOSE_WAIT 0 167495826 28720/splunkd

tcp 1 0 127.0.0.1:8089 127.0.0.1:32869 CLOSE_WAIT 0 167449474 28720/splunkd

tcp 1 0 127.0.0.1:8089 127.0.0.1:37567 CLOSE_WAIT 0 167497280 28720/splunkd

tcp 1 0 127.0.0.1:8089 127.0.0.1:33086 CLOSE_WAIT 0 167451533 28720/splunkd
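
To track how the count grows over time, lines like these can be tallied with a few lines of Python. This is only a sketch: it assumes the columns are protocol, Recv-Q, Send-Q, local address, remote address, state, user, inode, and PID/program, which is what the sample above appears to show, and `count_close_wait` is a hypothetical helper name.

```python
from collections import Counter

# The four sample lines from the post.
SAMPLE = """\
tcp 1 0 127.0.0.1:8089 127.0.0.1:37421 CLOSE_WAIT 0 167495826 28720/splunkd
tcp 1 0 127.0.0.1:8089 127.0.0.1:32869 CLOSE_WAIT 0 167449474 28720/splunkd
tcp 1 0 127.0.0.1:8089 127.0.0.1:37567 CLOSE_WAIT 0 167497280 28720/splunkd
tcp 1 0 127.0.0.1:8089 127.0.0.1:33086 CLOSE_WAIT 0 167451533 28720/splunkd
"""


def count_close_wait(netstat_text):
    """Count CLOSE_WAIT sockets per (local address, owning process)."""
    counts = Counter()
    for line in netstat_text.splitlines():
        fields = line.split()
        # Assumed columns: proto recv-q send-q local remote state user inode pid/program
        if len(fields) >= 9 and fields[5] == "CLOSE_WAIT":
            counts[(fields[3], fields[8])] += 1
    return counts


print(count_close_wait(SAMPLE))
```

A socket is in CLOSE_WAIT when the peer has already sent its FIN and the local process has not yet closed its end, so grouping by local address and owning process shows which process is holding the sockets open. Here that is splunkd on port 8089.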

Any help or pointers are highly appreciated.

Thanks,

Strive

The issue is resolved.

It was due to the _GLOBAL_DEFAULT_TIMEOUT of socket.py.

To fix it, we now set the timeout explicitly before opening the connection.
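
The post does not show the actual change, so the following is only a sketch of what setting a timeout explicitly can look like with the standard `socket` module. In CPython, `_GLOBAL_DEFAULT_TIMEOUT` is a sentinel meaning "use `socket.getdefaulttimeout()`", and that default is `None` (block indefinitely) unless something changes it. The 0.5 second value and the throwaway loopback listener are assumptions made so the example is self-contained.

```python
import socket

TIMEOUT_SECONDS = 0.5  # illustrative value, not taken from the thread

# Throwaway loopback listener that accepts the TCP handshake but never replies.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))
listener.listen(1)

# Option 1: pass a timeout for this one connection.
conn = socket.create_connection(listener.getsockname(), timeout=TIMEOUT_SECONDS)
explicit_timeout = conn.gettimeout()

# Nothing is ever sent, so the read gives up after the timeout
# instead of blocking forever.
try:
    conn.recv(1)
    timed_out = False
except socket.timeout:
    timed_out = True
finally:
    conn.close()

# Option 2: change the process-wide default picked up by new sockets.
previous_default = socket.getdefaulttimeout()
socket.setdefaulttimeout(TIMEOUT_SECONDS)
sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
default_applied = sock.gettimeout()
sock.close()
socket.setdefaulttimeout(previous_default)  # restore the earlier default

listener.close()
print(explicit_timeout, timed_out, default_applied)
```

Option 1 affects a single connection. Option 2 affects every socket created afterwards in the process, including ones opened by libraries, so it is the broader change of the two.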

I don't believe that you will be able to fix this with Splunk tuning. Have you looked at what the remote application is doing and how the requests are being opened? More information on the remote application would be helpful. Here's a link that might be helpful:

http://unix.stackexchange.com/questions/10106/orphaned-connections-in-close-wait-state

Increased ulimit to 64000.

Still seeing the issue. The moment the CLOSE_WAIT connection count reaches 1350+, Splunk stops responding to REST API calls and Splunk Web stops responding.

But if we check the Splunk status, it says Splunk is running.

After a few restarts of Splunk, the system no longer stops working after 1350 CLOSE_WAIT connections.

But the number of CLOSE_WAIT connections just keeps growing. In the latest test there were 5477 CLOSE_WAIT connections after 6 hours of run time.

Why is the Splunk management server not closing the connections?

The next step is to analyze the usage of CherryPy in Splunk.

The tcpdump details

Pattern when connections are getting closed properly:

127.0.0.1.50764 > 127.0.0.1.8089: Flags [.], cksum

127.0.0.1.50764 > 127.0.0.1.8089: Flags [R.], cksum

OR

127.0.0.1.8089 > 127.0.0.1.54338: Flags [F.], cksum

127.0.0.1.54338 > 127.0.0.1.8089: Flags [R.], cksum

Pattern when connections are not getting closed properly and connections end up in CLOSE_WAIT

127.0.0.1.50771 > 127.0.0.1.8089: Flags [F.], cksum

127.0.0.1.8089 > 127.0.0.1.50771: Flags [.], cksum
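
In the second pattern the client's FIN is acknowledged, but the side listening on 8089 never sends a FIN of its own, which is what leaves its socket in CLOSE_WAIT. The sketch below reproduces the mechanism on a throwaway loopback socket and is not Splunk code: the accepting side sees the peer's close as an empty read, and its socket leaves CLOSE_WAIT only once the application calls `close()`.

```python
import socket

# Throwaway loopback listener on an ephemeral port.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))
listener.listen(1)

client = socket.create_connection(listener.getsockname(), timeout=2.0)
server_side, _ = listener.accept()
server_side.settimeout(2.0)

client.sendall(b"ping")
client.close()  # the client sends its FIN here

# Read until recv() returns b"", which signals that the peer has closed.
chunks = []
while True:
    chunk = server_side.recv(1024)
    if not chunk:
        break
    chunks.append(chunk)
received = b"".join(chunks)

# From the peer's FIN until the close() below, server_side is in CLOSE_WAIT.
# If the application never reaches this call, the socket stays there.
server_side.close()
listener.close()
print(received, server_side.fileno())
```

The kernel does not time out CLOSE_WAIT on its own, because it is waiting for the application rather than the network. This matches the observation in the thread that the oldest connection was still in that state hours later.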

I completely agree that the Splunk-recommended value for the hard fd limit is 64000.

What I would like to understand is: when will Splunk close these CLOSE_WAIT connections? I did not see any default configuration that we can override so that the CLOSE_WAIT connections are closed immediately to free up the resources.

When the Splunk server starts, I see that the messages it prints are:

WARN main - The hard fd limit is lower than the recommended value. The hard limit is '4096' The recommended value is '64000'.

ulimit - Limit: virtual address space size: unlimited

ulimit - Limit: data segment size: unlimited

ulimit - Limit: resident memory size: unlimited

ulimit - Limit: stack size: 10485760 bytes [hard maximum: unlimited]

ulimit - Limit: core file size: unlimited

ulimit - Limit: data file size: unlimited

ulimit - Limit: open files: 4096 files

ulimit - Limit: user processes: 515270 processes

ulimit - Limit: cpu time: unlimited

ulimit - Linux transparent hugetables support, enabled="always" defrag="always"

----

----

----

loader - Detected 16 CPUs, 16 CPU cores, and 64426MB RAM

If we go with 33% of 4096, that is approximately 1352. The Splunk application responds to all requests until the total CLOSE_WAIT TCP connection count reaches ~1360.

I can increase ulimit -n provided there is a guarantee that the CLOSE_WAIT connections are going to be closed at some point. But that's not happening. The count just keeps increasing. During monitoring I noticed that the first CLOSE_WAIT connection stays in the same state even after 5 hours.
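
The arithmetic behind those figures, as a short sketch. The 33% share is taken from the post as stated and is not verified here against Splunk documentation; `approx_share` is a hypothetical helper name.

```python
def approx_share(fd_limit, fraction):
    """Return the given fraction of a file-descriptor limit, rounded."""
    return round(fd_limit * fraction)


# 33% of the hard limit that splunkd reported at startup.
print(approx_share(4096, 0.33))   # 1352

# One third of the 1024 default mentioned elsewhere in the thread.
print(approx_share(1024, 1 / 3))  # 341
```

The observed stall at roughly 1360 connections sits between 33% of 4096 (1352) and exactly one third of it (1365), so it is consistent with the limit that was in effect when splunkd started.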

Hi Strive,

There is a very interesting thread at "What is the cause of these socket errors reported in splunkd.log since upgrading to 6.0?"

@hexx says -

Therefore, I would suggest increasing ulimit -n first. It's not worth your time to do it the other way around ;-)

At some point these CLOSE_WAIT connections will reach whatever limit we set by increasing ulimit -n.

Increasing ulimit will just give some breathing space. Correct me if I am wrong.

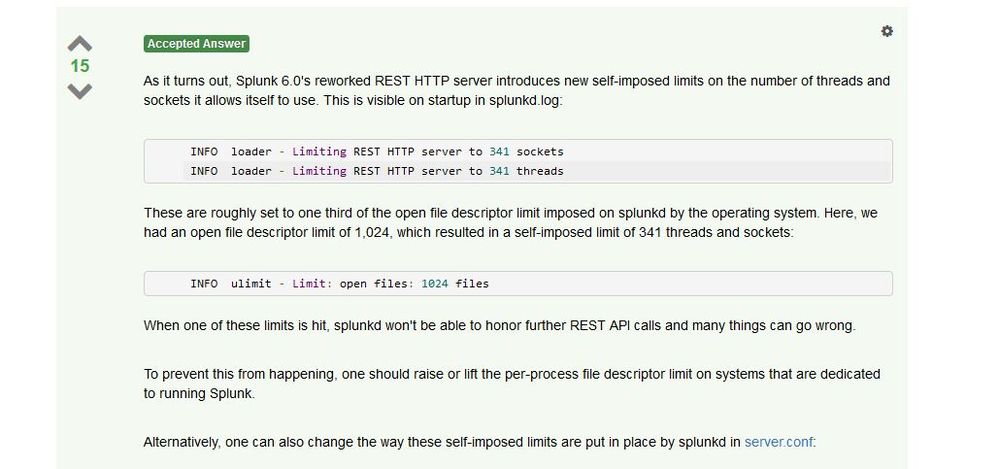

It's interesting because the default of 1024 on many systems, which translates into 341 in practice according to the post, is way off the recommended value of 64000, or even the unlimited value that is available. So there is a huge gap between this default value and a good possible value.

Look, this 1024 value is not good enough in many cases even for plain forwarders ;-) not to mention Splunk itself.

Obviously I don't know if it would solve your issue, but you need to increase 1024 in any event. It's not even a value supported by Splunk.
