Splunk Forwarders Buffer size and best practices?

Hi Splunkers, I have some doubts about the forwarder buffer, for both universal and heavy forwarders.

The starting point is this: I know that if an indexer that is receiving data from a UF goes down, the UF has a buffering mechanism to store the data and send it to the proper destination once the indexer is up and running again. If I'm not wrong, the limits of this buffer can be set in a config file (I don't remember exactly which one). Now, the questions are:

1. Even if the answer may be obvious: is this mechanism also available for HF?

2. How can I decide the maximum size of my buffer? Is there a preset limit, or does it depend on my environment?

theses attributes in outputs.conf will take care these

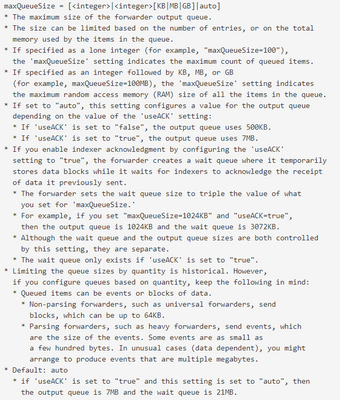

maxQueueSize

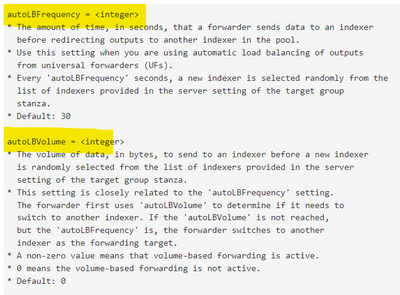

autoLBFrequency

autoLBVolume

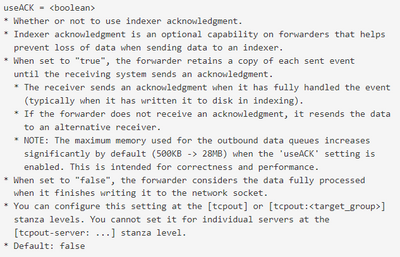

useAck

https://docs.splunk.com/Documentation/Splunk/8.2.7/Admin/Outputsconf
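
To make the attributes listed above more concrete, here is a minimal sketch of how they might appear together in a forwarder's outputs.conf. The group name `primary_indexers`, the host names, the port, and all the values are assumptions chosen for illustration, not recommendations; check the outputs.conf spec for your version before copying anything.

```ini
# outputs.conf on the forwarder (illustrative sketch; names and values are assumptions)
[tcpout]
defaultGroup = primary_indexers

[tcpout:primary_indexers]
# Hypothetical indexer addresses; replace with your own receivers.
server = idx1.example.com:9997, idx2.example.com:9997

# Size of the in-memory output queue. Accepts "auto" or a size with a
# KB/MB/GB suffix. 10MB is an arbitrary example value.
maxQueueSize = 10MB

# Ask the indexers to acknowledge received data, so that data that was
# never acknowledged can be sent again.
useACK = true

# Switch to another indexer in the group every 30 seconds...
autoLBFrequency = 30
# ...or once roughly this many bytes have been sent to the current one
# (0 turns the volume-based switch off).
autoLBVolume = 1048576
```

Note that comments go on their own lines, and that the output queue lives in memory, so whatever size is chosen has to fit in the RAM available on the forwarder host.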

Hi @SanjayReddy, thanks a lot. I didn't remember the auto* settings; I only remembered useACK and maxQueueSize. Very useful.

About maxQueueSize, are there any sources I can use to understand the best/maximum value I can set in my environment? I ask because my final question is: what is the maximum value I can set for maxQueueSize? Does it depend on my hardware, or is there a limit I cannot exceed?