How to create a report that shows new log sources added in Splunk?

Narcisse

Loves-to-Learn

01-09-2023

09:33 AM

I am new to Splunk. I need help creating a report that shows new log sources that have been added to Splunk.

Narcisse

Loves-to-Learn

01-25-2023

02:19 PM

Hello @gcusello

I just want to know whether you have a new suggestion that will fix my error.

gcusello

SplunkTrust

01-09-2023

11:57 PM

Hi @Narcisse,

you can run a simple search like the following:

    | metadata index=* earliest=-30d@d latest=now
    | stats
        earliest(_time) AS earliest
        latest(_time) AS latest
        values(index) AS index
        values(host) AS host
        BY sourcetype
    | where latest-earliest<86400
    | eval
        earliest=strftime(earliest,"%Y-%m-%d %H:%M:%S"),
        latest=strftime(latest,"%Y-%m-%d %H:%M:%S")

In this way you can check for data that arrived in the last 24 hours and was not present in the previous 29 days.

Ciao.

Giuseppe

Narcisse

Loves-to-Learn

01-10-2023

11:00 AM

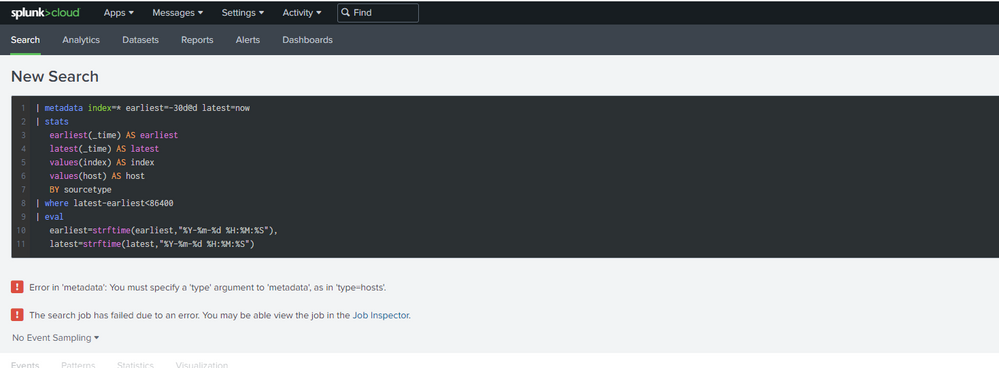

Thanks for your response, but I am getting these messages:

Error in 'metadata': You must specify a 'type' argument to 'metadata', as in 'type=hosts'.

The search job has failed due to an error. You may be able view the job in the Job Inspector.
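The first message refers to the `type` argument that `metadata` requires (`hosts`, `sources`, or `sourcetypes`). A minimal sketch of a variant that satisfies it, assuming sourcetypes are the "log sources" of interest: `metadata` returns `firstTime`, `lastTime`, `recentTime`, and `totalCount` for each sourcetype rather than `_time`, so the filter below works on `firstTime`. The 24-hour threshold is an illustrative choice, not something taken from the thread.

```spl
| metadata type=sourcetypes index=*
| where firstTime >= relative_time(now(), "-24h")
| eval firstTime=strftime(firstTime, "%Y-%m-%d %H:%M:%S"),
       lastTime=strftime(lastTime, "%Y-%m-%d %H:%M:%S")
| table sourcetype firstTime lastTime totalCount
```

With `type=sourcetypes` the results carry no per-index or per-host breakdown, so this only answers "which sourcetypes were first seen recently"; run it over a time range long enough (for example the last 30 days) for `firstTime` to be meaningful.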

gcusello

SplunkTrust

01-26-2023

04:08 AM

Hi @Narcisse,

please try this:

    | tstats earliest(_time) AS earliest latest(_time) AS latest values(host) AS host WHERE earliest=-30d@d latest=now BY sourcetype index
    | where latest-earliest<86400
    | eval
        earliest=strftime(earliest,"%Y-%m-%d %H:%M:%S"),
        latest=strftime(latest,"%Y-%m-%d %H:%M:%S")

Ciao.

Giuseppe
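One point about the `where latest-earliest<86400` filter: it keeps every sourcetype and index pair whose events within the 30-day window span less than one day, which can also include a source that sent data briefly some weeks ago and then stopped. If the goal is strictly "first seen in the last 24 hours", a possible variant (a sketch, assuming the same 30-day lookback and adding `index=*` so the search is not limited to default indexes) filters on the earliest timestamp instead:

```spl
| tstats earliest(_time) AS earliest latest(_time) AS latest values(host) AS host
    WHERE index=* earliest=-30d@d latest=now
    BY sourcetype index
| where earliest >= relative_time(now(), "-24h")
| eval earliest=strftime(earliest, "%Y-%m-%d %H:%M:%S"),
       latest=strftime(latest, "%Y-%m-%d %H:%M:%S")
```

The `where` clause has to run before the `eval`, because `strftime` turns the epoch values into strings that can no longer be compared numerically. Saving the search as a report and scheduling it daily would give a recurring list of newly seen sources.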
