Why are the queues being filled up on one indexer?

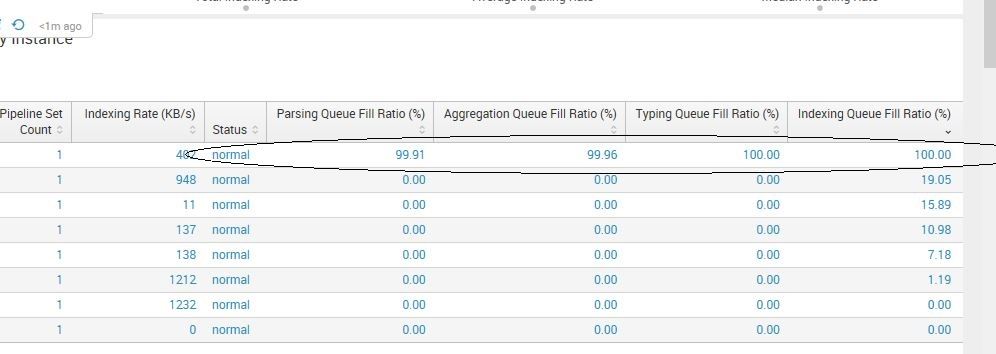

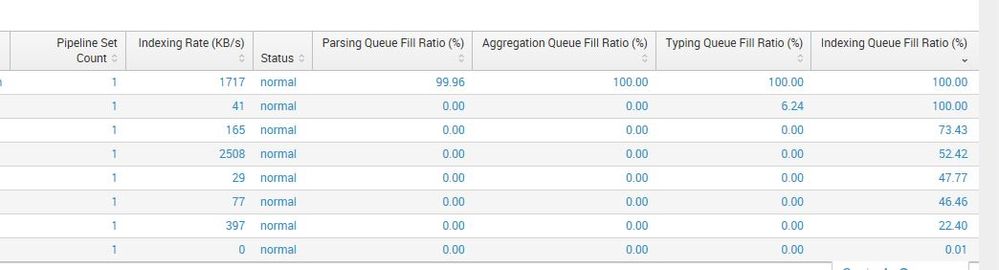

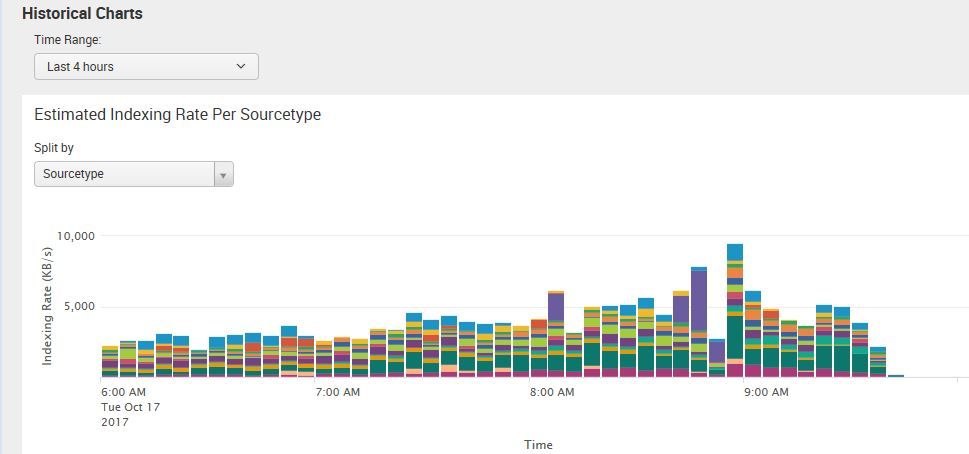

In the last day or two, all the queues on one indexer filled up. We bounced it, and now all the queues on another indexer are close to 100%. What could be causing this?

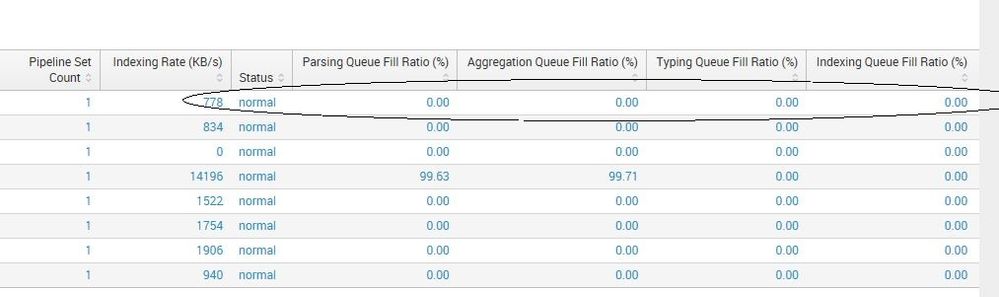

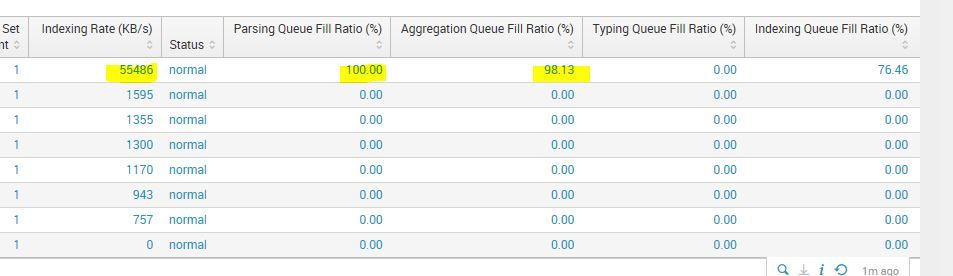

Normally, and this has held for months, all the queues would be nearly empty at this point of the day. However, h2709 is still pretty bad -