Search Head Cluster Slow performance

The cluster members are on version 6.2.1, and there are three search head cluster (SHC) members.

*Issue:*

1) When a user runs an ad hoc search from the search head cluster captain (even a simple search such as index= ), the results start to stream right away. Also, while the search is being typed in the search bar, the matching-search suggestions are displayed.

2) On the other hand, when the same ad hoc search is run from a non-captain SHC member, the search bar turns grey after the search is submitted, and at first no results are streamed. After some delay the results arrive in a burst, and the message **"Gave up waiting for the captain to establish a common bundle version across all search peers; using most recent bundles on all peers instead"** is displayed. Also, on non-captain SHC members the matching-search suggestions never appear while the search is being typed.

Hi everyone, ours is a small environment with 2 SHs and 3 indexers. Recently, after a resync on the SH cluster, I see the error below and the SHs seem to be very slow. Is there a way to sort this out?

This is the error/warning message I see in the Monitoring Console (MC), and I also get it when running ad hoc searches:

"Gave up waiting for the captain to establish a common bundle version across all search peers; using most recent bundles on all peers instead" @rbal_splunk, it looks like you have already answered this; can you please help here?

Hi,

You should create a separate question for this, as this thread is quite old and the issue is probably different.

r. Ismo

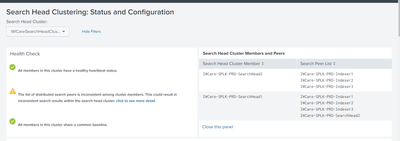

1) Errors on SHC members *01 and *03:

03-25-2015 16:40:13.914 -0700 WARN ISplunkDispatch - sid:1427326803.763_75811D00-D496-4CA9-AC7F-B59481D41E24 Gave up waiting for the captain to establish a common bundle version across all search peers; using most recent bundles on all peers instead

03-25-2015 16:45:12.032 -0700 WARN ISplunkDispatch - sid:scheduler_admin_U0Etbml4_RMD5b9b800e209365567_at_1427327100_2890_5AA49DE9-479C-46D9-94ED-E953671E85FD Gave up waiting for the captain to establish a common bundle version across all search peers; using most recent bundles on all peers instead
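As a quick way to see which members are hitting this warning, here is a minimal bash sketch that counts it per member. It assumes each member's logs were collected into a directory laid out like the diag paths quoted in this thread (for example helloserver01_0/log/splunkd.log.1); `count_bundle_warnings` is a hypothetical helper name, not a Splunk tool.

```bash
# Hypothetical helper: count the bundle-version warning per SHC member.
# Assumes a layout like <member>_0/log/splunkd.log, splunkd.log.1, ...
count_bundle_warnings() {
  local dir member n
  for dir in "$@"; do
    member=$(basename "$dir")
    # Concatenate the current and rotated logs, then count matching lines.
    # grep -c prints 0 and exits 1 when nothing matches; "|| true" keeps that 0.
    n=$(cat "$dir"/log/splunkd.log* 2>/dev/null \
          | grep -c 'Gave up waiting for the captain to establish a common bundle version' \
          || true)
    printf '%s %s\n' "$member" "$n"
  done
}
```

For example, run `count_bundle_warnings helloserver0*_0` from the directory holding the collected diags. A member that reports a non-zero count has dispatched searches without a common bundle version across its peers.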

2) It looks like *02 is the most recently elected captain, i.e. *02 is the captain while the ISplunkDispatch WARNs are being thrown:

helloserver01_0/log/splunkd.log.1:03-13-2015 15:32:48.499 -0700 INFO SHPRaftConsensus - All hail leader https://helloserver.sce.com:8089 for term 11

helloserver03_0/log/splunkd.log.2:03-13-2015 15:14:13.280 -0700 INFO SHPRaftConsensus - All hail leader https://helloserver.sce.com:8089 for term 11
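Finding the current captain can be automated the same way. This minimal sketch, again assuming the collected-log layout shown above, pulls every "All hail leader <uri> for term <N>" line and prints the leader with the highest term; `latest_captain` is a hypothetical helper name.

```bash
# Hypothetical helper: print "<term> <leader-uri>" for the highest Raft term
# found in the given splunkd.log files. sed rewrites each matching line as
# "<term> <uri>"; sort -n ranks terms numerically (so term 11 beats term 9);
# tail keeps the highest one.
latest_captain() {
  cat "$@" 2>/dev/null \
    | sed -n 's/.*All hail leader \([^ ]*\) for term \([0-9][0-9]*\).*/\2 \1/p' \
    | sort -n -k1,1 \
    | tail -n 1
}
```

For example, `latest_captain helloserver0*_0/log/splunkd.log*`. The numeric sort matters: a plain text sort would place term 9 after term 11.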

3) The issue is caused by a misconfiguration in which the captain does not have the same search peers as the other members:

$ find */etc/{apps,system} -name distsearch.conf | grep local | xargs grep --color 'servers ='

helloserver01_0/etc/system/local/distsearch.conf:servers = helloserver01:8089,helloserver02:8089,helloserver03:8089,helloserver04:8089,helloserver05:8089,helloserver07:8089,helloserver08:8089,helloserver09:8089,helloserver10:8089,helloserver11:8089,helloserver12:8089,helloserver13:8089,helloserver14:8089,helloserver15:8089,helloserver16:8089,helloserver17:8089,helloserver18:8089,helloserver19:8089,helloserver20:8089,helloserver21:8089,helloserver22:8089,helloserver23:8089,helloserver24:8089,helloserver25:8089,helloserver26:8089,helloserver27:8089,helloserver28:8089,helloserver06:8089,helloserver29:8089,helloserver30:8089,helloserver31:8089,helloserver32:8089,helloserver33:8089,helloserver34:8089,helloserver35:8089,helloserver36:8089,helloserver37:8089,helloserver38:8089

helloserver03_0/etc/system/local/distsearch.conf:servers = helloserver01:8089,helloserver02:8089,helloserver03:8089,helloserver04:8089,helloserver05:8089,helloserver06:8089,helloserver07:8089,helloserver08:8089,helloserver09:8089,helloserver10:8089,helloserver11:8089,helloserver12:8089,helloserver13:8089,helloserver14:8089,helloserver15:8089,helloserver16:8089,helloserver17:8089,helloserver18:8089,helloserver19:8089,helloserver20:8089,helloserver21:8089,helloserver22:8089,helloserver23:8089,helloserver24:8089,helloserver25:8089,helloserver26:8089,helloserver27:8089,helloserver28:8089,helloserver29:8089,helloserver30:8089,helloserver31:8089,helloserver32:8089,helloserver33:8089,helloserver34:8089,helloserver35:8089,helloserver36:8089,helloserver37:8089,helloserver38:8089

helloserver04_0/etc/system/local/distsearch.conf:servers = helloserver29:8089,helloserver30:8089,helloserver31:8089,helloserver32:8089,helloserver33:8089,helloserver34:8089,helloserver35:8089,helloserver36:8089,helloserver37:8089,helloserver38:8089

This is causing bundle replication to be "permanently incomplete".
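Comparing long `servers =` lines by eye is error-prone, especially since the order of entries does not matter. Below is a minimal bash sketch that sorts each file's peer list and reports which files differ from the first one. It assumes each distsearch.conf keeps its whole peer list on a single `servers =` line (no backslash line continuations) and only looks at the files you pass in; `peer_set` and `compare_peer_sets` are hypothetical helper names.

```bash
# Hypothetical helper: print the sorted, de-duplicated peer list from the
# first "servers =" line of a distsearch.conf (single-line lists only).
peer_set() {
  sed -n 's/^[[:space:]]*servers[[:space:]]*=[[:space:]]*//p' "$1" \
    | head -n 1 \
    | tr ',' '\n' \
    | sed 's/^[[:space:]]*//; s/[[:space:]]*$//' \
    | grep -v '^$' \
    | sort -u
}

# Hypothetical helper: compare every file's peer set with the first file's.
# Prints MATCH/DIFF per file and returns 1 if any file differs.
compare_peer_sets() {
  local ref="$1" f status=0
  shift
  for f in "$@"; do
    if diff <(peer_set "$ref") <(peer_set "$f") >/dev/null; then
      printf 'MATCH %s\n' "$f"
    else
      printf 'DIFF  %s\n' "$f"
      status=1
    fi
  done
  return "$status"
}
```

For example, pass helloserver01_0/etc/system/local/distsearch.conf first, followed by the 03_0 and 04_0 files. Going by the lines quoted above, 01 and 03 list the same 38 peers (only helloserver06:8089 sits in a different position), so 03 should report MATCH, while 04 lists only helloserver29 through helloserver38 and should report DIFF.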

Per our docs:

All search heads must use the same set of search peers.

Please make sure that all SHC members use the same set of search peers; that should resolve the issue.

Yes, I had the same issue, but on just a couple of members in a large SHC. It turned out a couple of them had a distsearch.conf in system/local. Thanks!