- Find Answers

- :

- Splunk Administration

- :

- Monitoring Splunk

- :

- Query performance question : using pipe vs inline ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Query performance question : using pipe vs inline function

Hello,

I needed to count total and two individual error Conditions.

I used below two query and I was surprised to see both ran in same time for a count of total 100k rows.

Option 1) index=<> sourcetype=<> Exception_Stack="*ClassNotFound*" | eval err=case((TYPE_OF_ERROR=0) , "SUCCESS", (TYPE_OF_ERROR=403 ) "Alert",1=1, "Others") | stats count by err

Option 2) index=<> sourcetype=<> Exception_Stack="*ClassNotFound*" | stats count as Total, count(eval(TYPE_OF_ERROR=0)) as Success , count(eval(TYPE_OF_ERROR=403)) as Alert

But As per my understanding second should have perform better because in this case all the operations would be done in single traverse, however in first case it would be traversing the results multiple time.

I would like to know your views.

Thanks in advance

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

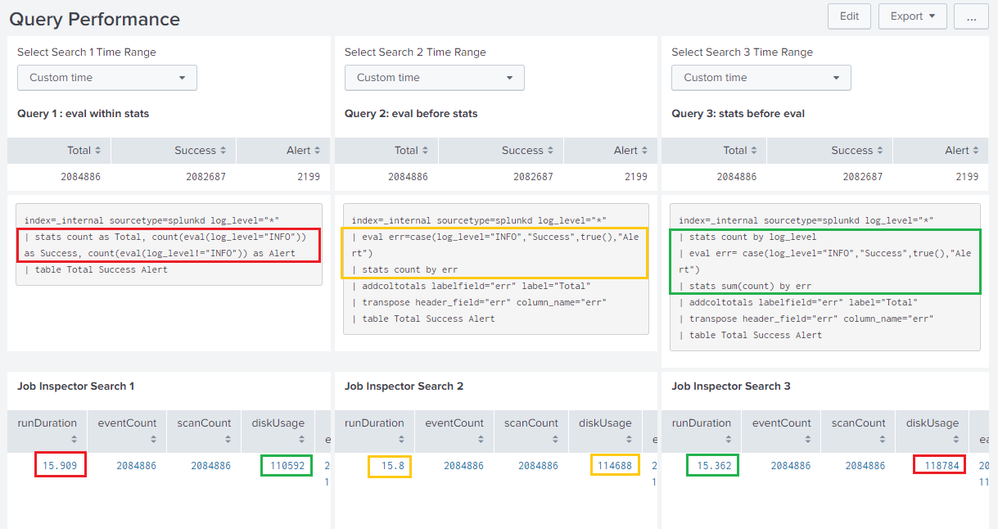

@jagdeepgupta813, even before looking at performance aspect of the queries, based on the output the two queries are different. One keeps the counts as column and other as rows. So I have made some changes to the queries so that they output the same result we are interested in.

Having said that, I would think that both queries i.e. (1) stats with eval and (2) eval first followed by stats are similar as in they will work with all the events in streaming manner so their duration is similar. For improving performance you would need a different approach to (3) perform stats first (aggregate the data into smaller buckets) and then use eval (on aggregated results). This is debatable though as stats being a non-streaming command and eval being streaming command, stats should be delayed as much as possible, to get the complete result set.

While this is over-simplified, actual performance would be dependent on a lot of factors directly or indirectly related to the type of command being used like:

1) built in query optimization based on the SPL being executed

2) which commands run on indexers (parallel processing with streaming commands) and which will run only on Search Head

3) what is the each load on each node and their status (specially in clustered environment).

Refer to the following documentation on Search Command Optimization: https://docs.splunk.com/Documentation/Splunk/latest/Search/Quicktipsforoptimization

http://docs.splunk.com/Documentation/Splunk/latest/Search/Writebettersearches

It would hence be better to use Job Inspector on various runs of these searches over a long period to fetch a lot of data and compare the results.

Following is a run any where example using Splunk's _internal index with the query and output similar to yours. The three query approaches as mentioned above have been used to generate the same results and Search Job SID is used to pull Job related details using REST API. I have run these on my personal system. So you should better check the same in your environment

Following is the Simple XML Code for run anywhere dashboard:

<form>

<label>Query Performance</label>

<fieldset submitButton="false"></fieldset>

<row>

<panel>

<input type="time" token="tokTimeSearch1" searchWhenChanged="true">

<label>Select Search 1 Time Range</label>

<default>

<earliest>-15d@d</earliest>

<latest>-1d@d</latest>

</default>

</input>

<table>

<title>Query 1 : eval within stats</title>

<search>

<done>

<set token="tokSearch1JobID">$job.sid$</set>

</done>

<query>index=_internal sourcetype=splunkd log_level="*"

| stats count as Total, count(eval(log_level="INFO")) as Success, count(eval(log_level!="INFO")) as Alert

| table Total Success Alert</query>

<earliest>$tokTimeSearch1.earliest$</earliest>

<latest>$tokTimeSearch1.latest$</latest>

<sampleRatio>1</sampleRatio>

</search>

<option name="count">20</option>

<option name="dataOverlayMode">none</option>

<option name="drilldown">none</option>

<option name="percentagesRow">false</option>

<option name="refresh.display">progressbar</option>

<option name="rowNumbers">false</option>

<option name="totalsRow">false</option>

<option name="wrap">true</option>

</table>

</panel>

<panel>

<input type="time" token="tokTimeSearch2" searchWhenChanged="true">

<label>Select Search 2 Time Range</label>

<default>

<earliest>-15d@d</earliest>

<latest>-1d@d</latest>

</default>

</input>

<table>

<title>Query 2: eval before stats</title>

<search>

<done>

<set token="tokSearch2JobID">$job.sid$</set>

</done>

<query>index=_internal sourcetype=splunkd log_level="*"

| eval err=case(log_level="INFO","Success",true(),"Alert")

| stats count by err

| addcoltotals labelfield="err" label="Total"

| transpose header_field="err" column_name="err"

| table Total Success Alert</query>

<earliest>$tokTimeSearch2.earliest$</earliest>

<latest>$tokTimeSearch2.latest$</latest>

<sampleRatio>1</sampleRatio>

</search>

<option name="count">20</option>

<option name="dataOverlayMode">none</option>

<option name="drilldown">none</option>

<option name="percentagesRow">false</option>

<option name="rowNumbers">false</option>

<option name="totalsRow">false</option>

<option name="wrap">true</option>

</table>

</panel>

<panel>

<input type="time" token="tokTimeSearch3" searchWhenChanged="true">

<label>Select Search 3 Time Range</label>

<default>

<earliest>-15d@d</earliest>

<latest>-1d@d</latest>

</default>

</input>

<table>

<title>Query 3: stats before eval</title>

<search>

<done>

<set token="tokSearch3JobID">$job.sid$</set>

</done>

<query>index=_internal sourcetype=splunkd log_level="*"

| stats count by log_level

| eval err= case(log_level="INFO","Success",true(),"Alert")

| stats sum(count) by err

| addcoltotals labelfield="err" label="Total"

| transpose header_field="err" column_name="err"

| table Total Success Alert</query>

<earliest>$tokTimeSearch3.earliest$</earliest>

<latest>$tokTimeSearch3.latest$</latest>

<sampleRatio>1</sampleRatio>

</search>

<option name="count">20</option>

<option name="dataOverlayMode">none</option>

<option name="drilldown">none</option>

<option name="percentagesRow">false</option>

<option name="rowNumbers">false</option>

<option name="totalsRow">false</option>

<option name="wrap">true</option>

</table>

</panel>

</row>

<row>

<panel>

<html>

<pre>index=_internal sourcetype=splunkd log_level="*"

| stats count as Total, count(eval(log_level="INFO")) as Success, count(eval(log_level!="INFO")) as Alert

| table Total Success Alert</pre>

</html>

</panel>

<panel>

<html>

<pre>index=_internal sourcetype=splunkd log_level="*"

| eval err=case(log_level="INFO","Success",true(),"Alert")

| stats count by err

| addcoltotals labelfield="err" label="Total"

| transpose header_field="err" column_name="err"

| table Total Success Alert</pre>

</html>

</panel>

<panel>

<html>

<pre>index=_internal sourcetype=splunkd log_level="*"

| stats count by log_level

| eval err= case(log_level="INFO","Success",true(),"Alert")

| stats sum(count) by err

| addcoltotals labelfield="err" label="Total"

| transpose header_field="err" column_name="err"

| table Total Success Alert</pre>

</html>

</panel>

</row>

<row depends="$tokSearch1JobID$,$tokSearch2JobID$,$tokSearch2JobID$">

<panel>

<table>

<title>Job Inspector Search 1</title>

<search>

<query>| rest /services/search/jobs/$tokSearch1JobID$

| table runDuration eventCount scanCount diskUsage earliestTime latestTime normalizedSearch optimizedSearch request.search reduceSearch reportSearch</query>

<earliest>$earliest$</earliest>

<latest>$latest$</latest>

</search>

<option name="refresh.display">progressbar</option>

</table>

</panel>

<panel>

<table>

<title>Job Inspector Search 2</title>

<search>

<query>| rest /services/search/jobs/$tokSearch2JobID$

| table runDuration eventCount scanCount diskUsage earliestTime latestTime normalizedSearch optimizedSearch request.search reduceSearch reportSearch</query>

<earliest>$earliest$</earliest>

<latest>$latest$</latest>

</search>

<option name="refresh.display">progressbar</option>

</table>

</panel>

<panel>

<table>

<title>Job Inspector Search 3</title>

<search>

<query>| rest /services/search/jobs/$tokSearch3JobID$

| table runDuration eventCount scanCount diskUsage earliestTime latestTime normalizedSearch optimizedSearch request.search reduceSearch reportSearch</query>

<earliest>$earliest$</earliest>

<latest>$latest$</latest>

</search>

<option name="refresh.display">progressbar</option>

</table>

</panel>

</row>

</form>

| makeresults | eval message= "Happy Splunking!!!"

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Try running both for much larger number of records.