
Universal forwarder is taking about 30GB of memory...


Hi

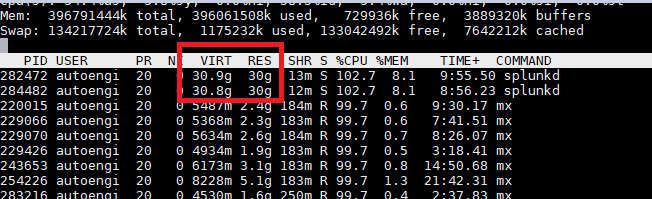

My universal forwarder is using about 30GB of memory, and my IT team is asking whether this is normal.

I have just restarted it and then upgraded it to the latest version, 7.1.1, but within 20 minutes it went from 500MB back to 30GB VIRT and RES. This seems like a lot to me, or is this just the way Linux uses memory?
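To answer "is this just the way Linux uses memory?", it helps to separate the virtual size (VIRT in top, `VmSize` in `/proc/<pid>/status`) from resident memory (RES in top, `VmRSS`). A minimal Python sketch, assuming a Linux host with `/proc` mounted; `parse_proc_status` and `read_memory_kb` are hypothetical helper names, and you would pass splunkd's PID (for example from `pgrep splunkd`):

```python
import os
import re

# Matches the two /proc/<pid>/status fields that correspond to top's VIRT and RES.
FIELD = re.compile(r"^(VmSize|VmRSS):\s+(\d+)\s+kB", re.MULTILINE)


def parse_proc_status(text):
    """Return {'VmSize': kB, 'VmRSS': kB} from the text of /proc/<pid>/status."""
    return {name: int(value) for name, value in FIELD.findall(text)}


def read_memory_kb(pid):
    """Read VIRT (VmSize) and RES (VmRSS) for a live process; Linux only."""
    with open(f"/proc/{pid}/status") as handle:
        return parse_proc_status(handle.read())


if __name__ == "__main__" and os.path.exists("/proc/self/status"):
    # Demo: inspects this Python process itself; pass splunkd's PID instead.
    mem = read_memory_kb(os.getpid())
    for field, label in (("VmSize", "VIRT"), ("VmRSS", "RES")):
        print(f"{label}: {mem.get(field, 0) / 1024**2:.2f} GiB")
```

A large VIRT on its own is often harmless, since it includes address space that was reserved but never touched. RES is the physical memory the process actually holds, so a RES figure near 30GB is real usage rather than a reporting quirk.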

Thanks in advance

Robert


Hi

We found the issue: Splunk was monitoring over 20,000 files, most of which were old.

When we deleted 19,000 of them, the issue was resolved.

However, I think there is a bug in the forwarder here.
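Since the fix here was cutting down the number of monitored files, it can help to measure how many files a stanza actually picks up, and how many of them are stale, before deleting anything. The sketch below is a hypothetical helper, not a Splunk tool: it walks a directory recursively (roughly what a `...` path does), applies a whitelist regex to each full path as an unanchored search (an assumption about how Splunk applies `whitelist`), and counts files older than a cutoff. If old files must stay on disk, inputs.conf also has an `ignoreOlderThan` setting (for example `ignoreOlderThan = 7d`) that makes the monitor skip files by modification time; check the inputs.conf spec for your version before relying on it.

```python
import os
import re
import sys
import time

SECONDS_PER_DAY = 86_400


def count_candidate_files(root, whitelist, older_than_days):
    """Walk root recursively and count files whose full path matches `whitelist`.

    Returns (matching, stale), where `stale` counts matching files whose
    modification time is more than `older_than_days` days in the past.
    Applying the regex as an unanchored search is an assumption."""
    pattern = re.compile(whitelist)
    cutoff = time.time() - older_than_days * SECONDS_PER_DAY
    matching = stale = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if not pattern.search(path):
                continue
            try:
                mtime = os.path.getmtime(path)
            except OSError:
                continue  # file rotated away between listing and stat
            matching += 1
            if mtime < cutoff:
                stale += 1
    return matching, stale


if __name__ == "__main__" and len(sys.argv) == 4:
    # Hypothetical CLI: python count_files.py <root> <whitelist-regex> <days>
    total, old = count_candidate_files(sys.argv[1], sys.argv[2], float(sys.argv[3]))
    print(f"{total} matching files, {old} not modified in {sys.argv[3]} days")
```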

Thanks for your help on this

Robert


Can you post a sample of your inputs.conf file and a brief explanation of your exact data collection requirements?

I presume there is a lot of data that the UF is trying to forward, hence the huge overhead.

Also, which OS version are you running your UF on?


Hi

I am using Linux (Red Hat). We have one application folder, but there are multiple sourcetypes spread across the application, so I had to set up multiple stanzas to take in the different sourcetypes.

[monitor:///dell873srv/apps/UBS_QCST_SEC3/logs.../.log]

disabled = false

host = UBS-RC_QCST_MASTER

index = mlc_live

sourcetype = sun_jvm

crcSalt =

whitelist = .*gc..log$

blacklist=logs_|fixing_|tps-archives

[monitor:///dell873srv/apps/UBS_QCST_SEC3.../.tps]

disabled = false

host = UBS-RC_QCST_MASTER

index = mlc_live

sourcetype = tps

crcSalt =

whitelist = ..tps$

blacklist=logs_|fixing_|tps-archives

[monitor:///dell873srv/apps/UBS_QCST_SEC3/logs/monitoring/vmstat/.log]

disabled = false

host = UBS-RC_QCST_MASTER

index = mlc_live

sourcetype = vmstat-linux

crcSalt =

whitelist = vmstat..log$

blacklist=logs_|fixing_|tps-archives

[monitor:///dell873srv/apps/UBS_QCST_SEC3/logs/monitoring/nicstat/]

disabled = false

host = UBS-RC_QCST_MASTER

index = mlc_live

sourcetype = nicstat

crcSalt =

whitelist = nicstat..log$

blacklist=logs_|fixing_|tps-archives

[monitor:///dell873srv/apps/UBS_QCST_SEC3.../.log]

disabled = false

host = UBS-RC_QCST_MASTER

index = mlc_live

whitelist=mxtiming..log$

blacklist=logs_|fixing_|tps-archives|mxtiming_crv_nr.*

crcSalt =

sourcetype = MX_TIMING2

[monitor:///dell873srv/apps/UBS_QCST_SEC3.../service.log]

disabled = false

host = UBS-RC_QCST_MASTER

index = mlc_live

whitelist = (?\d)-\d*-service.log

blacklist=logs_|fixing_|tps-archives

crcSalt =

sourcetype = service

[monitor:///dell873srv/apps/UBS_QCST_SEC3/.log]

disabled = false

host = UBS-RC_QCST_MASTER

index = mlc_live

whitelist=mxtiming_crv_nr..log$

blacklist=logs_|fixing_|tps-archives

crcSalt =

sourcetype = MX_TIMING_RATE_CURVE

[monitor:///dell873srv/apps/UBS_QCST_SEC3/logs/traces/.log]

disabled = false

host = UBS-RC_QCST_MASTER

index = mlc_live

whitelist=mxtiming_crv_nr..log$

blacklist=logs_|fixing_|tps-archives

crcSalt =

sourcetype = MX_TIMING_RATE_CURVE
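With this many stanzas, one quick way to see which ones rely on the recursive `...` wildcard is to parse inputs.conf and filter the monitor stanzas. A sketch using Python's `configparser`, assuming the file is plain INI-style; configparser is not Splunk's own parser, so treat the result as an approximation. `recursive_monitor_stanzas` is a hypothetical helper name:

```python
import configparser


def recursive_monitor_stanzas(conf_text):
    """List (stanza, sourcetype) for [monitor://...] stanzas whose path contains '...'.

    Uses Python's INI parser as an approximation of Splunk's .conf format."""
    # No interpolation ('%' in regexes must stay literal); tolerate duplicates.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.optionxform = str  # keep key case as written (crcSalt, ...)
    parser.read_string(conf_text)
    return [
        (section, parser[section].get("sourcetype", ""))
        for section in parser.sections()
        if section.startswith("monitor://") and "..." in section
    ]
```

For example, `recursive_monitor_stanzas(open("inputs.conf").read())` lists the stanzas whose traversal scope is worth narrowing first.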


I believe folder traversal is the culprit.

Naive use of '...' causes CPU problems: splunkd ends up using 80 to 90% of the CPU on the forwarders, because traversing monitored folders to look for new log files is very CPU-expensive. I suggest you make each log path as specific as possible and use wildcards only where absolutely necessary.
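To get a feel for how much traversal a broad `...` path implies, you can count the directories beneath the root it starts from. A rough Python sketch; it does not model Splunk's own scanning logic, it only sizes the tree, and `directories_scanned` is a hypothetical name:

```python
import os
import sys


def directories_scanned(root):
    """Number of directories a recursive walk from root visits (root included).

    A monitor path that starts its '...' at a broad root has this whole tree
    to check for new files; a more specific root gives a smaller tree."""
    return sum(1 for _ in os.walk(root))


if __name__ == "__main__":
    # Compare, e.g., a broad application root with a specific log directory.
    for root in sys.argv[1:]:
        print(f"{root}: {directories_scanned(root)} directories under watch")
```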

For example, the current monitor statement:

[monitor://C:\Windows...LogFiles]

Replace it with:

[monitor://C:\WINDOWS\system32\LogFiles]

OR

[monitor://C:\WINDOWS\system32\LogFiles\.log]

Also, verify whether you have disabled THP (Transparent Huge Pages); refer to the Splunk documentation on THP.
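On Linux the current THP setting is the bracketed word in a sysfs file such as `always madvise [never]`. A small Python sketch to read it, assuming the usual sysfs location and, as a fallback, the path used by older RHEL 6 kernels; the helper names are hypothetical:

```python
import re

THP_PATHS = (
    "/sys/kernel/mm/transparent_hugepage/enabled",
    "/sys/kernel/mm/redhat_transparent_hugepage/enabled",  # assumed: older RHEL 6 kernels
)


def parse_thp_mode(text):
    """Return the active mode, i.e. the bracketed word in 'always madvise [never]'."""
    match = re.search(r"\[(\w+)\]", text)
    return match.group(1) if match else None


def current_thp_mode():
    """Read the THP setting from the first sysfs path that exists; None if none do."""
    for path in THP_PATHS:
        try:
            with open(path) as handle:
                return parse_thp_mode(handle.read())
        except OSError:
            continue
    return None


if __name__ == "__main__":
    print("THP enabled setting:", current_thp_mode())
```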

Also, please check limits.conf in $SPLUNK_HOME/etc/system/default/.

Look for the stanza:

[thruput]

maxKBps =

The default value here is 256. You might consider increasing it if this is the actual reason the data is piling up; you can set the value to 0, which means unlimited.
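To judge whether 256 KBps could be the bottleneck, it helps to convert it into a daily volume. A small arithmetic sketch, assuming KB means 1024 bytes: at 256 KBps a forwarder can send at most about 21 GiB per day, so a source producing more than that has to queue somewhere. If you do change the value, the override normally goes in a `local` directory (for example $SPLUNK_HOME/etc/system/local/limits.conf) rather than in `default`, which upgrades overwrite.

```python
SECONDS_PER_DAY = 86_400
KB_PER_GIB = 1024 ** 2  # assumes KB = 1024 bytes


def daily_cap_gib(max_kbps):
    """Most data (GiB/day) a forwarder can send under [thruput] maxKBps; None if 0 (unlimited)."""
    if max_kbps == 0:
        return None
    return max_kbps * SECONDS_PER_DAY / KB_PER_GIB


print(daily_cap_gib(256))  # 21.09375
```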

Check the limits.conf documentation for details.


Thanks for your answer.

1st: The CPU is fine; it is the memory that is the issue. It is difficult for me to reduce the use of '...', as in some cases I need it.

2nd: THP is off on the main Splunk install; however, it is on for some of the forwarder servers. Do you think this could be the issue?

3rd: This is set to 0 on both the server and the forwarder.

Cheers

Rob


Splunk suggests turning THP off, but certain applications running on the servers where the forwarders are installed have an underlying dependency on THP, so verify this before disabling it.

Can you check which files are consuming the most disk space and post them here?
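One way to produce that list is to walk the directory in question (for example the monitored application folder or $SPLUNK_HOME) and keep the biggest files. A generic Python sketch, not a Splunk tool; `largest_files` and its `count` default are arbitrary choices:

```python
import heapq
import os
import sys


def largest_files(root, count=10):
    """Return the `count` largest regular files under root as (size_bytes, path), biggest first."""
    sizes = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                if os.path.isfile(path) and not os.path.islink(path):
                    sizes.append((os.path.getsize(path), path))
            except OSError:
                continue  # file vanished or is unreadable (common in live log dirs)
    return heapq.nlargest(count, sizes)


if __name__ == "__main__" and len(sys.argv) > 1:
    for size, path in largest_files(sys.argv[1]):
        print(f"{size / 1024**2:10.1f} MiB  {path}")
```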

Also, are you seeing consistently high disk space utilization on the server, or is it just an occasional spike?


Never seen that before. Have you checked the queue sizes configured on that UF, and whether its queues are filling up due to trouble forwarding data (network issues, or much more data coming in than it is able to push out with the default 256 KBps thruput limit)?
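A back-of-the-envelope sketch of the second scenario: if data arrives faster than the thruput limit lets it leave, the surplus accumulates in the output queue until it is full. This assumes constant rates and an initially empty queue, which real traffic won't match; the rates below are hypothetical, and `seconds_until_queue_full` is an illustrative name.

```python
def seconds_until_queue_full(queue_kb, input_kbps, output_kbps):
    """Time until an output queue of queue_kb fills when input outpaces output.

    Assumes constant rates and an initially empty queue; returns None if the
    output rate keeps up, since the queue then never fills."""
    surplus_kbps = input_kbps - output_kbps
    if surplus_kbps <= 0:
        return None
    return queue_kb / surplus_kbps


# Hypothetical: 512 KBps arriving, 256 KBps (the thruput cap) leaving, 500MB queue.
print(seconds_until_queue_full(500 * 1024, 512, 256))  # 2000.0 seconds, about 33 minutes
```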


Hi

Thanks for the reply. I am not sure how to check the size of this; is it the setting below?

[tcpout:my_LB_indexers]

server=hp737srv:9997

maxQueueSize=500MB

Cheers

Rob


Yes, so that is set to a maximum of 500MB. Do you have useACK enabled? If so, that adds a wait queue of 1500MB (three times maxQueueSize).

That still doesn't directly explain 30GB of memory usage, but it might be worth checking the metrics.log of that UF to see whether the queue is actually filling up at all (the UF does not immediately reserve the full queue size if no queuing is happening). Are there any errors or warnings in splunkd.log on the UF?
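To check queue fill levels without eyeballing metrics.log, you can pull out the `group=queue` lines and compare `current_size_kb` with `max_size_kb`. A Python sketch, assuming lines shaped like `... Metrics - group=queue, name=parsingqueue, max_size_kb=512, current_size_kb=0, ...` with an optional `blocked=true`; verify that against your own metrics.log, since field names can vary by version and queue type. The helper names are hypothetical.

```python
import re
import sys

PAIR = re.compile(r"(\w+)=([^,\s]+)")  # key=value pairs in a metrics.log line


def queue_fill(line):
    """Parse one 'group=queue' line into (queue_name, percent_full, blocked).

    Returns None for lines that are not queue metrics or lack the size fields."""
    fields = dict(PAIR.findall(line))
    if fields.get("group") != "queue":
        return None
    try:
        max_kb = float(fields["max_size_kb"])
        current_kb = float(fields["current_size_kb"])
    except (KeyError, ValueError):
        return None
    percent = 100.0 * current_kb / max_kb if max_kb else 0.0
    return fields.get("name"), percent, fields.get("blocked") == "true"


def summarize(lines):
    """Peak fill percentage per queue, and whether it was ever reported blocked."""
    summary = {}
    for line in lines:
        parsed = queue_fill(line)
        if parsed is None:
            continue
        name, percent, blocked = parsed
        prev_pct, prev_blocked = summary.get(name, (0.0, False))
        summary[name] = (max(prev_pct, percent), prev_blocked or blocked)
    return summary


if __name__ == "__main__" and len(sys.argv) > 1:
    # Pass the UF's metrics.log, normally $SPLUNK_HOME/var/log/splunk/metrics.log.
    with open(sys.argv[1]) as handle:
        for name, (pct, blocked) in sorted(summarize(handle).items()):
            print(f"{name}: peak {pct:.1f}% full{' (blocked seen)' if blocked else ''}")
```

A queue that sits near 100% or shows `blocked=true` points at a forwarding bottleneck; queues that stay near empty make the queues an unlikely source of the memory growth.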


Hi,

Thanks for the reply.

I don't use useACK. I have checked all the logs and I can't see any major issues... hmm.

This is a large environment with a lot of activity; however, the maximum is 40GB, and it won't go over that.

I wonder if I can reduce the 40GB.

Cheers

Rob