- Why is one indexed field only giving me a multival...

Hello,

I am receiving cloud data from AWS via HEC in JSON format but I am having trouble getting the "timestamp" field to index properly. Here is a simplified sample JSON:

{

metric_name: UnHealthyHostCount

namespace: AWS/ApplicationELB

timestamp: 1678204380000

}

In order to index I created the following sourcetype which has been replicated to HF, IDX cluster, and SH:

[aws:sourcetype]

SHOULD_LINEMERGE = false

TRUNCATE = 8388608

TIME_PREFIX = \"timestamp\"\s*\:\s*\"

TIME_FORMAT = %s%3N

TZ = UTC

MAX_TIMESTAMP_LOOKAHEAD = 40

KV_MODE = json

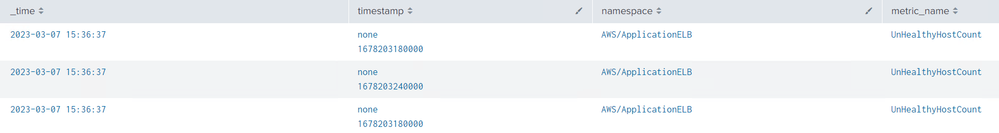

The event data gets indexed without issue, but I noticed that the "timestamp" field seems to be indexed as a multivalue containing the epoch as above, but also the value "none". I thought it had to do with indexed extractions, but it is the only field that displays this behaviour. Here is the table:

Any ideas on how to get the data indexed without the "none" value?

Thank you and best regards,

Andrew




Hi Andrew,

Firstly, from what you have shared so far, there is no reason to suspect that Splunk will be extracting the timestamp field separately. Can you make sure you've shared all of your relevant props.conf / transforms.conf entries and can you also please share an obfuscated sample of the entire JSON without removing any of the JSON syntax?

Secondly, are you sure that your search is not doing anything it shouldn't be? Can you share the search that you're using to generate this table?

And finally, your timestamp extraction is not working. Looking at:

TIME_PREFIX = \"timestamp\"\s*\:\s*\"

Are you sure you need that last \"? JSON numbers are typically not surrounded by quotes.
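To illustrate the point about the trailing quote, here is a minimal Python sketch that tests both prefixes against a sample event. It assumes the raw event is standard JSON with quoted keys and an unquoted numeric timestamp (the sample shown in the thread is the rendered view, so the exact raw text is an assumption), and it uses Python's `re` module as a stand-in for Splunk's regex engine, which should behave the same for patterns this simple.

```python
import re

# Assumed raw event: quoted keys, unquoted numeric timestamp.
raw_event = (
    '{"metric_name": "UnHealthyHostCount", '
    '"namespace": "AWS/ApplicationELB", '
    '"timestamp": 1678204380000}'
)

# TIME_PREFIX from the original sourcetype: requires a quote before the value.
original_prefix = r'\"timestamp\"\s*\:\s*\"'
# The same prefix without the trailing quote.
relaxed_prefix = r'\"timestamp\"\s*\:\s*'

original_match = re.search(original_prefix, raw_event)
relaxed_match = re.search(relaxed_prefix, raw_event)

# Whatever follows the prefix match is where timestamp parsing would begin.
text_after_prefix = raw_event[relaxed_match.end():] if relaxed_match else None

print("original prefix matched:", original_match is not None)
print("relaxed prefix matched:", relaxed_match is not None)
print("text after relaxed prefix:", text_after_prefix)
```

Under that assumption, the original prefix never matches, so timestamp parsing has nothing to anchor on, while the relaxed prefix ends right before the digits.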


@Tom_Lundie Hello Tom, thank you for the response.

You've hit the nail on the head: the badly associated _time is the problem I'm trying to solve. When I started to investigate, I noticed that the table produced a multivalue timestamp field where one value was "none". I assumed that was causing the problem, which is why I'm trying to figure out how to get rid of it.

As requested:

Entire JSON

{

account_id: 1234567

dimensions: {

LoadBalancer: app/ingress/9c6692fe5eb2b0b8

TargetGroup: targetgroup/report-89f6283838/917587a99b43effd

}

metric_name: UnHealthyHostCount

metric_stream_name: Cert

namespace: AWS/ApplicationELB

region: ca-central-1

timestamp: 1678214700000

unit: Count

value: {

count: 3

max: 0

min: 0

sum: 0

}

}

props.conf

Search

| table _time timestamp namespace metric_name


The _time field not extracting properly is a separate problem from the "none" value in your search. The _time extraction occurs independently of field extractions; it looks purely at the _raw event data.

Starting with the _time problem, can you try the following props? At a minimum, this needs to be set on the HF that is running the HEC collector.

[sourcetype]

TIME_PREFIX = "timestamp":\s*

TIME_FORMAT = %s%3N

TZ = UTC

MAX_TIMESTAMP_LOOKAHEAD = 40
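As a rough illustration of what these settings ask for, the Python sketch below finds the prefix, looks at most 40 characters past it, and reads a 13-digit epoch value as seconds plus milliseconds. This is only an approximation for reasoning about the config, not Splunk's actual timestamp processor; the function name `extract_event_time` and the quoted-key sample event are assumptions made for the example.

```python
import re
from datetime import datetime, timezone
from typing import Optional


def extract_event_time(raw: str, time_prefix: str, lookahead: int) -> Optional[datetime]:
    """Approximate TIME_PREFIX + MAX_TIMESTAMP_LOOKAHEAD + TIME_FORMAT=%s%3N."""
    prefix_match = re.search(time_prefix, raw)
    if prefix_match is None:
        return None  # no anchor, so no timestamp can be read from the event
    # Only the characters right after the prefix are considered.
    window = raw[prefix_match.end():prefix_match.end() + lookahead]
    # %s%3N here: 10 digits of epoch seconds followed by 3 digits of milliseconds.
    digits = re.match(r"(\d{10})(\d{3})", window)
    if digits is None:
        return None
    seconds, millis = int(digits.group(1)), int(digits.group(2))
    # TZ = UTC: interpret the epoch value in UTC.
    base = datetime.fromtimestamp(seconds, tz=timezone.utc)
    return base.replace(microsecond=millis * 1000)


# Assumed raw event with quoted keys and an unquoted number.
raw_event = '{"namespace": "AWS/ApplicationELB", "timestamp": 1678204380000}'

event_time = extract_event_time(raw_event, r'"timestamp":\s*', 40)
print(event_time.isoformat())  # 2023-03-07T15:53:00+00:00
```

With the original prefix, which expects a quote before the digits, the same function returns `None` for this sample event.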

As for the "none" mvfield issue, there must be a field extraction running somewhere that's extracting the timestamp field, as well as your JSON field extraction.

Let's start by working out if timestamp is an indexed field. We can check the tsidx file using the following search:

| tstats values(timestamp) where index=my_index sourcetype=my_sourcetype source=my_source by index

If that search returns a values(timestamp) field, then your timestamp field is being extracted at index time, and you need to focus your debugging efforts on the HF running the HEC collector. There, you're looking for TRANSFORMS-xxxx entries in props.conf, including any that point to INGEST_EVAL transforms in transforms.conf. If that search returns nothing, then this is a search-time field extraction and you need to focus on the SH. There, you're looking for EVAL-xxxx, REPORT-xxxx or FIELDALIAS-xxxx.

/opt/splunk/bin/splunk btool props list my_sourcetype

Look through the output of this command. Are any of the field extractions mentioned above present?

If not, maybe this is a source- or host-based extraction. Look through the output of the following commands for a reference to your data (note that this could be a regex-based stanza, so there is no perfect way to search through here).

/opt/splunk/bin/splunk btool props list source::

/opt/splunk/bin/splunk btool props list host::
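Scanning btool output by eye gets tedious, so here is a small Python sketch that sorts the setting names mentioned above into index-time and search-time groups. It assumes plain `btool props list` output (without `--debug`, which prefixes each line with a file path), and the sample output, transform name, and alias are hypothetical, made up purely for the example.

```python
from typing import Dict, List

# Setting-name prefixes taken from the discussion above.
INDEX_TIME_PREFIXES = ("TRANSFORMS-",)
SEARCH_TIME_PREFIXES = ("EVAL-", "REPORT-", "FIELDALIAS-")


def classify_props_settings(btool_output: str) -> Dict[str, List[str]]:
    """Group props.conf lines by the phase in which they create fields."""
    found: Dict[str, List[str]] = {"index_time": [], "search_time": []}
    for line in btool_output.splitlines():
        # The setting name is everything before the first "=".
        setting = line.split("=", 1)[0].strip()
        if setting.startswith(INDEX_TIME_PREFIXES):
            found["index_time"].append(line.strip())
        elif setting.startswith(SEARCH_TIME_PREFIXES):
            found["search_time"].append(line.strip())
    return found


# Hypothetical btool output, for illustration only.
sample_output = """\
[my_sourcetype]
KV_MODE = json
TIME_FORMAT = %s%3N
TRANSFORMS-set_timestamp = hypothetical_timestamp_transform
FIELDALIAS-ts = timestamp AS event_timestamp
"""

result = classify_props_settings(sample_output)
for phase, lines in result.items():
    print(phase, lines)
```

Any hit in the index-time group points at the HF, and any hit in the search-time group points at the SH, matching the split described above.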


@Tom_Lundie Thank you again for the response, I appreciate you taking the time.

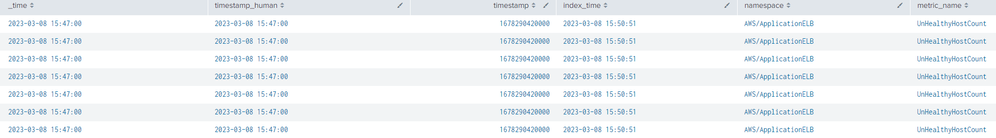

I updated the sourcetype, deployed it, both problems solved: timestamp is no longer multivalue with "none" value and _time is associated correctly to the epochms value in the payload!

Before making the change I ran the tstats search you provided and it returned value "none". After I made the change and ran it again the value was null. Then I tabled the results as before and the multivalue is no longer there (I also added timestamp_human and index_time to provide additional understanding):

Thank you so much for the suggestions. Accepting your response as the answer, specifically the sourcetype.

Best regards,

Andrew


@andrewtrobec you're very welcome, glad to hear this helped.

Interesting, there must've been a rogue index_time field extraction that caused this. Starting afresh with a new sourcetype makes sense.


MAX_TIMESTAMP_LOOKAHEAD also seems way too low at 40 bytes. And you should not need backslashes to escape quotes.
