Re: Events are not breaking/extracted properly

Hello,

I set up a few UF-based data inputs, and Splunk is receiving events from them, but I have some issues with event breaking.

I have 2 types of files: 1) text files with a header and pipe delimiters, 2) XML files

In the case of the text files, the header info is showing up within the Splunk events, and the events are not breaking as expected at all; in most cases, one Splunk event contains more than one source event.

In the case of the XML files, everything within one source file is treated as a single Splunk event, but it should be split into multiple events based on the XML tag.

Any thoughts/recommendations to resolve these issues would be highly appreciated. Thank you!

props/input configuration files and source files are given below:

For Text Files:

props

[ds:audit]

SHOULD_LINEMERGE=false

LINE_BREAKER=([\r\n]+)

HEADERFIELD_LINE_NUMBER=1

INDEXED_EXTRACTIONS=psv

TIME_FORMAT=%Y-%m-%dT%H:%M:%S.%Q%z

TIMESTAMP_FIELDS=TimeStamp

inputs

[monitor:///opt/audit/DS/DS_EVENTS*.txt]

sourcetype=ds:audit

index=ds_test

sample

serID|UserType|System|EventType|EventId|Subject|SessionID|SrcAddr|EventStatus|ErrorMsg|TimeStamp|Additional Application Data |Device

p22bb4r|TEST|DS|USER| VIEW_NODE |ELEMENT<843006481>|131e9d5b-e84e-567d-a6b1-775f58993f68|null|00||2022-06-14T09:01:55.001+0000||NA

p22bbs1|TEST|DS|USER| FULL_SEARCH |ELEMENT<843006481>|121e7d5b-f84e-467d-a6b1-775f58993f68|null|00||2021-06-14T09:01:50.001+0000||NA

p22bbw3|TEST|DS|USER| FULL_SEARCH | ELEMENT< 343982854>|5b8fb22e-eeed-4802-8b07-8559dbfe1e45|null|00||2021-06-14T08:54:08.054+0000||NA

ts70sbr4|TEST|DS|USER|VIEW_NODE| ELEMENT< 35382854>|5b8fb22e-eeed-4802-8b07-8559dbfe1e45|null|00||2021-06-14T08:54:16.054+0000||NA

ts70sbd3|TEST|DS|USER|FULL_SEARCH|ELEMENT<933982854>|5b8fb22e-eeed-4802-8b07-8559dbfe1e45|null|00||2021-06-14T08:53:54.053+0000||NA

For XML Files:

[secops:audit]

SHOULD_LINEMERGE=false

LINE_BREAKER=([\r\n]*)<MODTRANSL>

TIME_PREFIX=<TIMESTAMP>

TIME_FORMAT=%Y%m%d%H%M%S

MAX_TIMESTAMP_LOOKAHEAD=14

TRUNCATE=2500

Input

[monitor:///opt/app/secops/logs/audit_secops_log*.XML]

sourcetype=secops:audit

index=secops_test

Sample Data

<?xml version="x.1" encoding="UTF-8"?><DSDATA><MODTRANSL><TIMESTAMP>20190621121321</TIMESTAMP><USERID>d23bsrb</USERID><USERTYPE>SECOPS</USERTYPE><SYSTEM>DS</SYSTEM><EVENTTYPE>ADMIN</EVENTTYPE><EVENTID>SYS</EVENTID><ID>0300001</ID><SRCADDR>10.210.135.108</SRCADDR><RETURNCODE>00</RETURNCODE><VARDATA> Initiated New Entity Status: AP</VARDATA></MODTRANSL><MODTRANSL><TIMESTAMP>20190621121416</TIMESTAMP><USERID> d23bsrb </USERID><USERTYPE>SECOPS</USERTYPE><SYSTEM>DSI</SYSTEM><EVENTTYPE>ADMIN</EVENTTYPE><EVENTID>SYS</EVENTID><ID>000000000</ID><SRCADDR>10.210.135.120</SRCADDR><RETURNCODE>00</RETURNCODE><VARDATA> Entity Status: Approved New Entity Status: TI</VARDATA></MODTRANSL><MODTRANSL><TIMESTAMP>20190621121809</TIMESTAMP><USERID>sj45yrs</USERID><USERTYPE>SECOPS</USERTYPE><SYSTEM>DSI</SYSTEM><EVENTTYPE>ADMIN</EVENTTYPE><EVENTID>DS_OPD</EVENTID><ID>2192345</ID><SRCADDR>10.212.25.19</SRCADDR><RETURNCODE>00</RETURNCODE><VARDATA> 43ded7433b314eb58d2307e9bc536bd3</VARDATA > <DURATION>124</DURATION> </MODTRANSL</DSDATA>

Your PSV definition looks mostly OK. But if it doesn't work, there must surely be something wrong with it 😉

But about your XML source settings: if you put those into props.conf on the UF, they won't work. You need them on the indexers/HFs (whichever is the first "heavy" layer you're hitting). And with this LINE_BREAKER you won't get proper XML. You'd need to break the events using a non-capturing group so that the XML tag you're breaking at is not consumed.

Hello,

Thank you so much for your response, I truly appreciate it. I was just wondering whether there are any alternative solutions to resolve these issues. Any additional/alternative recommendations would be highly appreciated. Thank you again.

Hi @SplunkDash

1. For the text files, you appear to have a typo; the Splunk docs show it should be

HEADER_FIELD_LINE_NUMBER = <integer>

Also, the docs show the INDEXED_EXTRACTIONS value capitalized, e.g.

INDEXED_EXTRACTIONS = PSV

Not 100% sure capitalization would make a difference, but it's worth a go.

As INDEXED_EXTRACTIONS is used, the props.conf can live on either the UF or HF.

2. Assuming you do not want the <?xml version=...> part then the event break config would be.

LINE_BREAKER = ([\r\n>]*.+)<DSDATA>

Note, I kept the <DSDATA> tag to keep the XML valid with the closing </DSDATA> tag.

If you did want to get rid of the DSDATA fields too then this should work...

LINE_BREAKER = ((?:</DSDATA>)?[\r\n>]*.+<DSDATA>|</DSDATA>)

Note the OR statement (|</DSDATA>) is to try and strip the last entry in an event that may not have a newline or carriage return. It will result in an empty event.

If it was me, I think I'd keep the DSDATA field in the LINE_BREAKER and then use SEDCMD to strip <.?DSDATA> from the events.

For the XML extraction the props.conf file must live on the HF, or indexer, depending on your setup.

Restarts may be needed once configs are updated, depending on how you deploy this.
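
As a rough illustration of the SEDCMD idea above, the Python sketch below applies the same `<.?DSDATA>` pattern with `re.sub`. It only models the substitution, not Splunk's parsing pipeline; the `strip_dsdata` helper and the shortened sample event are hypothetical and exist purely for illustration.

```python
import re

# Same pattern suggested for SEDCMD: "<", one optional character, "DSDATA>".
# The optional character lets it match both <DSDATA> and </DSDATA>.
DSDATA_TAG = re.compile(r"<.?DSDATA>")


def strip_dsdata(event):
    """Remove the opening and closing DSDATA wrapper tags from one event."""
    return DSDATA_TAG.sub("", event)


# Hypothetical, shortened event based on the sample data in the question.
event = (
    "<DSDATA><MODTRANSL><TIMESTAMP>20190621121321</TIMESTAMP>"
    "<USERID>d23bsrb</USERID></MODTRANSL></DSDATA>"
)
print(strip_dsdata(event))
```

Because the pattern allows any single character before `DSDATA`, it is slightly broader than strictly needed; that matches the suggestion as written in the post.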

Hope it helps

Hello,

Thank you for your quick response. For the XML file, <MODTRANSL> should be the event breaking point, so the sample XML file I provided should produce 3 events. Do you think

LINE_BREAKER = ([\r\n>]*.+)<DSDATA>

is going to work for that? Thank you again.

Hi @SplunkDash

OK, that is clearer, and it can be done. Give this a try in the heavy forwarder's props.conf file.

LINE_BREAKER = ([\r\n]*<\?xml.+<DSDATA>|</MODTRANSL>|</DSDATA>|[\n\r])

# to make XML valid again, re-append the </MODTRANSL> tag stripped in line breaking capture group

SEDCMD-re-append-trailing-tag =s/$/<\/MODTRANSL>/

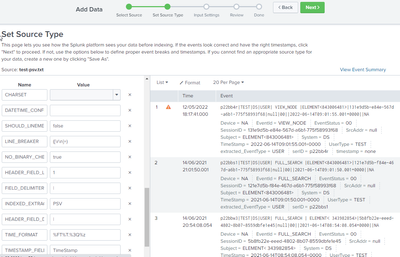

A good way to play with this is with a test file uploaded via the Settings > Add Data wizard.
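
For anyone who wants to reason about the LINE_BREAKER and SEDCMD above before uploading a test file, here is a small Python sketch of the idea. It is a simplified model and not Splunk's implementation: it assumes that the text matched by the first capturing group is discarded, that whatever precedes it ends one event, and that whatever follows it starts the next. The `break_events` and `reappend_tag` helpers and the shortened sample are hypothetical, and Python's `re` may differ from Splunk's regex engine in edge cases.

```python
import re

# LINE_BREAKER from the post above; the whole pattern is the first capturing group.
LINE_BREAKER = r"([\r\n]*<\?xml.+<DSDATA>|</MODTRANSL>|</DSDATA>|[\n\r])"


def break_events(raw, line_breaker):
    """Simplified model: group 1 is thrown away, the text before it closes
    one event and the text after it opens the next."""
    events, pos = [], 0
    for match in re.finditer(line_breaker, raw):
        events.append(raw[pos:match.start(1)])
        pos = match.end(1)
    events.append(raw[pos:])
    # Drop the empty fragments left around the header and the closing tag.
    return [e for e in events if e.strip()]


def reappend_tag(event):
    """Mimic the SEDCMD above by appending the closing tag that was stripped."""
    return event + "</MODTRANSL>"


# Hypothetical, shortened version of the sample file from the question.
raw = (
    '<?xml version="x.1" encoding="UTF-8"?><DSDATA>'
    "<MODTRANSL><TIMESTAMP>20190621121321</TIMESTAMP><USERID>d23bsrb</USERID></MODTRANSL>"
    "<MODTRANSL><TIMESTAMP>20190621121416</TIMESTAMP><USERID>d23bsrb</USERID></MODTRANSL>"
    "<MODTRANSL><TIMESTAMP>20190621121809</TIMESTAMP><USERID>sj45yrs</USERID></MODTRANSL>"
    "</DSDATA>"
)

events = [reappend_tag(e) for e in break_events(raw, LINE_BREAKER)]
for e in events:
    print(e)
```

In this model the XML declaration, the `<DSDATA>` wrapper and each `</MODTRANSL>` are consumed by the capture group, which is why the closing tag has to be put back afterwards.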

Hello @yeahnah,

Thank you so much, truly appreciated....it's working for me.

Is there any recommendation for the issues with text files? Anything will help. Thank you so much again.

Hi @SplunkDash

I've tried the following on a Splunk v8.0.5 instance and it worked OK for me.

[ __auto__learned__ ]

NO_BINARY_CHECK=true

SHOULD_LINEMERGE=false

LINE_BREAKER=([\r\n]+)

INDEXED_EXTRACTIONS=PSV

HEADER_FIELD_LINE_NUMBER=1

HEADER_FIELD_DELIMITER=|

FIELD_DELIMITER=|

TIMESTAMP_FIELDS=TimeStamp

TIME_FORMAT=%FT%T.%3Q%z

And yes, it is strange that I need to specify FIELD_DELIMITER=|, as you would think that specifying PSV implies this anyway. Might be a bug.

Note, as mentioned before, this config should work on either a UF or an HF, though generally INDEXED_EXTRACTIONS are defined on the UF, which means the structured data is parsed at the source by the UF. No further modifications can be made to this forwarded data, by a Splunk HF/IDX at least, once it is parsed like this.

If this solves your problem then please mark this as answered.
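
To sanity-check what the header, delimiter and timestamp settings above are meant to achieve, the Python sketch below parses the sample lines from the question in a similar spirit. It is only an approximation, not how Splunk does it: the `parse_psv` helper is hypothetical, `PY_TIME_FORMAT` is my assumed Python equivalent of `%FT%T.%3Q%z`, and stripping whitespace around names and values is a choice of this sketch.

```python
from datetime import datetime

# Assumed Python counterpart of the Splunk TIME_FORMAT %FT%T.%3Q%z.
PY_TIME_FORMAT = "%Y-%m-%dT%H:%M:%S.%f%z"


def parse_psv(lines):
    """Treat the first line as the header and map each later line to a dict."""
    header = [name.strip() for name in lines[0].split("|")]
    events = []
    for line in lines[1:]:
        values = [value.strip() for value in line.split("|")]
        event = dict(zip(header, values))
        # Use the TimeStamp column as the event time, as TIMESTAMP_FIELDS does.
        event["_time"] = datetime.strptime(event["TimeStamp"], PY_TIME_FORMAT)
        events.append(event)
    return events


# Header and first data line copied from the sample in the question.
lines = [
    "serID|UserType|System|EventType|EventId|Subject|SessionID|SrcAddr|EventStatus|ErrorMsg|TimeStamp|Additional Application Data |Device",
    "p22bb4r|TEST|DS|USER| VIEW_NODE |ELEMENT<843006481>|131e9d5b-e84e-567d-a6b1-775f58993f68|null|00||2022-06-14T09:01:55.001+0000||NA",
]

events = parse_psv(lines)
print(events[0]["EventId"], events[0]["_time"].isoformat())
```

If the header and data lines do not have the same number of pipe-separated fields, `zip` silently drops the extras, so a field-count check is worth adding when testing against real files.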