- Why a complete 9997 traffic still fails?

I have a UF that's configured to forward to a healthy intermediate HF (9997). The UF is producing "forcibly closed" errors, but the HF is healthy and is accepting TCP 9997 from other UFs.

What could be the reason for this? Troubleshooting attempts made:

1. Confirming with network team that rules are in place.

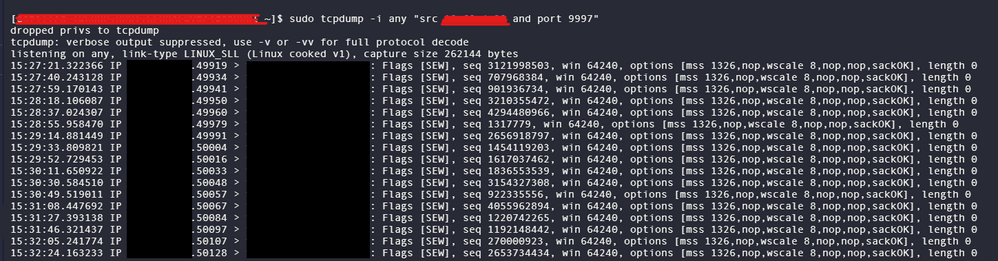

2. TCP Dump from the dest (HF), packets received.

3. Telnet from UF to dest (9997), telnet completes.

Any other things I missed?

Found one piece of evidence that the problem is the network. At least I finally have proof that the network team has to fix it.

Basically, I searched the network traffic logs for multiple srcs in the same subnet going to the HF on port 9997 and displayed bytes_in. The one UF that I have a problem with has bytes_in=0, while the rest have bytes_in comparable to bytes_out.

SPL:

sourcetype=pan:traffic src=10.68.x.x/16 dest=10.68.p.q dest_port=9997

| stats sparkline(sum(bytes_out)) as bytes_out sparkline(sum(bytes_in)) as bytes_in sum(bytes_in) as total_bytes_return by src dest dest_port

This SPL returns hundreds of rows. When I sort by total_bytes_return, the UF in question shows a flat line for bytes_in and 0 for total_bytes_return.
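For anyone who wants to run the same check outside Splunk, here is a minimal Python sketch of that aggregation. It assumes the traffic logs have already been exported as rows with `src`, `bytes_out` and `bytes_in` fields (named after the search above); the function name and the sample rows are made up for illustration and are not real firewall data.

```python
from collections import defaultdict


def one_way_sources(rows):
    """Return sources that sent bytes but never received any back."""
    totals = defaultdict(lambda: {"bytes_out": 0, "bytes_in": 0})
    for row in rows:
        totals[row["src"]]["bytes_out"] += int(row["bytes_out"])
        totals[row["src"]]["bytes_in"] += int(row["bytes_in"])
    # A source with outbound bytes and zero return bytes is the suspicious case.
    return sorted(
        src
        for src, t in totals.items()
        if t["bytes_out"] > 0 and t["bytes_in"] == 0
    )


# Hypothetical sample rows, for illustration only.
sample = [
    {"src": "10.68.10.10", "bytes_out": 5200, "bytes_in": 4100},
    {"src": "10.68.10.16", "bytes_out": 240, "bytes_in": 0},
    {"src": "10.68.10.16", "bytes_out": 180, "bytes_in": 0},
]
print(one_way_sources(sample))
```

With these sample rows, only the source that never gets any bytes back is listed.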

I can sleep now and hand this over to the network team.

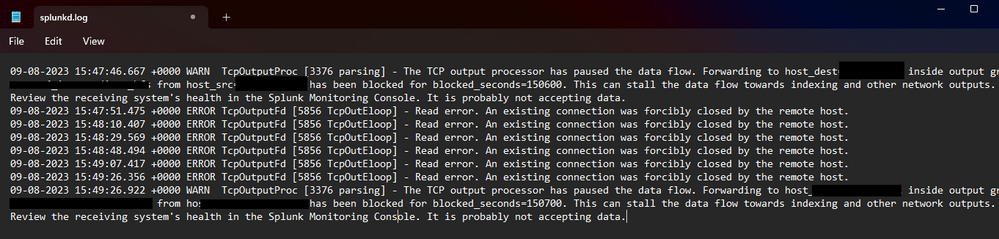

The splunkd.log is from the UF - my bad for erroneously writing "HF's splunkd.log" in the caption.

The UF can't complete the 9997 connection to the HF, even though all the evidence at the network level says it should:

- 9997 is allowed

- Firewall logs show traffic is allowed

- Other UFs in the same IP subnet can connect on 9997 with no problem (e.g. all UFs: 10.68.0.0/16, dest HF: 10.68.2.2:9997)

Why can other UFs, e.g. 10.68.10.10, 11, 12, 13, 14, 15 and many more ---> 10.68.2.2:9997 == OK

but this particular one 10.68.10.16 ---> 10.68.2.2:9997 == results in "An existing connection was forcibly closed by the remote host." and "The TCP output processor has paused the data flow. Forwarding to host_dest=10.68.2.2"

That's strange, because the tcpdump seemed to contain just SYN packets, whereas "existing connection was forcibly closed" applies to... well, an existing, already established connection.

Unfortunately, it's hard to say what's going on in the network without access to said network. I've seen so many different strange cases in my life. The most annoying so far was when the connection would get reset in the middle, and _both_ sides would get RST packets. The customer insisted that nothing was filtering the traffic. After some more pestering, it turned out that there was an IPS which didn't like the certificate and was issuing RSTs to both ends of the connection.

So there can be many different reasons for this.

Compare the packet dumps from both sides - maybe that will tell you something.
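A completed telnet only proves the handshake, so it can also help to check whether an established connection survives. Below is a rough Python sketch of that idea, not a Splunk tool: the `probe` function and its `hold_seconds` parameter are my own names. It does not speak the forwarder protocol; it only connects, sends nothing, and reports whether the peer keeps the connection open, closes it, or resets it. Run it from the problem UF and from a working UF and compare the results, since I can't say for certain how a healthy receiver treats an idle connection.

```python
import socket


def probe(host, port, hold_seconds=5.0):
    """Connect, then wait to see whether the peer keeps the connection open."""
    try:
        sock = socket.create_connection((host, port), timeout=hold_seconds)
    except OSError as exc:
        return f"connect failed: {exc}"
    with sock:
        sock.settimeout(hold_seconds)
        try:
            data = sock.recv(1)
        except socket.timeout:
            # Nothing arrived and nothing broke: the connection is still up.
            return "still open"
        except ConnectionResetError:
            # This is what Windows reports as "forcibly closed by the remote host".
            return "reset by peer"
        except OSError as exc:
            return f"error: {exc}"
        # An empty read means the peer closed the connection cleanly.
        return "closed by peer" if data == b"" else "peer sent data"


# Example call (address taken from this thread):
# print(probe("10.68.2.2", 9997))
```

If the problem UF gets "reset by peer" where a working UF gets "still open", something between the two hosts is most likely tearing down the established connection.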

OK. So far you're showing us that your HF receives SYN packets from the UF (at least that's what I assume, because the IPs are filtered out). We don't see whether the HF responds to them.

The second log shows the HF having problems pushing events downstream (you're showing the output-side logs, not the inputs).

There is more to this than meets the eye.