What can be done with an indexer in a "hung" state?

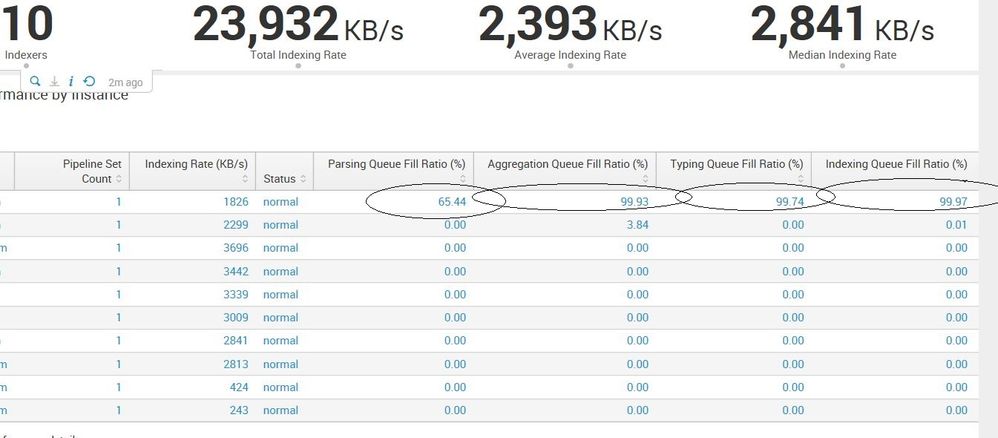

We keep hitting a situation where one of our ten indexers ends up in a "hung" state. All the large queues stay full for hours on end, and even a restart doesn't always clear it: I bounced it earlier today, and within an hour or two it was back in the same state. What can be done?

Looks like a storage issue or low IOPS on the 1st indexer: when the indexing queue is full, the queues ahead of it back up and fill as well.
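
To check whether the indexing queue really is the one backing everything up, a rough sketch like the one below can read the `group=queue` lines from `metrics.log` and report the most downstream queue that is near capacity. It assumes the usual `name=`, `max_size_kb=` and `current_size_kb=` fields and the parsing, aggregation, typing, index queue order; the `PIPELINE` list, the 90% threshold and the function names are illustrative choices, not anything Splunk ships.

```python
import re

# key=value pairs as they appear in metrics.log, e.g. "name=indexqueue,"
_KV = re.compile(r"(\w+)=([^,\s]+)")

# Assumed upstream -> downstream order of the main indexer queues.
PIPELINE = ["parsingqueue", "aggqueue", "typingqueue", "indexqueue"]


def queue_fill(line):
    """Return (queue_name, fill_percent) for a group=queue line, else None."""
    fields = dict(_KV.findall(line))
    if fields.get("group") != "queue":
        return None
    try:
        max_kb = float(fields["max_size_kb"])
        cur_kb = float(fields["current_size_kb"])
    except (KeyError, ValueError):
        return None
    if max_kb <= 0:
        return None
    return fields.get("name", "?"), 100.0 * cur_kb / max_kb


def likely_bottleneck(lines, threshold=90.0):
    """Return the most downstream queue at or above threshold, or None.

    Only the latest reading per queue is kept. A full downstream queue
    blocks everything upstream of it, so the last full queue in PIPELINE
    is the first place to look.
    """
    latest = {}
    for line in lines:
        parsed = queue_fill(line)
        if parsed:
            latest[parsed[0]] = parsed[1]
    full = [q for q in PIPELINE if latest.get(q, 0.0) >= threshold]
    return full[-1] if full else None
```

Feeding it the lines of `$SPLUNK_HOME/var/log/splunk/metrics.log` from the affected indexer should be enough. If it returns `indexqueue`, that is consistent with slow disk writes; if it returns an earlier queue, the problem is more likely in parsing or typing than in storage.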

Right, I'm removing this indexer from the outputs.conf that applies to most of our forwarders...
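
For anyone scripting that change, here is a minimal sketch of dropping one indexer from the comma-separated `server` value of a `[tcpout:<group>]` stanza in outputs.conf. The host names and the `drop_indexer` helper are made up for illustration; it only rewrites the string and does not touch any file or restart anything.

```python
def drop_indexer(server_value, host):
    """Remove every host:port entry for `host` from a `server = ...` value.

    `server_value` is the right-hand side of the setting, for example
    "idx01:9997, idx02:9997". Entries are compared on the host part only.
    """
    entries = [e.strip() for e in server_value.split(",") if e.strip()]
    kept = [e for e in entries if e.rsplit(":", 1)[0] != host]
    return ", ".join(kept)


# Hypothetical host names, for illustration only.
print(drop_indexer("idx01:9997, idx02:9997, idx03:9997", "idx02"))
```

The forwarders still need to pick up the edited outputs.conf (for example through a deployment server push) before they stop sending to that indexer.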

Well, I'd first remove it from only the few forwarders that send the most data to this indexer. In parallel, I'd check search activity on this indexer from the search head. If a lot of search jobs are running against it, they use up IOPS reading data, and that may be what is degrading write performance....
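
To pick which forwarders to move first, something like the sketch below can total the data each forwarder sent, based on the `group=tcpin_connections` lines in the indexer's `metrics.log`. It assumes those lines carry `hostname=` (or `sourceIp=`) and `kb=` fields; check a few lines on your version before relying on it. `top_forwarders` is just an illustrative name.

```python
import re
from collections import defaultdict

# key=value pairs as they appear in metrics.log
_KV = re.compile(r"(\w+)=([^,\s]+)")


def top_forwarders(lines, n=5):
    """Return the n forwarders with the highest summed kb, largest first."""
    totals = defaultdict(float)
    for line in lines:
        fields = dict(_KV.findall(line))
        if fields.get("group") != "tcpin_connections":
            continue
        # Fall back to the source IP if no hostname field is present.
        host = fields.get("hostname") or fields.get("sourceIp")
        try:
            kb = float(fields["kb"])
        except (KeyError, ValueError):
            continue
        if host:
            totals[host] += kb
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)[:n]
```

The hosts at the top of that list are the candidates to repoint away from the struggling indexer while you look at search load.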

Ended up bouncing it once all the queues were at 100%, and before a VM crash ;-)