Using Splunk as an enterprise data platform

Splunk has to fit into a structured and unstructured world without duplicating effort. Splunk currently serves as a standard log collection mechanism, but I would like to see Splunk support feeding additional third-party solutions.

To this end, I would like to know if the following is supported or if anyone has tried these options:

1) Roll coldToFrozen AND warmToCold buckets into Hadoop and keep a copy indexed in Splunk. This data would need to be searchable via Splunk Enterprise and Hadoop utilities like Pig or Hive.

2) I would like Splunk, in the form of a UF or an HEC, to collect data, and I would like the data to be routable to Kafka in addition to Splunk Enterprise. I was thinking of having an HF layer, which already exists at my company, route to Fluentd via Splunk's TCP or UDP out. Fluentd could then route to Kafka.

The other option is to replace the UF or HEC with Fluentd. Fluentd has community-developed plug-ins to send to Kafka, HDFS, and Splunk Enterprise. I have not tested this solution.

Any thoughts from anyone?
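To make the relay idea in option 2 concrete, here is a minimal Python sketch of what an intermediate tier has to do with a raw TCP stream: reassemble events that arrive split across reads, then copy each one to every destination. It assumes the forwarder sends uncooked, newline-delimited events, which is an assumption and not something confirmed in this thread. `split_events`, `fan_out`, and the sink callables are hypothetical names standing in for real Kafka or Splunk writers.

```python
from typing import Callable, Iterable, List, Tuple

# Hypothetical stand-in for a real writer (Kafka producer, Splunk client, ...).
Sink = Callable[[bytes], None]


def split_events(buffer: bytes) -> Tuple[List[bytes], bytes]:
    """Split a receive buffer into complete newline-terminated events.

    Returns the complete events plus the trailing partial event, which the
    caller must keep and prepend to the next chunk read from the socket.
    """
    *complete, remainder = buffer.split(b"\n")
    # Drop empty entries produced by blank lines.
    return [event for event in complete if event], remainder


def fan_out(chunks: Iterable[bytes], sinks: List[Sink]) -> None:
    """Reassemble events from raw chunks and copy each event to every sink."""
    pending = b""
    for chunk in chunks:
        events, pending = split_events(pending + chunk)
        for event in events:
            for sink in sinks:
                sink(event)
    if pending:
        # Flush a final event that arrived without a trailing newline.
        for sink in sinks:
            sink(pending)
```

Passing `list.append` methods as sinks is enough to try it out: feeding the chunks `b"event one\nevent "` and `b"two\n"` delivers `b"event one"` and `b"event two"` to each sink. In practice Fluentd (or a similar relay) handles this buffering for you; the sketch only shows why a TCP hop needs it.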


Hi Lisaac,

For number 2, Fluentd offers a great alternative to HEC, as it allows you to bifurcate, copy, process, transform, and parse the data at the node level and then send it to the specific data store you need. For example, you can take all warning, error, and critical priority Syslog messages and send those to Splunk, while sending everything (the lower-priority messages plus the warning, error, and critical ones) to HDFS, Amazon S3, etc. This allows you to reduce the volume that hits the Splunk indexers.
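The routing rule described above can be sketched in a few lines of Python. This is only an illustration of the logic, not Fluentd configuration: the destination labels `"archive"` and `"splunk"` and the function names are hypothetical, and the set of severities sent to Splunk is taken literally from the example (warning, error, critical). The severity is read from the syslog PRI field, where severity is the PRI value modulo 8.

```python
import re
from typing import List

# Syslog severity names indexed by severity code (PRI value modulo 8).
SEVERITY_NAMES = ["emerg", "alert", "crit", "err", "warning", "notice", "info", "debug"]

# Severities that also go to Splunk; taken from the example above, adjust as needed.
SPLUNK_SEVERITIES = {"crit", "err", "warning"}

# A syslog message starts with "<PRI>", where PRI = facility * 8 + severity.
PRI_PATTERN = re.compile(r"^<(\d{1,3})>")


def severity_of(message: str) -> str:
    """Return the severity name of a syslog message, or "unknown" without a PRI."""
    match = PRI_PATTERN.match(message)
    if match is None:
        return "unknown"
    return SEVERITY_NAMES[int(match.group(1)) % 8]


def destinations(message: str) -> List[str]:
    """Every message is archived; only selected severities also reach Splunk."""
    targets = ["archive"]  # hypothetical label for HDFS, Amazon S3, etc.
    if severity_of(message) in SPLUNK_SEVERITIES:
        targets.append("splunk")  # hypothetical label for the Splunk output
    return targets
```

For instance, a message starting with `<34>` (facility 4, severity 2, critical) is routed to both destinations, while one starting with `<14>` (severity 6, informational) goes to the archive only.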

Additionally, Fluentd offers an enterprise version with SLA-based support for each of the outputs you mentioned (Splunk Enterprise, HDFS, Kafka, Amazon S3). If you are interested, email me at a@treasuredata dot com. More information can be found at https://fluentd.treasuredata.com

Thanks,

Anurag


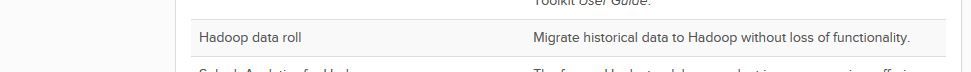

Yes, Splunk supports sending data to Hadoop and keeping it searchable. The product was formerly called Splunk Hunk but is now a premium option in Splunk 6.5 (Hadoop Data Roll). The structured data piece is covered by using the internal MongoDB system (KV Store), a CSV file collection, and/or an external database connected using the DBConnect app.

In terms of sending Splunk data to alternative destinations, this is indeed possible, as you can set up a variety of output stanzas in either the UF or HF to do this (for instance, in my environment, we send data from our UFs/HFs to both Splunk and our QRadar instance). Note that some outputs can only be done from an HF (usually when the output is built on the Splunk Python libraries).


See this previous Answers posting about sending Splunk data to Kafka.


About -

-- 1) Roll coldToFrozen AND warmToCold buckets into Hadoop and keep a copy indexed in Splunk. This data would need to be searchable via Splunk Enterprise and Hadoop utilities like Pig or Hive.

Welcome to Splunk Enterprise 6.5

Says -

So, in 6.5 there is a clean integration between Splunk/Hunk and Hadoop.