Hello,

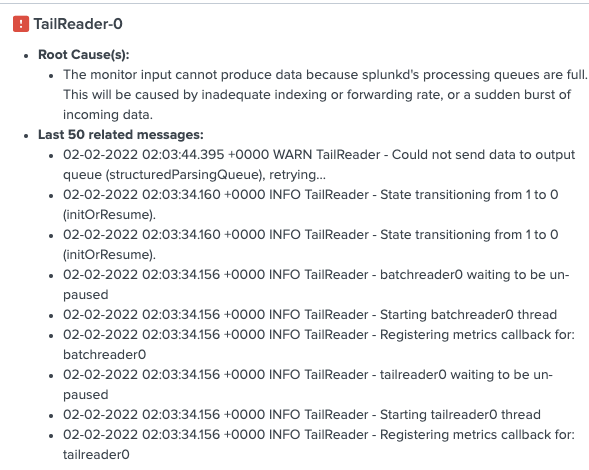

I recently restarted my Splunk Enterprise instance to add an app, and once it was back up, I noticed that one of the health checks was failing.

Also, no new logs were showing up in search.

I looked at the Monitoring Console and noticed that the parsing queue was full.

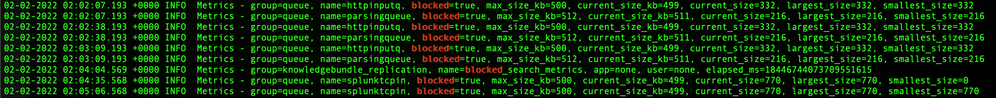

I also checked metrics.log and saw that some of the queues were full.
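For anyone checking metrics.log by hand, here is a minimal Python sketch that pulls the `group=queue` lines apart and flags queues that are blocked or nearly full. It assumes the usual comma-separated `key=value` layout of metrics.log; the sample lines, the `threshold` value, and the function names are made up for illustration.

```python
import re

# Matches "key=value" pairs; a value ends at a comma or whitespace.
KV_RE = re.compile(r"(\w+)=([^,\s]+)")

def parse_queue_metrics(line):
    """Return the fields of a group=queue line as a dict, or None for other lines."""
    fields = dict(KV_RE.findall(line))
    if fields.get("group") != "queue":
        return None
    return fields

def full_queues(lines, threshold=0.95):
    """Names of queues that report blocked=true or are at least `threshold` full."""
    result = []
    for line in lines:
        fields = parse_queue_metrics(line)
        if fields is None or "max_size_kb" not in fields:
            continue
        max_kb = float(fields["max_size_kb"])
        cur_kb = float(fields.get("current_size_kb", 0))
        fill = cur_kb / max_kb if max_kb else 0.0
        name = fields.get("name", "?")
        if (fields.get("blocked") == "true" or fill >= threshold) and name not in result:
            result.append(name)
    return result

# Hypothetical sample lines in the assumed metrics.log format.
sample = [
    "01-20-2022 10:15:01.123 +0000 INFO  Metrics - group=queue, name=parsingqueue, blocked=true, max_size_kb=512, current_size_kb=511, current_size=1024",
    "01-20-2022 10:15:01.123 +0000 INFO  Metrics - group=queue, name=indexqueue, max_size_kb=500, current_size_kb=0, current_size=0",
]
print(full_queues(sample))  # ['parsingqueue']
```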

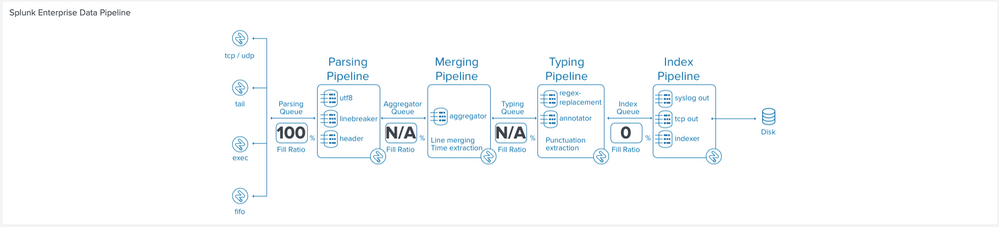

If I'm understanding the data pipeline hierarchy correctly, it's the parsing queue that's actually blocked and causing the other queues to be blocked.
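The reasoning here can be sketched in a few lines: when one stage stalls, its input queue fills first and then everything upstream backs up, so the most downstream full queue points at the stage that is actually stuck. This sketch assumes the usual parsing, aggregation, typing, indexing order and the lowercase queue names seen in metrics.log; the fill ratios in the usage lines are hypothetical.

```python
# Assumed queue order, upstream to downstream.
PIPELINE_ORDER = ["parsingqueue", "aggqueue", "typingqueue", "indexqueue"]

def likely_bottleneck(fill, threshold=0.95):
    """Return the most downstream queue whose fill ratio is >= threshold, or None.

    `fill` maps queue name -> current_size / max_size (0.0 to 1.0).
    The stage that drains the returned queue is the likely culprit.
    """
    full = [q for q in PIPELINE_ORDER if fill.get(q, 0.0) >= threshold]
    return full[-1] if full else None

# Only the parsing queue is full: look at the parsing stage itself.
print(likely_bottleneck({"parsingqueue": 1.0, "aggqueue": 0.1}))  # parsingqueue
# Everything is full: the problem is at the end (indexing or output).
print(likely_bottleneck({q: 1.0 for q in PIPELINE_ORDER}))        # indexqueue
```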

I also checked splunkd.log and didn't really see anything that seemed related. There were some SSL errors, which didn't seem related, and this other error:

```
ERROR HttpInputDataHandler - Failed processing http input, token name=kube, channel=n/a, source_IP=172.17.8.66, reply=9, events_processed=4, http_input_body_size=7256, parsing_err="Server is busy"
```

But that seems to be a result of the full queue.

I checked my resource usage in the Monitoring Console and with `top`, and neither CPU nor memory goes above 50% utilization.

I also restarted Splunk multiple times, but the queue always goes to 100% instantly. I did notice a warning on startup:

```
Bad regex value: '(::)?...', of param: props.conf / [(::)?...]; why: this regex is likely to apply to all data and may break summary indexing, among other Splunk features.
```

However, I didn't make any changes to props.conf, and everything was working before I restarted the first time, so I assume this is not related.
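To see why Splunk complains about that stanza, here is a quick check of the pattern exactly as printed in the warning. This uses Python's `re` module as a stand-in for Splunk's own regex engine, and it assumes the `...` is the literal pattern rather than truncation in the log message; the sample values are made up.

```python
import re

# Pattern exactly as printed in the startup warning (assumed not truncated).
pattern = re.compile(r"(::)?...")

# "(::)?" is optional and "..." is any three characters,
# so practically every sourcetype, source, or host value matches.
samples = ["kube", "access_combined", "syslog", "::abc"]
for s in samples:
    print(s, bool(pattern.match(s)))  # True for every sample

print(bool(pattern.match("ab")))      # False: only values shorter than 3 chars escape
```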

Not sure what else to try. Any help would be greatly appreciated!

Hi

You said that you just installed a new app there? Probably it contains something that broke the data pipeline.

You should start with the following documents to understand what can cause this issue:

- https://docs.splunk.com/Documentation/Splunk/8.2.4/Deploy/Datapipeline

- https://conf.splunk.com/files/2019/slides/FN1570.pdf

- https://wiki.splunk.com/Community:HowIndexingWorks

- https://docs.splunk.com/Documentation/Splunk/8.2.4/DMC/IndexingDeployment

- https://community.splunk.com/t5/Monitoring-Splunk/What-can-be-done-when-the-parsing-and-aggregation-...

As the parsing queue is full, I would start by looking at the actions that happen in the parsing pipeline:

---8<---

Parsing

During the parsing segment, Splunk software examines, analyzes, and transforms the data. This is also known as event processing. It is during this phase that Splunk software breaks the data stream into individual events. The parsing phase has many sub-phases:

- Breaking the stream of data into individual lines.

- Identifying, parsing, and setting timestamps.

- Annotating individual events with metadata copied from the source-wide keys.

- Transforming event data and metadata according to regex transform rules.

---8<---
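As a rough mental model, the four sub-phases quoted above can be sketched as a toy Python pipeline. This is not how Splunk implements them (internally the work is spread over several queues and processors); the input text, the source-wide keys, and the rewrite rule are all hypothetical. The point it shows is that every regex rule runs against every event, which is why expensive transforms on a busy sourcetype can stall the whole stage.

```python
import re
from datetime import datetime

# Hypothetical raw chunk from one source.
RAW = "2022-01-20 10:15:01 GET /index.html 200\n2022-01-20 10:15:02 GET /missing 404\n"

def break_lines(raw):
    # Sub-phase 1: break the stream into individual lines.
    return [ln for ln in raw.split("\n") if ln]

def set_timestamp(line):
    # Sub-phase 2: identify and parse a timestamp (fixed format assumed here).
    return datetime.strptime(line[:19], "%Y-%m-%d %H:%M:%S")

def annotate(line, ts, source_keys):
    # Sub-phase 3: copy the source-wide keys onto each event.
    return {"_raw": line, "_time": ts, **source_keys}

def transform(event, rules):
    # Sub-phase 4: apply regex rewrite rules; each rule runs on every event.
    for pattern, repl in rules:
        event["_raw"] = re.sub(pattern, repl, event["_raw"])
    return event

def parse(raw, source_keys, rules):
    return [transform(annotate(ln, set_timestamp(ln), source_keys), rules)
            for ln in break_lines(raw)]

events = parse(RAW, {"host": "web01", "sourcetype": "app"}, [(r" 404$", " NOT_FOUND")])
print(events[1]["_raw"])  # 2022-01-20 10:15:02 GET /missing NOT_FOUND
```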

There is an unofficial trick for using wildcards in props.conf: just put `(?::){0}` at the beginning of your stanza name, e.g. a sourcetype stanza. Perhaps someone remembered this wrongly and put those characters in the wrong order?
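In plain regex terms, `(?::){0}` is a non-capturing group containing `:`, repeated zero times, so it consumes nothing and leaves the rest of the pattern unchanged. The check below uses Python's `re` as a stand-in for Splunk's regex engine, with a hypothetical `kube.*` pattern; why props.conf treats such a prefixed stanza as a pattern is part of the unofficial trick and is not shown here.

```python
import re

trick = re.compile(r"(?::){0}kube.*")  # hypothetical stanza pattern with the prefix
plain = re.compile(r"kube.*")          # same pattern without the prefix

# The prefix is zero-width: both patterns accept exactly the same values.
for value in ["kube", "kube:container", "syslog", ":kube"]:
    print(value, bool(trick.fullmatch(value)), bool(plain.fullmatch(value)))

print(re.fullmatch(r"(?::){0}", "") is not None)  # True: it matches only the empty string
```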

r. Ismo

Just to close the loop on this: as I was going through the logs, I noticed that the app I recently installed was still enabled, even though I could have sworn I had disabled it. I had disabled the app and restarted using the web interface. I'm not sure if that matters, but when I did it through the CLI, everything started working again.

Thanks for all your help!

A bottleneck in the pipeline (e.g. the parsing queue) will cause the upstream buffers to max out. You can:

- Increase the size limit of your queue buffer, which gives your CPU more time to process backlogs.

- Throw more hardware at it, e.g. offload to another heavy forwarder.

- Optimize the regexes in your parsing pipeline (props.conf, transforms.conf), starting with your largest sourcetypes.
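A bit of arithmetic shows what a bigger queue actually buys: if data arrives faster than the stage drains it, the queue still fills, just later. The sketch below is a hypothetical helper, and the rates and sizes in the usage lines are made-up numbers, not Splunk defaults.

```python
def seconds_until_full(max_size_kb, current_kb, in_kb_s, out_kb_s):
    """Seconds until a queue fills, or None if it drains at least as fast as it fills."""
    net = in_kb_s - out_kb_s
    if net <= 0:
        return None  # queue never fills at these rates
    return (max_size_kb - current_kb) / net

# Hypothetical rates: 1000 KB/s arriving, 900 KB/s being processed.
print(seconds_until_full(512, 0, 1000, 900))        # 5.12
print(seconds_until_full(10 * 1024, 0, 1000, 900))  # 102.4
print(seconds_until_full(512, 0, 800, 900))         # None
```

A larger queue absorbs short bursts, but under a sustained imbalance it only delays the block, which is why the other two options (more processing capacity, cheaper regexes) address the root cause.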

Here's a query that calculates the average size, peak size, and maximum size limit of each queue. You can use it to determine which queue needs to be increased.

```
index=_internal source=*metrics.log group=queue host=<splunk_host> max_size_kb=* earliest=-1d
| stats max(max_size_kb) AS max_limit_kb max(current_size_kb) AS peak_size_kb avg(current_size_kb) AS avg_size_kb by name
| eval avg_size_kb=ROUND(avg_size_kb, 2)
```
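If `_internal` isn't searchable (for example because the pipeline itself is blocked), the same aggregation can be done offline over values pulled from metrics.log. This is a hypothetical Python equivalent of the `stats ... by name` query, assuming the records have already been parsed into dicts; the sample numbers are invented.

```python
from collections import defaultdict

def queue_summary(records):
    """Per-queue max limit, peak size, and average size, mirroring the SPL stats."""
    grouped = defaultdict(list)
    for r in records:
        grouped[r["name"]].append(r)
    summary = {}
    for name, rows in grouped.items():
        sizes = [r["current_size_kb"] for r in rows]
        summary[name] = {
            "max_limit_kb": max(r["max_size_kb"] for r in rows),
            "peak_size_kb": max(sizes),
            "avg_size_kb": round(sum(sizes) / len(sizes), 2),
        }
    return summary

# Hypothetical parsed metrics.log records.
records = [
    {"name": "parsingqueue", "max_size_kb": 512, "current_size_kb": 511},
    {"name": "parsingqueue", "max_size_kb": 512, "current_size_kb": 512},
    {"name": "parsingqueue", "max_size_kb": 512, "current_size_kb": 100},
    {"name": "indexqueue", "max_size_kb": 500, "current_size_kb": 0},
    {"name": "indexqueue", "max_size_kb": 500, "current_size_kb": 3},
]
print(queue_summary(records)["parsingqueue"])
# {'max_limit_kb': 512, 'peak_size_kb': 512, 'avg_size_kb': 374.33}
```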

Thanks for the response. Do you know which properties I should set? I went through the limits.conf documentation and couldn't figure out which properties relate to the queue sizes.

Also, I'm not able to see any logs in the _internal index. I'm assuming that's caused by the blockage in the data pipeline.
