Need to Split the events before parsing into Splunk

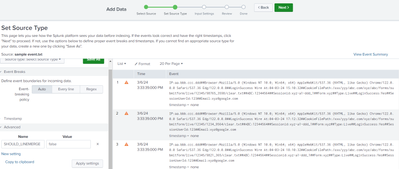

The lines below are arriving as a single event instead of as separate events, so we want to split them. Each event starts with the IP field and ends with the Email field; whatever follows the Email field should begin the next event.

IP:aa.bbb.ccc.ddd##Browser:Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36 Edg/122.0.0.0##LoginSuccess Wire At:04-03-24 15:10:32##CookieFilePath:/xxx/yyy/abc.com/xyz/abc/forms/submitform/live/12345/98765_3598/clear.txt##ABC:12344564##Sessionid:xyz-a1-ddd_1##Form:xyz##Type:Live##LoginSuccess:Yes##SessionUserId:123##Email:xyz@google.com

IP:aa.bbb.ccc.ddd##Browser:Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36 Edg/122.0.0.0##LoginSuccess Wire At:04-03-24 17:12:32##CookieFilePath:/xxx/yyy/abc.com/xyz/abc/forms/submitform/live/12345/1234_9564/clear.txt##ABC:12344564##Sessionid:xyz-a1-ddd_1##Form:xyz##Type:Live##LoginSuccess:Yes##SessionUserId:123##Email:xyz@google.com

IP:aa.bbb.ccc.ddd##Browser:Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36 Edg/122.0.0.0##LoginSuccess Wire At:04-03-24 18:10:32##CookieFilePath:/xxx/yyy/abc.com/xyz/abc/forms/submitform/live/12345/9821_365/clear.txt##ABC:12344564##Sessionid:xyz-a1-ddd_1##Form:xyz##Type:Live##LoginSuccess:Yes##SessionUserId:123##Email:xyz@google.com

IP:aa.bbb.ccc.ddd##Browser:Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36 Edg/122.0.0.0##LoginSuccess Wire At:04-03-24 20:10:32##CookieFilePath:/xxx/yyy/abc.com/xyz/abc/forms/submitform/live/12345/222_123/clear.txt##ABC:12344564##Sessionid:xyz-a1-ddd_1##Form:xyz##Type:Live##LoginSuccess:Yes##SessionUserId:123##Email:xyz@google.com

So kindly let me know how we can get them split into separate events.

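Here are the settings for props.conf. Splunk .conf files do not support a trailing comment on the same line as a setting (the comment text becomes part of the value), so the explanations are listed after the settings:

SHOULD_LINEMERGE=false

LINE_BREAKER=([\r\n]+)IP

NO_BINARY_CHECK=true

TIME_FORMAT=%d-%m-%y %T

TIME_PREFIX=At:

MAX_TIMESTAMP_LOOKAHEAD=20

- SHOULD_LINEMERGE: should always be false.
- LINE_BREAKER: breaks on newlines that are followed by IP (this works if every event starts with IP).
- TIME_FORMAT: sets the time format, read here as day-month-year with a zero-padded day.
- TIME_PREFIX: use the time found after "At:".
- MAX_TIMESTAMP_LOOKAHEAD: do not search further than needed for the time.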
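To see what these settings are meant to do, the following Python sketch imitates the line breaking and timestamp lookup outside of Splunk. It is only an approximation: Splunk uses PCRE and its own timestamp processor, the two records are shortened, made-up versions of the sample data, and the function names `split_events` and `event_time` are hypothetical helpers, not Splunk APIs.

```python
import re
from datetime import datetime

# Two shortened, made-up records in the same shape as the sample data.
raw = (
    "IP:aa.bbb.ccc.ddd##LoginSuccess Wire At:04-03-24 15:10:32##Email:xyz@google.com\n"
    "IP:aa.bbb.ccc.ddd##LoginSuccess Wire At:04-03-24 17:12:32##Email:xyz@google.com\n"
)

# Same pattern as the LINE_BREAKER setting above.
LINE_BREAKER = re.compile(r"([\r\n]+)IP")


def split_events(text):
    """Split text the way LINE_BREAKER is documented to behave:
    the first capture group is discarded, and whatever follows it
    (here the literal "IP") starts the next event."""
    events, start = [], 0
    for match in LINE_BREAKER.finditer(text):
        events.append(text[start:match.start(1)])
        start = match.end(1)
    tail = text[start:].strip("\r\n")
    if tail:
        events.append(tail)
    return events


def event_time(event, prefix="At:", lookahead=20):
    """Mimic TIME_PREFIX and MAX_TIMESTAMP_LOOKAHEAD: look only at the
    first `lookahead` characters after the prefix.
    Assumes day-month-year order, as TIME_FORMAT above does."""
    pos = event.find(prefix)
    if pos == -1:
        return None
    window = event[pos + len(prefix): pos + len(prefix) + lookahead]
    match = re.match(r"\d{2}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}", window)
    if match is None:
        return None
    return datetime.strptime(match.group(0), "%d-%m-%y %H:%M:%S")


events = split_events(raw)
for event in events:
    print(event_time(event), event[:20])
```

If the date in your logs is actually month-day-year, the format string would need its first two fields swapped, both here and in TIME_FORMAT.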

@anandhalagaras1 You can apply these settings on your Heavy Forwarders (HFs), if you have any.

@gcusello Yes, I have updated props.conf on the UF of the server, but it didn't work. I don't have access to the indexers, since our search heads are hosted in Splunk Cloud and managed by Splunk Support.

So what should I do if I need to apply the settings to the indexers directly?

Hi,

here is the new MASA diagram, where you can see where to put those settings and on which server: https://splunk-usergroups.slack.com/files/U0483CQG4/F06PKREDNLW/masa.pdf?origin_team=T047WPASC&origi...

r. Ismo

Hi @anandhalagaras1,

you have to associate SHOULD_LINEMERGE = false with the sourcetype of your data, both on the UFs and on the Splunk Cloud Search Heads.

Ciao.

Giuseppe

@gcusello As previously stated, I implemented the setting SHOULD_LINEMERGE = false on the Splunk Cloud SH, which resolved the issue. However, the logs also contain HTML events, and each line of those is now treated as an individual event, which makes it difficult to extract the desired fields. Could you please advise on how we can address this?