
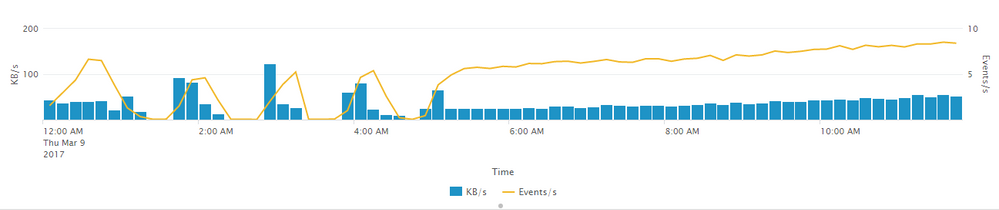

My forwarder's outgoing data rate is lagging. How can I resolve this so that the forwarder sends data continuously?

The forwarder is not sending data in real time; there is some lag, as shown in the screenshot. Can anyone help me fix it?

At 1:10 AM the rate in KBps was nearly 0, even though the source had data, which was transferred later.


Have you looked at the logs on the forwarder? Do you see an issue that stands out? Examples:

- Problems connecting to the indexer

- Blocked queues (search metrics.log for "block")

- Errors like this: INFO TailReader - File descriptor cache is full (100), trimming...
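Besides searching metrics.log from Splunk Web, the file can be scanned directly on the forwarder. Below is a minimal Python sketch, assuming the default path `$SPLUNK_HOME/var/log/splunk/metrics.log` (with `/opt/splunkforwarder` as a fallback for `SPLUNK_HOME`) and that queue lines carry comma-separated `group=queue`, `name=...` and `blocked=true` fields. `blocked_queues` is a hypothetical helper for illustration, not a Splunk tool.

```python
import os
import re
from collections import Counter
from typing import Iterable

# Matches key=value pairs such as "name=parsingqueue" or "blocked=true".
_KV = re.compile(r"(\w+)=([^,\s]+)")


def blocked_queues(lines: Iterable[str]) -> Counter:
    """Count metrics.log queue lines reporting blocked=true, per queue name.

    Assumes (not verified against every Splunk version) lines shaped like:
    ... INFO  Metrics - group=queue, name=parsingqueue, blocked=true, ...
    """
    counts: Counter = Counter()
    for line in lines:
        fields = dict(_KV.findall(line))
        if fields.get("group") == "queue" and fields.get("blocked") == "true":
            counts[fields.get("name", "unknown")] += 1
    return counts


def main() -> None:
    # Assumed default forwarder location; override via the SPLUNK_HOME variable.
    home = os.environ.get("SPLUNK_HOME", "/opt/splunkforwarder")
    path = os.path.join(home, "var", "log", "splunk", "metrics.log")
    if not os.path.exists(path):
        print(f"metrics.log not found at {path}")
        return
    with open(path, encoding="utf-8", errors="replace") as fh:
        for name, n in blocked_queues(fh).most_common():
            print(f"{name}: blocked in {n} sample(s)")


if __name__ == "__main__":
    main()
```

A queue name that shows up repeatedly points at the stage where data is backing up; an empty result matches a search for "block" returning nothing.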

How big is the log you are trying to ingest? Does it grow quite large over a certain time span? Is there latency between event time and index time? If you run a search like this, what latency do you see?

`index=index_of_relevant_forwarder_data | eval time=_time | eval itime=_indextime | eval latency=(itime - time) | stats count, avg(latency), min(latency), max(latency) by source`
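The search computes latency per event as `_indextime - _time` and then summarizes it per source. The same arithmetic is shown below as a small Python sketch, assuming the events have already been exported as `(source, _time, _indextime)` tuples in epoch seconds. `latency_by_source` and `LatencyStats` are hypothetical names used only for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass
class LatencyStats:
    count: int
    avg: float
    minimum: float
    maximum: float


def latency_by_source(
    events: Iterable[Tuple[str, float, float]],
) -> Dict[str, LatencyStats]:
    """Mirror of: eval latency=(itime - time) | stats count, avg, min, max by source."""
    per_source: Dict[str, List[float]] = defaultdict(list)
    for source, event_time, index_time in events:
        # Seconds between when the event happened and when it was indexed.
        per_source[source].append(index_time - event_time)
    return {
        src: LatencyStats(len(v), sum(v) / len(v), min(v), max(v))
        for src, v in per_source.items()
    }
```

A large `maximum` with a small `avg` suggests bursts the forwarder only catches up on later, while a consistently large `avg` points to sustained throughput shortfall.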

There are just quite a number of variables here.

Sr. Technical Support Engineer


Hi @Anonymous:

Have you looked at the logs on the forwarder? Do you see an issue that stands out? Examples:

Problems connecting to Indexer

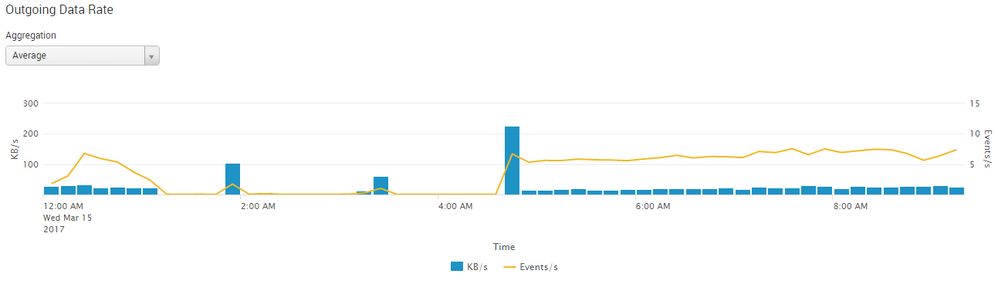

-> I got multiple timeouts, for example:

`03-15-2017 03:54:51.117 -0400 INFO TcpOutputProc - Ping connection to idx=destinationip:port timed out. continuing connections`

Do you know how I can resolve this?
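One way to rule out a basic network problem behind the `Ping connection ... timed out` messages is to check whether a plain TCP connection to the indexer's receiving port succeeds from the forwarder host. Below is a minimal Python sketch; `can_reach_indexer` is a hypothetical helper, and the host and port are whatever `destinationip:port` stands for in the forwarder's outputs.conf. A successful connect only shows the port is reachable; it does not rule out intermittent slowness.

```python
import socket


def can_reach_indexer(host: str, port: int, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts (socket.timeout is an OSError
        # subclass) and name-resolution failures (socket.gaierror).
        return False
```

Running it repeatedly around the times the timeouts are logged can help separate a network or firewall issue from an indexer that is too busy to respond.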

Blocked queues (search metrics.log for "block")

-> I didn't find any events containing "block" in metrics.log.

Errors like this: INFO TailReader - File descriptor cache is full (100), trimming...

-> I didn't find any events with the above message.

How big is the log you are trying to ingest, does it get quite large over a certain time span? Is the event time and index time showing latency? If you run a search like this, what is the latency like?

`index=index_of_relevant_forwarder_data | eval time=_time | eval itime=_indextime | eval latency=(itime - time) | stats count, avg(latency), min(latency), max(latency) by source`

-> I am continuously monitoring log files that roll over every day, and the logs are really huge.

I used the above query, which was really helpful. For one particular source, the maximum latency was 9814 seconds, a delay of about 2 hours and 43 minutes.
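Because `_time` and `_indextime` are epoch timestamps in seconds, `max(latency)` is also in seconds. A tiny Python helper (hypothetical, for illustration only) confirms the conversion: 9814 seconds is 2 hours, 43 minutes and 34 seconds.

```python
from typing import Tuple


def split_seconds(total: int) -> Tuple[int, int, int]:
    """Split a latency in seconds into (hours, minutes, seconds)."""
    hours, rem = divmod(total, 3600)
    minutes, seconds = divmod(rem, 60)
    return hours, minutes, seconds
```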

Can we reduce this latency?

There are just quite a number of variables here.


One thing I would suggest, if you are trying to ingest/forward a lot of data, is to configure the following in limits.conf:

## limits.conf ##

[thruput]

maxKBps = 0

Spec File: http://docs.splunk.com/Documentation/Splunk/latest/Admin/Limitsconf#.5Bthruput.5D

`maxKBps = <integer>`

* If specified and not zero, this limits the speed through the thruput processor in the ingestion pipeline to the specified rate in kilobytes per second.
* To control the CPU load while indexing, use this to throttle the number of events this indexer processes to the rate (in KBps) you specify.
* Note that this limit will be applied per ingestion pipeline. For more information about multiple ingestion pipelines see parallelIngestionPipelines in the server.conf.spec file.
* With N parallel ingestion pipelines the thruput limit across all of the ingestion pipelines will be N * maxKBps.

On a forwarder, maxKBps is set to a default of 256. Setting it to 0 (no limit) often alleviates the problem you are reporting.
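To see why a low limit can produce hours of lag with large, daily-rolling logs, it helps to work out the ceiling the limit imposes. Below is a rough Python sketch under simplifying assumptions: the thruput limit is the only bottleneck, 1 GiB = 1024 × 1024 KB, and the total limit is N * maxKBps per the spec. The function names are hypothetical. At 256 KBps a single pipeline can forward at most about 21.1 GiB per day, and 512 KBps doubles that to about 42.2 GiB. Whenever the monitored files grow faster than the limit, the forwarder falls behind the files it is reading, and that shows up as latency between `_time` and `_indextime`.

```python
SECONDS_PER_DAY = 86_400
KB_PER_GIB = 1024 * 1024


def daily_ceiling_gib(max_kbps: float, pipelines: int = 1) -> float:
    """Most data per day the thruput limit allows, in GiB.

    maxKBps applies per ingestion pipeline, so N pipelines allow N * maxKBps.
    A value of 0 means no limit is applied.
    """
    if max_kbps == 0:
        return float("inf")
    return max_kbps * pipelines * SECONDS_PER_DAY / KB_PER_GIB


def catch_up_seconds(backlog_kb: float, max_kbps: float, incoming_kbps: float) -> float:
    """Time to drain a backlog while new data keeps arriving (simplified model).

    Assumes the thruput limit is the only bottleneck. Returns infinity when
    data arrives at least as fast as the limit allows, i.e. lag keeps growing.
    """
    if max_kbps == 0:
        raise ValueError("max_kbps=0 means unlimited; this model needs a nonzero limit")
    spare = max_kbps - incoming_kbps
    if spare <= 0:
        return float("inf")
    return backlog_kb / spare
```

The second function illustrates why lag can persist long after a burst: with only a little spare capacity above the incoming rate, even a moderate backlog takes a long time to drain.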

Sr. Technical Support Engineer


I currently have maxKBps set to 512. Are you suggesting I set it to 256 or to 0?


I am recommending that it be set to 0:

[thruput]

maxKBps = 0

Sr. Technical Support Engineer