Indexes/Source Types Best Practice - Data Onboarding

New customer seeking guidance on creating indexes/sourcetypes and determining granularity. Primarily we're looking for deeper guidance on the why, more so than the what. We have a large, complex environment.

Our naming scheme for indexes thus far is: organization_category_purpose (e.g. acme_net_fw)

- organization - unique to us, required, primarily used to segment data between organizations.

- category - broad, like network, application, endpoint, etc

- purpose - more specific, largely unique per category
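A three-part scheme like this can be checked mechanically before an index is created. The following is only a minimal sketch: `build_index_name` is a hypothetical helper, and the rule that each part is limited to lowercase letters and digits is an assumption made here so the underscore stays an unambiguous separator. It also assumes the commonly documented Splunk constraints (lowercase letters, digits, underscores and hyphens only, no leading underscore or hyphen, no "kvstore" in the name).

```python
import re

# Assumption: each part is lowercase letters/digits only, so "_" is
# reserved as the separator between organization, category and purpose.
_PART = re.compile(r"^[a-z0-9]+$")


def build_index_name(organization: str, category: str, purpose: str) -> str:
    """Join the three parts into organization_category_purpose, validating each."""
    parts = [organization, category, purpose]
    for part in parts:
        if not _PART.match(part):
            raise ValueError(f"invalid name part: {part!r}")
    name = "_".join(parts)
    # Assumed Splunk restriction: index names must not contain "kvstore".
    if "kvstore" in name:
        raise ValueError("index names must not contain 'kvstore'")
    return name


print(build_index_name("acme", "net", "fw"))
```

Rejecting uppercase or punctuation early keeps the names consistent across documentation and searches, whatever the final granularity turns out to be.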

Does the following seem best practice, for firewalls?

- 2 or 3 indexes used by firewalls (traffic, operations, maybe threats?)

- Multiple sourcetypes split into the various indexes

We are looking at SC4S as a guide (https://splunk.github.io/splunk-connect-for-syslog/main/sources/vendor/PaloaltoNetworks/panos/) although their examples are not always consistent.

We are struggling to determine how granular to be with the purpose of the index and with the number of possible sourcetypes we can/will have. We do not have the need to specify sensitivity or retention time. Furthermore, we do not have the need to separate security/infrastructure teams.

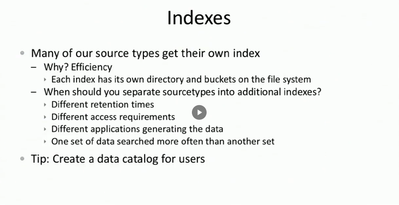

This slide from a Splunk presentation suggests that many sourcetypes get their own index for efficiency:

Questions

- With 4-5 separate firewall products in use in one organization (the most complex), we're looking at 20-25 unique sourcetypes distributed into around 3 firewall indexes, just for firewalls. Does this sound correct?

- We want to avoid unnecessary complexity for future searches, documentation, etc while not destroying our efficiency.

- Can anyone speak to their experiences with creating too many/too few indexes? Specifically on long-term organization, search efficiency, overall experience?

- Can anyone offer any additional real-world guidance on creating a data catalog?

- We can't see any reason to split up Windows event logs for endpoints (security/application, etc) but could see security being separate from the others for DCs. Does that sound correct?

Any resources or guidance appreciated.

Here's what we're using so far:

- SC4S example structure: https://splunk.github.io/splunk-connect-for-syslog/main/sources/vendor/PaloaltoNetworks/panos/

- https://lantern.splunk.com/Splunk_Success_Framework/Data_Management/Naming_conventions

- https://subscription.packtpub.com/book/data/9781789531091/5/ch05lvl1sec32/best-practices-for-adminis...

- https://kinneygroup.com/blog/the-proverbial-8-ball-splunk-implementation/

Thank you, @PickleRick, for such a detailed answer. You truly are an Ultra Champion as your forum title suggests and it's answers like yours which make a community great.

Please correct me if wrong. Other than the 2 rules of thumb, you are suggesting that only in advanced cases (which we may never encounter) should we be concerned about splitting up data into multiple indexes, in cases "with hugely different cardinalities or activity levels". This is recommended for efficiency/performance reasons, or...? Performance is our primary consideration when considering multiple indexes.

For example, would firewall logs with >99% traffic and the remainder threat/operational/etc be a good use case for multiple indexes (assuming recommended architecture/resources)? I know we will want dashboards, alerts, reports, etc that ONLY cover the threat logs. We are planning on having CIM compliant data and using acceleration where possible. I'm sure the Splunk Data Administration classes will likely help...

Thoughts or input welcome on efficiency considerations for firewall logs being split into multiple indexes as described above. Thank you!

I wouldn't go as far as to say that you do this or that "only" in such cases. Those are general good practices, which doesn't mean that you never have to do something differently due to, for example, local regulations which force you to separate different "types" of data. Or you might have different performance needs, so you could store different indexes on different hardware. So there are some guidelines, but there are always situations "in the wild" which are not and cannot be covered by such general best practices.

But yes, if you have one type of device which generates several thousand events per second and another type which generates just a few events per day - you most probably don't want to mix them. That could be a good use case for splitting the events into two separate indexes.

Of course, on top of that there are many other ways to gain a serious performance boost by leveraging several acceleration techniques (datamodel summary acceleration, summary indexing, report acceleration). The way you write your searches can also greatly affect your search performance. But surely you don't want to add a layer of inefficiency with badly architected indexes 😉

As a general rule of thumb - data should be split into different indexes if:

1) There are different access permissions needed - in Splunk you grant access per index

2) There are different requirements for data retention - you set retention time per index
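Both rules of thumb map to per-index settings, which is why they drive the split. As a rough sketch only: the Python below renders the kind of stanzas involved, assuming the standard `frozenTimePeriodInSecs` setting in indexes.conf and `srchIndexesAllowed` in authorize.conf. The helper names, the role name `netops` and the 90-day figure are made up for illustration, and the paths follow the usual `$SPLUNK_DB` layout rather than anything from this thread.

```python
from typing import List

SECONDS_PER_DAY = 86_400


def render_index_stanza(index_name: str, retention_days: int) -> str:
    """Render an indexes.conf stanza with time-based retention for one index."""
    frozen_secs = retention_days * SECONDS_PER_DAY
    return "\n".join([
        f"[{index_name}]",
        f"homePath = $SPLUNK_DB/{index_name}/db",
        f"coldPath = $SPLUNK_DB/{index_name}/colddb",
        f"thawedPath = $SPLUNK_DB/{index_name}/thaweddb",
        # Retention is set per index: buckets older than this are frozen.
        f"frozenTimePeriodInSecs = {frozen_secs}",
    ])


def render_role_stanza(role: str, allowed_indexes: List[str]) -> str:
    """Render an authorize.conf stanza; search access is granted per index."""
    return "\n".join([
        f"[role_{role}]",
        "srchIndexesAllowed = " + ";".join(allowed_indexes),
    ])


# Hypothetical values for illustration only.
print(render_index_stanza("acme_net_fw", 90))
print(render_role_stanza("netops", ["acme_net_fw"]))
```

If two sourcetypes would end up with the same retention and the same roles in a sketch like this, that is a hint they can share an index.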

There might be more subtle cases (like not mixing sources with hugely different cardinalities or activity levels) but these are advanced topics and you might never encounter them.

Sourcetypes define/describe how the data is structured so you need as many of them... as you need. Typically, before actually onboarding data from a given type of source, you'd check whether there is a good TA for that solution. For a relatively wide range of products there are well-written Splunk-built add-ons. For some, there are manufacturer-built add-ons (those can be worse sometimes). For some there are community-built ones, and here you have to be most cautious. Remember that you will probably want not only to ingest the data and parse it into fields but also to make the data CIM-compatible. Ready-made, well-written add-ons save you a lot of time here.

Using different indexes only to simplify searches is overkill. There are other ways to make it easier to write searches - eventtypes, macros, tags. Use them.

As per your questions:

1) Unless you have different teams working with the firewalls and/or different retention needs I don't see a point in splitting the events into separate indexes. You have your sourcetypes, you have your source/host fields. And in the end you'll probably end up putting all this data into an accelerated datamodel and searching against that, not raw index(es).

2) If you create too few indexes, you can always create new ones. The only problem is that you can't move old data between indexes (use case - you split your general IT team into a network ops team and a security team and want to restrict access to some devices). So yes, you might think ahead and group the sources into separate indexes if you think it might be useful in the foreseeable future. If you create too many indexes, however, removing them is a bit harder (the main difference is that adding a new index doesn't require an indexer restart, removing one might).

Of course, the more indexes you have, the more buckets you end up with and the more open files you have on your indexers. There is an upper limit on buckets in the whole clustered environment managed by a cluster manager, but for now you're probably not gonna worry about it.

3) Find where the data comes from, what it contains, what you need in order to use it (existing apps? have to write your own?), and who will use it. There are many different cases. In some organizations Splunk is just deployed once and the types of data almost never change throughout its entire life, but in others the environment is so dynamic that there are several new types of datasources onboarded every week - in such a case it's really, really hard to keep track of what is where and to organize it reasonably.

4) Typical consultant's response - "It depends". It depends on whether you have just one team that handles everything regarding the Windows environment or you have a separate server team and a users' laptops team. Or maybe you have some general helpdesk team which doesn't need to see security-related events, or you have some application developers who only need to see events regarding their application. It simply depends on your particular requirements. There is no "one size fits all" solution here. Some customers split Windows logs, some customers don't, some don't even collect a huge subset of the events generated on endpoints. So YMMV.
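For point 3, the questions (where the data comes from, what it contains, what is needed to use it, who uses it) can be captured as one record per source. This is just one possible shape, not an established format: `CatalogEntry`, its field names and the sample rows are assumptions for illustration, with `pan:traffic` and `pan:threat` used only as example sourcetype names.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class CatalogEntry:
    source: str                      # where the data comes from
    sourcetype: str                  # how the data is structured
    index: str                       # where it is stored
    contents: str                    # what it contains
    consumers: List[str] = field(default_factory=list)  # who will use it
    addon: Optional[str] = None      # existing app/TA needed to use it, if any


def sourcetypes_by_index(entries: List[CatalogEntry]) -> Dict[str, List[str]]:
    """Summarize the catalog as index -> sorted list of sourcetypes."""
    grouped = defaultdict(set)
    for entry in entries:
        grouped[entry.index].add(entry.sourcetype)
    return {index: sorted(sts) for index, sts in sorted(grouped.items())}


# Hypothetical sample rows.
catalog = [
    CatalogEntry("Palo Alto firewalls", "pan:traffic", "acme_net_fw",
                 "session logs", ["netops", "secops"]),
    CatalogEntry("Palo Alto firewalls", "pan:threat", "acme_net_fw",
                 "threat detections", ["secops"]),
]
print(sourcetypes_by_index(catalog))
```

Keeping the consumers next to each entry also makes it easier to spot when access or retention needs start to diverge, which is when splitting an index becomes worth considering.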