In which component in the distributed environment should I configure props.conf?

I am using a universal forwarder (UF) on a remote server to monitor a directory for a CSV file. I have configured inputs.conf on the UF to monitor the directory. The UF forwards the data to a heavy forwarder (HF), which then forwards it to an indexer cluster.

I want to use a source type in props.conf to tell Splunk where to find the timestamp field and the header line.

Which component in the distributed environment needs to have the source type configured: the UF, the HF, or the indexer layer?

Thanks

It depends.

- Search-time transforms go on the search head.

- Index-time transforms go on the indexers (and heavy forwarders).

- Structured-data (CSV/JSON) transforms go on the collector, which can be the universal forwarder.

Since you are ingesting CSV with INDEXED_EXTRACTIONS = CSV, the setting goes in props.conf on the collector, which here is the UF. The events will not be parsed again at the indexer level.
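
A minimal sketch of the UF-side configuration described above, assuming a hypothetical monitored directory `/opt/data/csv` and a hypothetical source type name `my_csv` (substitute your own). The `sourcetype` set on the monitor stanza in inputs.conf is what ties the monitored files to the props.conf stanza that carries INDEXED_EXTRACTIONS.

```ini
# inputs.conf on the universal forwarder
# /opt/data/csv is a hypothetical path; point it at your own directory
[monitor:///opt/data/csv]
# Only pick up files ending in .csv
whitelist = \.csv$
# Hypothetical source type name; must match the props.conf stanza below
sourcetype = my_csv

# props.conf on the universal forwarder
[my_csv]
INDEXED_EXTRACTIONS = csv
```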

Thank you @yannk for the clear delineation.

CSV is a special case. You should have INDEXED_EXTRACTIONS = CSV in props.conf on all three tiers (UF, HF, and indexers).

So I need props.conf at all three layers?

For the CSV case, you need it at the forwarder level and at the indexer level, and from a best-practices perspective the configuration should be identical across all three layers.

Excellent. So as long as I have INDEXED_EXTRACTIONS = CSV, it should pick up the fields? Or do I also need HEADER_FIELD_LINE_NUMBER = 1?

HEADER_FIELD_LINE_NUMBER = 1 is fine, or you can let Splunk detect the header line automatically.
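
If you prefer to set the header line and timestamp field explicitly rather than relying on auto-detection, a props.conf stanza along these lines is one option. This is a sketch: the source type `my_csv` and the column name `event_time` are hypothetical, and the TIME_FORMAT string assumes timestamps such as `2023-01-31 14:05:00`; adjust all three to match your file.

```ini
# props.conf (hypothetical source type name: my_csv)
[my_csv]
INDEXED_EXTRACTIONS = csv
# The line containing the field names is line 1 of the file
HEADER_FIELD_LINE_NUMBER = 1
# Hypothetical column that holds the event timestamp
TIMESTAMP_FIELDS = event_time
# Assumed timestamp layout, e.g. 2023-01-31 14:05:00
TIME_FORMAT = %Y-%m-%d %H:%M:%S
```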

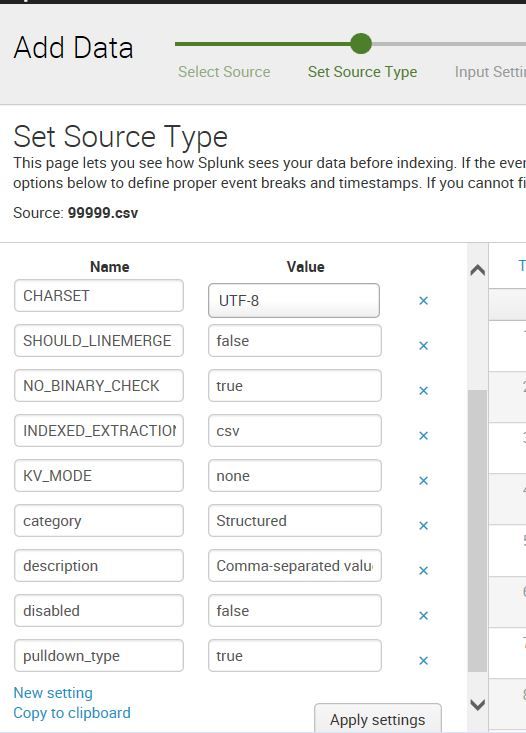

I just used the Add Data feature for a csv file and it shows -

I deleted all the settings except INDEXED_EXTRACTIONS = CSV and finished the upload. The data and all the fields were extracted, and, surprisingly, the generated props.conf stanza is:

    [csv_tst]
    DATETIME_CONFIG =
    INDEXED_EXTRACTIONS = csv
    KV_MODE = none
    NO_BINARY_CHECK = true
    SHOULD_LINEMERGE = false
    category = Structured
    description = Comma-separated value format. Set header and other settings in "Delimited Settings"
    disabled = false
    pulldown_type = true
