- How to extract specific key/value pairs from a JSO...

I have a JSON payload that's ingested through a REST API input on a heavy forwarder, with the following configuration in props.conf (on the heavy forwarder, not on the indexer):

[json_result]

INDEXED_EXTRACTIONS = json

KV_MODE = none

DATETIME_CONFIG = CURRENT

SHOULD_LINEMERGE = false

TRUNCATE = 200000

The ensuing event in Splunk looks like this (minified):

{"totalCount":3,"nextPageKey":null,"result":[{"metricId":"builtin:synthetic.http.resultStatus","data":[{"dimensions":["HTTP_CHECK-02B087D58EC18C33","SUCCESS","SYNTHETIC_LOCATION-2CD023FA5F455E28"],"dimensionMap":{"Result status":"SUCCESS","dt.entity.synthetic_location":"SYNTHETIC_LOCATION-2CD023FA5F455E28","dt.entity.http_check":"HTTP_CHECK-02B087D58EC18C33"},"timestamps":[1639254360000],"values":[1]},{"dimensions":["HTTP_CHECK-02B087D58EC18C33","SUCCESS","SYNTHETIC_LOCATION-833A207E28766E49"],"dimensionMap":{"Result status":"SUCCESS","dt.entity.synthetic_location":"SYNTHETIC_LOCATION-833A207E28766E49","dt.entity.http_check":"HTTP_CHECK-02B087D58EC18C33"},"timestamps":[1639254360000],"values":[1]},{"dimensions":["HTTP_CHECK-02B087D58EC18C33","SUCCESS","SYNTHETIC_LOCATION-1D85D445F05E239A"],"dimensionMap":{"Result status":"SUCCESS","dt.entity.synthetic_location":"SYNTHETIC_LOCATION-1D85D445F05E239A","dt.entity.http_check":"HTTP_CHECK-02B087D58EC18C33"},"timestamps":[1639254360000],"values":[1]}]}]}

The parts I'm trying to extract from the payload (highlighted in red in the original post) are three fields ("Result status", "dt.entity.synthetic_location" and "dt.entity.http_check") and their associated values. I'd like three events created from the payload, one for each occurrence of the three fields, with the fields searchable in Splunk.
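To make the target concrete, here is a minimal Python sketch of the desired result: one small JSON object per entry under result[].data[].dimensionMap. It only illustrates the shape of the three events and is not Splunk configuration. The helper names (`data_entry`, `dimension_maps`) are made up for this example, and the payload is rebuilt from the values shown above.

```python
import json

CHECK = "HTTP_CHECK-02B087D58EC18C33"
LOCATIONS = [
    "SYNTHETIC_LOCATION-2CD023FA5F455E28",
    "SYNTHETIC_LOCATION-833A207E28766E49",
    "SYNTHETIC_LOCATION-1D85D445F05E239A",
]


def data_entry(location: str) -> dict:
    """Build one element of the "data" array, mirroring the payload above."""
    return {
        "dimensions": [CHECK, "SUCCESS", location],
        "dimensionMap": {
            "Result status": "SUCCESS",
            "dt.entity.synthetic_location": location,
            "dt.entity.http_check": CHECK,
        },
        "timestamps": [1639254360000],
        "values": [1],
    }


# Rebuild the minified payload (compact separators, as in the ingested event).
raw = json.dumps(
    {
        "totalCount": 3,
        "nextPageKey": None,
        "result": [
            {
                "metricId": "builtin:synthetic.http.resultStatus",
                "data": [data_entry(loc) for loc in LOCATIONS],
            }
        ],
    },
    separators=(",", ":"),
)


def dimension_maps(raw_event: str) -> list:
    """Return every dimensionMap object found under result[].data[]."""
    payload = json.loads(raw_event)
    return [
        entry["dimensionMap"]
        for result in payload.get("result", [])
        for entry in result.get("data", [])
    ]


events = dimension_maps(raw)
for event in events:
    print(json.dumps(event))  # one line per desired event
```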

I've tried this approach in props.conf to get what I'm looking for...

[json_result]

SHOULD_LINEMERGE = false

LINE_BREAKER = },

DATETIME_CONFIG = CURRENT

TRUNCATE = 0

SEDCMD-remove_prefix = s/{"totalCount":.*"nextPageKey":.*"result":\[{"metricId":.*"data":\[//g

SEDCMD-remove_dimensions = s/{"dimensions":.*"dimensionMap"://g

SEDCMD-remove_timevalues = s/,"timestamps":.*"values":.*}//g

SEDCMD-remove_suffix = s/\]}\]}//g

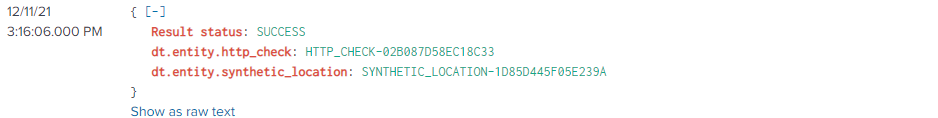

...but I'm only getting one set of fields to show up as an event in Splunk:

Also, the fields aren't showing up as "interesting fields" in the left navbar (possibly because the props.conf is not on the indexer?).
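One plausible reading of the single-event symptom, offered as a guess rather than a confirmed diagnosis: the props.conf spec requires LINE_BREAKER to contain a capturing group, and `},` has none, so the payload may never have been split. If the four SEDCMD expressions then run against the whole unbroken event, the greedy `.*` spans from the first "dimensions" to the last "dimensionMap" and leaves only the final set of fields. The Python sketch below reproduces that effect with `re.sub`. It assumes Python's regex engine behaves like Splunk's for these patterns and that the order in which the expressions run does not matter here.

```python
import json
import re

CHECK = "HTTP_CHECK-02B087D58EC18C33"
LOCATIONS = [
    "SYNTHETIC_LOCATION-2CD023FA5F455E28",
    "SYNTHETIC_LOCATION-833A207E28766E49",
    "SYNTHETIC_LOCATION-1D85D445F05E239A",
]


def data_entry(location: str) -> dict:
    """Build one element of the "data" array, mirroring the payload above."""
    return {
        "dimensions": [CHECK, "SUCCESS", location],
        "dimensionMap": {
            "Result status": "SUCCESS",
            "dt.entity.synthetic_location": location,
            "dt.entity.http_check": CHECK,
        },
        "timestamps": [1639254360000],
        "values": [1],
    }


# The whole payload as ONE event (assumption: no line breaking took place).
raw = json.dumps(
    {
        "totalCount": 3,
        "nextPageKey": None,
        "result": [
            {
                "metricId": "builtin:synthetic.http.resultStatus",
                "data": [data_entry(loc) for loc in LOCATIONS],
            }
        ],
    },
    separators=(",", ":"),
)

# The four SEDCMD patterns from the stanza above; each replaces with nothing.
SEDCMDS = [
    r'{"totalCount":.*"nextPageKey":.*"result":\[{"metricId":.*"data":\[',
    r'{"dimensions":.*"dimensionMap":',
    r',"timestamps":.*"values":.*}',
    r'\]}\]}',
]


def apply_sedcmds(event: str) -> str:
    """Apply every s/pattern//g substitution to a single event string."""
    for pattern in SEDCMDS:
        event = re.sub(pattern, "", event)
    return event


survivor = apply_sedcmds(raw)
print(survivor)  # a single JSON object: the fields of the LAST data entry only
```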

Any assistance would be greatly appreciated.

UPDATE: I referenced this post that's pretty close to what I'm trying to accomplish:

The format of the JSON payload cited in that post is different from the format of the payload I'm using, though, so I'm guessing that some additional logic would be necessary to accommodate my format.


Did a few things to get this working correctly:

- Since I'm using the REST API modular input, I established and implemented a custom response handler to trim some of the original minified JSON payload (revised payload below):

{"metricId": "builtin:synthetic.http.resultStatus", "data": [{"dimensions": ["HTTP_CHECK-F79EBFAF0B5C8BC1", "SUCCESS", "SYNTHETIC_LOCATION-2CD023FA5F455E28"], "dimensionMap": {"Result status": "SUCCESS", "dt.entity.synthetic_location": "SYNTHETIC_LOCATION-2CD023FA5F455E28", "dt.entity.http_check": "HTTP_CHECK-F79EBFAF0B5C8BC1"}, "timestamps": [1639412160000], "values": [1]}, {"dimensions": ["HTTP_CHECK-F79EBFAF0B5C8BC1", "SUCCESS", "SYNTHETIC_LOCATION-833A207E28766E49"], "dimensionMap": {"Result status": "SUCCESS", "dt.entity.synthetic_location": "SYNTHETIC_LOCATION-833A207E28766E49", "dt.entity.http_check": "HTTP_CHECK-F79EBFAF0B5C8BC1"}, "timestamps": [1639412160000], "values": [1]}, {"dimensions": ["HTTP_CHECK-F79EBFAF0B5C8BC1", "SUCCESS", "SYNTHETIC_LOCATION-1D85D445F05E239A"], "dimensionMap": {"Result status": "SUCCESS", "dt.entity.synthetic_location": "SYNTHETIC_LOCATION-1D85D445F05E239A", "dt.entity.http_check": "HTTP_CHECK-F79EBFAF0B5C8BC1"}, "timestamps": [1639412160000], "values": [1]}]}
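The handler itself is not shown in the post, so the following is only a sketch of the trimming logic as a standalone Python function: drop the totalCount/nextPageKey wrapper and emit each item of "result" as its own JSON string. The function name `trim_response` is hypothetical, and how it would be wired into the modular input's response handler is left out. The spacing in the revised payload (a space after each comma and colon) is consistent with Python's default `json.dumps` output, which would explain why the LINE_BREAKER further down matches `\s` after the comma.

```python
import json


def trim_response(raw_response: str) -> list:
    """Drop the outer wrapper and return one JSON string per item in "result"."""
    payload = json.loads(raw_response)
    # json.dumps with default separators writes ", " and ": ".
    return [json.dumps(item) for item in payload.get("result", [])]


# Small stand-in for the API response: same structure, a single data entry.
sample = json.dumps(
    {
        "totalCount": 1,
        "nextPageKey": None,
        "result": [
            {
                "metricId": "builtin:synthetic.http.resultStatus",
                "data": [
                    {
                        "dimensions": [
                            "HTTP_CHECK-F79EBFAF0B5C8BC1",
                            "SUCCESS",
                            "SYNTHETIC_LOCATION-2CD023FA5F455E28",
                        ],
                        "dimensionMap": {
                            "Result status": "SUCCESS",
                            "dt.entity.synthetic_location": "SYNTHETIC_LOCATION-2CD023FA5F455E28",
                            "dt.entity.http_check": "HTTP_CHECK-F79EBFAF0B5C8BC1",
                        },
                        "timestamps": [1639412160000],
                        "values": [1],
                    }
                ],
            }
        ],
    },
    separators=(",", ":"),
)

trimmed = trim_response(sample)
for line in trimmed:
    print(line)
```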

- After taking a step back and realizing that the line break (parsing queue) must be spot on before the SEDCMD (typing queue) is applied, I identified my break points in the updated JSON payload and defined my SEDCMD executions to remove additional payload content:

SHOULD_LINEMERGE = false

LINE_BREAKER = \}(\,\s)

DATETIME_CONFIG = CURRENT

TRUNCATE = 0

SEDCMD-remove_header = s/(^\{.*"data":\s\[)//g

SEDCMD-remove_footer = s/\}\]\}/}/g

SEDCMD-remove_dimensions = s/{"dimensions":.*"dimensionMap":\s//g

SEDCMD-remove_timevalues = s/"timestamps":.*"values":.*}//g
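The interplay of these settings can be checked offline with a short Python simulation. It is a sketch under stated assumptions: Python's `re` stands in for Splunk's regex engine, the text matched by the LINE_BREAKER capture group is discarded between events, and fragments left empty after the SEDCMD pass are treated as not indexed (which matches the three events reported, but is not verified against Splunk here). Note that `\}(\,\s)` also breaks after each dimensionMap object, so every "timestamps"/"values" tail becomes its own fragment and is then emptied by remove_timevalues.

```python
import json
import re

CHECK = "HTTP_CHECK-F79EBFAF0B5C8BC1"
LOCATIONS = [
    "SYNTHETIC_LOCATION-2CD023FA5F455E28",
    "SYNTHETIC_LOCATION-833A207E28766E49",
    "SYNTHETIC_LOCATION-1D85D445F05E239A",
]


def data_entry(location: str) -> dict:
    """Build one element of the "data" array, mirroring the revised payload."""
    return {
        "dimensions": [CHECK, "SUCCESS", location],
        "dimensionMap": {
            "Result status": "SUCCESS",
            "dt.entity.synthetic_location": location,
            "dt.entity.http_check": CHECK,
        },
        "timestamps": [1639412160000],
        "values": [1],
    }


# Revised payload; default json.dumps spacing (", " and ": ") matches the post.
raw = json.dumps(
    {
        "metricId": "builtin:synthetic.http.resultStatus",
        "data": [data_entry(loc) for loc in LOCATIONS],
    }
)

LINE_BREAKER = r"\}(\,\s)"
SEDCMDS = [  # (pattern, replacement) pairs copied from the stanza above
    (r'(^\{.*"data":\s\[)', ""),
    (r"\}\]\}", "}"),
    (r'{"dimensions":.*"dimensionMap":\s', ""),
    (r'"timestamps":.*"values":.*}', ""),
]


def break_events(text: str, breaker: str) -> list:
    """Split text at each match; the capture group is dropped between events."""
    chunks, pos = [], 0
    for match in re.finditer(breaker, text):
        chunks.append(text[pos:match.start(1)])
        pos = match.end(1)
    chunks.append(text[pos:])
    return chunks


def apply_sedcmds(event: str) -> str:
    """Apply each s/pattern/replacement/g substitution to one event."""
    for pattern, replacement in SEDCMDS:
        event = re.sub(pattern, replacement, event)
    return event


fragments = break_events(raw, LINE_BREAKER)
# Assumption: fragments that end up empty are not indexed as events.
events = [e for e in (apply_sedcmds(f) for f in fragments) if e]
for event in events:
    print(event)
```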

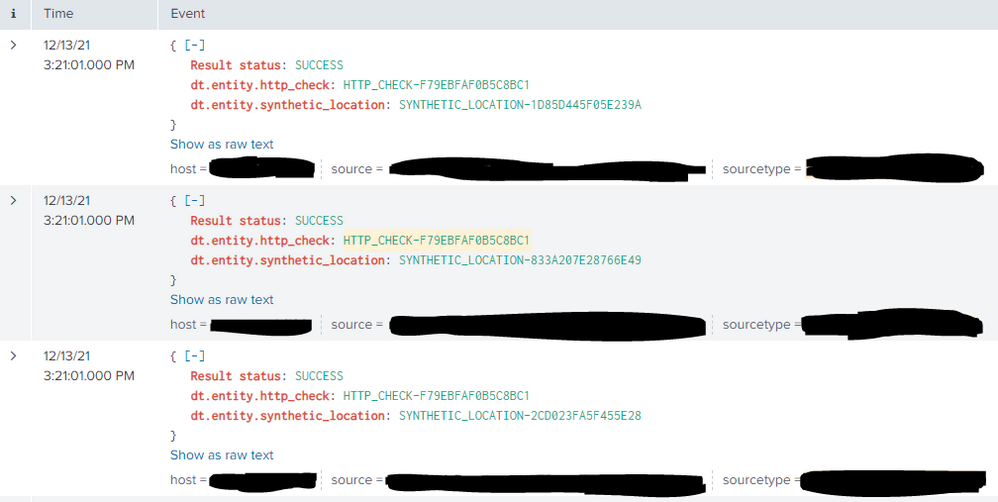

- After stopping and starting the Splunk service and waiting a tick before searching, I validated that I now have the desired result:

While this was working correctly with the props.conf on just the heavy forwarder, I found that I had to implement the props.conf on the indexers as well in order for the three fields in each event to show up in the "Interesting Fields" portion of the left navbar.


Yes. The props/transforms settings you apply on HFs take effect while Splunk is ingesting the data (event breaking, timestamp recognition/parsing, indexed field extraction), but search-time extractions are performed on the search heads, so your search-time settings need to be there.


You could try to clone the event using CLONE_SOURCETYPE, cutting out a different part of it each time. At least that's the only way I could think of.

About the interesting fields issue - are you searching in fast mode or verbose mode?


I'm searching in Verbose mode. I updated my original post with a link to another post that falls in line with what I'm trying to accomplish. The layout of the JSON payload in that post is different from my payload's layout...but they were able to break out the events successfully. I'm having difficulty trying to replicate that success.