
How to configure Splunk to parse and index JSON data

I have a custom-crafted JSON file that contains a mix of data types. I'm new to Splunk administration, so bear with me.

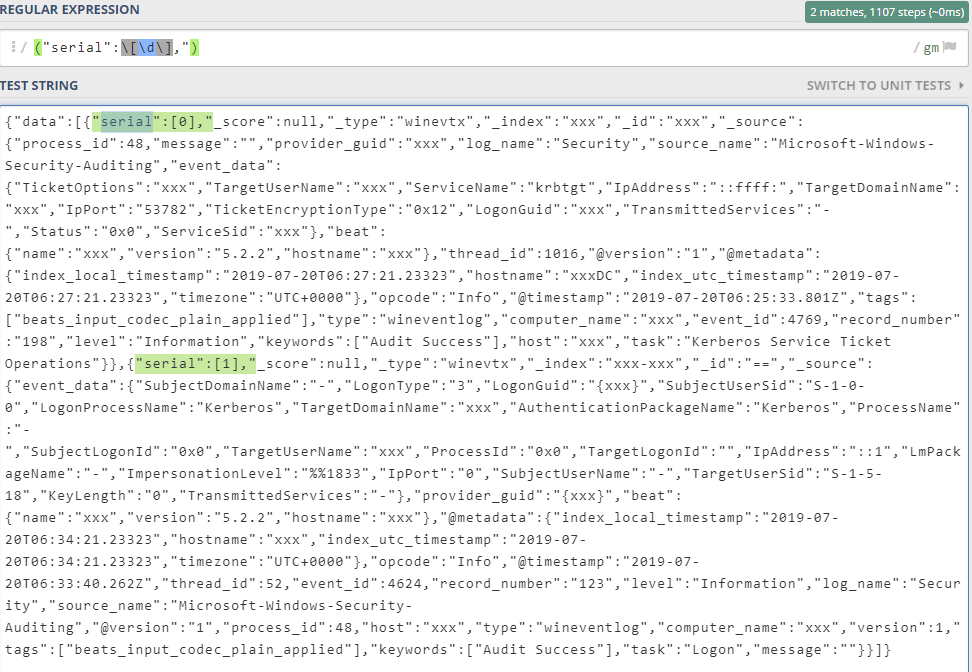

This is valid JSON. As far as I understand, I need to define a new line-breaking rule with a regex so that Splunk parses and indexes this data correctly, with all fields. I minified the file and uploaded it after verifying that my regex actually matches.

Can you help me find a good regex definition? Below is a snippet from the file I want to parse; it should contain 2 events:

```json
{"data":[{"serial":[0],"_score":null,"_type":"winevtx","_index":"xxx","_id":"xxx","_source":{"process_id":48,"message":"","provider_guid":"xxx","log_name":"Security","source_name":"Microsoft-Windows-Security-Auditing","event_data":{"TicketOptions":"xxx","TargetUserName":"xxx","ServiceName":"krbtgt","IpAddress":"::ffff:","TargetDomainName":"xxx","IpPort":"53782","TicketEncryptionType":"0x12","LogonGuid":"xxx","TransmittedServices":"-","Status":"0x0","ServiceSid":"xxx"},"beat":{"name":"xxx","version":"5.2.2","hostname":"xxx"},"thread_id":1016,"@version":"1","@metadata":{"index_local_timestamp":"2019-07-20T06:27:21.23323","hostname":"xxxDC","index_utc_timestamp":"2019-07-20T06:27:21.23323","timezone":"UTC+0000"},"opcode":"Info","@timestamp":"2019-07-20T06:25:33.801Z","tags":["beats_input_codec_plain_applied"],"type":"wineventlog","computer_name":"xxx","event_id":4769,"record_number":"198","level":"Information","keywords":["Audit Success"],"host":"xxx","task":"Kerberos Service Ticket Operations"}},{"serial":[1],"_score":null,"_type":"winevtx","_index":"xxx-xxx","_id":"==","_source":{"event_data":{"SubjectDomainName":"-","LogonType":"3","LogonGuid":"{xxx}","SubjectUserSid":"S-1-0-0","LogonProcessName":"Kerberos","TargetDomainName":"xxx","AuthenticationPackageName":"Kerberos","ProcessName":"-","SubjectLogonId":"0x0","TargetUserName":"xxx","ProcessId":"0x0","TargetLogonId":"","IpAddress":"::1","LmPackageName":"-","ImpersonationLevel":"%%1833","IpPort":"0","SubjectUserName":"-","TargetUserSid":"S-1-5-18","KeyLength":"0","TransmittedServices":"-"},"provider_guid":"{xxx}","beat":{"name":"xxx","version":"5.2.2","hostname":"xxx"},"@metadata":{"index_local_timestamp":"2019-07-20T06:34:21.23323","hostname":"xxx","index_utc_timestamp":"2019-07-20T06:34:21.23323","timezone":"UTC+0000"},"opcode":"Info","@timestamp":"2019-07-20T06:33:40.262Z","thread_id":52,"event_id":4624,"record_number":"123","level":"Information","log_name":"Security","source_name":"Microsoft-Windows-Security-Auditing","@version":"1","process_id":48,"host":"xxx","type":"wineventlog","computer_name":"xxx","version":1,"tags":["beats_input_codec_plain_applied"],"keywords":["Audit Success"],"task":"Logon","message":""}}]}
```

Berry


You can use the sourcetype below (or the default pretrained `_json` sourcetype):

```ini
[data_json]
pulldown_type = true
INDEXED_EXTRACTIONS = json
KV_MODE = none
category = Structured
```


Thanks, mate.

I tried the default json sourcetype with no success; it seems something else is needed for Splunk to digest this data. I believe I need to configure the line breaker, but I'm not sure what the value should be. Any ideas?
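A minimal index-time sketch, assuming the file is minified onto a single line as in the snippet and that every record begins with `{"serial"`. The sourcetype name `custom_json` is hypothetical. `LINE_BREAKER` splits at the comma between `}` and `{"serial"`: Splunk discards the text matched by the first capture group, so the `}` stays with the previous event and `{"serial"` starts the next one. The two `SEDCMD` lines then strip the `{"data":[` wrapper left on the first event and the `]}` left on the last one, so each event is a standalone JSON object. These settings belong on the indexer or heavy forwarder that parses the data. As far as I know, `INDEXED_EXTRACTIONS = json` should not be set on this stanza, because that mode treats the whole top-level object as one event instead of using this line breaking.

```ini
# Hypothetical sourcetype name; assign it to the input that reads this file.
[custom_json]
# Do not re-merge lines; break purely on LINE_BREAKER.
SHOULD_LINEMERGE = false
# Break between records: the captured comma is discarded, "}" ends the
# previous event and {"serial" begins the next one.
LINE_BREAKER = \}(,)\{"serial"
# Remove the array wrapper left on the first and last events.
SEDCMD-strip_header = s/^\{"data":\[//
SEDCMD-strip_footer = s/\]\}$//
# Allow events longer than the 10000-byte default, in case a record is large.
TRUNCATE = 100000
```

After restarting and re-indexing the file, a search such as `sourcetype=custom_json | spath` should show each record as its own event.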


```
| makeresults
| eval _raw="{\"data\":[{\"serial\":[0],\"_score\":null,\"_type\":\"winevtx\",\"_index\":\"xxx\",\"_id\":\"xxx\",\"_source\":{\"process_id\":48,\"message\":\"\",\"provider_guid\":\"xxx\",\"log_name\":\"Security\",\"source_name\":\"Microsoft-Windows-Security-Auditing\",\"event_data\":{\"TicketOptions\":\"xxx\",\"TargetUserName\":\"xxx\",\"ServiceName\":\"krbtgt\",\"IpAddress\":\"::ffff:\",\"TargetDomainName\":\"xxx\",\"IpPort\":\"53782\",\"TicketEncryptionType\":\"0x12\",\"LogonGuid\":\"xxx\",\"TransmittedServices\":\"-\",\"Status\":\"0x0\",\"ServiceSid\":\"xxx\"},\"beat\":{\"name\":\"xxx\",\"version\":\"5.2.2\",\"hostname\":\"xxx\"},\"thread_id\":1016,\"@version\":\"1\",\"@metadata\":{\"index_local_timestamp\":\"2019-07-20T06:27:21.23323\",\"hostname\":\"xxxDC\",\"index_utc_timestamp\":\"2019-07-20T06:27:21.23323\",\"timezone\":\"UTC+0000\"},\"opcode\":\"Info\",\"@timestamp\":\"2019-07-20T06:25:33.801Z\",\"tags\":[\"beats_input_codec_plain_applied\"],\"type\":\"wineventlog\",\"computer_name\":\"xxx\",\"event_id\":4769,\"record_number\":\"198\",\"level\":\"Information\",\"keywords\":[\"Audit Success\"],\"host\":\"xxx\",\"task\":\"Kerberos Service Ticket Operations\"}},{\"serial\":[1],\"_score\":null,\"_type\":\"winevtx\",\"_index\":\"xxx-xxx\",\"_id\":\"==\",\"_source\":{\"event_data\":{\"SubjectDomainName\":\"-\",\"LogonType\":\"3\",\"LogonGuid\":\"{xxx}\",\"SubjectUserSid\":\"S-1-0-0\",\"LogonProcessName\":\"Kerberos\",\"TargetDomainName\":\"xxx\",\"AuthenticationPackageName\":\"Kerberos\",\"ProcessName\":\"-\",\"SubjectLogonId\":\"0x0\",\"TargetUserName\":\"xxx\",\"ProcessId\":\"0x0\",\"TargetLogonId\":\"\",\"IpAddress\":\"::1\",\"LmPackageName\":\"-\",\"ImpersonationLevel\":\"%%1833\",\"IpPort\":\"0\",\"SubjectUserName\":\"-\",\"TargetUserSid\":\"S-1-5-18\",\"KeyLength\":\"0\",\"TransmittedServices\":\"-\"},\"provider_guid\":\"{xxx}\",\"beat\":{\"name\":\"xxx\",\"version\":\"5.2.2\",\"hostname\":\"xxx\"},\"@metadata\":{\"index_local_timestamp\":\"2019-07-20T06:34:21.23323\",\"hostname\":\"xxx\",\"index_utc_timestamp\":\"2019-07-20T06:34:21.23323\",\"timezone\":\"UTC+0000\"},\"opcode\":\"Info\",\"@timestamp\":\"2019-07-20T06:33:40.262Z\",\"thread_id\":52,\"event_id\":4624,\"record_number\":\"123\",\"level\":\"Information\",\"log_name\":\"Security\",\"source_name\":\"Microsoft-Windows-Security-Auditing\",\"@version\":\"1\",\"process_id\":48,\"host\":\"xxx\",\"type\":\"wineventlog\",\"computer_name\":\"xxx\",\"version\":1,\"tags\":[\"beats_input_codec_plain_applied\"],\"keywords\":[\"Audit Success\"],\"task\":\"Logon\",\"message\":\"\"}}]}"
| spath
```

props.conf


Thanks for that, but I'm looking for parsing at index time. I've imported the JSON and now need to parse it so that Splunk digests it correctly.

What I attached here is just a small snippet, as an example.
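Since the goal is index-time parsing, the event time is also worth setting explicitly rather than leaving it to automatic detection. A sketch, assuming `@timestamp` (for example `2019-07-20T06:25:33.801Z`) is the time you want for each event and reusing the hypothetical `custom_json` sourcetype name; merge these lines into whatever stanza you actually use.

```ini
# Hypothetical sourcetype name; merge into your existing stanza.
[custom_json]
# Read the event time from the "@timestamp" field only.
TIME_PREFIX = "@timestamp":"
# Matches values like 2019-07-20T06:25:33.801Z (the trailing Z is literal).
TIME_FORMAT = %Y-%m-%dT%H:%M:%S.%3NZ
# The trailing Z means the value is in UTC.
TZ = UTC
# The timestamp is 24 characters long; do not scan further than needed.
MAX_TIMESTAMP_LOOKAHEAD = 30
```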


props.conf


I just edited my question; I hope it's clearer now.


```ini
KV_MODE = json
```

With your corrected question, `spath` parses the data fine, so this setting basically works.

If you modify a .conf file, you must restart Splunk.
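A sketch of where this search-time setting could go, again using the hypothetical `custom_json` sourcetype name: props.conf on the search head (on a single-instance Splunk, the same props.conf as the index-time settings). Once each record is its own event, `KV_MODE = json` should extract nested keys as dotted field names and the `serial` array as a multivalue field named `serial{}`.

```ini
# Search-time props.conf on the search head (hypothetical sourcetype name).
[custom_json]
# Extract JSON fields automatically when searching.
KV_MODE = json
# Leave INDEXED_EXTRACTIONS unset for this sourcetype; combining it with
# KV_MODE = json can extract the same fields twice.
```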


I'll try to be more precise. I know that I need to configure props.conf (or the sourcetype settings during data import), but I'm not sure how: what is the right regex syntax? In the example above there are 2 distinct events. When I choose json as the sourcetype, the data is not shown as expected (not all fields are parsed), probably because of the "serial" array. I've read a few similar questions, but none matched my case.

Berry