- CSV file parsing issue

Hi Team, @ITWhisperer @gcusello

I am ingesting CSV data into Splunk, testing from a Universal Forwarder (UF) on a dev Windows machine.

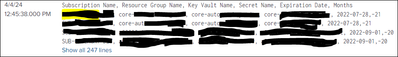

This is the sample csv data:

| Subscription Name | Resource Group Name | Key Vault Name | Secret Name | Expiration Date | Months |
| --- | --- | --- | --- | --- | --- |
| SUB-dully | core-auto | core-auto | core-auto-cert | 2022-07-28 | -21 |
| SUB-gully | core-auto | core-auto | core-auto-cert | 2022-07-28 | -21 |
| SUB-pally | core-auto | core-auto | core-auto-cert | 2022-09-01 | -20 |

In the output I am getting, all rows are indexed as a single event.

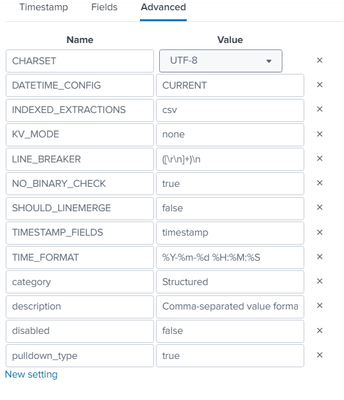

I created inputs.conf, sourcetype

where the sourcetype configurations are

Can anyone help me understand why the events are not breaking?

Hi @phanikumarcs ,

Splunk probably doesn't recognize the timestamp field and format you configured.

In your data I don't see a "timestamp" field with the format %Y-%m-%d %H:%M:%S. Where is it?

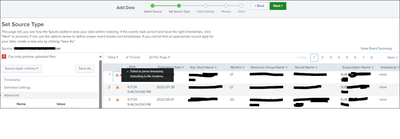

Try to manually add a sample of this data using the Add Data function, which guides you through the sourcetype creation.

Ciao.

Giuseppe
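
To illustrate the mismatch described above, here is a minimal Python sketch. It is only an analogy: Python's `datetime.strptime` is not Splunk's timestamp parser, and the helper name `matches_time_format` is hypothetical. It checks whether the only date-like value in the sample data, `2022-07-28` from the Expiration Date column, satisfies the configured format `%Y-%m-%d %H:%M:%S`.

```python
from datetime import datetime

# TIME_FORMAT quoted in the thread.
CONFIGURED_FORMAT = "%Y-%m-%d %H:%M:%S"


def matches_time_format(value: str, fmt: str = CONFIGURED_FORMAT) -> bool:
    """Return True if `value` parses completely with `fmt` (hypothetical helper)."""
    try:
        datetime.strptime(value, fmt)
        return True
    except ValueError:
        return False


if __name__ == "__main__":
    # Date-only value from the Expiration Date column: it has no time part.
    print(matches_time_format("2022-07-28"))
    # A value that carries the time part the format expects.
    print(matches_time_format("2022-07-28 00:00:00"))
    # The same date checked against a date-only format.
    print(matches_time_format("2022-07-28", "%Y-%m-%d"))
```

The date-only value fails against the configured format because the hour, minute, and second parts are missing, which is consistent with a "failed to parse timestamp" style of warning.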

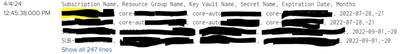

@gcusello Yes, I tried Add Data via upload. When I select csv as the sourcetype there, I can see the timestamp field.

Hi @phanikumarcs,

Is the timestamp field one of the columns of your CSV file, or is it automatically generated by Splunk because it isn't present in the CSV file?

I don't see the timestamp field in the screenshot you shared.

In your screenshot and in your table there are only the following fields: Subscription Name, Resource Group Name, Key Vault Name, Secret Name, Expiration Date, Months.

Ciao.

Giuseppe

Thanks for your help in understanding the issue.

Case 1:

Actually, there is no timestamp in the provided CSV. The screenshot shows data from the sample I ingested from the dev machine via the UF; even there, I do not see a "timestamp" field in the events.

Case 2:

When I upload the CSV through the data inputs and select the sourcetype "cmkcsv", it shows the timestamp field. Whatever settings I add under Advanced, the warning "failed to parse timestamp defaulting to file modtime" does not go away.

[ cmkcsv ]

DATETIME_CONFIG=CURRENT

INDEXED_EXTRACTIONS=csv

KV_MODE=none

LINE_BREAKER=\n\W

NO_BINARY_CHECK=true

SHOULD_LINEMERGE=false

TIME_FORMAT=%Y-%m-%d %H:%M:%S

TRUNCATE=200

category=Structured

description=Comma-separated value format. Set header and other settings in "Delimited Settings"

disabled=false

pulldown_type=true

TIME_PREFIX=^\w+\s*\w+,\s*\w+,\s*

MAX_TIMESTAMP_LOOKAHEAD=20
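
As a rough way to see why these timestamp settings may not line up with the data, the following Python sketch applies the `TIME_PREFIX` regex from the stanza above to one sample row. Several things here are assumptions: the raw line is reconstructed from the sample table as plain comma-separated text (the real file may differ), Python's `re` module stands in for Splunk's regex engine, and `text_after_prefix` is a hypothetical helper.

```python
import re

# TIME_PREFIX copied from the sourcetype stanza above.
TIME_PREFIX = r"^\w+\s*\w+,\s*\w+,\s*"

# ASSUMPTION: raw CSV row reconstructed from the sample table; the real file may differ.
SAMPLE_ROW = "SUB-dully,core-auto,core-auto,core-auto-cert,2022-07-28,-21"


def text_after_prefix(line: str, prefix: str = TIME_PREFIX):
    """Return the text following the prefix match, or None if the prefix does not match."""
    match = re.search(prefix, line)
    return None if match is None else line[match.end():]


if __name__ == "__main__":
    # The hyphens in the values are not matched by \w, so the prefix cannot match this row.
    print(text_after_prefix(SAMPLE_ROW))
    # Hypothetical line shaped the way the regex expects: two words, a comma, a word, a comma.
    print(text_after_prefix("foo bar, baz, 2022-07-28 10:00:00"))
```

Under these assumptions the prefix never matches a data row, so no timestamp position is found before `TIME_FORMAT` is even applied.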

Hi @phanikumarcs ,

As I supposed, Splunk doesn't find the timestamp, so it doesn't break the events.

Remove the timestamp options and keep the line breaker:

[ cmkcsv ]

DATETIME_CONFIG=CURRENT

INDEXED_EXTRACTIONS=csv

KV_MODE=none

LINE_BREAKER=\r\n

NO_BINARY_CHECK=true

SHOULD_LINEMERGE=false

TRUNCATE=200

category=Structured

description=Comma-separated value format. Set header and other settings in "Delimited Settings"

disabled=false

pulldown_type=true

Ciao.

Giuseppe

@gcusello Yes, understood. I did the same, thank you.

@ITWhisperer Any idea? I need help here.

Now that I have ingested the CSV file, I am getting the following from this search:

index=foo host=nx7503 source=C:/*/mkd.csv

Fields:

Subscription

Resource

Key Vault

Secret

Expiration Date

Months

CSV file:

| Subscription | Resource | Key Vault | Secret | Expiration Date | Months |
| --- | --- | --- | --- | --- | --- |
| BoB-foo | Dicore-automat | Dicore-automat-keycore | Di core-tuubsp1sct | 2022-07-28 | -21 |
| BoB-foo | Dicore-automat | Dicore-automat-keycore | Dicore-stor1scrt | 2022-07-28 | -21 |
| BoB-foo | G01462-mgmt-foo | G86413-vaultcore | G86413-secret-foo | 2022-09-01 | -20 |

And from the lookup(foo.csv)

Lookup: foo.csv

| Application | environment | appOwner |
| --- | --- | --- |
| Caliber | Dicore - TCG | foo@gmail.com |
| Keygroup | G01462 - QA | goo@gmail.com |
| Keygroup | G01462 - SIT | boo@gmail.com |

When the "Expiration Date", "Resource", and "environment" match, I want to trigger the alert and send mail to the respective email addresses (appOwner). How can I achieve this?

I am not sure what you are asking of me here - your original issue seems to have been solved by @gcusello

Hi @ITWhisperer yes that is resolved. No worries.

@gcusello @ITWhisperer please help

This is a separate issue, related to the CSV dataset and the lookup dataset.

From this SPL: source="cmkcsv.csv" host="DESKTOP" index="cmk" sourcetype="cmkcsv"

Getting output below

| Subscription | Resource | Key Vault | Secret | Expiration Date | Months |
| --- | --- | --- | --- | --- | --- |
| BoB-foo | Dicore-automat | Dicore-automat-keycore | Di core-tuubsp1sct | 2022-07-28 | -21 |
| BoB-foo | Dicore-automat | Dicore-automat-keycore | Dicore-stor1scrt | 2022-07-28 | -21 |
| BoB-foo | G01462-mgmt-foo | G86413-vaultcore | G86413-secret-foo |

From this lookup: | inputlookup cmklookup.csv

Getting output below

| Application | environment | appOwner |
| --- | --- | --- |
| Caliber | Dicore - TCG | foo@gmail.com |
| Keygroup | G01462 - QA | goo@gmail.com |
| Keygroup | G01462 - SIT | boo@gmail.com |

I want to combine the two queries into one, where the output only displays results in which the 'environment' and 'Resource' fields match. For instance, if 'G01462' appears in both fields across the two datasets, that row should be included in the output. How can I do this? Could anyone help me write the SPL? I wrote the following searches, but they are not working for me.

source="cmkcsv.csv" host="DESKTOP" index="cmk" sourcetype="cmkcsv"

|join type=inner [ | inputlookup cmklookup.csv environment]

source="cmkcsv.csv" host="DESKTOP" index="cmk" sourcetype="cmkcsv"

| lookup cmklookup.csv environment AS "Resource" OUTPUT "environment"
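
To make the intended matching concrete, here is a small Python sketch of the logic (not SPL). The full values never match exactly, for example `G01462-mgmt-foo` versus `G01462 - QA`, so the sketch derives a shared key from both sides first. The key rule is an assumption based only on the sample rows: it takes the leading token before the first space or hyphen. `match_key` and `inner_join` are hypothetical helper names, and only the fields needed for the join are copied from the tables above.

```python
import re

# Rows copied from the sample output in the thread (only the fields used here).
EVENTS = [
    {"Resource": "Dicore-automat", "Secret": "Dicore-stor1scrt"},
    {"Resource": "G01462-mgmt-foo", "Secret": "G86413-secret-foo"},
]
LOOKUP = [
    {"Application": "Caliber", "environment": "Dicore - TCG", "appOwner": "foo@gmail.com"},
    {"Application": "Keygroup", "environment": "G01462 - QA", "appOwner": "goo@gmail.com"},
    {"Application": "Keygroup", "environment": "G01462 - SIT", "appOwner": "boo@gmail.com"},
]


def match_key(value: str) -> str:
    """ASSUMPTION: the shared key is the leading token before the first space or hyphen."""
    return re.split(r"[\s-]+", value.strip(), maxsplit=1)[0]


def inner_join(events, lookup):
    """Pair every event with every lookup row that shares its match key."""
    by_key = {}
    for row in lookup:
        by_key.setdefault(match_key(row["environment"]), []).append(row)
    return [
        {**event, **row}
        for event in events
        for row in by_key.get(match_key(event["Resource"]), [])
    ]


if __name__ == "__main__":
    for joined in inner_join(EVENTS, LOOKUP):
        print(joined["Resource"], joined["environment"], joined["appOwner"])
```

In SPL, the same idea would presumably require deriving such a key on both the events and the lookup (for example with `rex` or `eval`) before `lookup` or `join` can match on it, since a plain lookup on the full `Resource` value compares `Dicore-automat` against `Dicore - TCG`.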

Good for you, see you next time!

Ciao and happy splunking

Giuseppe

P.S.: Karma Points are appreciated by all the contributors 😉

If it is a new / different issue, please raise it as a new question, that way the solved one can stay solved and people can look to help with the unsolved one.

Done, thank you @ITWhisperer