- splunk indexer: status: "pending", fully searchabl...

After updating a bucket replication policy and doing a rolling restart of cluster indexers, one of the indexers seems stuck in this state:

Question: where do I go, what do I do, to figure out what's the root cause and how to fix it?

Cluster status in plaintext:

- Search Factor Not Met

- Replication Factor Not Met

- One of three indexers: Fully Searchable: No, Status: Pending.

- One out of 12 indexes shows with Searchable and Replicated Data Copies (the rest seem fine)

Under "Indexer Clustering: Service Activity", "Snapshots" - a number of "pending" tasks that seem to be stuck and never moving to "in progress" status:

- "Fixup Tasks - In Progress (0)"

- "Fixup Tasks - Pending":

-- Tasks to Meet Search Factor (4)

-- Tasks to Meet Replication Factor (6)

-- Tasks to Meet Generation (6)

Tasks to Meet Search Factor (4)

| Bucket | Index | Trigger Condition | Trigger Time | Current State |
| --- | --- | --- | --- | --- |
| _metrics~34~4C2AF0DE-E42F-489B-92FB-2CA3FC68AC85 | _metrics | does not meet: sf & rf | | Waiting 'target_wait_time' before search factor fixup |
| _metrics~34~64AE7236-EE5E-4EEE-AEBF-203F149FCB61 | _metrics | does not meet: primality & sf & rf | | Waiting 'target_wait_time' before search factor fixup |
| _metrics~35~4C2AF0DE-E42F-489B-92FB-2CA3FC68AC85 | _metrics | does not meet: sf & rf | | Waiting 'target_wait_time' before search factor fixup |
| _metrics~35~64AE7236-EE5E-4EEE-AEBF-203F149FCB61 | _metrics | does not meet: sf & rf | | Waiting 'target_wait_time' before search factor fixup |

Tasks to Meet Replication Factor (6)

| Bucket | Index | Trigger Condition | Trigger Time | Current State |
| --- | --- | --- | --- | --- |
| _metrics~34~4C2AF0DE-E42F-489B-92FB-2CA3FC68AC85 | _metrics | does not meet: sf & rf | | Waiting 'target_wait_time' before replicating bucket |
| _metrics~34~64AE7236-EE5E-4EEE-AEBF-203F149FCB61 | _metrics | does not meet: primality & sf & rf | | Waiting 'target_wait_time' before replicating bucket |
| _metrics~35~4C2AF0DE-E42F-489B-92FB-2CA3FC68AC85 | _metrics | does not meet: sf & rf | | Waiting 'target_wait_time' before replicating bucket |
| _metrics~35~64AE7236-EE5E-4EEE-AEBF-203F149FCB61 | _metrics | does not meet: sf & rf | | Waiting 'target_wait_time' before replicating bucket |
| _metrics~36~4C2AF0DE-E42F-489B-92FB-2CA3FC68AC85 | _metrics | non-streaming failure - src=64AE7236-EE5E-4EEE-AEBF-203F149FCB61 tgt=4C2AF0DE-E42F-489B-92FB-2CA3FC68AC85 failing=tgt | | |
| _metrics~37~4C2AF0DE-E42F-489B-92FB-2CA3FC68AC85 | _metrics | non-streaming failure - src=9B5D3504-81B2-4DCC-BF4D-F7ED811A3571 tgt=4C2AF0DE-E42F-489B-92FB-2CA3FC68AC85 failing=tgt | | |

... etc.
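The bucket IDs in the fixup lists above have the form index~local ID~GUID, so tallying them by index and GUID is a quick way to see whether the stuck tasks concentrate on one peer. Below is a minimal Python sketch of that tally, assuming the IDs were copied out of the UI by hand. The function name `tally_buckets` is hypothetical and not part of any Splunk API.

```python
from collections import Counter


def tally_buckets(bucket_ids):
    """Count bucket IDs per (index, GUID) pair.

    Assumes each ID looks like index~local_id~guid, as in the lists above.
    """
    counts = Counter()
    for bid in bucket_ids:
        index, _local_id, guid = bid.split("~")
        counts[(index, guid)] += 1
    return counts


# The distinct bucket IDs from the replication-factor list in this post.
pending = [
    "_metrics~34~4C2AF0DE-E42F-489B-92FB-2CA3FC68AC85",
    "_metrics~34~64AE7236-EE5E-4EEE-AEBF-203F149FCB61",
    "_metrics~35~4C2AF0DE-E42F-489B-92FB-2CA3FC68AC85",
    "_metrics~35~64AE7236-EE5E-4EEE-AEBF-203F149FCB61",
    "_metrics~36~4C2AF0DE-E42F-489B-92FB-2CA3FC68AC85",
    "_metrics~37~4C2AF0DE-E42F-489B-92FB-2CA3FC68AC85",
]

counts = tally_buckets(pending)
for (index, guid), n in sorted(counts.items()):
    print(index, guid, n)
```

Here every pending bucket belongs to the _metrics index, and most carry the GUID ending in 2CA3FC68AC85, which is also the `tgt` of the two non-streaming failures.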

Some of the errors on the indexer(s):

04-03-2020 07:55:10.100 -0700 ERROR TcpInputProc - event=replicationData status=failed err="Close failed"

host = bvl-mit-splkin2 source = /opt/splunk/var/log/splunk/splunkd.log sourcetype = splunkd

04-03-2020 07:55:10.100 -0700 WARN BucketReplicator - Failed to replicate warm bucket bid=_metrics~37~4C2AF0DE-E42F-489B-92FB-2CA3FC68AC85 to guid=4C2AF0DE-E42F-489B-92FB-2CA3FC68AC85 host=10.101.128.89 s2sport=9887. Connection closed.

host = bvl-mit-splkin1 source = /opt/splunk/var/log/splunk/splunkd.log sourcetype = splunkd

04-03-2020 07:55:10.097 -0700 WARN S2SFileReceiver - event=processFileSlice bid=_metrics~37~4C2AF0DE-E42F-489B-92FB-2CA3FC68AC85 msg='aborting on local error'

host = bvl-mit-splkin2 source = /opt/splunk/var/log/splunk/splunkd.log sourcetype = splunkd

04-03-2020 07:55:10.097 -0700 ERROR S2SFileReceiver - event=onFileClosed replicationType=eJournalReplication bid=_metrics~37~4C2AF0DE-E42F-489B-92FB-2CA3FC68AC85 state=eComplete src=64AE7236-EE5E-4EEE-AEBF-203F149FCB61 bucketType=warm status=failed err="bucket is already registered, registered not as a streaming hot target (SPL-90606)"

host = bvl-mit-splkin2 source = /opt/splunk/var/log/splunk/splunkd.log sourcetype = splunkd

04-03-2020 07:55:10.089 -0700 WARN BucketReplicator - Failed to replicate warm bucket bid=_metrics~36~4C2AF0DE-E42F-489B-92FB-2CA3FC68AC85 to guid=4C2AF0DE-E42F-489B-92FB-2CA3FC68AC85 host=10.101.128.89 s2sport=9887. Connection closed.

host = bvl-mit-splkin3 source = /opt/splunk/var/log/splunk/splunkd.log sourcetype = splunkd
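With several peers logging about the same buckets, it can help to group the splunkd.log lines by their `bid=` field so the sender-side and receiver-side messages for one bucket sit together. The following is a rough Python sketch that assumes the line layout seen in the excerpts above (timestamp, level, component, then the message). The regular expressions and the function name `group_by_bucket` are illustrative assumptions, not a Splunk-provided parser.

```python
import re
from collections import defaultdict

# Matches lines shaped like the splunkd.log excerpts in this post.
LINE_RE = re.compile(
    r"^(?P<ts>\d{2}-\d{2}-\d{4} \d{2}:\d{2}:\d{2}\.\d{3} [+-]\d{4}) "
    r"(?P<level>[A-Z]+) (?P<component>\S+) - (?P<message>.*)$"
)
BID_RE = re.compile(r"\bbid=(\S+)")


def group_by_bucket(lines):
    """Map bucket ID (or None when a line has no bid=) to (level, component) pairs."""
    grouped = defaultdict(list)
    for line in lines:
        match = LINE_RE.match(line.strip())
        if not match:
            continue  # skip anything that is not a splunkd.log event line
        bid = BID_RE.search(match["message"])
        key = bid.group(1) if bid else None
        grouped[key].append((match["level"], match["component"]))
    return dict(grouped)


sample = [
    '04-03-2020 07:55:10.100 -0700 ERROR TcpInputProc - event=replicationData status=failed err="Close failed"',
    "04-03-2020 07:55:10.100 -0700 WARN BucketReplicator - Failed to replicate warm bucket "
    "bid=_metrics~37~4C2AF0DE-E42F-489B-92FB-2CA3FC68AC85 to guid=4C2AF0DE-E42F-489B-92FB-2CA3FC68AC85 "
    "host=10.101.128.89 s2sport=9887. Connection closed.",
    "04-03-2020 07:55:10.097 -0700 WARN S2SFileReceiver - event=processFileSlice "
    "bid=_metrics~37~4C2AF0DE-E42F-489B-92FB-2CA3FC68AC85 msg='aborting on local error'",
]

grouped = group_by_bucket(sample)
for bucket, events in grouped.items():
    print(bucket, events)
```

Lines without a `bid=` field, such as the TcpInputProc error, end up under the `None` key and would need to be correlated by timestamp instead.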

Additional notes:

Output of /opt/splunk/bin/splunk list peer-info on the peer:

slave

base_generation_id:651

is_registered:1

last_heartbeat_attempt:0

maintenance_mode:0

registered_summary_state:3

restart_state:NoRestart

site:default

status:Up

/opt/splunk/etc/master-apps/_cluster/local/indexes.conf on CM (successfully replicated to peers via /opt/splunk/bin/splunk apply cluster-bundle):

[default]

repFactor = auto

[_introspection]

repFactor = 0

[windows]

frozenTimePeriodInSecs = 31536000

coldToFrozenDir = $SPLUNK_DB/$_index_name/frozendb

[wineventlog]

frozenTimePeriodInSecs = 31536000

coldToFrozenDir = $SPLUNK_DB/$_index_name/frozendb
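To read off which stanzas in this one file end up with which repFactor, here is a small Python sketch that treats `[default]` as the fallback for the other stanzas. It uses the standard-library `configparser` as a stand-in and looks only at the file shown above. It is an assumption that this approximates Splunk's behaviour: Splunk merges indexes.conf from several locations, so on a real peer the merged view (for example from `splunk btool indexes list --debug`) is the one to trust. The function name `rep_factors` is hypothetical.

```python
import configparser

# The indexes.conf content quoted in this post.
INDEXES_CONF = """\
[default]
repFactor = auto

[_introspection]
repFactor = 0

[windows]
frozenTimePeriodInSecs = 31536000
coldToFrozenDir = $SPLUNK_DB/$_index_name/frozendb

[wineventlog]
frozenTimePeriodInSecs = 31536000
coldToFrozenDir = $SPLUNK_DB/$_index_name/frozendb
"""


def rep_factors(conf_text):
    """Return {stanza: repFactor}, letting [default] fill in missing values."""
    parser = configparser.ConfigParser(
        default_section="default",  # make [default] act as the fallback stanza
        interpolation=None,         # leave $SPLUNK_DB and friends untouched
    )
    parser.optionxform = str        # keep key case, e.g. repFactor
    parser.read_string(conf_text)
    return {name: parser.get(name, "repFactor") for name in parser.sections()}


factors = rep_factors(INDEXES_CONF)
print(factors)
```

Note that _metrics has no stanza in this file, so nothing here says how it is replicated; that depends on the other configuration layers.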

Details:

- Splunk Enterprise 8.0.2

- mostly default settings

Thank you!

Restarting all indexers (not just the one with errors) was what resolved the issue for me.

The root cause is still a mystery. Suspecting a bug or a misconfiguration:

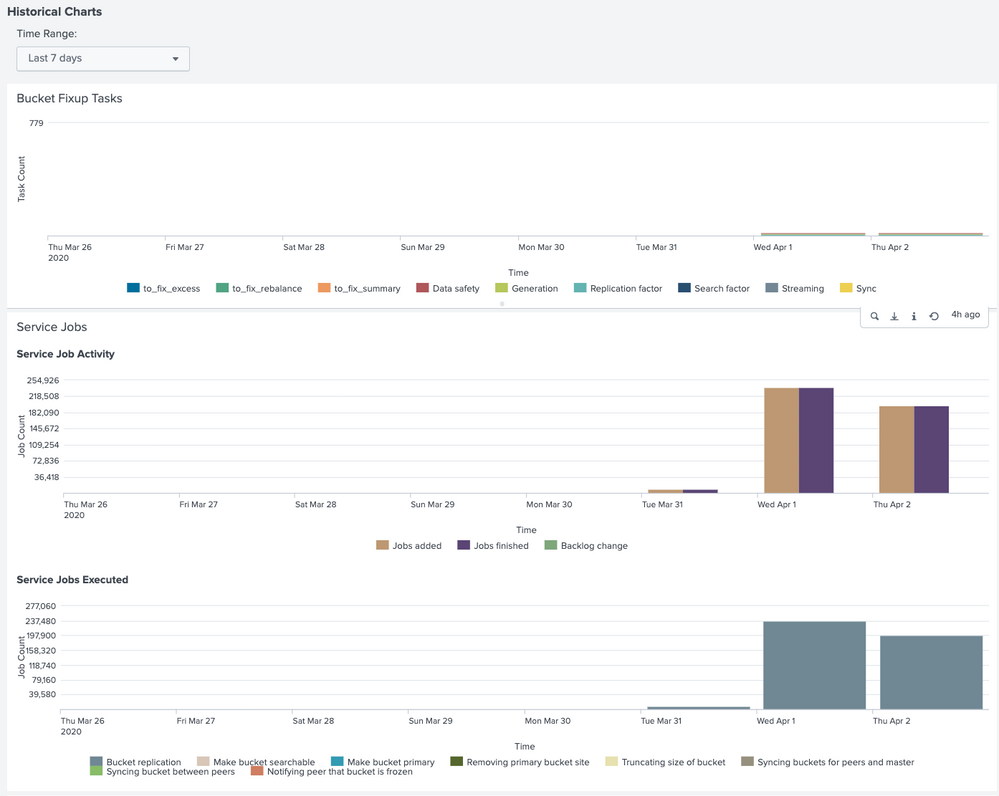

- that degraded _metrics index you see in the screenshot above is not supposed to exist: we aren't collecting any metrics to my knowledge, and searching for them via e.g. | mcatalog values(_dims) WHERE index=* produces no results. The _metrics index did seem to exist prior to this issue, but does not exist now in our environment; it's unclear why it was created originally.

I restarted the indexers and the CM multiple times. That seems to clear up a few buckets, but then the cluster sits there with tasks in the pending state.

Hi @jsbapple

I’m a Community Moderator in the Splunk Community.

You are replying to a question that was posted a couple of years ago, so it might not get the attention you need for your question to be answered. We recommend that you post a new question so that your issue can get the visibility it deserves. To increase your chances of getting help from the community, follow the guidelines in the Splunk Answers User Manual when creating your post.

Thank you!

We are continuing to observe this issue in version 8.2.5. Did Splunk ever fix this in the later versions? We started observing this issue after moving to SmartStore and had not observed this prior. Restarting the Cluster Manager fixes the issue but the issue happens again at a later time.

We also do not collect metrics in our Splunk environment, yet I can see a _metrics index on the indexer cluster for some reason.

Hi @PranaySompalli! Thank you for your follow-up question. Can you please post your question as a new thread to help gain more visibility and up-to-date answers? Thanks!

-Kara, Splunk Community Manager

Same issue here: we upgraded from 7.3.x to 8.0.x, which added the _metrics index. Some indexers were replicating it even though repFactor = 0, while other indexers were not, which upset the monitoring console.

Rolling restart resolved this issue for us, thank you!

Thanks. The rolling restart fixed my issue as well.

Thanks, this helped me. I did a rolling restart through the cluster master GUI.

Was it a rolling restart of all indexers, a reboot, or just a service restart that did the trick for you? We're in the same situation on Splunk version 7.3 after an upgrade.

I put the master in maintenance mode and did a rolling reboot of all indexers, checking bucket status between reboots to ensure integrity. That said, I can't remember now if I also tried manual rolling service restarts. (Automatic rolling restart from the master wasn't available due to that degraded index.)
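For anyone who wants that sequence written down before touching the cluster, here is a dry-run sketch in Python that only prints an ordered plan and executes nothing. It assumes a service restart (`splunk restart`) on each peer rather than the host reboot described above, and the thread does not confirm which of the two is actually required. The manager hostname `cluster-manager` and the function name are hypothetical; the peer hostnames and the /opt/splunk path are taken from the original post.

```python
def manual_rolling_restart_plan(manager, peers, splunk="/opt/splunk/bin/splunk"):
    """Return ordered (host, command) steps for a manual rolling restart.

    Nothing is executed; the plan is meant to be reviewed and run by hand.
    """
    plan = [(manager, f"{splunk} enable maintenance-mode")]
    for peer in peers:
        # Restart one peer at a time, then check bucket status on the manager.
        plan.append((peer, f"{splunk} restart"))
        plan.append((manager, f"{splunk} show cluster-status"))
    plan.append((manager, f"{splunk} disable maintenance-mode"))
    return plan


plan = manual_rolling_restart_plan(
    "cluster-manager",  # hypothetical hostname for the cluster master
    ["bvl-mit-splkin1", "bvl-mit-splkin2", "bvl-mit-splkin3"],
)
for host, command in plan:
    print(f"{host}: {command}")
```

The status check after each restart corresponds to the "checking bucket status between reboots" step; how long to wait before moving to the next peer is a judgment call the sketch leaves to the operator.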

Oh got you. I solved it via rolling restart of the Indexers and then waiting. This is a strange bug, though.

Restart that Indexer and give it time. After a while, try restarting the Cluster Master. Both these actions should be safe at any time and not result in search or indexing outage, so long as only 1 Indexer is wonky.

didn't help