Understanding Splunk Index Retention


Hi, and sorry if this question was already answered in another thread.

Thanks in advance for the help.

I had an index whose current size was over 10 GB. To delete the data, I tried reducing its max size and searchable retention.

My question is: what is going to happen to the data? Will it be deleted from the servers or archived? I am confused because the event count is stuck at the same value it had before I changed the retention config.

Previous index config:

- Current Size: 10 GB
- Max Size: 0
- Event Count: 10M
- Earliest Event: 5 months
- Latest Event: 1 day
- Searchable Retention: 365 days
- Archive Retention: blank
- Self Storage: blank
- Status: enabled

Then, I changed the parameters "Max Size" to "200 MB" and "Searchable Retention" to "1 Day".

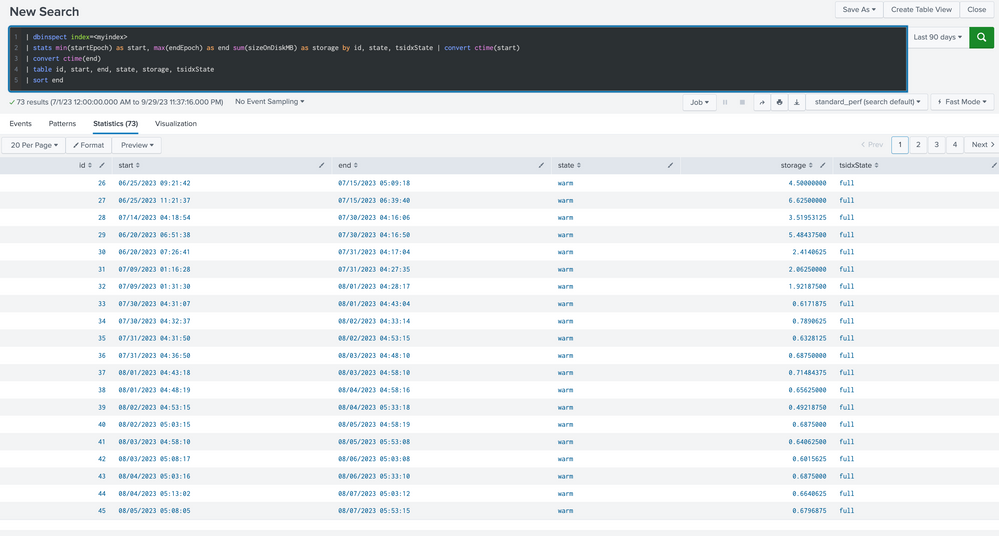

Also, when I run the following query, the warm storage size stays roughly the same (fluctuating by a few MB):

    | dbinspect index=<index-name>
    | stats sum(sizeOnDiskMB) by state
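For readers less familiar with `dbinspect`, the following Python sketch mimics what `stats sum(sizeOnDiskMB) by state` does: it groups bucket rows by `state` and sums their on-disk size. The rows are hypothetical sample values, not real `dbinspect` output. Because the totals are built from whole buckets, a state's total only changes when buckets change state or are frozen, which is consistent with the warm total staying flat while nothing has been frozen yet.

```python
from collections import defaultdict

# Hypothetical rows shaped like dbinspect output; the numbers are made up.
buckets = [
    {"state": "hot", "sizeOnDiskMB": 120.5},
    {"state": "warm", "sizeOnDiskMB": 4100.0},
    {"state": "warm", "sizeOnDiskMB": 3900.0},
    {"state": "cold", "sizeOnDiskMB": 1800.0},
]


def size_by_state(rows):
    """Sum sizeOnDiskMB per state, like `| stats sum(sizeOnDiskMB) by state`."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["state"]] += row["sizeOnDiskMB"]
    return dict(totals)


print(size_by_state(buckets))  # {'hot': 120.5, 'warm': 8000.0, 'cold': 1800.0}
```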

Any help greatly appreciated.


Hi @felipesodre ,

when a bucket completely exceeds the retention time (also the earliest event in the bucket), or when the index exceeds its maximum size (the oldest buckets go first), the bucket can be discarded or moved offline to a different folder.

As described at https://docs.splunk.com/Documentation/Splunk/9.1.1/Admin/Indexesconf, this depends on the coldToFrozenScript parameter, which specifies a script to run when data is about to leave the Splunk index system; in other words, it determines what happens to the bucket after the retention period.

If you use a script to move your cold buckets offline, you can reuse them later by copying them into the thawed path.

Otherwise, the buckets are discarded and each entire bucket is deleted.
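The archive-or-delete decision can be sketched as a small Python function. It models the on-premises indexes.conf behavior as documented: if `coldToFrozenDir` is set, the bucket is archived there (it takes precedence over `coldToFrozenScript` when both are set); if only `coldToFrozenScript` is set, that script runs; if neither is set, the frozen bucket is deleted. Splunk Cloud exposes archiving through its own settings instead, so treating blank "Archive Retention" and "Self Storage" fields as the "neither is set" case is an assumption here. The function name is hypothetical.

```python
from typing import Optional


def frozen_action(cold_to_frozen_dir: Optional[str],
                  cold_to_frozen_script: Optional[str]) -> str:
    """Model what happens to a bucket that rolls to frozen (indexes.conf semantics)."""
    if cold_to_frozen_dir:
        # coldToFrozenDir wins when both are set: the bucket is archived to that path.
        return f"archive to {cold_to_frozen_dir}"
    if cold_to_frozen_script:
        # The script decides where (or whether) the bucket data is kept.
        return f"run {cold_to_frozen_script}"
    # Neither is set: the frozen bucket is deleted.
    return "delete"


# Assumption: blank "Archive Retention" / "Self Storage" behaves like "neither is set".
print(frozen_action(None, None))  # delete
```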

You can find more details in this document https://docs.splunk.com/Documentation/Splunk/9.1.1/Indexer/Setaretirementandarchivingpolicy
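The size-based case works at the index level rather than per bucket: when an index grows past its maximum total size (`maxTotalDataSizeMB` in indexes.conf), Splunk freezes the oldest buckets first. The Python sketch below models that loop. Ordering buckets by the time of their newest event is a simplifying assumption standing in for "oldest bucket", the sketch ignores bucket states such as hot versus warm, and the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Bucket:
    bucket_id: int
    end_epoch: int   # time of the newest event in the bucket
    size_mb: float


def freeze_for_size(buckets: List[Bucket], max_total_mb: float) -> List[Bucket]:
    """Return the buckets frozen to bring the index total under max_total_mb.

    Simplifying assumption: "oldest" means the smallest end_epoch; bucket
    states (hot/warm/cold) are ignored.
    """
    remaining = sorted(buckets, key=lambda b: b.end_epoch)  # oldest first
    total = sum(b.size_mb for b in remaining)
    frozen = []
    while remaining and total > max_total_mb:
        oldest = remaining.pop(0)
        frozen.append(oldest)
        total -= oldest.size_mb
    return frozen


index = [Bucket(1, 100, 150.0), Bucket(2, 200, 100.0), Bucket(3, 300, 80.0)]
print([b.bucket_id for b in freeze_for_size(index, 200.0)])  # [1]
```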

Ciao.

Giuseppe


There is so much "depends" here that we could open a nursing home. Are you using SmartStore? Are you using indexer clustering? What are your SF/RF settings? Are you using volume settings for your indexers? Are you on Splunk Cloud? What is the "btool" output for your indexes.conf from one of your indexers?


I am really confused about why there are still buckets whose endEpochTime is older than the "Searchable Retention" period.

Thanks again


Probably because that bucket also contains at least one younger event.


So, is it safe to assume that, if no new data is ingested into this index, the data should be gone by tomorrow (one day after I changed the config)?

Thanks again


Hi, thank you for replying.

Settings:

- SmartStore: No
- Indexer clustering: No
- SF/RF settings: SF=2, RF=3
- Volume settings: default
- Splunk Cloud: Yes

Unfortunately, I am unable to run btool. However, I can run the following REST API query to gather specific parameters for this index:

    | rest /services/data/indexes
    | join type=outer title [
        | rest splunk_server=n00bserver /services/data/indexes-extended
      ]
    | search title=*
    | eval retentionInDays=frozenTimePeriodInSecs/86400
    | table *
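The `eval` in this query is a plain unit conversion: `frozenTimePeriodInSecs` is the time-based retention in seconds, and dividing by 86400 (seconds per day) gives days. A minimal Python version of the conversion and its inverse, handy for sanity-checking the numbers the query returns; the function names are hypothetical.

```python
SECONDS_PER_DAY = 86400


def retention_days(frozen_time_period_in_secs: int) -> float:
    """Same arithmetic as `eval retentionInDays=frozenTimePeriodInSecs/86400`."""
    return frozen_time_period_in_secs / SECONDS_PER_DAY


def frozen_secs(days: float) -> int:
    """Inverse conversion: a retention in days expressed as frozenTimePeriodInSecs."""
    return int(days * SECONDS_PER_DAY)


print(retention_days(31536000))  # 365.0
print(frozen_secs(1))            # 86400
```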

What should be the parameters to look for?

Thanks again.


I should also have mentioned that I have stopped ingestion into this index for now, until I figure out how to reduce the storage and clean up the data.


Hi @felipesodre ,

good for you, see you next time!

Ciao and happy splunking

Giuseppe

P.S.: Karma Points are appreciated by all the contributors 😉


@gcusello Small correction: a bucket becomes eligible to roll to frozen when the _latest_ event in it is older than the retention limit, not the earliest.
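The corrected rule can be written down as a small Python model. A bucket becomes eligible for freezing only once its newest event (`endEpoch` in `dbinspect` output) is older than `now - frozenTimePeriodInSecs`, so a single recent event keeps the whole bucket searchable. Whether the comparison is strict is an assumption, the actual freeze happens on Splunk's next periodic retention check rather than at the exact second, and the class and function names are illustrative, not Splunk APIs.

```python
from dataclasses import dataclass

DAY = 86400


@dataclass
class Bucket:
    start_epoch: int  # earliest event in the bucket (dbinspect startEpoch)
    end_epoch: int    # latest event in the bucket (dbinspect endEpoch)


def eligible_for_freeze(bucket: Bucket, now: int, frozen_time_period_in_secs: int) -> bool:
    """True once the bucket's *latest* event is older than the retention window.

    The strict comparison is an assumption; start_epoch plays no part.
    """
    return bucket.end_epoch < now - frozen_time_period_in_secs


def eligible_after(bucket: Bucket, frozen_time_period_in_secs: int) -> int:
    """Epoch after which the bucket becomes eligible; the freeze itself
    happens on a later periodic retention check."""
    return bucket.end_epoch + frozen_time_period_in_secs


now = 1_700_000_000
mixed = Bucket(start_epoch=now - 150 * DAY, end_epoch=now - DAY // 2)  # ~5 months to 12 hours old
stale = Bucket(start_epoch=now - 150 * DAY, end_epoch=now - 2 * DAY)
print(eligible_for_freeze(mixed, now, DAY))  # False
print(eligible_for_freeze(stale, now, DAY))  # True
print(eligible_after(mixed, DAY) - now)      # 43200
```

With a 1-day retention and ingestion stopped, each bucket becomes eligible one day after its own newest event, not one day after the configuration change.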


Thank you for clarifying that 🙂