
Search Head is generating crash log every few minutes and Searches are slow

My search head is generating crash*.log files in $SPLUNK_HOME/var/log/splunk every few minutes. Splunkd itself does not crash, but searches are slow and scheduled tasks sometimes fail.

Reviewing search.log for some of the failed scheduled jobs shows errors like these:

```
07-16-2014 05:46:35.167 INFO BundlesSetup - Setup stats for /opt/getty/splunk/etc: wallclock_elapsed_msec=20, cpu_time_used=0.017997, shared_services_generation=2, shared_services_population=1
07-16-2014 05:46:35.170 INFO UserManagerPro - Load authentication: forcing roles="admin, can_delete"
07-16-2014 05:46:35.171 WARN Thread - Main Thread: about to throw a ThreadException: pthread_create: Resource temporarily unavailable; 3 threads active
```

Any suggestion?
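To see how often jobs hit this, a minimal Python sketch can scan a search.log for the pthread_create warning and pull out the "threads active" count each occurrence reports. The regular expression is an assumption based only on the single WARN line quoted above; other Splunk versions may word the message differently, and `find_thread_failures` is an illustrative name.

```python
import re
from typing import List

# Assumed pattern, copied from the WARN line above:
# "... ThreadException: pthread_create: Resource temporarily unavailable; 3 threads active"
PTHREAD_FAIL = re.compile(
    r"ThreadException: pthread_create: Resource temporarily unavailable; (\d+) threads active"
)


def find_thread_failures(log_text: str) -> List[int]:
    """Return the 'threads active' count from every pthread_create failure line."""
    return [int(m.group(1)) for m in PTHREAD_FAIL.finditer(log_text)]


if __name__ == "__main__":
    sample = (
        "07-16-2014 05:46:35.170 INFO UserManagerPro - Load authentication: "
        'forcing roles="admin, can_delete"\n'
        "07-16-2014 05:46:35.171 WARN Thread - Main Thread: about to throw a "
        "ThreadException: pthread_create: Resource temporarily unavailable; "
        "3 threads active\n"
    )
    print(find_thread_failures(sample))  # [3]
```

A low "threads active" count in the failing process, as here, points at a per-user limit rather than at that one process spawning too many threads.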


```
core file size (blocks, -c) 0
data seg size (kbytes, -d) unlimited
scheduling priority (-e) 0
file size (blocks, -f) unlimited
pending signals (-i) 1012127
max locked memory (kbytes, -l) 64
max memory size (kbytes, -m) unlimited
open files (-n) 32768
pipe size (512 bytes, -p) 8
POSIX message queues (bytes, -q) 819200
real-time priority (-r) 0
stack size (kbytes, -s) 10240
cpu time (seconds, -t) unlimited
max user processes (-u) 1024
virtual memory (kbytes, -v) unlimited
file locks (-x) unlimited
```

I recommend increasing "max user processes" (the `-u` limit, currently 1024) to a higher value such as 10240, then restarting Splunk and checking whether that helps.
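To confirm which limit is actually in effect after the restart, here is a minimal Python sketch, assuming a Linux host. `resource.getrlimit(resource.RLIMIT_NPROC)` reports the limit of the process that runs it (your current session), while the running splunkd's own limit can be read from the "Max processes" row of `/proc/<pid>/limits`; the two can differ, for example when splunkd is started at boot rather than from a login shell. The helper names are illustrative, and `<splunkd-pid>` is a placeholder for the real PID.

```python
import resource
from typing import Optional, Tuple


def own_nproc_limit() -> Tuple[int, int]:
    """(soft, hard) max-user-processes limit of *this* Python process."""
    return resource.getrlimit(resource.RLIMIT_NPROC)


def parse_max_processes(limits_text: str) -> Optional[Tuple[str, str]]:
    """Return (soft, hard) from the 'Max processes' row of /proc/<pid>/limits."""
    for line in limits_text.splitlines():
        if line.startswith("Max processes"):
            parts = line.split()
            # e.g. ['Max', 'processes', '1024', '1024', 'processes']
            return parts[2], parts[3]
    return None


def fmt(value: int) -> str:
    """Render a getrlimit value the way ulimit does."""
    return "unlimited" if value == resource.RLIM_INFINITY else str(value)


if __name__ == "__main__":
    soft, hard = own_nproc_limit()
    print(f"this session: soft={fmt(soft)} hard={fmt(hard)}")
    # Placeholder path: substitute the real splunkd PID to check the daemon.
    # with open("/proc/<splunkd-pid>/limits") as f:
    #     print(parse_max_processes(f.read()))
```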

Note that a typical main splunkd process runs around 60-80 threads, and the process runner uses 2.

Each search uses 2 basic threads (master and search thread), possibly 12 more depending on the peer count, plus 2 for the process runner, so up to 16 threads per search.

Thus, taking the upper estimate of 80 + 2 = 82 baseline threads, you would expect to hit the 1024 limit at around:

1024 = 82 + 16n, so 942 = 16n and n ≈ 58

or roughly 50 concurrent searches.

However, other processes may also be running as this user, such as shell sessions, Python scripts, or for example a Java input that uses 200 threads, and their threads count against the same limit.
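The back-of-the-envelope estimate above can be written as a small Python function. The default thread counts are the rough figures from this answer (80 threads for splunkd, 2 for the process runner, 16 per search), not measured values, and `other_threads` is a hypothetical parameter for headroom taken by other processes running as the same user, such as the 200-thread Java input mentioned above.

```python
def max_concurrent_searches(nproc_limit: int,
                            splunkd_threads: int = 80,
                            process_runner_threads: int = 2,
                            threads_per_search: int = 16,
                            other_threads: int = 0) -> int:
    """Estimate how many concurrent searches fit under the nproc limit.

    Solves nproc_limit = baseline + threads_per_search * n for n, rounded down.
    Defaults are the rough figures from this thread: 60-80 splunkd threads
    (upper bound used), 2 for the process runner, and 2 + 12 + 2 = 16 per
    search. other_threads reserves room for other processes run by the same user.
    """
    baseline = splunkd_threads + process_runner_threads + other_threads
    return max(0, (nproc_limit - baseline) // threads_per_search)


if __name__ == "__main__":
    print(max_concurrent_searches(1024))                     # 58
    print(max_concurrent_searches(1024, other_threads=200))  # 46
    print(max_concurrent_searches(10240))                    # 634
```

With the current 1024 limit this gives 58 searches; reserving 200 threads for other processes drops that to 46, while raising the limit to 10240 allows about 634.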

On Linux, I would suggest running `ps -u splunk uxH` to see all threads for all programs running as the `splunk` user.
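As a cross-check on the `ps` output, a minimal Python sketch for Linux can total the `Threads:` field from `/proc/<pid>/status` for every process owned by a given user. It matches on the real UID, which is what Linux counts against the max-user-processes limit. The function names are illustrative, and the `splunk` account name is an assumption about your install (the sketch falls back to the current user if it does not exist).

```python
import os
from typing import Optional


def threads_from_status(status_text: str) -> Optional[int]:
    """Return the 'Threads:' value from a /proc/<pid>/status file body."""
    for line in status_text.splitlines():
        if line.startswith("Threads:"):
            return int(line.split()[1])
    return None


def real_uid_from_status(status_text: str) -> Optional[int]:
    """Return the real UID (first field of the 'Uid:' line)."""
    for line in status_text.splitlines():
        if line.startswith("Uid:"):
            return int(line.split()[1])
    return None


def count_user_threads(uid: int, proc_root: str = "/proc") -> int:
    """Sum thread counts of every process whose real UID is `uid` (Linux only)."""
    total = 0
    for entry in os.listdir(proc_root):
        if not entry.isdigit():  # skip non-PID entries such as /proc/meminfo
            continue
        try:
            with open(os.path.join(proc_root, entry, "status")) as f:
                text = f.read()
        except OSError:  # process exited or is not readable
            continue
        if real_uid_from_status(text) == uid:
            total += threads_from_status(text) or 0
    return total


if __name__ == "__main__":
    import pwd
    try:
        uid = pwd.getpwnam("splunk").pw_uid  # assumes a local 'splunk' account
    except KeyError:
        uid = os.getuid()  # fall back to the current user
    print(count_user_threads(uid))
```

Comparing this total with the "max user processes" value shows how close the user is to the limit.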


Raj,

This is related to local search head process, right ?

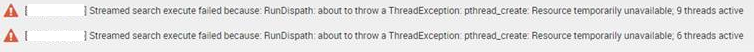

How about when I get the following error showing up on the search head in SHC and searching accross clustered indexers :

Do I really need to change ulimits on the indexers ?

Note:

Lately I increased queue sizes on all indexers (via the CM bundle) after adding a batch of new forwarders and seeing some messages in the heavy forwarders' metrics.log.

The indexers have 128 GB of memory and are using less than 32 GB.