- Find Answers

- :

- Splunk Administration

- :

- Deployment Architecture

- :

- Right number and size of hot/warm/cold buckets

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

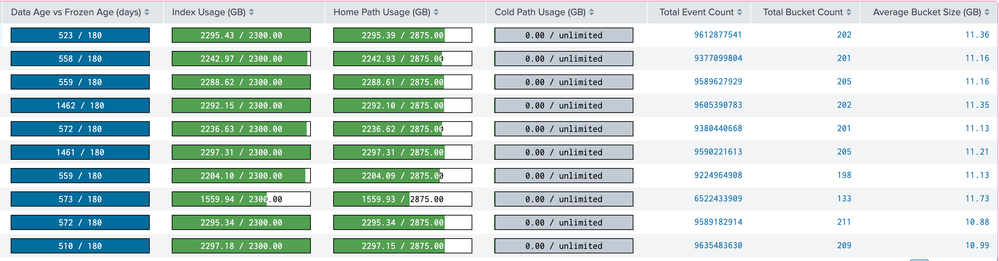

Right number and size of hot/warm/cold buckets

Here is the index stanza:

```
[new_dex]
homePath = volume:hotwarm/new_dex/db
coldPath = volume:cold/new_dex/colddb
thawedPath = $SPLUNK_DB/new_dex/thaweddb
maxTotalDataSizeMB = 2355200
homePath.maxDataSizeMB = 2944000
# I know this is wrong, but I need help setting it
maxWarmDBCount = 4294967295
frozenTimePeriodInSecs = 15552000
maxDataSize = auto_high_volume
repFactor=auto
```

Also, should any other key = value pairs be added?

There are 18 indexers deployed, each with 16 TB of storage.

The frozenTimePeriodInSecs period has passed, but the data is not being moved/deleted.

What configuration/details am I missing here?

I need the data gone!
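A quick way to sanity-check the numbers in this stanza is to convert them into days and TiB. The Python sketch below does only that arithmetic. It assumes binary units (1 TiB = 1,048,576 MB) and that the size settings apply per indexer, per index; the function name is hypothetical.

```python
# Values copied from the [new_dex] stanza in the question.
MAX_TOTAL_DATA_SIZE_MB = 2_355_200      # maxTotalDataSizeMB
HOME_PATH_MAX_DATA_SIZE_MB = 2_944_000  # homePath.maxDataSizeMB
FROZEN_TIME_PERIOD_SECS = 15_552_000    # frozenTimePeriodInSecs
INDEXER_COUNT = 18                      # from the question

SECONDS_PER_DAY = 86_400
MB_PER_TIB = 1024 * 1024  # assumption: binary units (1 TiB = 1024 * 1024 MB)


def summarize() -> dict:
    """Return a few derived figures for the stanza (illustrative only)."""
    return {
        "retention_days": FROZEN_TIME_PERIOD_SECS / SECONDS_PER_DAY,
        # Assumption: the size limit applies separately on each indexer.
        "max_total_tib_per_indexer": MAX_TOTAL_DATA_SIZE_MB / MB_PER_TIB,
        "max_total_tib_all_indexers": MAX_TOTAL_DATA_SIZE_MB * INDEXER_COUNT / MB_PER_TIB,
        # If the hot/warm (homePath) limit is larger than the whole-index
        # limit, the homePath limit can never be reached first.
        "home_limit_exceeds_total": HOME_PATH_MAX_DATA_SIZE_MB > MAX_TOTAL_DATA_SIZE_MB,
    }


if __name__ == "__main__":
    for key, value in summarize().items():
        print(f"{key}: {value}")
```

With these values it reports a 180-day retention, a cap of about 2.25 TiB per indexer for this index (about 40.4 TiB summed over the 18 indexers), and flags that homePath.maxDataSizeMB is larger than maxTotalDataSizeMB.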


As others have already pointed out, there is more to the bucket lifecycle than meets the eye. That's why you might want to involve Splunk Professional Services (PS) or your local friendly Splunk Partner for assistance.

The first and most obvious thing is that data is not rolled to the "next" lifecycle stage event by event but as whole buckets, and that has consequences. While the hot->warm and warm->cold rolls are based on either _bucket_ size or the number of buckets, rolling to frozen is based on the _latest_ event in the bucket (unless, of course, you hit the size limit). So a bucket will not be rolled until all events in it are past the retention period set for the index.
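To make the "latest event" rule concrete, here is a minimal Python model of it. It is a simplification, not splunkd's code: it ignores size-based freezing and reduces each bucket to the times of its oldest and newest events. The `Bucket` class and `is_freezable` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Bucket:
    """Hypothetical, simplified bucket: only its event time range (epoch seconds)."""
    name: str
    earliest: int  # timestamp of the oldest event in the bucket
    latest: int    # timestamp of the newest event in the bucket


def is_freezable(bucket: Bucket, now: int, frozen_time_period_secs: int) -> bool:
    """Time-based rule only: a bucket becomes eligible for freezing when
    its NEWEST event is older than the retention period."""
    return bucket.latest < now - frozen_time_period_secs


if __name__ == "__main__":
    DAY = 86_400
    now = 400 * DAY
    retention = 180 * DAY  # frozenTimePeriodInSecs = 15552000
    buckets = [
        Bucket("daily", earliest=200 * DAY, latest=201 * DAY),
        # Oldest event is 300 days old, but the newest is only 100 days old.
        Bucket("wide_span", earliest=100 * DAY, latest=300 * DAY),
    ]
    for b in buckets:
        oldest_age_days = (now - b.earliest) // DAY
        print(b.name, "freezable:", is_freezable(b, now, retention),
              "oldest event age (days):", oldest_age_days)
```

The "wide_span" bucket keeps a 300-day-old event searchable under a 180-day retention, because its newest event is still inside the retention period.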

That's the first thing that makes managing retention unintuitive (especially if you have strict compliance requirements not only about how long you must retain your events but also about when you must delete them).

Another, more subtle thing is that Splunk creates so-called "quarantine buckets", into which it inserts events that are far "out of order": way too old, or supposedly coming from the future. The idea is that all those events go into a separate bucket so they don't impact the performance of "normal" searches.

So it's not unusual, especially if you've had data quality issues, to have buckets covering quite large time spans. You can list buckets with their metadata using the dbinspect command.
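As a rough illustration of how you might spot such wide buckets, the Python sketch below reads rows shaped like dbinspect results exported to CSV and lists buckets whose time span exceeds a threshold, widest first. It assumes the `bucketId`, `startEpoch` and `endEpoch` fields (check the names in your own dbinspect output); the sample rows are made up and `wide_buckets` is a hypothetical helper.

```python
import csv
import io

# Made-up sample of dbinspect-style output exported as CSV (illustrative values).
SAMPLE_CSV = """bucketId,state,startEpoch,endEpoch
new_dex~10~A,warm,1700000000,1700086000
new_dex~11~A,warm,1690000000,1700090000
new_dex~12~A,cold,1699900000,1700000000
"""

DAY = 86_400


def wide_buckets(rows, max_span_secs: int = DAY):
    """Return (bucketId, span_in_days) for buckets whose event time span
    (endEpoch - startEpoch) exceeds max_span_secs, widest first."""
    result = []
    for row in rows:
        span = int(row["endEpoch"]) - int(row["startEpoch"])
        if span > max_span_secs:
            result.append((row["bucketId"], round(span / DAY, 1)))
    return sorted(result, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    rows = csv.DictReader(io.StringIO(SAMPLE_CSV))
    for bucket_id, span_days in wide_buckets(rows):
        print(f"{bucket_id}: spans {span_days} days")
```

In the sample, the bucket spanning roughly 117 days is the kind that keeps old events around long after the retention period.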

(Most of this should be covered by the presentation @isoutamo pointed you to).

And unless you have very strict and unusual compliance requirements, you should not fiddle with the index bucket settings.


Hi

If I understand correctly, your issue is that some data is still searchable after the point at which you want it deleted from Splunk (or archived)? Do you have to define this based on a retention time, e.g. one set by legislation?

There is (at least) one excellent .conf presentation on how Splunk handles data internally. You should read this: https://conf.splunk.com/files/2017/slides/splunk-data-life-cycle-determining-when-and-where-to-roll-... Even though it was given a couple of years ago, it's still mostly valid. Probably the most important thing missing from it is Splunk SmartStore, but based on your configuration you are not using it.

Usually it's hard or almost impossible to get data removed exactly at your retention time. As @gcusello already said, and as you can also see in that presentation, events are stored in buckets, and a bucket expires and is removed only after all events inside it have expired. For that reason, buckets can contain much older data than you want.

How could you try to avoid it?

Probably the only way you could try to manage it is to ensure that every bucket contains events from only one day. Unfortunately, this is not possible in 100% of cases. There are usually situations where your ingested data contains events from several days (e.g. you onboard a new source system and also ingest its old logs). One thing you could try is to set these hot bucket parameters (from indexes.conf.spec):

```
maxHotSpanSecs = <positive integer>
* Upper bound of timespan of hot/warm buckets, in seconds.
* This is an advanced setting that should be set
  with care and understanding of the characteristics of your data.
* Splunkd applies this limit per ingestion pipeline. For more
  information about multiple ingestion pipelines, see
  'parallelIngestionPipelines' in the server.conf.spec file.
* With N parallel ingestion pipelines, each ingestion pipeline writes to
  and manages its own set of hot buckets, without taking into account the state
  of hot buckets managed by other ingestion pipelines. Each ingestion pipeline
  independently applies this setting only to its own set of hot buckets.
* If you set 'maxHotBuckets' to 1, splunkd attempts to send all
  events to the single hot bucket and does not enforce 'maxHotSpanSeconds'.
* If you set this setting to less than 3600, it will be automatically
  reset to 3600.
* NOTE: If you set this setting to too small a value, splunkd can generate
  a very large number of hot and warm buckets within a short period of time.
* The highest legal value is 4294967295.
* NOTE: the bucket timespan snapping behavior is removed from this setting.
  See the 6.5 spec file for details of this behavior.
* Default: 7776000 (90 days)

maxHotIdleSecs = <nonnegative integer>
* How long, in seconds, that a hot bucket can remain in hot status without
  receiving any data.
* If a hot bucket receives no data for more than 'maxHotIdleSecs' seconds,
  splunkd rolls the bucket to warm.
* This setting operates independently of 'maxHotBuckets', which can also cause
  hot buckets to roll.
* A value of 0 turns off the idle check (equivalent to infinite idle time).
* The highest legal value is 4294967295
* Default: 0
```

With those settings, you could try to keep each hot bucket open for at most one day. But if/when you ingest events that are not from the current day, those will still mess this up!
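A back-of-the-envelope way to see why capping the bucket span helps: if every bucket spans at most maxHotSpanSecs, and a bucket is frozen once its newest event passes frozenTimePeriodInSecs, then its oldest event can be up to roughly frozenTimePeriodInSecs + maxHotSpanSecs old when it is finally removed. The Python sketch below only does that arithmetic. It assumes the span limit is actually honoured (per the spec text, it is not with maxHotBuckets = 1, and late-arriving events also break it) and ignores the delay of the periodic freeze check and any size-based freezing; the function name is hypothetical.

```python
DAY = 86_400


def worst_case_oldest_event_age_days(frozen_time_period_secs: int,
                                     max_hot_span_secs: int) -> float:
    """Upper bound (in days) on the age of a bucket's oldest event when the
    bucket becomes eligible for time-based freezing, assuming every bucket
    spans at most max_hot_span_secs."""
    return (frozen_time_period_secs + max_hot_span_secs) / DAY


if __name__ == "__main__":
    retention = 15_552_000  # frozenTimePeriodInSecs from the question (180 days)
    for label, span in [("default maxHotSpanSecs (90 days)", 7_776_000),
                        ("maxHotSpanSecs = 86400 (1 day)", 86_400)]:
        print(label, "->", worst_case_oldest_event_age_days(retention, span), "days")
```

Under these assumptions the bound drops from 270 days with the default span to 181 days with a one-day span.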

But unless you have a legal reason to do this, I don't recommend closing every bucket so that it contains events from at most one day. This could lead to other issues, e.g. with bucket counts in the cluster, longer restart times, etc.

r. Ismo


Hi @rickymckenzie10,

First of all, this isn't a question for the Community but for Splunk PS or a Splunk Certified Architect!

Anyway, if you have data that exceeds the retention period, it means that the same bucket also contains events that are still within the retention period, and for this reason the bucket isn't discarded.

I don't like changing the default index parameters.

But you have reached the maximum size of some of your indexes, and for this reason some of their buckets will be discarded shortly.

What's your issue: that there are events past the retention period that aren't being discarded, or that you have reached the maximum index size?

In the first case, you only have to wait; in the second case, you have to increase the maximum index size.
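For the second case, here is a simplified Python model of size-based freezing: when an index grows past maxTotalDataSizeMB, the oldest buckets are frozen first until the index fits again. It is a sketch under assumptions, not splunkd's implementation: it approximates "oldest" by each bucket's newest event time, uses made-up bucket sizes, and `buckets_to_freeze` is a hypothetical name.

```python
from typing import List, Tuple

# (bucket name, newest event epoch, size in MB): made-up sample data.
BucketInfo = Tuple[str, int, int]


def buckets_to_freeze(buckets: List[BucketInfo], max_total_mb: int) -> List[str]:
    """Return the names of buckets a size cap would freeze, oldest first,
    until the remaining total size is at or below max_total_mb."""
    total = sum(size for _, _, size in buckets)
    frozen = []
    # Assumption: "oldest" is approximated by the newest event time in each bucket.
    for name, _latest, size in sorted(buckets, key=lambda b: b[1]):
        if total <= max_total_mb:
            break
        frozen.append(name)
        total -= size
    return frozen


if __name__ == "__main__":
    sample = [
        ("b3", 3_000, 10_000),
        ("b1", 1_000, 10_000),
        ("b2", 2_000, 10_000),
    ]
    # Total is 30,000 MB; with a 15,000 MB cap, the two oldest buckets go.
    print(buckets_to_freeze(sample, max_total_mb=15_000))
```

Raising `max_total_mb` in the example shortens the list, which is why a larger maximum index size keeps data around longer.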

I don't see any configuration issues, except that maybe maxWarmDBCount is too high.

Ciao.

Giuseppe