- How to measure approximately the source device is ...


Dears

I need an advice from experts who have past experience on splunk, Please do not advise for splunk professional services or Partner help,

- How i can measure approximately the source device is generating how much number of data that i need to ingest in splunk , there must be some way to assume till some extend for example a firewall will generate more logs than a windows server.

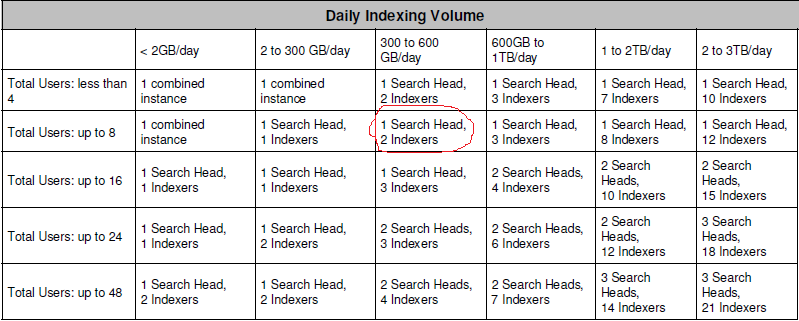

- Lets assume if i m ingesting a 300GB/day in splunk and i have 5 administrative users using search head then the highlighted below is good to follow.

- If i am adding Enterprise security module then the sizing changes,?? how much additional data ingestion needs to be added and what is the math behind this ?

thanks




Well, that's what partners are for. There are many factors that can affect the environment size (like various apps, inputs, projected search characteristics). You might need additional heavy forwarder(s). Or not. You might need a separate deployment server. Or you may do with combining it with DS. You might need to put some thought into organizing your onboarding process (especially syslog sources). And so on.

Yes, there are rough estimates based on typical cases, but yours can turn out to be an unusual one. And believe me, you can kill any cluster with sufficiently badly written dashboards 😉

A good approach would be to install a small trial version (or even request a PoC license through your partner), do a test onboarding of a representative subset of your sources, and just see for yourself.


Hi @adamgibs,

if you don't want advice about Professional Services or a Partner (always my preferred solution, because ES isn't straightforward to configure), I at least suggest taking the Enterprise Security Admin training.

Anyway, adding ES to your Splunk Enterprise doesn't require additional data ingestion: the data you use are the same!

About sizing: you don't have large requirements in data volume or users, so the minimum requirements depend on whether you need HA in your architecture:

- full HA: Search Head Cluster and Indexer Cluster,

- data HA: single Search Head and Indexer Cluster,

- no HA.

In the first case, you need at least 3 SHs, n IDXs and one Master Node + Deployer.

In the second case, you need at least one SH dedicated to ES (plus a separate SH if you want to run other apps), n IDXs and a Master Node.

In the third case, you need at least one SH dedicated to ES (plus a separate SH if you want to run other apps) and n IDXs.

The number of IDXs depends on the data volume: with ES, Splunk usually suggests one IDX for every 100-150 GB/day of ingestion, so in your case (300 GB/day) at least 2-3 IDXs.
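The indexer count is just the daily volume divided by the per-indexer capacity, rounded up. A minimal Python sketch, assuming the 100-150 GB/day per-indexer range quoted above; the function name `indexers_needed` is hypothetical and not part of any Splunk tool:

```python
import math


def indexers_needed(daily_gb: float, gb_per_indexer: float) -> int:
    """Smallest indexer count that keeps each indexer at or below gb_per_indexer per day."""
    if daily_gb <= 0 or gb_per_indexer <= 0:
        raise ValueError("volumes must be positive")
    return math.ceil(daily_gb / gb_per_indexer)


# 300 GB/day with ES, at both ends of the 100-150 GB/day per-indexer range
for per_idx in (100, 150):
    print(f"{per_idx} GB/day per indexer -> {indexers_needed(300, per_idx)} indexers")
```

This only covers the ingestion rule of thumb; it does not add spare capacity for HA, unusual search load, or growth.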

The reference hardware for ES is larger than for Splunk Enterprise alone; you need at least:

- SH:
  - 16 CPU
  - 32 GB RAM
  - 100 GB HD (system)
- IDX:
  - 16 CPU
  - 32 GB RAM
  - 100 GB HD (system)
  - storage
- Master Node / Deployer:
  - 12 CPU
  - 12 GB RAM
  - 100 GB HD (system)

Storage sizing depends on the volume and retention of your data, and also on the Replication Factor and Search Factor of your Indexer Cluster; you can calculate the storage requirements at http://splunk-sizing.appspot.com/
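As a rough cross-check of what a sizing calculator will tell you, the cluster storage arithmetic can be sketched in Python. This assumes the commonly quoted rule of thumb that compressed rawdata takes about 15% of the raw daily volume and index files about 35%; real ratios vary a lot with the data, so treat the defaults as placeholders. Every one of the RF copies keeps rawdata, while only the SF searchable copies also keep index files. The function name `cluster_storage_gb` and the 90-day retention in the example are hypothetical:

```python
def cluster_storage_gb(daily_gb: float, retention_days: int, rf: int, sf: int,
                       rawdata_ratio: float = 0.15, index_ratio: float = 0.35) -> float:
    """Rough total disk (GB) across the whole indexer cluster.

    Assumed rule of thumb: rawdata ~15% and index files ~35% of raw volume.
    """
    if not 1 <= sf <= rf:
        raise ValueError("need 1 <= Search Factor <= Replication Factor")
    # every replicated copy keeps rawdata; only searchable copies keep index files
    per_day = daily_gb * (rawdata_ratio * rf + index_ratio * sf)
    return per_day * retention_days


# Example: 300 GB/day, 90 days retention, RF=2, SF=2, spread over 3 indexers
total = cluster_storage_gb(300, 90, rf=2, sf=2)
print(f"cluster total: {total:,.0f} GB, per indexer: {total / 3:,.0f} GB")
```

The calculator linked above goes further (hot/warm/cold tiers, per-index retention), so use this only as a sanity check.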

Ciao.

Giuseppe


Hello Giuseppe,

Thanks for the reply, and please bear with me while you answer my questions.

- So it seems that my requirement will be 1 SH and 3 indexers to be on the safe side, hence the table snippet shared in my earlier post can be used as a reference to start with.

- Which document does the reference hardware you proposed come from? I am referring to the Capacity Planning Manual 8.2.5, and the figures there are different.

- I am using this link https://teskalabs.com/products/logman.io/eps-calculator/ to calculate the number of GB to be ingested. Please advise: am I on the right path, or do you have other ideas?

Thanks


Hi @adamgibs,

the table you shared comes from the Architecting Splunk training course and applies only to Splunk Enterprise. You have ES, so the sizing I suggested comes from the training course I mentioned in my previous answer (Enterprise Security Admin).

To be more precise, the ES Admin training course recommends a maximum daily indexing volume of 80-100 GB/day per Indexer, but when I worked with Splunk PS, they suggested using 100-150 GB/day.

The link you shared in item 3 could be OK for estimating the Splunk license, but I suggest checking with your own eyes the real number of events of each kind, and adding a margin (20-30%).
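Counting the real events of each kind and adding a 20-30% margin boils down to events per second × average event size × seconds per day. A minimal Python sketch of that arithmetic, assuming you have measured EPS and average event size per source type (for example during a trial onboarding). The class and function names are hypothetical, GB here means 10^9 bytes, and the device counts and sizes in the example are made-up placeholders, not real measurements:

```python
from dataclasses import dataclass

SECONDS_PER_DAY = 86_400


@dataclass
class SourceKind:
    name: str
    devices: int
    eps_per_device: float   # measured average events per second per device
    avg_event_bytes: float  # measured average raw event size in bytes


def estimated_daily_gb(sources: list[SourceKind], margin: float = 0.25) -> float:
    """Raw GB/day (decimal GB) for all sources, plus a safety margin."""
    total_bytes = sum(
        s.devices * s.eps_per_device * s.avg_event_bytes * SECONDS_PER_DAY
        for s in sources
    )
    return total_bytes / 1e9 * (1 + margin)


# Illustrative placeholder numbers only; replace them with what you measure.
sources = [
    SourceKind("firewall", devices=2, eps_per_device=500, avg_event_bytes=300),
    SourceKind("windows_server", devices=50, eps_per_device=5, avg_event_bytes=1000),
]
for margin in (0.2, 0.3):
    print(f"margin {margin:.0%}: {estimated_daily_gb(sources, margin):.1f} GB/day")
```

This estimates raw ingest volume, which is what the license is measured on; the indexer and storage sizing then start from that figure.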

You still have to size your storage, and for that you need something like the link I shared, or something similar.

Tell me if I can help you more.

Ciao.

Giuseppe

P.S. Karma Points are appreciated by all contributors 😉


Dear Giuseppe,

Please bear with me; maybe I am not able to understand your reply, and with a bit more elaboration I will understand what you are trying to explain.

> The number of IDXs depends on the data volume, usually, using ES, Splunk hints one IDX to ingest 100-150 GB/day, in your case at least 2-3 IDXs.

What does the above line mean? Why are we considering additional GB for Enterprise Security?

Can you elaborate with some simple math?

Thanks


The guidelines for sizing Splunk environments are just that: guidelines assuming some typical load. With a "bare" Splunk Enterprise installation, some typical search activity is assumed, which makes it OK to ingest a certain amount of data while leaving enough processing power to search it. Notice that the amount of data ingested usually influences the amount of data used in searches, so the more data you put in your indexes, the more data you later search through.

But that calculation is based on some assumptions about a "clean" Splunk installation. If you add the Enterprise Security app, it generates quite a significant load on the servers on its own, so the daily volume each server with given specs can handle usually drops significantly (again: the searches generated by ES usually cover the data you're ingesting, so the more data you put into Splunk, the more data you'll probably be querying with your ES searches).

Remember that with Splunk the typical way of scaling your environment for search efficiency is not to pump up the specs of a single indexer, but to add more indexers, so that properly written searches are distributed across nodes and each node processes its share of the data in parallel. (You can't do anything about badly written searches; you can kill any setup with a sufficiently badly written dashboard :D)


Dear Rick / Giuseppe,

Thanks for replying to my post and bearing with me. I am proud to be getting replies from you experts.

I totally agree with your post: when we add the ES app, the load on the indexers increases, so we need to add indexers. So let's assume I am ingesting 900 GB/day and have the ES app with 2 search admins. According to Giuseppe's advice, I have to procure 6 indexers (150 GB/day per indexer) + 2 SHs (1 SH would be enough because I have only 2 admins, but to be on the safe side I will procure 2).

Please correct me if I am wrong.


Hi @adamgibs,

yes, the number of Indexers is correct.

About Search Heads: with two SHs you are more likely to have at least one active even during maintenance or a failure, but with only two SHs you cannot configure a Search Head Cluster (you need at least three SHs), so you don't get configuration replication between the SHs and you have to keep them aligned manually.

So, since you have a large architecture (6 IDXs, 2 SHs, 1 MN, 1 DS, n HFs), I suggest adding another SH and configuring a Search Head Cluster, so you won't have any more replication problems (the Deployer doesn't need a dedicated server and can be configured on the Master Node or on the Monitoring Console).
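Putting the thread's numbers together: 900 GB/day at 150 GB/day per indexer gives 6 indexers, and a Search Head Cluster needs at least three members. A small Python sketch of that tally, assuming the Deployer is colocated on the Master Node as suggested above and leaving heavy forwarders out because their number depends on your inputs; `plan_es_deployment` and the dictionary keys are hypothetical:

```python
import math

SHC_MIN_MEMBERS = 3  # a Search Head Cluster needs at least three search heads


def plan_es_deployment(daily_gb: float, gb_per_indexer: float = 150,
                       wanted_search_heads: int = 2) -> dict[str, int]:
    """Rough component count for ES with a Search Head Cluster and an indexer cluster."""
    indexers = math.ceil(daily_gb / gb_per_indexer)
    # two search heads cannot form a cluster, so round up to the SHC minimum
    search_heads = max(wanted_search_heads, SHC_MIN_MEMBERS)
    return {
        "indexers": indexers,
        "search_heads": search_heads,
        "master_node_with_deployer": 1,  # Deployer colocated on the Master Node
        "deployment_server": 1,
    }


print(plan_es_deployment(900))
```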

Ciao.

Giuseppe