
Failed Login Anomalies detection - EventCode=4625?


Hello

I have the following fields in EventCode=4625 (failed login) events:

_time, Source_Network_Address, Account_Name, Workstation Name, EventCode

I want to create anomaly detection rules based on the source address field, to check whether there is a relatively high number of failed logins from the same source address.

I am currently using a static threshold (...| where count > 50), but I want it to be dynamic, following weekday/weekend and morning/night changes.

Can anyone give me some direction or a query example?

Thanks


Hi @David_Shoshany,

if a division between working days and weekends is sufficient for you, my previous solution (a separate threshold for weekends) can solve your problem.

If instead you want a threshold that varies based on the previous week's data, it's a little more complicated:

- you could schedule a weekly search that computes statistics on the previous week's results,

- derive the daily threshold as a percentage of these results,

- write the thresholds to a lookup,

- use these values in the alert.
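A minimal sketch of the weekly scheduled search from the steps above, assuming the events are in the `wineventlog` index used elsewhere in this thread. The lookup name `failed_login_thresholds.csv`, the hourly granularity (so that morning/night differences are captured too), the 150% factor, and the floor of 10 are all hypothetical choices to tune for your environment:

```spl
index=wineventlog EventCode=4625 earliest=-7d@d latest=@d
| bin _time span=1h
| stats count BY _time, Source_Network_Address
| eval wday=lower(strftime(_time, "%A")), hour=strftime(_time, "%H")
| stats max(count) AS max_count BY wday, hour
| eval threshold=max(round(max_count * 1.5), 10)
| table wday hour threshold
| outputlookup failed_login_thresholds.csv
```

Dropping `hour` from the search gives one threshold per day, as in the steps above. A weekday/hour slot with no failures in the previous week produces no row in the lookup, so the alert should fall back to a default value in that case.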

There's only one problem: holidays.
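One way to handle holidays in the alert is a second lookup listing the holiday dates. This is a sketch under several assumptions: the threshold lookup from the steps above is stored as a hypothetical `failed_login_thresholds.csv` with `wday`, `hour`, and `threshold` columns; holidays are kept in a hypothetical `holidays.csv` that you maintain yourself, with `day` (YYYY-MM-DD) and `holiday_name` columns; a holiday is treated like a Sunday; and the original static value of 50 is used when no threshold is found. It is meant to run hourly:

```spl
index=wineventlog EventCode=4625 earliest=-1h@h latest=@h
| stats count BY Source_Network_Address
| eval ref=relative_time(now(), "-1h@h")
| eval day=strftime(ref, "%Y-%m-%d"), wday=lower(strftime(ref, "%A")), hour=strftime(ref, "%H")
| lookup holidays.csv day OUTPUT holiday_name
| eval wday=if(isnotnull(holiday_name), "sunday", wday)
| lookup failed_login_thresholds.csv wday hour OUTPUT threshold
| eval threshold=coalesce(tonumber(threshold), 50)
| where count > threshold
```

Because the search evaluates the previous complete hour (`-1h@h` to `@h`), the day and hour used for both lookups are taken from the start of that window rather than from the moment the alert runs.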

Ciao.

Giuseppe


Hi @David_Shoshany,

what kind of dynamic threshold do you have in mind?

If you need one threshold for working days (e.g. 50) and one for weekends (e.g. 20), it's easy: add this line to your search:

```spl
| eval threshold=if(date_wday="saturday" OR date_wday="sunday",20,50)
```

If you also want to handle holidays, it's more complicated, because you need to create a lookup containing all the days of the year.
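Put together as a complete hourly alert, the weekday/weekend threshold could look like this sketch. The `wineventlog` index and the 50/20 values come from this thread; the one-hour window and the lower night-time threshold (before 07:00 and from 20:00, to cover the morning/night difference) are assumptions. Because `stats` keeps only the fields in its `BY` clause, the `eval` goes before `stats` and `threshold` is carried through the `BY` clause:

```spl
index=wineventlog EventCode=4625 earliest=-1h@h latest=@h
| eval threshold=if(date_wday="saturday" OR date_wday="sunday" OR tonumber(date_hour)<7 OR tonumber(date_hour)>=20, 20, 50)
| stats count BY Source_Network_Address, threshold
| where count > threshold
```

Note that the `date_*` fields reflect the timestamp as written in the raw event and are only present when Splunk extracts the timestamp from the event itself.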

Ciao.

Giuseppe


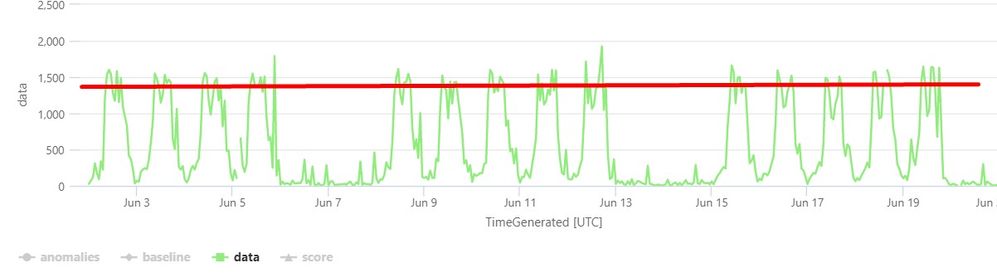

Hi, I would like the query to follow the changes throughout the week (the green line), which means the threshold would be dynamic rather than static (the red line).
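The green line can be approximated without a lookup by comparing each hour with the same weekday and hour in previous weeks. This sketch swaps in a different technique from the one described in this thread: a statistical baseline (average plus three standard deviations) computed with `eventstats` over the last four weeks. The index, the 4-week window, and the factor 3 are assumptions, and the search reads a lot of data on every run, which is the performance concern raised later in the thread:

```spl
index=wineventlog EventCode=4625 earliest=-28d@h latest=@h
| bin _time span=1h
| stats count BY _time, Source_Network_Address
| eval wday=strftime(_time, "%a"), hour=strftime(_time, "%H")
| eventstats avg(count) AS avg_count stdev(count) AS sd_count BY wday, hour
| eval threshold=round(avg_count + 3 * coalesce(sd_count, 0))
| where _time >= relative_time(now(), "-1h@h") AND count > threshold
```

The baseline only counts hours in which a source actually had failures (hours with zero failures produce no rows), so it is biased upward. Adding `Source_Network_Address` to the `eventstats` `BY` clause gives a per-source baseline instead of one shared across all sources.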


I'm new to Splunk and have no training whatsoever. I have Splunk Enterprise and would like to set up a way to mitigate my login failures, which appear on the main screen under Active Directory events. Can someone provide step-by-step directions? I have tried adding a dashboard, but I am truly lost.


Hi @jalen1331,

it isn't a good idea to add a new question to an old, already answered thread, because you probably won't get an answer: open a new one and you'll get more, faster, and probably better answers to your questions!

Anyway, trying to use Splunk without any training isn't a good approach, even though Splunk is very easy to use. You can find many useful videos on the Splunk YouTube Channel (https://www.youtube.com/@Splunkofficial), and there are many free training courses (https://www.splunk.com/en_us/training/free-courses/overview.html). At a minimum, I suggest following the Splunk Search Tutorial to understand how to use SPL (https://docs.splunk.com/Documentation/Splunk/latest/SearchTutorial/WelcometotheSearchTutorial) and the documentation about getting data in (https://docs.splunk.com/Documentation/SplunkCloud/latest/Data/Getstartedwithgettingdatain); otherwise it's really difficult to use Splunk.

In fact, for your use case, you should:

- first, ingest the data,

- then identify and parse it,

- finally, run a search to extract the results you want,

but it isn't an immediate path.

In conclusion, I suggest following a complete training path, at least at the Splunk User level.

Ciao.

Giuseppe


I didn't ask for your opinion on what you think I should do. I asked for a command to fix the issue. That isn't what the message board is for. You could have kept that to yourself.


Hi @jalen1331,

OK, I stand by both of my opinions even if you don't want to hear them, maybe because they are correct.

Anyway, could you better describe your requirements?

What do you mean by "a way to mitigate my login failures"?

If you want to identify login failures in Active Directory, you can run a simple search like this:

```spl
index=wineventlog EventCode=4625
```

But you didn't describe what you want to know: a threshold for login failures, the source of the failures, or something else?
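To go one step further than listing raw events, a sketch that ranks source addresses by failed logins and shows which accounts they tried. The 24-hour range and the top-20 cut are arbitrary choices:

```spl
index=wineventlog EventCode=4625 earliest=-24h
| stats count AS failures dc(Account_Name) AS distinct_accounts values(Account_Name) AS accounts BY Source_Network_Address
| sort - failures
| head 20
```

Saved as a dashboard panel, this answers the "source of login failures" question; appending `| where failures > 50` turns it into a simple static-threshold alert like the one in the original question.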

Ciao.

Giuseppe


@gcusello thank you.


You can use the timewrap command to compare a value with the previous period (one week, day, month, etc.). The problem is that in this case you have to search over a long time frame and many events, which means the results take a long time. It's better to have a value that you extract once a week and reuse until the next scheduled search.
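A sketch of the `timewrap` comparison, assuming the `wineventlog` index from this thread and a four-week window; each output series is one week, so the current week can be charted against the same hours in previous weeks:

```spl
index=wineventlog EventCode=4625 earliest=-4w@w latest=@h
| timechart span=1h count
| timewrap 1w
```

This is handy for visualizing the weekly pattern, but it scans four weeks of events every time it runs, which is why a weekly baseline stored in a lookup is cheaper for an alert.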

Ciao and see you next time.

Giuseppe

P.S. Karma Points are appreciated.