Why are milliseconds not being parsed in a cluster environment - Splunk Enterprise 7.0.1?

Splunk setup

- Cluster environment

- Splunk Enterprise 7.0.1

- CentOS 7

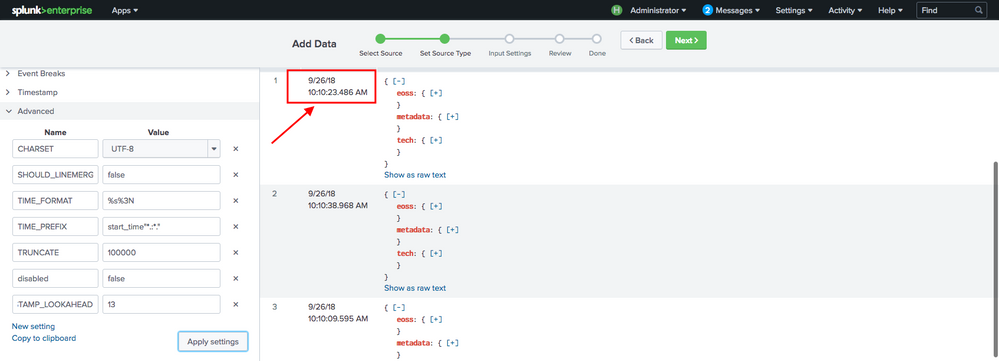

I set up props.conf on the index master (./etc/master-apps/_cluster/local/props.conf):

[my_source_type]

CHARSET=UTF-8

SHOULD_LINEMERGE=false

TIME_FORMAT=%s%3N

TIME_PREFIX=start_time"*.:*."

TRUNCATE=60000

disabled=false

MAX_TIMESTAMP_LOOKAHEAD=13

Raw event example:

{"metadata":{"start_time":"150479000","delivered_by":"CDN"}}

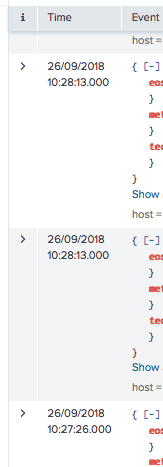

But events never get assigned a millisecond value.

When using the upload utility, Splunk parses the timestamp correctly with the same setup.

Any ideas?

Thank you.

UPDATE:

After further debugging, the reason the milliseconds did not appear turned out to be very simple:

I was using a transforms.conf on the indexer with the setting DEST_KEY = _meta.

I changed it to WRITE_META = true and everything works; there is no need to force local processing anymore.
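For readers who want to see the difference, here is a minimal sketch of the two variants. The stanza names, regex and field name are hypothetical, not the ones from my setup. My understanding (an assumption, not something confirmed in this thread) is that DEST_KEY = _meta replaces the existing _meta value, which is where the sub-second part of the timestamp appears to be kept, while WRITE_META = true appends to it.

```ini
# transforms.conf on the indexer
# (stanza names, REGEX and field name are hypothetical examples)

# Variant that lost the milliseconds: the FORMAT result replaces _meta.
[add_env_field_overwrite]
REGEX = env=(\w+)
FORMAT = env::$1
DEST_KEY = _meta

# Variant that kept the milliseconds: the FORMAT result is appended to _meta.
[add_env_field]
REGEX = env=(\w+)
FORMAT = env::$1
WRITE_META = true
```

Only one of the two stanzas would be referenced from props.conf; they are shown side by side purely for comparison.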

You may still read below for awareness...

I also had the same issue on an unstructured source:

Splunk Enterprise

Version: 7.1.0

Build: 2e75b3406c5b

Source file: source::/var/log/springboot/dailyLogFile.log

Mask: [INFO ] 2019-02-01 11:02:13.178 ...

After trying for a couple of hours to get milliseconds parsed correctly, the solution I found was to set the force_local_processing property to true on the Splunk Universal Forwarders, in

/opt/splunkforwarder/etc/system/local/props.conf

[source::/var/log/springboot/dailyLogFile.log]

# 2019-02-01 11:02:13.178

# TIME_FORMAT=%Y-%m-%d %H:%M:%S.%3N -> It was proven not needed as datetime.xml seems to cover it ???

# TIME_PREFIX=^\[\w*\s*\]\s -> It was proven not needed as datetime.xml seems to cover it ???

force_local_processing=true

You need to restart the Splunk Universal Forwarder for the changes to take effect:

/opt/splunkforwarder/bin/splunk restart

I tried to configure the setting at the indexers using multiple configurations, without success.

Ultimately I found the answer in https://docs.splunk.com/Documentation/Splunk/7.2.3/Admin/Propsconf

Maybe Splunk should open the source code; it would make it much easier for us to debug this.

IMPORTANT: After forcing local processing, I also had to add my transforms.conf setup locally on the forwarders, as the transforms would no longer be applied at the indexers.
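As a rough sketch of what "adding the transforms locally" can look like on the forwarder: the source stanza and force_local_processing come from the setup above, while the TRANSFORMS class name, the transform stanza name, the regex and the field name are hypothetical placeholders.

```ini
# props.conf on the universal forwarder
[source::/var/log/springboot/dailyLogFile.log]
force_local_processing = true
# Hypothetical class name and transform stanza name
TRANSFORMS-springboot = springboot_add_field

# transforms.conf on the same forwarder
# (stanza name, REGEX and field name are hypothetical examples)
[springboot_add_field]
# Captures the level from a line starting with e.g. "[INFO ] 2019-02-01 ..."
REGEX = ^\[(\w+)\s*\]
FORMAT = log_level::$1
WRITE_META = true
```

Both files need to be deployed to every forwarder that reads this source, followed by a forwarder restart.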

I managed to fix this by setting up props.conf not on the index master but on each individual Splunk universal forwarder of the cluster.

/opt/splunkforwarder/etc/system/local/props.conf

[example_sourcetype]

INDEXED_EXTRACTIONS = json

CHARSET=UTF-8

SHOULD_LINEMERGE=false

TIME_FORMAT=%s%3N

TIME_PREFIX=start_time"*.:*."

TRUNCATE=60000

disabled=false

MAX_TIMESTAMP_LOOKAHEAD=13

I bumped into this when reading https://docs.splunk.com/Documentation/Splunk/latest/Forwarding/Routeandfilterdatad

That page lists caveats for routing and filtering structured data, namely:

The forwarded data must arrive at the indexer already parsed.

This is what prompted me to set this on the forwarder and not on the indexer.