What causes splunk to decide to make a new hot bucket?

What are the conditions that cause Splunk to create a new hot bucket? I have maxDataSize=auto (750MB) set on my index. I was expecting data to be written to the same bucket until maxDataSize was reached (at which point it would roll to warm). But that is not what's happening. It looks like Splunk is creating a new bucket 1) when it gets "old" data and 2) seemingly at random. This is my current indexes.conf entry:

[foo]

homePath = $SPLUNK_DB/foo/db

coldPath = $SPLUNK_DB/foo/colddb

thawedPath = $SPLUNK_DB/foo/thaweddb

maxDataSize = auto

maxHotBuckets = 10

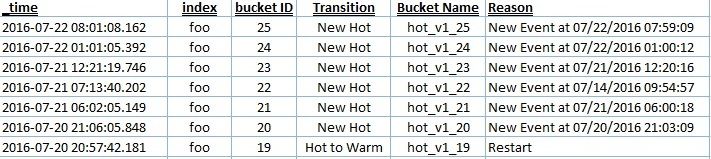

These are the results from Fire Brigade Log Activity-->Bucket Lifecycle. (I had to retype them because I am on a closed network):

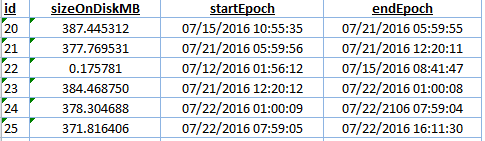

I also have the output from this search: |dbinspect index=foo | convert ctime(startEpoch) | convert ctime(endEpoch) | fields id sizeOnDiskMB startEpoch endEpoch

I really need to understand why the new buckets are getting created. I am working to update my indexes.conf entry to ensure that no data older than 45 days is stored in an index. I understand that bucket 22 was created because old data came in. But I don't understand why it created #24 instead of just putting those events into bucket #23, and the same goes for #25.

Unfortunately, this data source routinely has "older" data coming in. Typically it's minutes/hours, but sometimes if a system feeding us is down, we can get data that is days old. My end goal is to configure my index so that I can enforce a 45 day retention period. I was toying with adding a maxHotSpanSecs to limit the span of the data in any given bucket to 1 day. But I'm not sure what that will do to the number of buckets since I'm not understanding why multiple buckets are being created.

Did you ever receive a useful answer on that?

The answer behind why Splunk decides to create a new hot bucket vs. just adding to one that's already open is a bit beyond me. In fact, it's probably deep-dive, esoteric code that only a couple of developers understand. Subjectively, it feels like it happens when there are multiple "timelines" in the environment, such as a skewed clock or batch-style feeds (e.g. an hour of data all at once). But that's not the problem here.

You're talking about retention. The goal of "keep no more than" is actually a bit harder to achieve than "keep at least". But @ddrillic pointed to maxHotSpanSecs, which is what you're going to need. If you force a hot bucket to span no more than one day, then the boundaries for a bucket are much cleaner, and the retention policy can be properly enforced. There are special values of 3600 and 86400 which trigger a "snap to" functionality that is probably overkill in this scenario. Consider 86399!
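As a rough sketch of what that could look like, assuming the [foo] stanza from the question: the 86399 value is the one suggested above, while frozenTimePeriodInSecs = 3888000 (45 days x 86400 seconds) is my assumption for the 45 day target and was not posted in this thread. Keep in mind that a bucket is only frozen once its newest event is older than frozenTimePeriodInSecs, so with buckets spanning up to a day, some events could stick around roughly a day past the 45 day mark.

```ini
[foo]
homePath = $SPLUNK_DB/foo/db
coldPath = $SPLUNK_DB/foo/colddb
thawedPath = $SPLUNK_DB/foo/thaweddb
maxDataSize = auto
maxHotBuckets = 10
# Limit each hot bucket to just under one day of event time.
# 86399 rather than 86400 avoids the special "snap to" behavior.
maxHotSpanSecs = 86399
# Assumed value for a 45 day retention target: 45 * 86400 = 3888000.
# A bucket is frozen only when its newest event is older than this.
frozenTimePeriodInSecs = 3888000
```

Comments are on their own lines because a trailing comment on a setting line would be read as part of the value. Test this on a non-production index first, since narrower bucket spans with late-arriving data will likely increase the bucket count.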

My understanding is that maxDataSize is one of three parameters involved. The other two are maxHotBuckets and maxHotSpanSecs.

In essence, buckets have a maximum data size and a time span and both can be configured.

Their defaults are -

maxDataSize = 750 MB

maxHotSpanSecs = 7776000

maxHotBuckets = 3 or 10 (not clear to me ;-)

One reason is restarting splunkd. You can configure your retention policy based on time instead of size.