Are you a member of the Splunk Community?

- Find Answers

- :

- Apps & Add-ons

- :

- All Apps and Add-ons

- :

- Sending logs over scp to heavy forwarder, why does...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm using heavy forwarder to take logs in from a cloud ESA appliance. The logs sending over every 5 minutes via scp (deleting old files every 2 hours after modtime stops) work fine, line by line and time stamps all good. For some reason splunk is randomly ingesting some events by grabbing from a random place in the file, giving it a time stamp, and calling it an event.

For example, I most commonly get 1 - 3 characters, like ile, or id. Sometimes I get the middle to the end of an event. I don't understand why it's doing this, there is no line breaking (it's disabled) and I have enabled crcsalt by source.

I'm using splunk 6.4.6 on the indexers, and 6.6.1 on the heavy forwarder (started with 6.4.6). If I upload the file to my dev box, it's fine; for some reason the monitor feature of splunk is having issues. I also ingest WSA (but with universal forwarder) over scp, and I don't have these issues.

I have put the ESA app on the heavy forwader and search heads. Also tried just indexers and search heads, only input on heavy forwarder. None this changed anything.

This is all virtualized Linux, Ubuntu 64bit LTS servers.

props.conf:

CHARSET = utf-8

MAX_TIMESTAMP_LOOKAHEAD = 24

NO_BINARY_CHECK = true

SHOULD_LINEMERGE = false

TRUNCATE = 250000

TIME_PREFIX = \w{3}\s

TRANSFORMS-drop=esa_header_drop

transforms.conf

[esa_header_drop]

REGEX=^.*Info:\s(Begin\sLogfile|Logfile\srolled\sover|End\sLogfile|Version\:\s|Time\soffset\sfrom\sUTC\:).*

DEST_KEY=queue

FORMAT=nullQueue

I log amp, mail, gui, auth, http, via scp. They all have the props above, individually configured.

Sample event (good and bad) from one, full auth file:

le

Tue Jun 6 15:12:22 2017 Info: A publickey authentication attempt by the user ***** from 0.0.0.0 failed using an SSH connection.

Tue Jun 6 15:10:40 2017 Info: logout:10.0.0.1 user:- session:blahgfregre

Tue Jun 6 15:09:28 2017 Info: logout:10.0.0.25 user:- blaggj4iogjio3

Tue Jun 6 15:09:20 2017 Info: A publickey authentication attempt by the user ***** from 0.0.0.0 failed using an SSH connection.

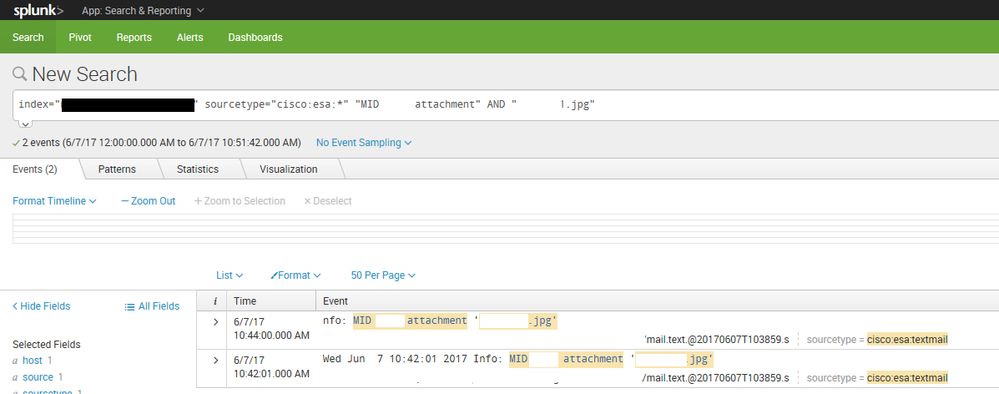

Below is a screenshot of search finding one event, that gets mangled and duplicated (using the suggestion break before regex). Checking the file, there is only one event on one line, no idea why splunk is doing this.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Upgrading to 6.6.2 and it now works fine (testing for 48 hours anyway).

EDIT: To clarify, I only updated the heavy forwarder to 6.6.2, and this appears to have fixed it.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Although I dont have answer to your question, but I guess you can help me with my question.

Can you list the steps to do the following for me please

1- Configure Cisco ESA to send logs to Heavy Forwarder

2- Splunk side configuration (Especially capturing all the sourcetypes correctly i.e. , authentication, textmail, http etc.)

Our design is, that all endpoints send logs to heavy forwarder, we use syslog-ng. Add-ons are pushed by deployment servers to Heavy forwarders. All logs are then sent from Heavy forwarders to indexers using Universal Forwarders.

The problem in this setup is, that, at the Heavy Forwarder level, we can assign only one sourcetype to all the logs coming for a single endpoint. In case of ESA, we are getting multiple sourcetypes from the same end points.

I hope you can help me with that as you have already got it configured.

Thanks.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Upgrading to 6.6.2 and it now works fine (testing for 48 hours anyway).

EDIT: To clarify, I only updated the heavy forwarder to 6.6.2, and this appears to have fixed it.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for following up!

Any tangible explanation for that?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I did get some vague explanation from support about larger events not being processed correctly due to some logic on the backbend that may fix my issue.

Also, I updated the answer, appears this is specific to the forwarder component of splunk. As an FYI, I have not tested the latest 6.6.2 UF (the problem was present on both when this started).

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I would try using BREAK_ONLY_BEFORE = RegEx

Use the RegEx for your timestamp here (write it yourself), so your events should only get split up when a new timestamp occurs.

Skalli

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for the suggestion, this also doesn't work (still breaks and ends randomly).

Thinking this is a bug with monitoring feature on the heavy forwarder, but I'm open to more suggestions.

A side note, elasticsearch and filebeat sending the events from the same box don't have this problem at all; seems indicative that this is a splunk bug. Either way, once I find something that works through support, community, or just banging my head against the splunk wall until it works, I'll post it here.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I dont think its a bug, i think its the write buffers in your file. If you tail the file, you might even visually see the break. If the breaks happen often, fire up a tail -f and grab a coffee lol

You can confirm this by manually indexing the file manually AFTER it has been completely copied to the dir (oneshot) or just scp to ur desktop and upload the data into the add data wiz to confirm your props are all good

If the linebreaking is all good, then you have proven that all your config is fine, and you are dealing with a timing issue.

I would suggest, an easy way around this is to use a cronjob to move the files from the landing dir, to a different dir splunk is monitoring and I bet you dont see this issue.

I dont know enough ( or care to investigate) how Elastic and filebeat monitor the files, but Splunk is doing a live tail of the file so the time_before_close and multiline should get you close, but the main issue here is it can be an issue when monitoring a file AS it is being written.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

When I uploaded to the dev box, it works fine. I think you're on to something with the ESA uploading via scp several files and splunk ingesting them in near real-time. I used time_before_close=120 and it didn't do anything.

I just had a short file come in while tailing, about 10 lines that splunk mangled twice. The tail -f command showed the file as it is on the filesystem (correctly), so me tailing the file isn't showing a problem.

What is odd is, the WSA (same Cisco appliance OS) sends the logs over in a similar fashion and doesn't have this problem at all (we've had this running for years now). Not sure why now it is all of a sudden a problem.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

out of curiosity, if you find a broken event, then go back to the HF and find the real event, you can search those pieces in the index and examine the _indextime to see what the gap was....would be interesting to see if the pieces are on more than one indexer or both...

in the meantime quick cron should solve this for you while you continue to poke...

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I added a script to concatenate the files to a their own respective log files after not being modified for a minute and then deleting them (only if step 1 is successful). This leaves splunk to follow these new log files completely and logrotate handles clean up. This seems to have worked for several hours.

The fact that elasticsearch can monitor the files just fine, and splunk works fine with a buffer tells me there is some kind of a bug with the monitoring feature of the heavy forwarder receiving these types of logs over scp. I will keep this open and also push to have my ticket resolved appropriately.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

hey side note, are you using forceTimeBasedAutoLB on this HF??

My client was, and I thought I had proved it was unrelated, but I am going to revisit and turn it off. Technically its useless on an HF as I understand anyways...

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I do have "autolb" set on the output conf, but it seems 6.6.1 doesn't really even support that anymore... Is that what you're referring to?

We have two indexers, when PS set this up, it was before 6.4 came along with better support.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

yeah can you try turning off forceTimeBasedAutoLB and see if it resolves the issue? It is actually not needed on an HF because an HF knows event boundaries. It was more of UF thing. Obviously monitor your HF closely to ensure it switches Idx and spreads your data nicely after the change.

http://docs.splunk.com/Documentation/Splunk/6.6.1/Admin/Outputsconf

forceTimebasedAutoLB = [true|false]

* Forces existing streams to switch to newly elected indexer every

AutoLB cycle.

* On universal forwarders, use the EVENT_BREAKER_ENABLE and

EVENT_BREAKER settings in props.conf rather than forceTimebasedAutoLB

for improved load balancing, line breaking, and distribution of events.

* Defaults to false.

I am going to have my client try it as well.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I just have autoLB configured (an old deprecated setting). I see I don't need that, so I removed it. It appears that the forceTimeBasedAutoLB option is by default false, but I set it to that anyway in the output. This did not change anything, the problem persisted.

Trying the EVENT_BREAKER_ENABLE option on the HF now.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Nope, still happening.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

u want to leave autolb, it was just the forceTimeBasedLB that we wanted to check. You want the autoLB on.

Ok can you check the internal logs for either of these conditions?

1) If there are far too many files getting dropped at the same time. There will be a log message "File descriptor cache is full (100), trimming".

2) There are TcpOutputProc WARN message regarding read timeout.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I checked all log files for those, no mention of cache AND full, or TcpOutputProc.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Quick update, I have routed the original logs to a dev box (not a cluster, indexer and search head, 6.6.1). The problem is reproducible. I have turned on debug logging and support is looking at sending this to an engineering team. I won't say the b word again, but man, this has been a real pain in the rear to get such simple logs into splunk!

@mmodestino, do you have a case number on your end? Support is interested in merging cases if another customer is having issues with the ESA logs via scp or the malformed events.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

i have a case open already, but i think the root cause is different. the source is not scp and ESA...but i think the debug is the right move to put this one to bed. looking forward to seeing what you guys find.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

SSH has pretty bad performance, and therefore, so does scp. Once the network buffer is full, it's slow slow slow. There are a couple of ways around this: a) use the high performance network patches for SSH available here PSC HPN Patches (I use these myself on servers which do large file transfers), or switch to an ftp over SSL implementation, netcat, or similar.

For example, I had a customer using straight ftp to constantly transfer high volume firewall logs like this without issue.

I'm not even sure the HPN patches would do what is needed in this case, because of connection pauses. If logs are not coming continuously through that pipe, then you're going to have the connection restart overhead throwing off your time_before_close. So maybe add something like the following to /etc/ssh/sshd_config:

ClientAliveInterval 120

ClientAliveCountMax 720

I presume there's some sort of security policy to prevent you from just using syslog here?