Re: How to predict end and start time of jobs when...


I want to predict the start and end times of some jobs. I am currently using the Linear Regression algorithm in the "Predict Numeric Fields" assistant of the Machine Learning Toolkit. I have made some progress on the prediction, but I am not sure whether this approach is right.

I need more guidance on this, and I don't have much additional data.

The only field I can use for prediction is Job Group (the group a job belongs to). I don't have many other fields for predicting start and end times.

If anybody knows a different approach, please let me know.

Thanks in advance.


Hi,

This is an interesting case. First, ML can only derive (and predict) relationships that actually exist in the data. I have seen people try to retrofit data into models, almost as if everything can be explained and predicted by ML. Trust me, it can't.

Now, coming to your case: as you correctly say, start time is not a good independent variable (predictor) for job duration. The model you are using, linear regression, assumes a linear relationship between start time and job duration. For example, on a 24-hour scale, as the clock ticks from 1 to 24, your job duration would have to either generally increase or generally decrease with the hour. I don't think that assumption holds. What is more likely, as with most systems, is that job duration rises or falls only at particular hours. For example, at 18:00 the end-of-business-day jobs run, and because multiple jobs are running at 6 PM, job durations are inordinately high. This is not a linear process at all, so you cannot assume that the linear relationship your model requires actually exists.
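To make this concrete, here is a minimal Python sketch (standard library only) on made-up data: jobs take about 60 seconds except at 18:00, when they take 600. Those numbers are assumptions for illustration, not the poster's data. An ordinary least-squares line through hour of day explains almost none of the variation, because the spike is not a trend.

```python
# Hypothetical data: one run per hour, 60 s normally, 600 s at 18:00
# when end-of-business-day jobs compete for resources.
hours = list(range(24))
durations = [600.0 if h == 18 else 60.0 for h in hours]


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1 - ss_res / ss_tot


slope, intercept = fit_line(hours, durations)
linear_pred = [slope * h + intercept for h in hours]
print(f"slope = {slope:.2f} s/hour, R^2 = {r_squared(durations, linear_pred):.3f}")
# prints: slope = 3.05 s/hour, R^2 = 0.038
```

A small positive slope and an R-squared near zero are what you would expect whenever duration peaks at particular hours rather than rising or falling steadily.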

The same applies to your job groups as an independent variable.

What is more apt for your case is random forest regression, which builds many decision trees on random subsets of the data and averages their predictions, so it can capture non-linear patterns such as a spike at particular hours. Even so, there has to be some underlying pattern for the model to detect and predict.
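For reference, a random forest regressor averages the predictions of many regression trees, each grown on a bootstrap sample of the rows (and, depending on settings, considering a random subset of features at each split). The sketch below shows only the building block: one depth-limited regression tree on a single feature, written from scratch on the same made-up hourly data. It is not the MLTK or scikit-learn implementation. Two threshold splits are enough to isolate the 18:00 spike that a straight line misses.

```python
def sse(values):
    """Sum of squared deviations from the mean (0.0 for an empty list)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def build_tree(xs, ys, depth):
    """Greedy regression tree on one numeric feature, splitting on x < threshold.

    Returns a leaf value (float) or a tuple (threshold, left_subtree, right_subtree).
    """
    mean = sum(ys) / len(ys)
    if depth == 0 or len(set(ys)) == 1:
        return mean  # leaf: predict the mean target of its training rows
    best_cost, best_t = None, None
    for t in sorted(set(xs))[1:]:  # every threshold that leaves both sides non-empty
        left = [y for x, y in zip(xs, ys) if x < t]
        right = [y for x, y in zip(xs, ys) if x >= t]
        cost = sse(left) + sse(right)
        if best_cost is None or cost < best_cost:
            best_cost, best_t = cost, t
    if best_t is None:
        return mean  # all xs identical: nothing to split on
    left_rows = [(x, y) for x, y in zip(xs, ys) if x < best_t]
    right_rows = [(x, y) for x, y in zip(xs, ys) if x >= best_t]
    return (
        best_t,
        build_tree([x for x, _ in left_rows], [y for _, y in left_rows], depth - 1),
        build_tree([x for x, _ in right_rows], [y for _, y in right_rows], depth - 1),
    )


def predict(tree, x):
    """Walk from the root to a leaf and return its value."""
    while isinstance(tree, tuple):
        threshold, left, right = tree
        tree = left if x < threshold else right
    return tree


# Same hypothetical data as before: 60 s per run, 600 s at 18:00.
hours = list(range(24))
durations = [600.0 if h == 18 else 60.0 for h in hours]
tree = build_tree(hours, durations, depth=2)
print(tree)  # (18, 60.0, (19, 600.0, 60.0))
```

The tree reads as (threshold, left, right): hours before 18 predict 60, hour 18 predicts 600, and hours from 19 on predict 60.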

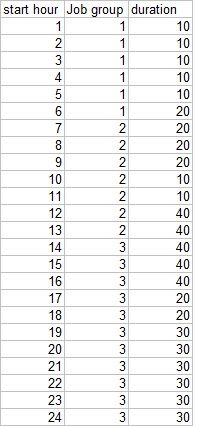

I suggest have a historical data set of something like this-

Now, try running three models with random forest regression (or even decision tree regression):

1st - Use only the start hour as the input (if you think the start minute is a more likely candidate, use it instead) and try to predict the duration.

2nd - Try the same exercise with only job group as your independent variable.

3rd - Try the same exercise with BOTH start hour and job group as independent variables.
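The three runs can be sketched in Python with a deliberately simple stand-in for the tree model: each duration is predicted as the mean duration of the rows that share the same feature values, which is roughly what a fully grown tree does with these inputs. The six rows are invented (job group A takes 60 s, B takes 300 s, and both take 240 s longer at 18:00), and R-squared is computed on the same rows the stand-in model was built from, so a real comparison should use held-out test data.

```python
from collections import defaultdict

# Hypothetical rows: (start_hour, job_group, duration_seconds).
rows = [
    (2, "A", 60), (2, "B", 300),
    (10, "A", 60), (10, "B", 300),
    (18, "A", 300), (18, "B", 540),
]


def group_mean_model(rows, key):
    """Predict each row's duration as the mean duration of rows sharing key(row)."""
    buckets = defaultdict(list)
    for row in rows:
        buckets[key(row)].append(row[2])
    means = {k: sum(v) / len(v) for k, v in buckets.items()}
    return [means[key(row)] for row in rows]


def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1 - ss_res / ss_tot


actual = [r[2] for r in rows]
feature_sets = {
    "start hour only": lambda r: r[0],
    "job group only": lambda r: r[1],
    "hour + group": lambda r: (r[0], r[1]),
}
for name, key in feature_sets.items():
    print(f"{name:16s} R^2 = {r_squared(actual, group_mean_model(rows, key)):.3f}")
```

On these rows the start hour alone gives an R-squared of about 0.47, the job group alone about 0.53, and both together 1.0, because the invented durations depend on both fields.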

Check your R-squared values: if R-squared is reasonably high (0.8 or above), you have a valid prediction model.

NOTE - do not go by feel-good examples where R-squared is always 0.95 and above; life is not that sweet!

Also, if your data does not fit any regression model, it most likely means the fields you have carry no real pattern to base a prediction on.

Please post an update on your results; this case looks interesting.


Thanks for your valuable suggestion, Sukisen!!

We applied the Random Forest Regressor algorithm and found that it gives much better results than the previous one.

We are getting R-squared in the range 0.80-0.85, but RMSE is in the range 150-180.

Can we consider this a good result?

Also, I am not sure whether it will work if we pass JobGroup as a string in "Fields to use for predicting". Or do we need to supply only numeric values for the model to learn a relationship?

Thanks in advance


Hi,

I have the following suggestions and questions.

1- How big is your sample data set, and what split have you used between training and test data?

2- I don't know how many distinct values your JobGroup field has, but is it possible to convert them to numeric values, as you suggested? It might be a bit much if you have too many job groups, but I would still suggest trying.

3- About RMSE: a high RMSE is generally not good. What does it mean? It means that where predicted and actual values differ, the differences are large. Because RMSE squares each error before averaging, even if 95% of your predictions are reasonably accurate, the errors on the remaining 5% can be so large that your overall RMSE becomes too big.

4- This does not necessarily invalidate your model; remember this is an IT scenario. For example, say that around 11 AM on 5-6 days someone ran other unplanned jobs (or jobs that normally take 2 minutes took 20 minutes or an hour, or even hung and had to be killed). That would produce a very high RMSE, but it does not mean your model is invalid. Jobs in any IT environment will typically have scenarios like this.

5- Have you considered applying preprocessing? Choose StandardScaler and apply it to both the dependent and independent variables; it may improve your prediction.

6- What does your adjusted R-squared look like?
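Points 3 and 4 can be checked with a few lines of arithmetic. In this minimal Python sketch the 100 errors are invented: 95 predictions miss by 5 seconds and 5 hung runs miss by 1000 seconds. Because RMSE squares each error before averaging, those 5 runs push it above 200 seconds even though the median error is 5 seconds. The 100-second cut-off for a "large" error is an arbitrary assumption.

```python
import math
import statistics

# Hypothetical absolute errors (seconds) for 100 predictions:
# 95 runs are off by 5 s, 5 runs hung and are off by 1000 s.
errors = [5.0] * 95 + [1000.0] * 5

rmse = math.sqrt(sum(e * e for e in errors) / len(errors))  # root mean squared error
mae = sum(errors) / len(errors)                              # mean absolute error
median_error = statistics.median(errors)
large = sum(1 for e in errors if e > 100)  # assumed threshold for a "large" miss

print(f"RMSE = {rmse:.1f}, MAE = {mae:.2f}, median = {median_error}, "
      f"large errors = {large}/{len(errors)}")
```

Reporting the count of large errors alongside RMSE separates "a few outliers" from "errors everywhere".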

So, as next steps, I suggest the following:

1- Apply an 80-20 or 90-10 split between your training and test data and see how accurate your predictions are. With an 80-20 split, the predictions for the held-out 20% can be compared with the actual values, so you can see how well the model really behaves.

2- Quantify your JobGroup and run random forest again; let's see how long it takes to run 🙂

3- Lastly, and most importantly, check how often your predicted values differ substantially from the actuals. Say you have 10,000 data points but only 10-20 cases with really large errors: your model is good. It simply means that on a few rare occasions something happened (bad code, a system outage) that made the jobs take longer than normal. What would be a concern is if the large errors are spread widely, say 10-20% of your predictions are badly off.
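Step 2 can be done in several ways, and which encoding MLTK applies internally is not covered here. This minimal Python sketch, with hypothetical group names and durations, shows three common options: integer (label) codes, one-hot columns, and target (mean) encoding, which replaces each group with its average historical duration.

```python
from collections import defaultdict

# Hypothetical JobGroup values and durations (seconds); the real data had 90+ groups.
job_groups = ["BILLING", "ETL", "BILLING", "REPORTS", "ETL"]
durations = [300.0, 60.0, 320.0, 900.0, 80.0]

# Label encoding: one integer code per distinct group (sorted for stable codes).
codes = {g: i for i, g in enumerate(sorted(set(job_groups)))}
label_encoded = [codes[g] for g in job_groups]

# One-hot encoding: one 0/1 column per distinct group.
columns = sorted(codes)
one_hot = [[1 if g == c else 0 for c in columns] for g in job_groups]

# Target (mean) encoding: each group's average duration.
# Compute this on training rows only, then apply it to the test rows.
totals, counts = defaultdict(float), defaultdict(int)
for g, d in zip(job_groups, durations):
    totals[g] += d
    counts[g] += 1
group_mean = {g: totals[g] / counts[g] for g in totals}
target_encoded = [group_mean[g] for g in job_groups]

print(codes)           # {'BILLING': 0, 'ETL': 1, 'REPORTS': 2}
print(label_encoded)   # [0, 1, 0, 2, 1]
print(target_encoded)  # [310.0, 70.0, 310.0, 900.0, 70.0]
```

Integer codes impose an arbitrary order on the groups, which tree models generally tolerate better than linear regression does; one-hot encoding avoids the order but adds one column per group (90+ columns here); target encoding must be computed from training rows only, or the test data leaks into the features.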

Once again, try applying preprocessing; it might still improve your model.
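For reference, standard scaling replaces each value x with (x - mean) / std. This minimal Python sketch with hypothetical durations uses the population standard deviation, as scikit-learn's StandardScaler does, and also shows two side effects worth knowing: scaling preserves the order of values, so the threshold splits of a tree-based model such as random forest are essentially unchanged by scaling the inputs; and an RMSE computed on a scaled target is in standard-deviation units rather than seconds.

```python
import statistics

# Hypothetical job durations in seconds.
durations = [60.0, 64.0, 600.0, 640.0]

mean = statistics.mean(durations)
std = statistics.pstdev(durations)  # population standard deviation (ddof=0)
scaled = [(d - mean) / std for d in durations]

# Scaling keeps the ordering, so "x < threshold" splits partition rows identically.
order_before = sorted(range(len(durations)), key=durations.__getitem__)
order_after = sorted(range(len(scaled)), key=scaled.__getitem__)
order_preserved = order_before == order_after

# An RMSE reported on the scaled target converts back to seconds by multiplying by std.
rmse_scaled = 0.5  # hypothetical value, for illustration only
rmse_seconds = rmse_scaled * std

print(f"mean = {mean}, std = {std:.1f}, order preserved = {order_preserved}, "
      f"RMSE 0.5 (scaled) = {rmse_seconds:.1f} s")
```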

It would be great to see samples of your actual data, if that is possible.

Keep me posted 🙂


Thank you, SukiSen,

Please find my answers to your questions below:

1- How big is your sample data set, and what split have you used between training and test data?

I have around 51k events, and the split is 80-20.

2- I don't know how many distinct values your JobGroup field has, but is it possible to convert them to numeric values, as you suggested? It might be a bit much if you have too many job groups, but I would still suggest trying.

I have converted JobGroup into a numeric field; it has more than 90 distinct values.

3- About RMSE: a high RMSE is generally not good. What does it mean? It means that where predicted and actual values differ, the differences are large. Because RMSE squares each error before averaging, even if 95% of your predictions are reasonably accurate, the errors on the remaining 5% can be so large that your overall RMSE becomes too big.

Yes, for some results the error is too big.

4- This does not necessarily invalidate your model; remember this is an IT scenario. For example, say that around 11 AM on 5-6 days someone ran other unplanned jobs (or jobs that normally take 2 minutes took 20 minutes or an hour, or even hung and had to be killed). That would produce a very high RMSE, but it does not mean your model is invalid. Jobs in any IT environment will typically have scenarios like this.

True.

5- Have you considered applying preprocessing? Choose StandardScaler and apply it to both the dependent and independent variables; it may improve your prediction.

I tried this, and my R-squared value increased to 0.98, which is really good, but the RMSE is still high, up to 198. I am not sure what to do; the RMSE was better previously.

6- What does your adjusted R-squared look like?

It's between 0.90 and 0.98 now.

So, as next steps, I suggest the following:

1- Apply an 80-20 or 90-10 split between your training and test data and see how accurate your predictions are. With an 80-20 split, the predictions for the held-out 20% can be compared with the actual values, so you can see how well the model really behaves.

Done. Still the same result as above.

2- Quantify your JobGroup and run random forest again; let's see how long it takes to run 🙂

I didn't get this.

3- Lastly, and most importantly, check how often your predicted values differ substantially from the actuals. Say you have 10,000 data points but only 10-20 cases with really large errors: your model is good. It simply means that on a few rare occasions something happened (bad code, a system outage) that made the jobs take longer than normal. What would be a concern is if the large errors are spread widely, say 10-20% of your predictions are badly off.

Okay. Still, can I consider this model better than the previous one?


Hi,

Sorry, I was off the forum due to some burning issues at work.

The most important thing to consider here is the RMSE. I don't think I explained it well.

A high RMSE is a problem if the large errors are spread across 10-20% of your data set.

However, if only a few values have very large errors, your model is still sound.

Now, how many (or what percentage) of your 51k events have very large errors? You did not mention that.

Also, you say that without preprocessing you were already getting an RMSE of 150-180, and after preprocessing you get ~198. Considering the jump in your R-squared to 0.98 after preprocessing, it might be worth a shout.

And now for my summary:

1- A point to consider here (and always remember): RMSE is in the same units as your dependent variable. So, assuming you are predicting duration, what are some typical duration values? Are they in the range of 100-300 or so? If your durations are typically 200-300, your RMSE is more acceptable. However, if your dependent variable is always in the range of, say, 50-150, this RMSE is too high and indicates cases where predictions and actuals differ hugely.

2- If you can quantify your job groupings (90 distinct values is OK-ish), try running random forest again with both start time and job group as independent variables and job duration as the sole dependent variable, AND also try job group as the sole independent variable. Compare the R-squared and RMSE of the models from these two runs; if either looks better, consider using that model.

3- Finally, the RMSE on your test and training data should be similar. Is your test RMSE generally much higher than your training RMSE? If yes, you have overfit your data.

4- If your model does much better on the training set than on the test set, it is likely overfitting. For example, it would be a big red flag if the model achieved 99% accuracy on the training set but only 55% on the test set.
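Points 3 and 4 come down to computing RMSE separately on the training and test rows and comparing the two. In this minimal Python sketch both "models" are hypothetical lookup tables on invented runs: one memorises each run's exact start epoch and falls back to the overall mean for anything unseen, the other averages duration per start hour.

```python
import math

# Hypothetical runs: (start_epoch, start_hour, duration_seconds).
train = [(1000, 9, 60.0), (2000, 9, 64.0), (3000, 18, 600.0), (4000, 18, 640.0)]
test = [(5000, 9, 62.0), (6000, 18, 610.0)]


def rmse(actual, predicted):
    """Root mean squared error."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def fit_lookup(rows, key):
    """Mean duration per key(row); unseen keys fall back to the overall mean."""
    groups = {}
    for row in rows:
        groups.setdefault(key(row), []).append(row[2])
    means = {k: sum(v) / len(v) for k, v in groups.items()}
    fallback = sum(r[2] for r in rows) / len(rows)
    return lambda row: means.get(key(row), fallback)


models = {
    "memorise epoch": fit_lookup(train, key=lambda r: r[0]),
    "per start hour": fit_lookup(train, key=lambda r: r[1]),
}
results = {}
for name, model in models.items():
    train_rmse = rmse([r[2] for r in train], [model(r) for r in train])
    test_rmse = rmse([r[2] for r in test], [model(r) for r in test])
    results[name] = (train_rmse, test_rmse)
    print(f"{name}: train RMSE = {train_rmse:.1f}, test RMSE = {test_rmse:.1f}")
```

The epoch lookup scores a perfect 0.0 on training rows but about 274 on the test rows, the classic overfitting pattern; the per-hour average scores roughly 14 and 7, so its training and test errors are of similar size.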

Keep me posted 🙂 and sorry once again for the delay; I was really caught up in office work.


Is it possible to determine one of the values (e.g. start time) and then re-phrase the question as a problem of predicting duration?
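Rephrased that way, the model only has to learn the duration, and the end time follows by addition. A minimal Python sketch with invented epoch values; the 420-second prediction stands in for whatever a model returns.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical historical run: start and end as epoch seconds.
start_epoch, end_epoch = 1_700_000_000, 1_700_000_450

# Training target: duration in seconds.
duration = end_epoch - start_epoch

# At prediction time the start is known (scheduled or observed),
# so predicted end = start + predicted duration.
predicted_duration = 420.0  # placeholder for a model's output
start = datetime.fromtimestamp(start_epoch, tz=timezone.utc)
predicted_end = start + timedelta(seconds=predicted_duration)
print(duration, predicted_end.isoformat())
```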


Hi John,

Yes, I tried to predict duration by supplying the start time as an epoch value, because the Predict Numeric Fields assistant of the Machine Learning Toolkit requires numeric fields.

But I am still not getting good results: the gap between the actual duration and the predicted duration is vast.

For example, when the actual duration is 1 second, the prediction comes out around 2000.

I know that the start time alone won't be very helpful for predicting duration: two jobs that start at the same time will not necessarily end at the same time.
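One likely reason a raw epoch start time predicts poorly is that it only ever increases: every future run has an epoch value beyond anything in the training data, and tree-based models cannot extrapolate past their training range. Repeating calendar features such as hour of day and day of week can carry a daily or weekly pattern instead. A minimal Python sketch, assuming epoch seconds and UTC (use local time instead if the job schedules follow it); the feature names are illustrative, not MLTK field names.

```python
from datetime import datetime, timezone


def time_features(start_epoch):
    """Derive repeating calendar features from an epoch start time (UTC assumed)."""
    ts = datetime.fromtimestamp(start_epoch, tz=timezone.utc)
    return {
        "start_hour": ts.hour,        # 0-23
        "start_minute": ts.minute,    # 0-59
        "day_of_week": ts.weekday(),  # Monday = 0 ... Sunday = 6
    }


print(time_features(1_700_000_000))
```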

But when I give only JobGroup as the field to use for predicting, it says: "Dropping field(s) with too many distinct values: JobGroup"

How should I approach this now to get the end time right?

Thanks in advance!