How can I test if I am overfitting?

Hi

I would like to know whether I am overfitting. Why are my results too good?

I trained the algorithm with the MAY dataset, and it has never seen the JUNE dataset, yet its predictions for June are very good.

I have also tested it with a "dummy" dataset (the one that ships with MLTK by default), and there the results are bad.

I suspect that my SPL may be wrong, but I am not sure.

Thank you

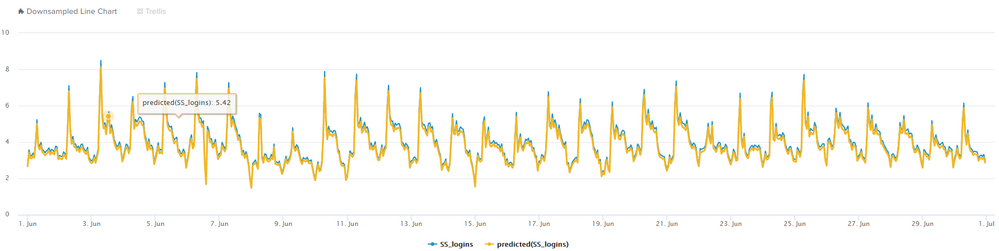

IMAGE 1

TRAIN

| inputlookup fortigate_QC_May2019_logins.csv //loading the dataset **MAY** company A

| fit StandardScaler "logins" with_mean=false with_std=true //normalizing data

| fit DBSCAN "SS_logins" //finding outliers

| where NOT isOutlier==-1 //erasing the outliers

| fit LinearRegression SS_logins from * into "authentication_profiling_LinearRegression" //applying the algorithm and saving it

TEST

| inputlookup fortigate_QC_June2019_logins.csv //loading the dataset **JUNE** company A

| fit StandardScaler "logins" with_mean=false with_std=true //normalizing the data

| apply "authentication_profiling_LinearRegression" //applying the saved model

| table _time, "SS_logins", "predicted(SS_logins)" //displaying actual vs. predicted values

TESTING WITH DUMMY DATASET

| inputlookup logins.csv //this is the dummy dataset: the logins are from company B

| fit StandardScaler "logins" with_mean=false with_std=true //normalizing

| apply "authentication_profiling_LinearRegression" //applying the model from company A

| table _time, "SS_logins", "predicted(SS_logins)"

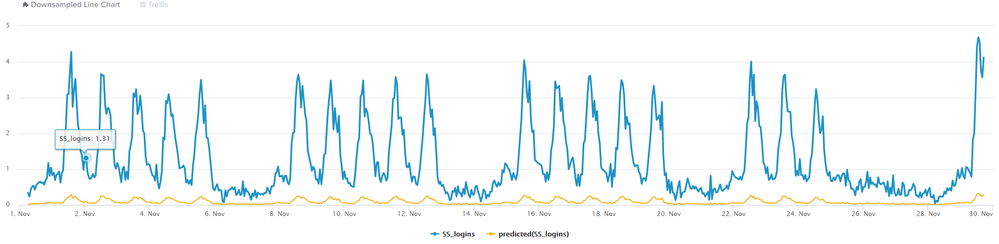

THIS IS THE PLOT WITH THE DUMMY DATASET

Results are not good.

IMAGE 2
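One thing worth ruling out in the SPL above, stated as an assumption rather than a diagnosis: if `from *` makes every field other than the target an explanatory variable, then the raw `logins` field is among the features, and `SS_logins` is just `logins` divided by a constant. In addition, the TEST pipelines refit the scaler (`fit StandardScaler`) on each new dataset instead of applying one saved at training time. The toy Python sketch below, with made-up numbers and a hand-written least-squares fit rather than MLTK's own code, shows what that combination would produce: a near-perfect score on any month whose spread matches May's, and a poor score on a dataset with a very different scale (the hypothetical `company_b` list standing in for the dummy dataset).

```python
# Toy illustration with made-up login counts; a hand-written least-squares fit,
# not MLTK's LinearRegression.
from statistics import fmean, pstdev

def scale(xs):
    """Like StandardScaler with_mean=false with_std=true: divide by the population std."""
    sd = pstdev(xs)
    return [x / sd for x in xs]

def fit_line(xs, ys):
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    mx, my = fmean(xs), fmean(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - b * mx, b

def r2(ys, preds):
    """Coefficient of determination of preds against ys."""
    my = fmean(ys)
    ss_res = sum((y - p) ** 2 for y, p in zip(ys, preds))
    ss_tot = sum((y - my) ** 2 for y in ys)
    return 1 - ss_res / ss_tot

may = [120, 95, 140, 80, 160, 110, 130, 70, 150, 100]
june = [100, 150, 70, 130, 110, 160, 80, 140, 95, 120]  # same values reshuffled: same spread as May
company_b = [10 * x for x in may]                         # hypothetical data on a 10x larger scale

# TRAIN: the target is the scaled logins, and the raw logins column is (assumed to be) a feature
a, b = fit_line(may, scale(may))

# TEST: refit the scaler on each new dataset (as the TEST SPL does), then apply the saved model
scores = {}
for name, data in [("june", june), ("company_b", company_b)]:
    preds = [a + b * x for x in data]
    scores[name] = r2(scale(data), preds)
    print(name, round(scores[name], 3))
```

In the toy, the June score is perfect only because the leaked `logins` column lets the model undo the scaling, not because it learned anything about login patterns. Naming the explanatory fields explicitly instead of using `*`, as the later `fit LinearRegression` in this thread does, is a quick way to rule this out.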

---

Hi @rosho

The way to test for overfitting is with the training dataset itself. When you build the training model for May, split the May dataset 50-50, then 60-40, and so on until you reach 100-0. If you see that your prediction accuracy keeps increasing as you raise the training percentage from 50 to 100, you are overfitting the data.

This does not mean that your prediction accuracy should not increase as you put more and more data into the training set, but it should not increase by too much.

Look at the Splunk MLTK examples: almost all of them use a 50-50 split between training and test data.
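The split experiment described above can be sketched in plain Python. Everything here is hypothetical: the data are made up, the split is time-ordered (the first share% of rows train the model, the rest are held out), and `fit_memorizer` is a deliberately overfitting 1-nearest-neighbour stand-in used only for contrast, not anything MLTK provides. A common way to read the output is that a model that overfits scores near-perfectly on its training rows and much worse on the held-out rows, while a model that generalizes scores similarly on both. At 100-0 there are no held-out rows left to score.

```python
from statistics import fmean

def r2(ys, preds):
    """Coefficient of determination of preds against ys."""
    my = fmean(ys)
    ss_res = sum((y - p) ** 2 for y, p in zip(ys, preds))
    ss_tot = sum((y - my) ** 2 for y in ys)
    return 1 - ss_res / ss_tot

def fit_linear(xs, ys):
    """Least-squares straight line; returns a predict function."""
    mx, my = fmean(xs), fmean(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    a = my - b * mx
    return lambda x: a + b * x

def fit_memorizer(xs, ys):
    """Hypothetical 1-nearest-neighbour 'model': it memorizes the training rows."""
    rows = list(zip(xs, ys))
    return lambda x: min(rows, key=lambda r: abs(r[0] - x))[1]

def split_scores(xs, ys, fit, shares=(50, 60, 70, 80, 90)):
    """For each training share, fit on the first share% of rows and score both parts."""
    out = []
    for share in shares:
        cut = len(xs) * share // 100
        model = fit(xs[:cut], ys[:cut])
        train = r2(ys[:cut], [model(x) for x in xs[:cut]])
        held_out = r2(ys[cut:], [model(x) for x in xs[cut:]])
        out.append((share, round(train, 3), round(held_out, 3)))
    return out

# Hypothetical data: a linear trend plus a small repeating wobble
xs = list(range(40))
ys = [2 * x + 5 + ((x * 7) % 5) - 2 for x in xs]

for name, fit in [("linear", fit_linear), ("memorizer", fit_memorizer)]:
    for share, train, held_out in split_scores(xs, ys, fit):
        print(f"{name:9} {share}-{100 - share}: train R2={train}, held-out R2={held_out}")
```

The memorizer scores exactly 1.0 on its training rows at every split but below 0 on the held-out rows, which is the overfitting pattern to look for; the linear model's held-out score stays well above it. With only a few held-out rows (as at 90-10), the held-out score becomes noisy, which is one reason the MLTK examples keep a large test share.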

I also have concerns about your algorithm. You seem to be trying to predict logins, and I have two major concerns here:

1 - You are using too limited a dataset (May only) to predict data for November; this will not work out. Remember that most businesses have cycles: ideally, if you plan to predict logins for Jan-Dec, your training dataset should cover Jan-Dec for the last two years, or at least for the last year. I do not believe that just one month of data is a good enough base.

2 - You are using a linear regression model. What are the dependent and independent variables? Are you sure they actually have a linear relationship? An example of a linear relationship is CPU usage versus server load. An example where linear regression will fail is CPU usage versus the hour of the day: there is no linear relationship here, since CPU usage at 23:00 will be lower than at 10:00, so it does not simply grow with the hour. There is no inverse linear relationship either: if you run 24x7 campaigns that increase server load (and hence CPU usage), the load on campaign days will be significantly higher than during normal times, and not at all linear. Please check your algorithm as well.
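To make the linear versus non-linear point concrete, here is a small plain-Python sketch with made-up curves (both the CPU/load line and the CPU/hour cycle, which peaks at 10:00 and bottoms out at 22:00, are hypothetical). A least-squares straight line fits the linear relationship perfectly but explains only a small fraction of the daily cycle, even though the cycle is completely deterministic.

```python
import math
from statistics import fmean

def r2_of_line(xs, ys):
    """Fit y = a + b * x by least squares and return R^2 on the same points."""
    mx, my = fmean(xs), fmean(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    a = my - b * mx
    ss_res = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - my) ** 2 for y in ys)
    return 1 - ss_res / ss_tot

# Hypothetical linear case: CPU usage grows in proportion to server load
load = list(range(0, 100, 5))
cpu_vs_load = [5 + 0.8 * x for x in load]

# Hypothetical daily cycle: CPU usage peaks at 10:00 and bottoms out at 22:00
hours = list(range(24))
cpu_vs_hour = [50 + 30 * math.sin(2 * math.pi * (h - 4) / 24) for h in hours]

print("CPU vs load:", round(r2_of_line(load, cpu_vs_load), 3))
print("CPU vs hour:", round(r2_of_line(hours, cpu_vs_hour), 3))
```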

---

Hi @Sukisen1981

I trained the model with 100% of the May dataset and then tested it with the June dataset. Because the predictions are too good (see image 1), I also tried the dataset that ships with MLTK by default (image 2) to check whether I had leaked information to the algorithm (I tested both LinearRegression and SGDRegressor).

Why are the results in image 1 so good if there is no linear relationship?

---

Hi @rosho

If you train a model with 100% of the dataset, you will end up overfitting your model drastically.

Questions:

1) What happens if you reduce the split to 50-50 or 80-20 in the training dataset? What R-squared value do you see? Does it decrease significantly compared to using 100% of the data?

2) Once again, is it possible to have a larger training dataset, such as the last 1-2 years of data from Jan-Dec, and then test for overfitting by changing the split ratio?

3) Are you sure a linear (LinearRegression / SGDRegressor) relationship actually exists in the way your dataset is structured?
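For reference, the R-squared value asked about in question 1 is usually defined as follows, where $y_i$ are the actual values, $\hat{y}_i$ the predicted values and $\bar{y}$ the mean of the actual values:

$$R^2 = 1 - \frac{\sum_i (y_i - \hat{y}_i)^2}{\sum_i (y_i - \bar{y})^2}$$

It equals 1 for a perfect fit, and on held-out data it can drop below 0 when the model does worse than always predicting the mean, so computing it separately on the training rows and on the held-out rows of each split is what makes the comparison informative.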

You may be making two fundamental machine learning mistakes.

First, the training dataset (for any model, be it regression, clustering or forecasting) should be much larger than the prediction set, and you should never use 100% of the dataset for training. This leads to overfitting.

Second, and more importantly, you may be trying to predict something that is not predictable in the first place with the given dataset and variables.

---

I am using 100% of the MAY dataset for training. Then I do the testing with the JUNE dataset.

June has not been used for training; it is only used for testing. That is why I do not understand why the results are so good.

Maybe it is my SPL code.

TRAIN: MAY dataset

TEST: JUNE dataset

---

Hi @rosho

Have you tried the options I suggested above?

You might be getting valid predictions for June based on May data, but that could be a coincidence, and it is definitely a case of overfitting when you use 100% of the training dataset to generate a model.

At the end of the day, it is your requirement, and you are free to decide how to approach this. Once again, training on 100% of just one month of data might give you good results for one or two months, but it will definitely fail in the long run.

The question is not why you are getting good results for June; the question is why your model does not work for all months.

---

I do not have much data.

I changed the SPL. Now I am using moving averages to create more features, and then I run the regression.
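For readers unfamiliar with the `trendline` options used below, this plain-Python sketch shows the textbook definitions of the three moving averages over a window of 5. These are assumptions about what `sma5`, `wma5` and `ema5` compute (linearly increasing weights for WMA, smoothing factor 2 / (N + 1) for EMA, seeded with the first value); Splunk's exact treatment of the first few events may differ. Since all three are averages of the same recent values, they tend to be strongly correlated with each other.

```python
def sma(values, n):
    """Simple moving average of the last n values (None until n values are available)."""
    return [None if i + 1 < n else sum(values[i + 1 - n:i + 1]) / n for i in range(len(values))]

def wma(values, n):
    """Weighted moving average: weights 1..n, the most recent value weighted n."""
    weights = range(1, n + 1)
    total = sum(weights)
    out = []
    for i in range(len(values)):
        if i + 1 < n:
            out.append(None)
        else:
            window = values[i + 1 - n:i + 1]  # oldest first, newest last
            out.append(sum(w * v for w, v in zip(weights, window)) / total)
    return out

def ema(values, n):
    """Exponential moving average with alpha = 2 / (n + 1), seeded with the first value."""
    alpha = 2 / (n + 1)
    out, prev = [], None
    for v in values:
        prev = v if prev is None else alpha * v + (1 - alpha) * prev
        out.append(prev)
    return out

logins = [12, 15, 11, 18, 20, 17, 25, 22]  # hypothetical login counts
print(sma(logins, 5))
print(wma(logins, 5))
print(ema(logins, 5))
```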

| inputlookup fortigate_QC_May2019_logins.csv

| trendline sma5(logins) as sma5_logins ema5(logins) as ema5_logins wma5(logins) as wma5_logins

| eval this_date_day = strftime(_time, "%w")

| eval this_date_hour = strftime(_time, "%H")

| eval this_date_day = this_date_day."_"

| eval this_date_hour = this_date_hour."_"

| reverse

| streamstats current=f window=5 first(logins) as LoginsFromTheFuture

| reverse

| fit StandardScaler "LoginsFromTheFuture" "ema5_logins" "sma5_logins" "wma5_logins" with_mean=false with_std=true into "authentication_profiling_StandardScaler2"

| fit LinearRegression "SS_LoginsFromTheFuture" from "SS_ema5_logins" "SS_sma5_logins" "SS_wma5_logins" "this_date_day" "this_date_hour" fit_intercept=true into "authentication_profiling_LinearRegression2"
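Appending "_" to `this_date_day` and `this_date_hour` presumably turns the numbers into text so that MLTK treats them as categorical fields; MLTK's documentation describes one-hot encoding of categorical fields, but the intent of the suffix is an assumption here. The plain-Python sketch below, using a hypothetical week of hourly logins with the same daily shape every day, shows why that matters for a linear model: with the hour as a single number a straight line captures little of the daily cycle, while one indicator column per hour lets the regression reproduce the hourly profile.

```python
import math
from statistics import fmean

def r2(ys, preds):
    """Coefficient of determination of preds against ys."""
    my = fmean(ys)
    ss_res = sum((y - p) ** 2 for y, p in zip(ys, preds))
    ss_tot = sum((y - my) ** 2 for y in ys)
    return 1 - ss_res / ss_tot

def one_hot(labels):
    """One indicator column per distinct label."""
    cats = sorted(set(labels))
    return cats, [[1 if lab == c else 0 for c in cats] for lab in labels]

# Hypothetical hourly logins for one week with the same daily shape every day
hours = [h for _ in range(7) for h in range(24)]
logins = [50 + 30 * math.sin(2 * math.pi * (h - 4) / 24) for h in hours]

# 1) Hour as a number: least-squares straight line
mx, my = fmean(hours), fmean(logins)
b = sum((x - mx) * (y - my) for x, y in zip(hours, logins)) / sum((x - mx) ** 2 for x in hours)
line_r2 = r2(logins, [my + b * (x - mx) for x in hours])

# 2) Hour as a category ("10_" etc.): with one indicator per hour and no other
#    features, the least-squares coefficient of each indicator is that hour's mean
labels = [f"{h}_" for h in hours]
cats, X = one_hot(labels)
coef = {c: fmean(y for lab, y in zip(labels, logins) if lab == c) for c in cats}
onehot_r2 = r2(logins, [sum(coef[c] * bit for c, bit in zip(cats, row)) for row in X])

print(round(line_r2, 3), round(onehot_r2, 3))
```

With one indicator per hour and no other features, the fitted value for each row is simply the average for that hour, so the one-hot version scores close to 1 here only because every simulated day is identical; real data will not be that clean.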