- Re: Does the Azure AD add on retrieve the complete...

Based on the data from our tenant, the add-on is not retrieving all of the sign-in records shown on the Azure Portal sign-in page.

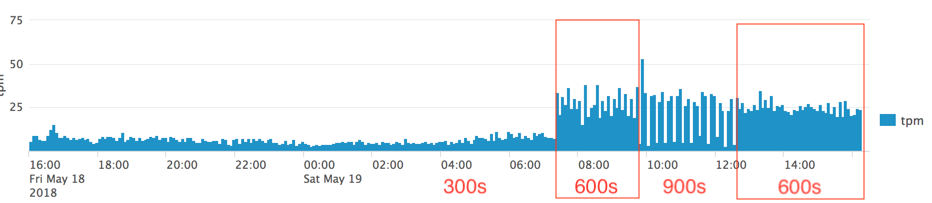

The number of records loaded appears to be correlated with the polling frequency. I have tried 300s (5m), 600s (10m) and 900s (15m), and in each case the add-on loads a different number of underlying events. The effect is quite marked.

Query for the chart above:

index=liquid_it sourcetype="ms:aad:signin"

| timechart span=5m count

| eval tpm=round(count / 5, 2)

| fields - count

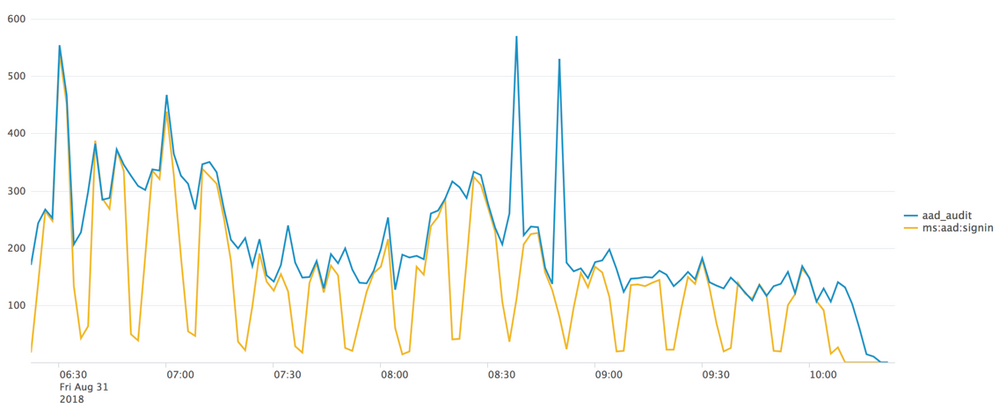

I set up an alternate ingest pipeline: AAD --> Event Hub --> Azure function --> Splunk HEC

That reliably produces a full set of the events. In the graph, the new ingest is shown as "aad_audit" and the reporting add-on as "ms:aad:signin". The difference is quite marked.
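For anyone wanting to try the same route, here is a rough sketch of what the function step might do before posting to HEC. It assumes each Event Hub message body is a JSON object with a `records` array (check this against your own data), and the helper names `to_hec_payload` and `send_to_hec` are made up for illustration. The `/services/collector/event` endpoint and the `Authorization: Splunk <token>` header are standard HEC; the URL, token and index are placeholders.

```python
import json
import urllib.request


def to_hec_payload(message_body, sourcetype="aad_audit", index=None):
    """Turn one Event Hub message body into a batched HEC request body.

    Assumes the body looks like {"records": [ {...}, {...} ]}.
    """
    records = json.loads(message_body).get("records", [])
    lines = []
    for record in records:
        envelope = {"event": record, "sourcetype": sourcetype}
        if index is not None:
            envelope["index"] = index
        lines.append(json.dumps(envelope))
    # HEC accepts several event objects stacked in one request body.
    return "\n".join(lines)


def send_to_hec(payload, hec_url, token):
    """POST a payload to HEC; hec_url and token are values you supply."""
    request = urllib.request.Request(
        hec_url,  # e.g. https://<splunk-host>:8088/services/collector/event
        data=payload.encode("utf-8"),
        headers={"Authorization": "Splunk " + token},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return response.status


# Build (but do not send) a payload from a made-up two-record message.
sample = json.dumps({"records": [{"id": "a"}, {"id": "b"}]})
payload = to_hec_payload(sample, index="liquid_it")
print(payload)
```

Because Event Hub pushes records as they become available, this route does not depend on a polling checkpoint at all.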

Here's a simple fix to the app, if the developer is watching this thread: in the API call, add '+and+signinDateTime+le+(current time - delay minutes)'. The new filter query will then look like:

&$filter=signinDateTime+gt+(check point time)+and+signinDateTime+le+(current time - delay minutes)

With delay minutes set to 5, this will get 99% of the data, considering Microsoft's latency of less than 5 minutes for 99% of events. And if you're making the change, please let the user control the delay minutes.
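To make the suggested filter concrete, here is a minimal sketch of how the query string could be built. The function name `build_signin_filter` is hypothetical, and the ISO 8601 UTC timestamp format is an assumption; the add-on's actual code may format things differently.

```python
from datetime import datetime, timedelta, timezone


def build_signin_filter(checkpoint, now, delay_minutes=5):
    """Build a filter with a lower bound (checkpoint) and a delayed upper bound.

    The timestamp format below is an assumption, not taken from the add-on.
    """
    fmt = "%Y-%m-%dT%H:%M:%SZ"
    upper = now - timedelta(minutes=delay_minutes)
    return (
        "&$filter=signinDateTime+gt+" + checkpoint.strftime(fmt)
        + "+and+signinDateTime+le+" + upper.strftime(fmt)
    )


checkpoint = datetime(2018, 1, 1, 13, 0, tzinfo=timezone.utc)
now = datetime(2018, 1, 1, 13, 10, tzinfo=timezone.utc)
query = build_signin_filter(checkpoint, now, delay_minutes=5)
print(query)
# &$filter=signinDateTime+gt+2018-01-01T13:00:00Z+and+signinDateTime+le+2018-01-01T13:05:00Z
```

Making `delay_minutes` a parameter is what lets the user trade freshness against completeness.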

This Microsoft article https://docs.microsoft.com/en-us/azure/active-directory/reports-monitoring/reference-reports-latenci... talks about the latency for sign-ins and audit logs in Azure. The latency is between 2 and 5 minutes. My understanding is that the logs will be available in the Azure portal (and ready for the API to pull) within 5 minutes of the originating event, so I think setting the polling frequency to >300s should be OK. However, I am concerned that this add-on uses the largest signinDateTime/activityDateTime seen during the query as the checkpoint timestamp. My reasoning is that Azure logs may arrive out of order, so we will miss events that arrive late but whose originating timestamps are earlier than the checkpoint.

I have the following scenario in mind:

- My sign-ins input starts at 1:10pm (with a polling interval of 10 minutes) and the current checkpoint is 1:00pm.

- The 1st input/query runs and pulls logs from 1:00pm to 1:10pm. The add-on sets the largest signinDateTime as the checkpoint. (Let’s say the largest sign-in time seen in the query is 1:07pm, so the checkpoint is now 1:07pm.)

- Suppose a sign-in event originated at 1:06pm but its log is not made available until 1:11pm (5 minutes' delay). My 1st query, which ran at 1:10pm, missed this log, and that’s OK because I expect the next query to pick it up.

- Now at 1:20pm my 2nd input runs. This query, however, only pulls logs from 1:07pm (the current checkpoint) to 1:20pm. At this point, my 1:06pm sign-in event is skipped for good.
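The scenario above can be reproduced with a small simulation. This is only a sketch of the checkpoint behaviour as I described it, not the add-on's real code; the event times and availability times are invented to match the example.

```python
from datetime import datetime


def t(hhmm):
    """Parse 'HH:MM' on an arbitrary fixed day."""
    return datetime.strptime("2018-01-01 " + hhmm, "%Y-%m-%d %H:%M")


# (event time, time the record becomes queryable); illustrative values only.
EVENTS = [
    (t("13:03"), t("13:05")),
    (t("13:06"), t("13:11")),  # delayed 5 minutes
    (t("13:07"), t("13:09")),
    (t("13:15"), t("13:18")),
]


def poll(events, checkpoint, now):
    """Return event times later than the checkpoint that are visible at `now`."""
    return [ev for ev, avail in events if ev > checkpoint and avail <= now]


def run(events, start_checkpoint, poll_times):
    """Poll repeatedly, using the largest event time seen as the new checkpoint."""
    checkpoint = start_checkpoint
    collected = []
    for now in poll_times:
        batch = poll(events, checkpoint, now)
        collected.extend(batch)
        if batch:
            checkpoint = max(batch)
    return collected


collected = run(EVENTS, t("13:00"), [t("13:10"), t("13:20")])
missed = [ev for ev, _ in EVENTS if ev not in collected]
print([m.strftime("%H:%M") for m in missed])  # ['13:06']
```

The 1:06pm event is never collected because the checkpoint has already moved to 1:07pm by the time the record becomes visible.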

As suggested by jconger, the "Azure Monitor Add-on for Splunk" may be the better way to collect logs in near real time from an Event Hub.

FYI... I have been trying to collect Azure AD logs (sign-in, audit), Azure AD risk events, and Office 365 logs into Splunk. I feel that, in general, the latencies in the Microsoft reporting infrastructure cause a lot of confusion about how to schedule data ingestion without ending up with incomplete or duplicate data. That makes it harder to use the data for a near real time monitoring/reporting solution.

Completely agree with your statement:

"the latencies in the Microsoft reporting infrastructure cause a lot of confusion about how to schedule data ingestion without ending up with incomplete or duplicate data"

Version 1.0.3 of the Azure AD Reporting Add-on has some data collection improvements that should address your issue. Also, Azure AD logs can now be sent to Event Hubs, and the Azure Monitor Add-on for Splunk can be used to collect them from an Event Hub.

Thanks for the suggestion, will try this out. Have set up the event hub and can see activity. Will be interesting to do a side-by-side and see if I get a more complete set of events via this route than the API-based reporting add-on.

Ok, the results are in and on this basis I can see that the Azure AD Reporting Add-on is missing events.

I set up an alternate ingest pipeline: AAD --> Event Hub --> Azure function --> Splunk HEC

That reliably produces more events than the reporting add-on.

Ok, so there is some relationship between the frequency of polling and how many events get ingested. The more frequent the polling the fewer events (the more missing events). The less frequent the polling the more events get ingested for any given period.

I changed the polling from 300s to 600s and the number of events per minute went up by a factor of 3.

Have you tried the Microsoft cloud services app? It may do what you’re looking for too.

Thanks, will try that. Initially I did not think it did the sign-ins, but on closer reading it may do.

Some further details:

- Splunk Enterprise 7.0.2

- Set the AAD Reporting add-on to retrieve every 300s

- After 24 hours of running am still only getting a subset of the audit records

- Can discern no pattern to the missing events: no obvious time boundary issue, and no attribute that stands out in the events that are absent from Splunk

Needless to say this is a deal-breaker. If the audit in Splunk is not complete it is all but useless.

Not sure how to progress in diagnosing this.

Changed the polling frequency to 600s to see if that makes a difference.